When the first version of Siri came out, she struggled to understand natural language patterns, expressions, colloquialisms, and different accents, all of which had to be translated into computational algorithms.

Voice technology has improved considerably over the last few years, largely thanks to advances in Artificial Intelligence (AI). AI has made voice technology much more adaptive and efficient.

This article looks at how AI and voice technology affect businesses that offer voice technology services.

AI and Voice Technology

The human brain is complex. Despite this, there are limits to what it can do. For a programmer to think of all the possible eventualities is impractical at best. In traditional software development, developers instruct software on what function to execute and in which circumstances.

It’s a time-consuming and monotonous process. It is not uncommon for developers to make small mistakes that become noticeable bugs once a program is released.

With AI, developers instead give the software a way to learn from data. As the algorithm learns, it finds ways to make the process more efficient. Because AI can process data much faster than we can, it can arrive at new solutions based on the examples it has already seen.

AI-powered voice technology is dramatically changing the way many businesses work. In essence, AI is a smart algorithm; what sets it apart from other algorithms is its ability to learn. We are moving from a model of programming to one of teaching.

Traditionally, programmers write code that tells the algorithm how to behave from start to finish. With machine learning, they can skip much of that tedious work: instead of spelling out every rule, they teach the program the tasks it needs to perform by training it on examples.
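To make the shift from programming to teaching concrete, here is a minimal Python sketch contrasting the two approaches on a toy intent-recognition task. The intent names, the training sentences, and the word-count scoring are illustrative assumptions, not how Siri, Alexa, or any real assistant works; production systems use far larger models and datasets.

```python
from collections import Counter, defaultdict

# Traditional approach: the programmer hand-writes every rule.
def rule_based_intent(utterance: str) -> str:
    text = utterance.lower()
    if "play" in text or "song" in text:
        return "play_music"
    if "book" in text or "flight" in text:
        return "book_travel"
    return "unknown"

# "Teaching" approach: hypothetical labelled examples instead of rules.
EXAMPLES = [
    ("play some jazz", "play_music"),
    ("put on my workout mix", "play_music"),
    ("book a flight to lisbon", "book_travel"),
    ("book a hotel for friday", "book_travel"),
]

def train(examples: list[tuple[str, str]]) -> dict[str, Counter]:
    # Count how often each word appears in the examples for each intent.
    word_counts: dict[str, Counter] = defaultdict(Counter)
    for utterance, intent in examples:
        for word in utterance.lower().split():
            word_counts[intent][word] += 1
    return dict(word_counts)

def predict(model: dict[str, Counter], utterance: str) -> str:
    words = utterance.lower().split()
    # Score each intent by how often its training examples used these words.
    scores = {intent: sum(counts[w] for w in words) for intent, counts in model.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"
```

With these examples, `rule_based_intent("put on some blues")` falls through to `"unknown"` because no rule mentions "put on", while `predict(train(EXAMPLES), "put on some blues")` returns `"play_music"` because those words appeared in a music example. Extending the rule-based version means writing more rules; extending the learned version means adding more examples.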

The Rise of AI and Voice Technology

Voice assistants can now do a lot more than run searches. They can help you book appointments and flights, play music, take notes, and much more. Apple offers Siri, Microsoft has Cortana, Amazon has Alexa, and Google created Google Assistant. With so many choices and uses, is it any wonder that 40% of us use voice technology daily?

They can now also understand not only the question you're asking but also its broader context, which lets them offer better results.

Before voice technology, people communicated with computers by typing or through graphical interfaces. Now, sites and applications can use smart voice technologies to enhance their services in ways previously unimagined, which is why voice-compatible products are on the rise.

Search engines have had to keep up as well. Search optimization used to target text-based queries only, but that is starting to change as voice assistant technology advances. By early 2019, Amazon had sold over 100 million devices with Alexa built in, including Echo and third-party gadgets.

According to Google, 20% of all searches are voice searches, and by 2020 that number could rise to 50%. For businesses looking to grow, that makes voice technology a major area to consider, especially since global voice commerce is expected to be worth $40 billion by 2022.

How Voice Technology Works

Voice technology requires two different interfaces. The first is between the end-user and the endpoint device in use. The second is between the endpoint device and the server.

The server is what contains the “personality” of your voice assistant. Whether it runs on a bare-metal server or in the cloud, voice technology is powered by server-side computational resources. That is where all of the AI's background processes run, even though it feels as if the voice assistant “lives” on your device.

That impression makes sense, given how fast your assistant answers your questions. In reality, your phone alone doesn't have the processing power or storage to run the full AI program, which is why your assistant becomes largely inaccessible when the internet is down.
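The two interfaces described above can be sketched as follows. This is a deliberately simplified Python model built on hypothetical `EndpointDevice` and `AssistantServer` classes; it passes text where a real device would stream audio, and it ignores the on-device processing some modern assistants perform. It only illustrates why the device depends on reaching the server.

```python
from dataclasses import dataclass

class AssistantServer:
    """Hypothetical server side: where the assistant's models and 'personality' live."""

    def handle(self, transcript: str) -> str:
        # Stand-in for speech understanding and answer generation.
        if "weather" in transcript.lower():
            return "Here is today's forecast."
        return "Sorry, I didn't catch that."

@dataclass
class EndpointDevice:
    """Hypothetical endpoint device: a thin client in front of the server."""
    server: AssistantServer
    online: bool = True

    def ask(self, spoken_request: str) -> str:
        # Interface 1: user -> device (text stands in for captured audio here).
        # Interface 2: device -> server. With no connection, there is nothing
        # to forward the request to, so the device cannot answer on its own.
        if not self.online:
            return "I can't reach the server right now."
        return self.server.handle(spoken_request)
```

Calling `EndpointDevice(AssistantServer()).ask("What's the weather?")` returns the server's answer; setting `online = False` on the device makes the same request fail, mirroring what happens when the internet is down.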

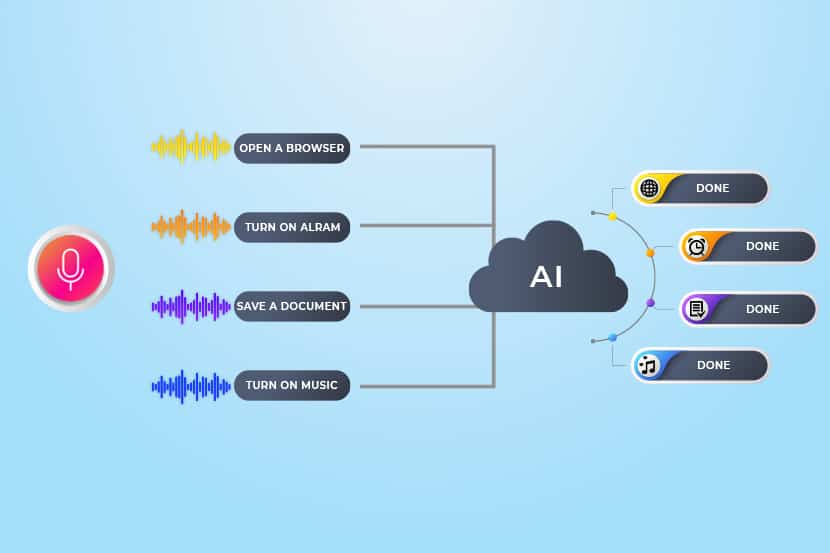

How Does AI in Voice Technology Work?

Say, for example, that you want more information about a particular country. You simply speak your request, and it is relayed to the server. That's when AI takes over: it uses machine learning algorithms to search across millions of sites for the precise information you need.

To find the best possible information, the AI must also analyze each site very quickly. This rapid analysis lets it determine whether the website is relevant to the search query and how credible its information is.

If the site is deemed worthy, it shows up in search results. Otherwise, the AI discards it.
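The filtering step can be illustrated with a toy scoring function. In this Python sketch, relevance is the share of query words found on a page, and credibility is a number assumed to come from some upstream signal; both, along with the `0.5` threshold, are hypothetical stand-ins for the far richer signals a real search engine uses. Pages scoring below the threshold are discarded, and the rest are ranked.

```python
from dataclasses import dataclass

@dataclass
class Page:
    url: str
    text: str
    credibility: float  # assumed 0.0-1.0 score from some upstream signal

def relevance(query: str, page: Page) -> float:
    """Fraction of the query's words that appear in the page text."""
    query_words = set(query.lower().split())
    page_words = set(page.text.lower().split())
    if not query_words:
        return 0.0
    return len(query_words & page_words) / len(query_words)

def rank(query: str, pages: list[Page], threshold: float = 0.5) -> list[str]:
    """Keep pages whose relevance * credibility meets the threshold, best first."""
    kept = []
    for page in pages:
        score = relevance(query, page) * page.credibility
        if score >= threshold:
            kept.append((score, page.url))  # deemed worthy: shown in results
        # pages below the threshold are simply discarded
    kept.sort(reverse=True)
    return [url for _, url in kept]
```

Multiplying the two scores means a page must be both on-topic and trustworthy to survive; a highly credible page about something else still scores zero.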

The AI goes one step further and watches how you react. Did you navigate away from the site straight away? If so, it takes that as a sign that the site didn't match the search term. When someone else uses similar search terms in the future, the AI remembers this and refines its results.
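A minimal Python sketch of that feedback loop, assuming a hypothetical 10-second cutoff for a "quick bounce" and a fixed adjustment step. Queries are normalized by lowercasing and sorting their words, a crude stand-in for recognizing "similar search terms". Real systems learn from many signals at once; this only shows how one user's behaviour can nudge the rankings another user later sees.

```python
QUICK_BOUNCE_SECONDS = 10.0  # hypothetical cutoff for "navigated away straight away"
STEP = 0.1                   # hypothetical size of each adjustment

class FeedbackModel:
    """Learns per (query, site) score adjustments from how users react."""

    def __init__(self) -> None:
        self.adjustments: dict[tuple[str, str], float] = {}

    @staticmethod
    def _key(query: str, url: str) -> tuple[str, str]:
        # Crude normalization so "Portugal capital" and "capital portugal" match.
        return (" ".join(sorted(query.lower().split())), url)

    def record_visit(self, query: str, url: str, seconds_on_page: float) -> None:
        key = self._key(query, url)
        # A quick exit counts against the site for this query; a long stay counts for it.
        delta = -STEP if seconds_on_page < QUICK_BOUNCE_SECONDS else STEP
        self.adjustments[key] = self.adjustments.get(key, 0.0) + delta

    def adjusted_score(self, query: str, url: str, base_score: float) -> float:
        # Applies to any later user who searches with similar terms.
        return base_score + self.adjustments.get(self._key(query, url), 0.0)
```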

Over time, as the AI learns more, it becomes more capable of producing accurate results. At the same time, it learns about your preferences. Unless you say otherwise, it focuses on search results in the area or country where you live. It learns what music you like and what settings you prefer, and makes recommendations accordingly. This is what allows a simple voice assistant to improve its performance every time you use it.
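Personalization of this kind can be sketched with a simple user profile. The `UserProfile` class, its fields, and the "most-played genre" rule are illustrative assumptions, not any vendor's actual model. They show two behaviours from the paragraph above: defaulting to the user's home country unless told otherwise, and recommending based on what the user has played most.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class UserProfile:
    """Hypothetical per-user profile built up from past interactions."""
    home_country: str
    genre_plays: Counter = field(default_factory=Counter)

    def search_region(self, requested_country: Optional[str] = None) -> str:
        # "Unless you say otherwise": an explicitly requested country wins,
        # otherwise results focus on where the user lives.
        return requested_country or self.home_country

    def record_play(self, genre: str) -> None:
        # Every listen is a small piece of evidence about the user's taste.
        self.genre_plays[genre] += 1

    def recommend_genre(self) -> Optional[str]:
        # Recommend the most-played genre, if there is any history yet.
        if not self.genre_plays:
            return None
        return self.genre_plays.most_common(1)[0][0]
```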

Servers Power Artificial Intelligence

Voice technology providers have to deal with connectivity issues, the program's speed, and the ability of their servers to manage all this information.

Companies that offer these services need to run enterprise-level servers capable of storing large amounts of data and processing it at high speed. The alternative is cloud computing hosted off-premises by third-party providers, which reduces overhead costs and makes it easier to scale your services and applications.

Alexa and Siri are complex programs, but why would they need so much server space? After all, each one is a single program. That's where it gets tricky.

According to Statista, there were an estimated 3.25 billion digital voice assistants in use worldwide in 2019, and forecasts put that number at 8 billion by the end of 2023.

The assistant adapts to the needs of each user, which means it has to adjust to as many as 3.25 billion permutations of the underlying system. Because the algorithm learns as it goes, all that information must pass through the servers.

Each user also expects their personal settings to be saved, so the servers must accommodate new information while keeping the old information too.
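The storage pressure described here can be sketched with a settings store that keeps every saved version instead of overwriting it, plus a back-of-envelope estimate. The `SettingsStore` class and the per-user numbers (10 retained versions at roughly 2 KB each) are hypothetical values chosen only for illustration; applied to the article's 3.25 billion figure, they work out to about 65 TB for settings alone, before any learned model data.

```python
from dataclasses import dataclass, field
from typing import Any

@dataclass
class SettingsStore:
    """Keeps every saved version of each user's settings instead of overwriting."""
    history: dict[str, list[dict[str, Any]]] = field(default_factory=dict)

    def save(self, user_id: str, settings: dict[str, Any]) -> int:
        versions = self.history.setdefault(user_id, [])
        versions.append(dict(settings))  # copy so later changes don't alter history
        return len(versions)             # version number, starting at 1

    def current(self, user_id: str) -> dict[str, Any]:
        return self.history[user_id][-1]

    def total_records(self) -> int:
        # Grows with every save, because old versions are never deleted.
        return sum(len(v) for v in self.history.values())

def estimated_storage_bytes(users: int, versions_per_user: int, bytes_per_version: int) -> int:
    # Back-of-envelope: users x retained versions x size of each version.
    return users * versions_per_user * bytes_per_version

# Hypothetical inputs: 3.25 billion users, 10 versions each, ~2 KB per version.
print(estimated_storage_bytes(3_250_000_000, 10, 2_000))  # 65000000000000 bytes, about 65 TB
```

Because nothing is ever overwritten, storage grows with every interaction rather than with the number of users alone, which is the pressure that pushes providers toward large server farms.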

This ever-growing demand for capacity is why popular providers run large server farms, and it is where the on-premises versus cloud computing debate takes on greater meaning.

Takeaway

Without the computational advances made in AI, voice technology as we know it today would not be possible. The permutations in the data alone would be too much for humans to handle.

Artificial intelligence is redefining voice-enabled apps across a variety of businesses. Voice technology and AI are a natural fit, and voice technology will keep improving as machine learning advances.

Running AI-powered voice technology in the cloud can provide fast processing and dramatically improve how businesses operate. For example, businesses can deploy voice assistants that handle customer care while learning from those interactions, teaching themselves how to serve your clients better.