What is Quantum Computing & How Does it Work?

Technology giants like Google, IBM, Amazon, and Microsoft are pouring resources into quantum computing. The goal of quantum computing is to create the next generation of computers and overcome the limits of classical computing.

Despite the progress, there are still unknown areas in this emerging field.

This article is an introduction to the basic concepts of quantum computing. You will learn what quantum computing is and how it works, as well as what sets a quantum device apart from a standard machine.

What is Quantum Computing? Defined

Quantum computing is an approach to computing based on quantum mechanics, the branch of physics that studies atomic and subatomic particles. Quantum computers are designed to perform certain computations at speeds and scales that ordinary computers cannot handle.

These are the main differences between a quantum device and a regular desktop:

- Different architecture: Quantum computers have a different architecture than conventional devices. For example, instead of traditional silicon-based memories or processors, they use different technology platforms, such as superconducting circuits and trapped atomic ions.

- Computationally intensive use cases: A casual user might not have much use for a quantum computer. The computation-heavy focus and complexity of these machines make them suitable mainly for corporate and scientific settings in the foreseeable future.

Unlike a standard computer, a quantum computer can hold a superposition of many states at once and act on all of them with a single operation. Quantum algorithms exploit this to solve certain problems in fewer steps than the best known classical algorithms.

This processing power makes quantum computers promising for complex tasks such as searching through unsorted data.

What is Quantum Computing Used for? Industry Use Cases

The adoption of more powerful computers benefits every industry. However, some areas already stand out as excellent opportunities for quantum computers to make a mark:

- Healthcare: Quantum computers help develop new drugs at a faster pace. DNA research also benefits greatly from using quantum computing.

- Cybersecurity: Quantum technology can advance data encryption. Quantum Key Distribution (QKD), for example, uses light signals to exchange encryption keys in a way that exposes eavesdroppers and other network intruders.

- Finance: Companies can optimize their investment portfolios with quantum computers. Improvements in fraud detection and simulation systems are also likely.

- Transport: Quantum computers can lead to progress in traffic planning systems and route optimization.

What are Qubits?

The key behind a quantum computer’s power is its ability to create and manipulate quantum bits, or qubits.

Like the binary bit of 0 and 1 in classical computing, a qubit is the basic building block of quantum computing. Whereas a regular bit can only be in the state 0 or 1, a qubit can also be in a superposition of both 0 and 1.

Here is the state of a qubit q0:

$$q_0 = a\,|0\rangle + b\,|1\rangle, \quad \text{where } |a|^2 + |b|^2 = 1$$

The probability of measuring q0 as 0 is $|a|^2$, and the probability of measuring it as 1 is $|b|^2$. Until it is measured, the qubit is described by both amplitudes at once, which is why a qubit is often said to be both 0 and 1 at the same time.

For a qubit q0 where a = 1 and b = 0, q0 is equivalent to a classical bit of 0: there is a 100% chance of measuring 0. If a = 0 and b = 1, then q0 is equivalent to a classical bit of 1. Thus, the classical binary bits 0 and 1 are a subset of qubit states.
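To make the link between amplitudes and probabilities concrete, here is a minimal Python sketch. It is not part of any quantum SDK, and the function name `measurement_probabilities` is hypothetical: it squares the magnitudes of a and b, as in the formula above, and rejects states that are not normalized.

```python
import math
from typing import Tuple

def measurement_probabilities(a: complex, b: complex) -> Tuple[float, float]:
    """Return (P(0), P(1)) for the qubit state a|0> + b|1>."""
    p0 = float(abs(a)) ** 2  # probability of measuring 0
    p1 = float(abs(b)) ** 2  # probability of measuring 1
    # A valid qubit state is normalized: |a|^2 + |b|^2 = 1
    if not math.isclose(p0 + p1, 1.0, rel_tol=1e-9):
        raise ValueError("amplitudes are not normalized")
    return p0, p1

print(measurement_probabilities(1, 0))  # classical bit 0 -> (1.0, 0.0)
print(measurement_probabilities(0, 1))  # classical bit 1 -> (0.0, 1.0)
```

Calling it with a = b = 1/√2 returns probabilities of about 0.5 each, the superposition case discussed next.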

Now, let’s look at an empty circuit in the IBM Circuit Composer with a single qubit q0 (Figure 1). The “Measurement probabilities” graph shows that q0 has a 100% probability of being measured as 0. The “Statevector” graph shows the values of a and b, which correspond to the 0 and 1 “computational basis states” columns, respectively.

In the case of Figure 1, a is equal to 1 and b to 0, so q0 has a probability of $1^2 = 1$ of being measured as 0.

A connected group of qubits provides more processing power than the same number of binary bits. The difference in processing is due to two quantum properties: superposition and entanglement.

Superposition in Quantum Computing

When both a and b are nonzero (that is, $0 < |a|^2 < 1$ and $0 < |b|^2 < 1$), the qubit is in a so-called superposition state. In this state, a measurement can return either 0 or 1, with probabilities $|a|^2$ and $|b|^2$, respectively.

The Hadamard gate is one of the most basic gates in quantum computing. It moves a qubit from a non-superposition state of 0 or 1 into an equal superposition state. In that state, there is a 0.5 probability of the qubit being measured as 0 and a 0.5 probability of it being measured as 1.
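The Hadamard gate is usually written as the 2x2 matrix (1/√2)·[[1, 1], [1, -1]], which multiplies the state vector [a, b]. The plain-Python sketch below applies it to q0 = |0>; the helper `apply_gate` is a hypothetical name for illustration, not an IBM Circuit Composer or Qiskit function.

```python
import math

# Hadamard gate: H = (1/sqrt(2)) * [[1, 1], [1, -1]]
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]

def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a single-qubit state vector [a, b]."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

ket0 = [1, 0]                          # q0 starts in |0>: a = 1, b = 0
a, b = apply_gate(H, ket0)             # both amplitudes become 1/sqrt(2)
print(round(a, 3), round(b, 3))        # 0.707 0.707
print(round(a**2, 3), round(b**2, 3))  # 0.5 0.5
```

Applying `H` to |1> instead gives amplitudes of about 0.707 and -0.707, which yield the same 50/50 measurement probabilities.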

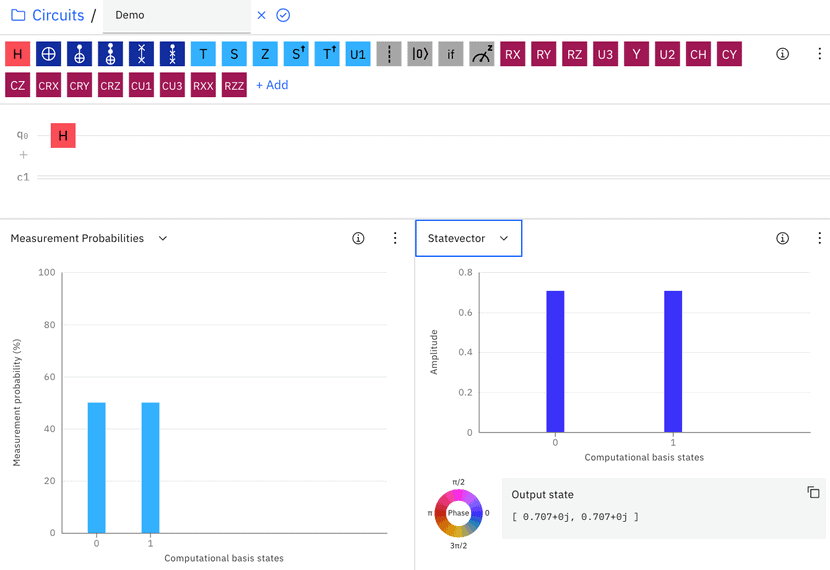

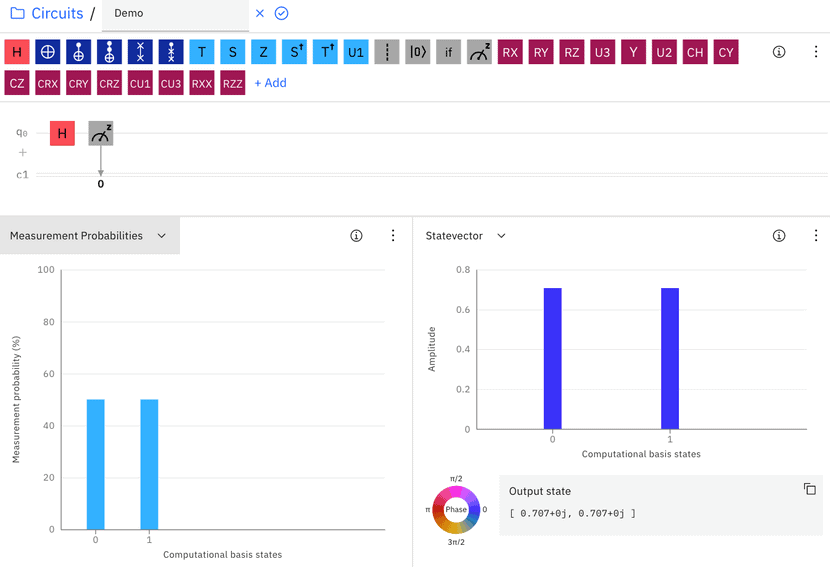

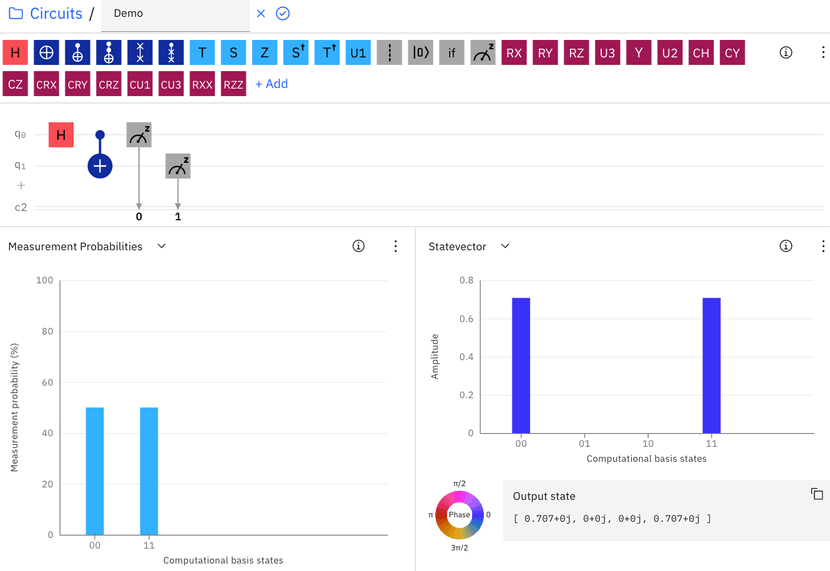

Let’s look at the effect of adding the Hadamard gate (shown as a red H) on q0, which is currently in a non-superposition state of 0 (Figure 2). After the Hadamard gate, the “Measurement Probabilities” graph shows that there is a 50% chance of getting a 0 or 1 when q0 is measured.

The “Statevector” graph shows the values of a and b, which are both $\sqrt{0.5} \approx 0.707$. The probability of the qubit being measured as 0 or as 1 is $0.707^2 \approx 0.5$, so q0 is now in a superposition state.

What Are Measurements?

When we measure a qubit in a superposition state, the qubit jumps to a non-superposition state. A measurement changes the qubit and forces it out of superposition to the state of either 0 or 1.

If a qubit is in a non-superposition state of 0 or 1, measuring it will not change anything. In that case, the qubit is already in a state of 100% being 0 or 1 when measured.
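Measurement collapse can be illustrated with a toy model. The `Qubit` class below is a hypothetical, simplified sketch that assumes real amplitudes: measuring returns 0 with probability $a^2$, then overwrites the state with the measured result, so every later measurement repeats the first one.

```python
import random

class Qubit:
    """Toy model of one qubit a|0> + b|1> with real amplitudes (hypothetical, illustration only)."""

    def __init__(self, a: float, b: float):
        self.a, self.b = a, b

    def measure(self, rng: random.Random) -> int:
        # Outcome 0 occurs with probability a^2, outcome 1 with probability b^2
        outcome = 0 if rng.random() < self.a ** 2 else 1
        # Collapse: the qubit is left in the non-superposition state it was measured in
        self.a, self.b = (1.0, 0.0) if outcome == 0 else (0.0, 1.0)
        return outcome

rng = random.Random(7)
q0 = Qubit(2 ** -0.5, 2 ** -0.5)              # equal superposition, as after a Hadamard gate
first = q0.measure(rng)                        # random: 0 or 1
repeats = [q0.measure(rng) for _ in range(5)]  # all equal to `first`
print(first, repeats)
```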

Let us add a measurement operation into the circuit (Figure 3). We measure q0 after the Hadamard gate and output the value of the measurement to bit 0 (a classical bit) in c1:

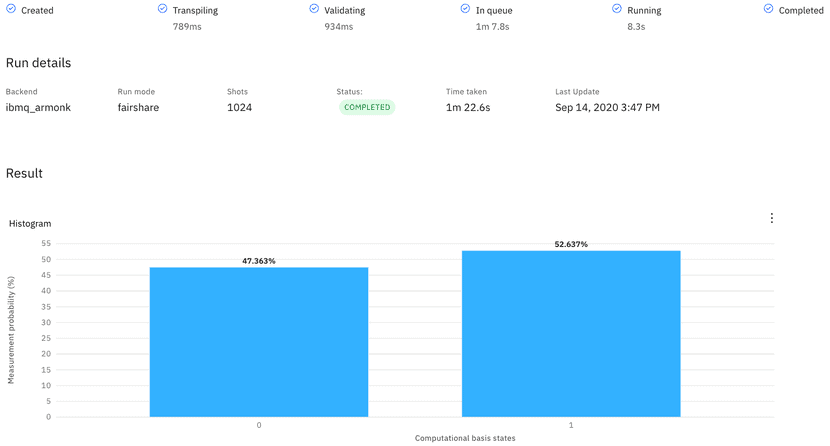

To see the results of measuring q0 after the Hadamard gate, we send the circuit to run on an actual quantum computer called “ibmq_armonk.” By default, the quantum circuit runs 1024 times (shots). The result (Figure 4) shows that q0 is measured as 0 about 47.4% of the time and as 1 the other 52.6% of the time:

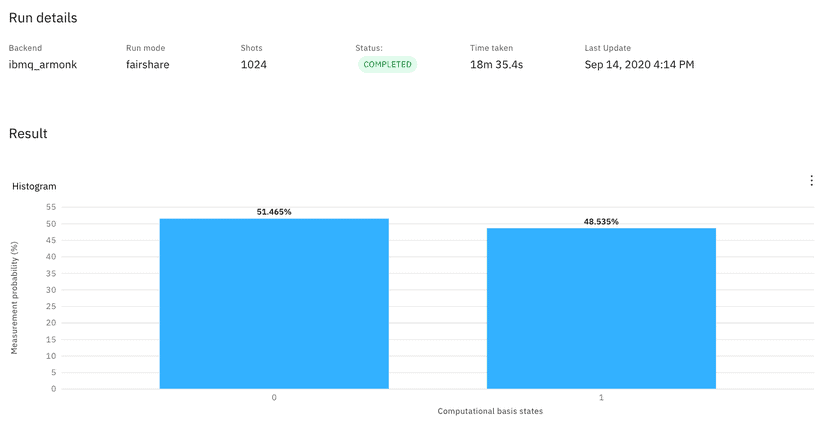

The second run (Figure 5) yields a different distribution of 0 and 1, but one that is still close to the expected 50/50 split.
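Some run-to-run variation like that in Figures 4 and 5 is expected from sampling alone, even on an ideal device. The sketch below simulates 1024 shots of a noise-free qubit in plain Python; it is a simplified stand-in for real hardware, not a call to IBM's service, and the function name `run_shots` is hypothetical.

```python
import random
from collections import Counter
from typing import Optional

def run_shots(p0: float, shots: int = 1024, seed: Optional[int] = None) -> Counter:
    """Simulate `shots` independent measurements of a freshly prepared qubit.

    Each shot returns "0" with probability p0 and "1" otherwise. This is an
    ideal, noise-free model, not real quantum hardware.
    """
    rng = random.Random(seed)
    return Counter("0" if rng.random() < p0 else "1" for _ in range(shots))

counts = run_shots(0.5, seed=42)  # q0 after a Hadamard gate: p0 = 0.5
for outcome in ("0", "1"):
    print(outcome, f"{counts[outcome] / 1024:.1%}")
```

Changing the seed gives a slightly different split around 50/50 each time, much like running the circuit twice.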

Entanglement in Quantum Computing

If two qubits are in an entangled state, measuring one qubit instantly “collapses” the value of the other. The same effect happens even if the two entangled qubits are far apart.

If we measure one qubit of an entangled pair (getting either 0 or 1), we also learn the value of the other qubit without measuring it. If we then measure the second qubit, it gives the expected result with probability 1.

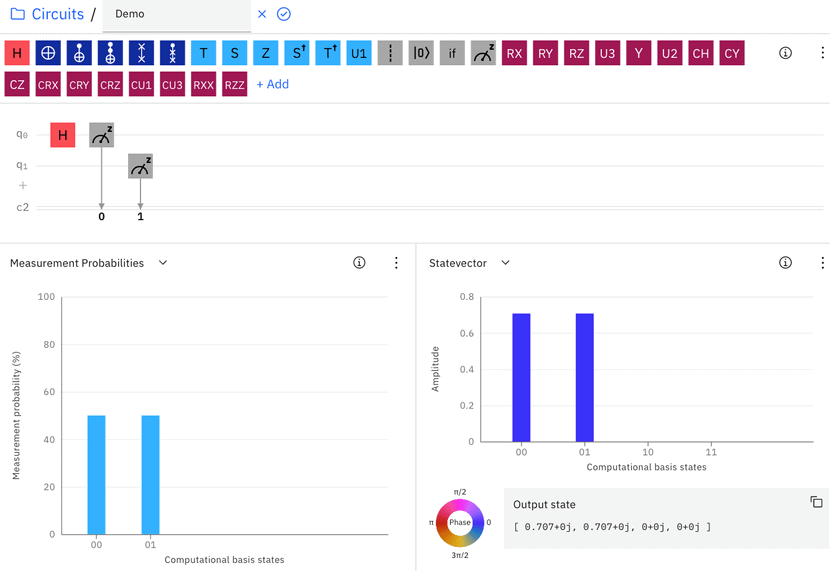

Let us look at an example. One quantum operation that can put two unentangled qubits into an entangled state is the CNOT gate. To demonstrate this, we first add another qubit, q1, which is initialized to 0 by default. Before the CNOT gate, the two qubits are unentangled: q0 has a 0.5 chance of being 0 or 1 due to the Hadamard gate, while q1 is always 0. The “Measurement Probabilities” graph (Figure 6) shows that (q1, q0) is (0, 0) or (0, 1), each with 50% probability:

Then we add the CNOT gate (shown as a blue dot and a plus sign), with q0 (the output of the Hadamard gate) as the control and q1 as the target. The “Measurement Probabilities” graph now shows a 50% chance of (q1, q0) being (0, 0) and a 50% chance of it being (1, 1) when measured (Figure 7):

There is zero chance of getting (0, 1) or (1, 0). Once we determine the value of one qubit, we know the other’s value because the two must be equal. In such a state, q0 and q1 are entangled.
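The same Bell-circuit result can be reproduced with a small state-vector simulation. This sketch assumes the (q1, q0) ordering used in the figures, stores the four amplitudes in a list indexed by 2·q1 + q0, and uses hypothetical helper names (`apply_h_q0`, `apply_cnot`) rather than any SDK's API.

```python
import math

def apply_h_q0(state):
    """Apply a Hadamard gate to q0. Basis index = 2*q1 + q0."""
    s = 1 / math.sqrt(2)
    new = [0.0] * 4
    for q1 in (0, 1):
        amp0, amp1 = state[2 * q1], state[2 * q1 + 1]
        new[2 * q1] = s * (amp0 + amp1)
        new[2 * q1 + 1] = s * (amp0 - amp1)
    return new

def apply_cnot(state, control=0, target=1):
    """Flip the target qubit in every basis state where the control qubit is 1."""
    new = list(state)
    for i in range(4):
        if (i >> control) & 1:
            new[i ^ (1 << target)] = state[i]  # swap amplitudes of the paired states
    return new

state = [1.0, 0.0, 0.0, 0.0]  # (q1, q0) = (0, 0)
state = apply_h_q0(state)     # q0 in superposition, q1 still 0
state = apply_cnot(state)     # q0 controls q1: the qubits are now entangled
for index, amp in enumerate(state):
    print(f"{index >> 1}{index & 1}", round(amp ** 2, 3))
# 00 0.5
# 01 0.0
# 10 0.0
# 11 0.5
```

Printing the state after `apply_h_q0` alone shows the pre-CNOT case instead: (0, 0) and (0, 1) at 0.5 each.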

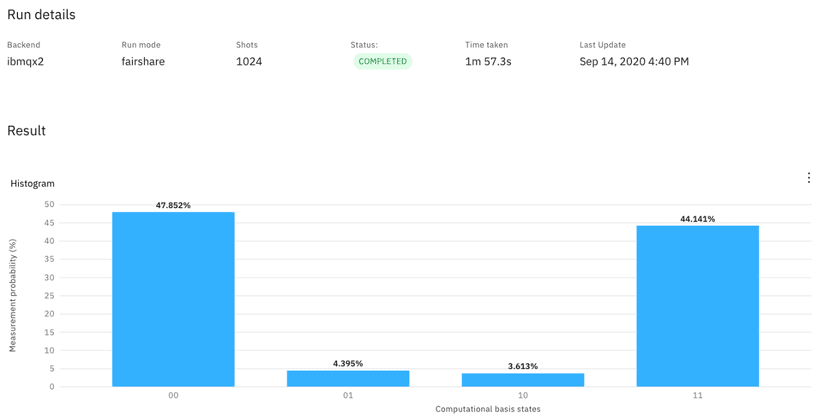

Let us run this on an actual quantum computer and see what happens (Figure 8):

We are close to a 50/50 distribution between the ‘00’ and ‘11’ states. We also see unexpected occurrences of ‘01’ and ‘10’, caused by the quantum computer’s high error rates. While error rates for classical computers are almost non-existent, high error rates are one of the main challenges of quantum computing.
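One way to see how errors produce ‘01’ and ‘10’ is to add readout noise to an ideal Bell-state sampler. The sketch below assumes a deliberately simplified, hypothetical noise model in which each qubit's result flips independently with probability `flip_prob`. Real devices also suffer gate errors and decoherence, and the 5% rate used here is illustrative, not a measured value for any IBM machine.

```python
import random
from collections import Counter
from typing import Optional

def sample_bell(shots: int = 1024, flip_prob: float = 0.0,
                seed: Optional[int] = None) -> Counter:
    """Sample the ideal Bell state, then flip each qubit's readout with probability flip_prob."""
    rng = random.Random(seed)
    counts = Counter()
    for _ in range(shots):
        bit = rng.randint(0, 1)                   # ideal result: '00' or '11' with equal odds
        q0 = bit ^ (rng.random() < flip_prob)     # independent readout error on q0
        q1 = bit ^ (rng.random() < flip_prob)     # independent readout error on q1
        counts[f"{q1}{q0}"] += 1                  # label written as (q1, q0)
    return counts

print(sample_bell(seed=1))                  # ideal: only '00' and '11'
print(sample_bell(flip_prob=0.05, seed=1))  # noisy: typically some '01' and '10' as well
```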

The Bell Circuit is Only a Starting Point

The circuit shown in the ‘Entanglement’ section is called the Bell Circuit. Even though it is basic, that circuit shows a few fundamental concepts and properties of quantum computing, namely qubits, superposition, entanglement, and measurements. The Bell Circuit is often cited as the Hello World program for quantum computing.

By now, you probably have many questions, such as:

- How do we physically represent the superposition state of a qubit?

- How do we physically measure a qubit, and why would that force a qubit into 0 or 1?

- What exactly are $|0\rangle$ and $|1\rangle$ in the formulation of a qubit?

- Why do $|a|^2$ and $|b|^2$ correspond to the chance of a qubit being measured as 0 and 1?

- What are the mathematical representations of the Hadamard and CNOT gates? Why do these gates put qubits into superposition and entangled states?

- Can we explain the phenomenon of entanglement?

There are no shortcuts to learning quantum computing. The field touches on complex topics spanning physics, mathematics, and computer science.

There is an abundance of good books and video tutorials that introduce the technology. These resources typically cover prerequisite concepts like linear algebra, quantum mechanics, and binary computing.

In addition to books and tutorials, you can also learn a lot from code examples. Solutions to financial portfolio optimization and vehicle routing, for example, are great starting points for learning about quantum computing.

The Next Step in Computer Evolution

Quantum computers have the potential to exceed even the most advanced supercomputers. Quantum computing can lead to breakthroughs in science, medicine, machine learning, construction, transport, finance, and emergency services.

The promise is apparent, but the technology is still far from being applicable to real-life scenarios. New advances keep emerging, though, so quantum computing may well cause significant disruption in the years to come.


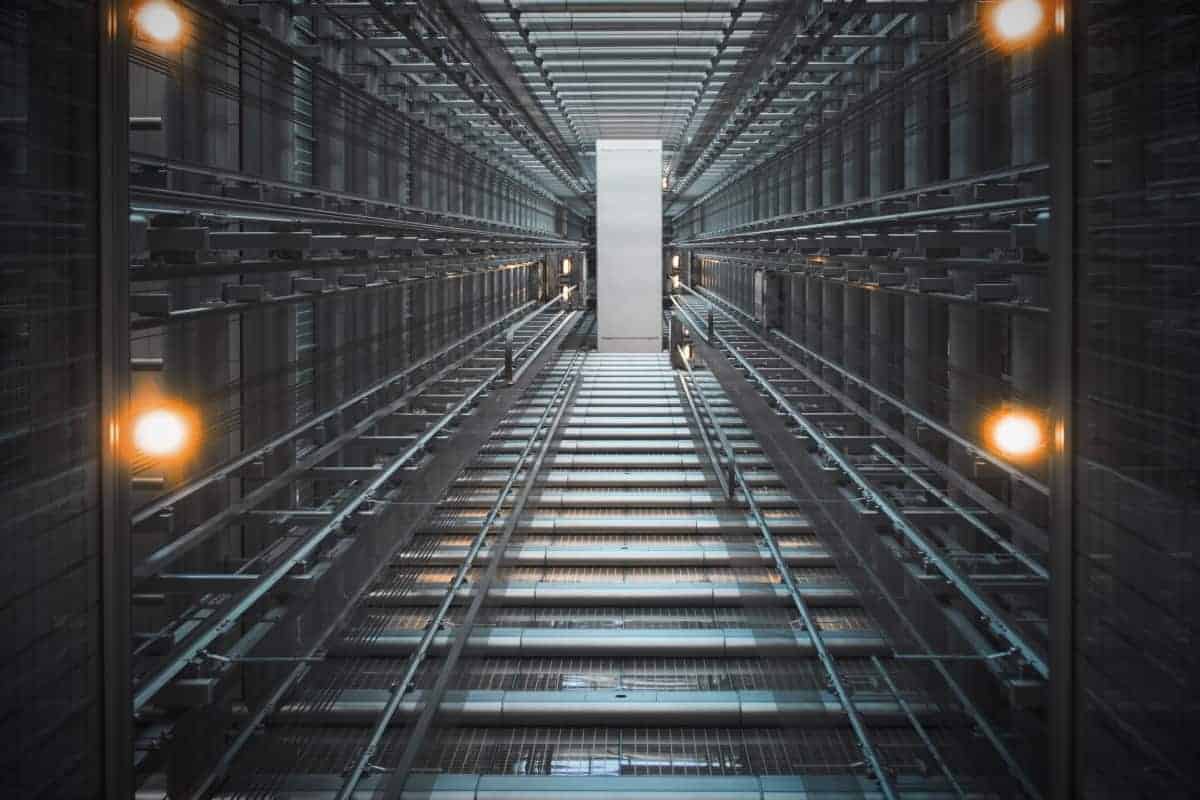

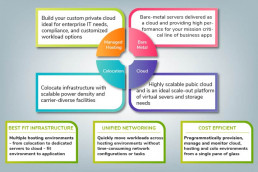

Dedicated Server Benefits: 5 Advantages for Your Business

You understand the value of your company’s online presence.

You have your website, but how is it performing? Many business owners do not realize that they share servers with hundreds or even thousands of other websites.

Is it time to take your business to the next level and examine the benefits of using dedicated servers?

You may be looking to expand. Your backend database may be straining under the pressure of all those visitors. To stay ahead of competitors, every effort counts.

Shared server hosting has limits that may not keep up with your growing business needs. In short, you need a dedicated hosting provider. Whether it’s with shared or dedicated hosting, you get what you pay for.

Let’s address the question that is top of your (or your CFO’s) mind.

How much will a dedicated server cost the business?

The answer to that question depends on the following:

- the computing power you need

- the amount of bandwidth you will require

- the quantity of secure data storage and backup

A dedicated server is more expensive than a shared web hosting arrangement, but the benefits are worthy of the increased costs.

Why? A dedicated server supplies more of what you need. It is in a completely different league. Its power helps you level the playing field. You can be more competitive in the growing world of eCommerce.

Five Dedicated Server Benefits

A dedicated server gives you power and scalability. With a dedicated server, your business realizes a compound return on its monthly investment in the following ways:

1. Exclusive use of dedicated resources

When you have your own dedicated server, you get the entire web server for your exclusive use. This is a significant advantage when comparing shared hosting vs. dedicated hosting.

The server’s disk space, RAM, bandwidth, etc., now belong to you.

- You have exclusive use of all the CPU, RAM, and bandwidth. At peak business times, you continue to get peak performance.

- You have root access to the server. You can add your own software, configure settings, and access the server logs. Root access is the key advantage of dedicated servers. Again, it goes back to exclusivity.

So, within the limits of propriety, the way you decide to use your dedicated hosting plan is your business.

You can run your own applications or implement special server security measures. You can even use a different operating system from the one your provider uses. In short, you can drive your website the way you drive your business: in a flexible, scalable, and responsive way.

2. Flexibility in managing your growing business

A dedicated server can accommodate your growing business needs. With a dedicated server, you can decide on your own server configuration. As your business grows, you can add new services and applications or modify existing ones. You remain more flexible when new opportunities arise or unexpected markets materialize.

This scalability lets you customize the server to your needs. If you need more processing, storage, or backup, the dedicated server is your platform.

Also, today’s consumers have higher expectations. They want the convenience of quick access to your products. A dedicated server serves your customers with fast page loading and a better user experience. If you serve them well, they will return.

3. Improved reliability and performance

Reliability is one of the benefits of exclusivity. A dedicated server provides peak performance and reliability.

That reliability also means that server crashes are far less likely. Your website has extra resources during periods of high-volume traffic. If your front end includes videos and image displays, you have the bandwidth you need. Speed and performance are second only to good website design, and the power of a dedicated server contributes to the optimum customer experience.

Managed dedicated web hosting is a powerful solution for businesses. It comes with a higher cost than shared hosting. But you get high power, storage, and bandwidth to host your business.

A dedicated server provides a fast foothold on the web without upfront capital expenses. You have exclusive use of the server, and it is yours alone. Don’t overlook the advantages of technical assistance, either. You or your IT team oversee your website.

Despite that vigilance, sometimes you need outside help. Many dedicated hosting solutions come with equally watchful technicians. With managed hosting, someone at the server end is available for troubleshooting around the clock.

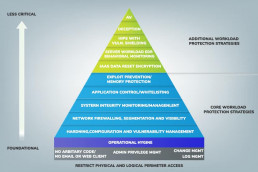

4. Security through data separation

Dedicated servers permit access only to your company.

The server infrastructure includes firewalls and security monitoring.

This means greater security against:

- Malware and hacks: The host’s network monitoring, secure firewalls, and strict access control free you to concentrate on your core business.

- Denial-of-service attacks: Data separation isolates your dedicated server from the hosting company’s services and from data belonging to other customers. That separation helps ensure quick recovery from backend exploits.

You can also implement your own higher levels of security. You can install your applications to run on the server. Those applications can include new layers of security and access control.

This adds a level of protection to your customer and proprietary business data, again through separation.

5. No capital or upfront expense

Upfront capital expense outlays are no longer the best way to finance technology. Technology advances outpace their supporting platforms in a game of expensive leapfrog. Growing businesses need to reserve capital for other areas.

Hosting providers charge reasonable fees while providing top-of-the-line equipment.

A dedicated hosting provider can serve many clients, so the cost of that service is a fraction of what you would pay to do it in-house. Plus, you get the bonuses of physical security and technical support.

What to Consider When Evaluating Dedicated Server Providers

Overall value

Everyone has a budget, and it’s essential to choose a provider that fits within it. However, price should not be the first or only consideration. Simply choosing the lowest-priced option can often end up costing you more in the long run. Take a close look at what the provider offers in the other six categories we’ll cover in this guide, and then ask yourself if the overall value aligns with the price you’ll pay to run the reliable business you strive for.

Reputation

What are other people and businesses saying about a provider? Is it good, bad, or is there nothing at all? A great way to know if a provider is reliable and worthy of your business is to learn from others’ experiences. Websites like Webhostingtalk.com provide a community where people discuss hosting and hosting-related topics. One way to help decide on a provider is to ask the community for feedback. You can also do some due diligence ahead of time by searching for the provider’s name with the site’s search function.

Reliability

Businesses today demand to be online and available to their customers 24 hours a day, seven days a week, 365 days a year. Anything less means you’re probably losing money. Sure, choosing the lowest-priced hosting probably seems like a good idea at first, but you have to ask yourself whether the few dollars you save per month are worth the headache and lost revenue if your website goes offline. The answer is generally no.

Support

You may not need support often, but when you do, you need it right away. Even if you’re administering your own server, having reliable 24×7 support available is critical. The last thing you want is to have a hard time reaching someone when you need them most. Look for service providers that offer multiple support channels such as live chat, email, and phone.

Service level agreements

SLAs are the promises your provider makes to you in exchange for your payment(s). Given how competitive the hosting market is today, you shouldn’t choose a provider that doesn’t offer important Service Level Agreements covering uptime, support response times, hardware replacement, and deployment times.
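Uptime SLAs are usually stated as a percentage, and it helps to translate that percentage into hours. The short Python sketch below does the arithmetic for a 365-day year; the percentages are examples, not any particular provider's terms, and the function name is hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours (leap years ignored)

def allowed_downtime_hours(uptime_percent: float) -> float:
    """Maximum downtime per year permitted by an uptime percentage."""
    return HOURS_PER_YEAR * (100.0 - uptime_percent) / 100.0

for sla in (99.0, 99.9, 99.99):
    print(f"{sla}% uptime allows about {allowed_downtime_hours(sla):.2f} hours of downtime per year")
```

For example, a 99.9% uptime SLA still allows roughly 8.76 hours of downtime per year, so the number of nines matters when comparing providers.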

Flexibility

Businesses go through several phases of their lifecycle. It’s important to find a provider that can meet each phase’s needs and allow you to quickly scale up or down based on your needs at any given time. Whether you’re getting ready to launch and need to keep your costs low, or are a mature business looking to expand into new areas, a flexible provider that can meet these needs will allow you to focus on your business and not worry about finding someone to solve your infrastructure headaches.

Hardware quality

Business applications demand 24×7 environments and therefore need to run on hardware that can support those demands. It’s important to make sure that the provider you select offers server-grade hardware, not desktop-grade hardware, which is built for only 40-hour work weeks. The last thing you want is for components to start failing and making your services unavailable to your customers.

Advantages of Dedicated Servers, Ready to Make the Move?

Dedicated servers provide flexibility, scalability, and better management of your own and your customers’ growth. They also offer reliability and peak performance, which help ensure the best customer experience.

Include the option of on-call, around-the-clock server maintenance, and you have found the hosting solution your business is looking for.


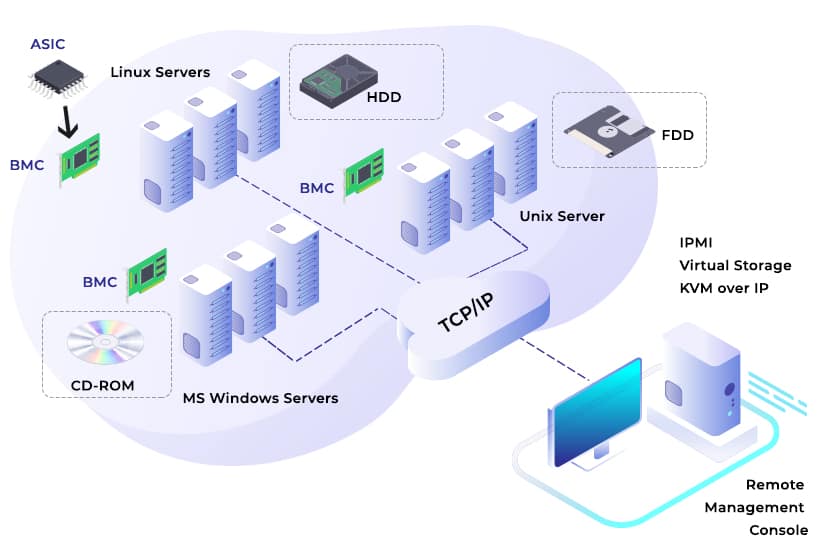

Comprehensive Guide to Intelligent Platform Management Interface (IPMI)

Intelligent Platform Management Interface (IPMI) is one of the most used acronyms in server management. IPMI became popular due to its acceptance as a standard monitoring interface by hardware vendors and developers.

So what is IPMI?

The short answer is that it is a hardware-based solution used for securing, controlling, and managing servers. The comprehensive answer is what this post provides.

What is IPMI Used For?

IPMI refers to a set of computer interface specifications used for out-of-band management. Out-of-band management means accessing and controlling computer systems through a dedicated channel, so administrators do not have to be in the same room as the system’s physical assets. IPMI supports remote monitoring and does not depend on the computer’s operating system.

IPMI runs on separate hardware attached to a motherboard or server. This separate hardware is the Baseboard Management Controller (BMC). The BMC acts like an intelligent middleman. BMC manages the interface between platform hardware and system management software. The BMC receives reports from sensors within a system and acts on these reports. With these reports, IPMI ensures the system functions at its optimal capacity.

IPMI collaborates with standard specification sets such as the Intelligent Platform Management Bus (IPMB) and the Intelligent Chassis Management Bus (ICMB). These specifications work hand-in-hand to handle system monitoring tasks.

Alongside these standard specification sets, IPMI monitors vital parameters that define the working status of a server’s hardware. IPMI monitors power supply, fan speed, server health, security details, and the state of operating systems.

You can compare the services IPMI provides to the automobile on-board diagnostic tool your vehicle technician uses. With an on-board diagnostic tool, a vehicle’s computer system can be monitored even with its engine switched off.

Use the IPMItool utility for managing IPMI devices. For instructions and IPMItool commands, refer to our guide on how to install IPMItool on Ubuntu or CentOS.
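If you script IPMItool, building the argument list in one place keeps connection options consistent. The sketch below assumes a remote BMC reachable over the IPMI v2.0 `lanplus` interface; the host address and user name are hypothetical, and the function only builds the command rather than running it. The `-E` option tells ipmitool to read the password from the `IPMI_PASSWORD` environment variable instead of the command line.

```python
import shlex
from typing import List

def ipmitool_command(host: str, user: str, *subcommand: str) -> List[str]:
    """Build an ipmitool argument list for a remote BMC over IPMI v2.0 (lanplus).

    -E makes ipmitool read the password from the IPMI_PASSWORD environment
    variable, which keeps it out of the process list and shell history.
    """
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", *subcommand]

# Hypothetical BMC address and user name, for illustration only
cmd = ipmitool_command("192.0.2.10", "admin", "sensor", "list")
print(shlex.join(cmd))
# ipmitool -I lanplus -H 192.0.2.10 -U admin -E sensor list
```

To execute it, you could pass the list to `subprocess.run(cmd, check=True)` with `IPMI_PASSWORD` set in the environment. Other subcommands, such as `chassis power status`, `sdr list`, or `sel list`, can be passed the same way.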

Features and Components of Intelligent Platform Management Interface

IPMI is a vendor-neutral standard specification for server monitoring. It comes with the following features which help with server monitoring:

- A Baseboard Management Controller – This is the microcontroller component central to the functions of IPMI.

- Intelligent Chassis Management Bus – An interface protocol that supports communication across chassis.

- Intelligent Platform Management Bus – A communication protocol that facilitates communication between controllers.

- IPMI Memory – The memory is a repository for an IPMI sensor’s data records and system event logs.

- Authentication Features – This supports the process of authenticating users and establishing sessions.

- Communications Interfaces – These interfaces define how IPMI messages are sent. IPMI sends messages over a direct out-of-band local-area network or a sideband local-area network, and it can also communicate through virtual local-area networks.
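To give a feel for what travels over the Intelligent Platform Management Bus, the sketch below lays out an IPMB request message. The IPMI specification protects each message with two checksums (one for the header, one for the rest), each chosen so that the covered bytes sum to zero modulo 256. The Get Device ID command (NetFn App 0x06, command 0x01) and the BMC address 0x20 follow the specification; the requester address 0x81 and the sequence number are illustrative assumptions, and the helper names are hypothetical.

```python
from typing import List, Sequence

def ipmb_checksum(data: Sequence[int]) -> int:
    """IPMI checksum: 2's complement of the 8-bit sum, so sum(data) + checksum == 0 (mod 256)."""
    return (-sum(data)) & 0xFF

def ipmb_request(rs_sa: int, net_fn: int, rq_sa: int, seq: int, cmd: int,
                 data: Sequence[int] = (), rs_lun: int = 0, rq_lun: int = 0) -> List[int]:
    """Lay out an IPMB request: header, header checksum, body, body checksum."""
    header = [rs_sa, (net_fn << 2) | rs_lun]          # responder address, NetFn/LUN
    body = [rq_sa, (seq << 2) | rq_lun, cmd, *data]   # requester address, seq/LUN, command, data
    return header + [ipmb_checksum(header)] + body + [ipmb_checksum(body)]

# Get Device ID sent to the BMC (0x20); requester 0x81 and seq=1 are illustrative
msg = ipmb_request(rs_sa=0x20, net_fn=0x06, rq_sa=0x81, seq=1, cmd=0x01)
print(" ".join(f"{b:02X}" for b in msg))  # 20 18 C8 81 04 01 7A
```

A receiver can validate each part by summing the bytes, checksum included, and checking that the result is zero modulo 256.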

Comparing IPMI Versions 1.5 & 2.0

The three major versions of IPMI are v1.0 (the first version, released in 1998), v1.5, and v2.0. Today, both v1.5 and v2.0 are still in use, and they come with different features that define their capabilities.

Starting with v1.5, its features include:

- Alert policies

- Serial messaging and alerting

- LAN messaging and alerting

- Platform event filtering

- Updated sensors and event types not available in v1.0

- Extended BMC messaging in channel mode

The newer version, v2.0, adds features that include:

- Firmware Firewall

- Serial over LAN

- VLAN support

- Encryption support

- Enhanced authentication

- SMBus system interface

Analyzing the Benefits of IPMI

IPMI’s ability to manage many machines in different physical locations is its primary value proposition. The option of monitoring and managing systems independently of a machine’s operating system is one significant benefit that many other monitoring tools lack. Other important benefits include:

Predictive Monitoring – Unexpected server failures lead to downtime. Downtime stalls an enterprise’s operations and could cost $250,000 per hour. IPMI tracks the status of a server and provides advance warnings about possible system failures. It monitors predefined thresholds and sends alerts when they are exceeded. Thus, the actionable intelligence IPMI provides helps reduce downtime.

Independent, Intelligent Recovery – When system failures occur, IPMI helps get operations back on track. Unlike many other server monitoring tools and software, IPMI is always accessible and facilitates server recoveries, even in situations where the server is off.

Vendor-neutral Universal Support – IPMI does not rely on any proprietary hardware. Most hardware vendors integrate support for IPMI, which minimizes compatibility issues. IPMI delivers its server monitoring capabilities in ecosystems with hardware from different vendors.

Agent-less Management – IPMI does not rely on a software agent running in the server’s operating system. With it, you can adjust settings such as the BIOS configuration without having to log in to the server’s OS or ask it for permission.
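The threshold-based alerting described under Predictive Monitoring can be sketched as follows. The six threshold names mirror IPMI sensor terminology (lower and upper non-critical, critical, and non-recoverable), but the fan-speed values are hypothetical, and treating a reading exactly at a threshold as crossing it is a simplifying assumption.

```python
from typing import Dict

# IPMI-style threshold names; the RPM values are hypothetical, not vendor defaults
FAN_THRESHOLDS: Dict[str, float] = {
    "lower_non_recoverable": 300,
    "lower_critical": 500,
    "lower_non_critical": 800,
    "upper_non_critical": 9000,
    "upper_critical": 10000,
    "upper_non_recoverable": 11000,
}

def classify(reading: float, t: Dict[str, float]) -> str:
    """Return the most severe threshold level crossed by a sensor reading."""
    if reading <= t["lower_non_recoverable"] or reading >= t["upper_non_recoverable"]:
        return "non-recoverable"
    if reading <= t["lower_critical"] or reading >= t["upper_critical"]:
        return "critical"
    if reading <= t["lower_non_critical"] or reading >= t["upper_non_critical"]:
        return "non-critical"
    return "ok"

for rpm in (4200, 750, 450, 200):
    print(rpm, classify(rpm, FAN_THRESHOLDS))
# 4200 ok
# 750 non-critical
# 450 critical
# 200 non-recoverable
```

A monitoring system would raise a warning on the first non-critical reading, which is the advance notice that lets administrators act before a critical failure.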

The Risks and Disadvantages of IPMI

Using IPMI comes with risks and a few disadvantages, which center on security and usability. In practice, the weaknesses include:

Cybersecurity Challenges – IPMI communication protocols sometimes leave loopholes that attackers can exploit, and, as statistics show, successful breaches are expensive. The IPMI installation and configuration procedures used can also leave a dedicated server vulnerable to exploitation. These security challenges led to the addition of encryption and firmware firewall features in IPMI version 2.0.

Configuration Challenges – Configuring IPMI may be difficult in situations where older network settings are incorrect. In cases like this, clearing the network configuration through the system’s BIOS can resolve the problem.

Updating Challenges – Installing update patches may sometimes lead to network failure, and switching ports on the motherboard may cause malfunctions. In these situations, rebooting the system can resolve the issue that caused the network to fail.

Server Monitoring & Management Made Easy

Intelligent Platform Management brings ease and versatility to server monitoring and management. By 2022, experts expect the IPMI market to hit the $3 billion mark. phoenixNAP bare metal servers come with IPMI, giving you access to the IPMI of every server you use. Get started by signing up today.


17 Best Server Monitoring Software & Tools for 2020

The adoption of cloud technologies has made setting up and managing large numbers of servers for business and application needs quite convenient. Organizations deploy many servers to meet load-balancing needs and to prepare for situations like disaster recovery.

Given these trends, server monitoring tools have become extremely important. While there are many types of server management tools, they cater to different aspects of monitoring servers. We looked at 17 of the best software tools for monitoring servers in this article.

Best Monitoring Tools for Servers

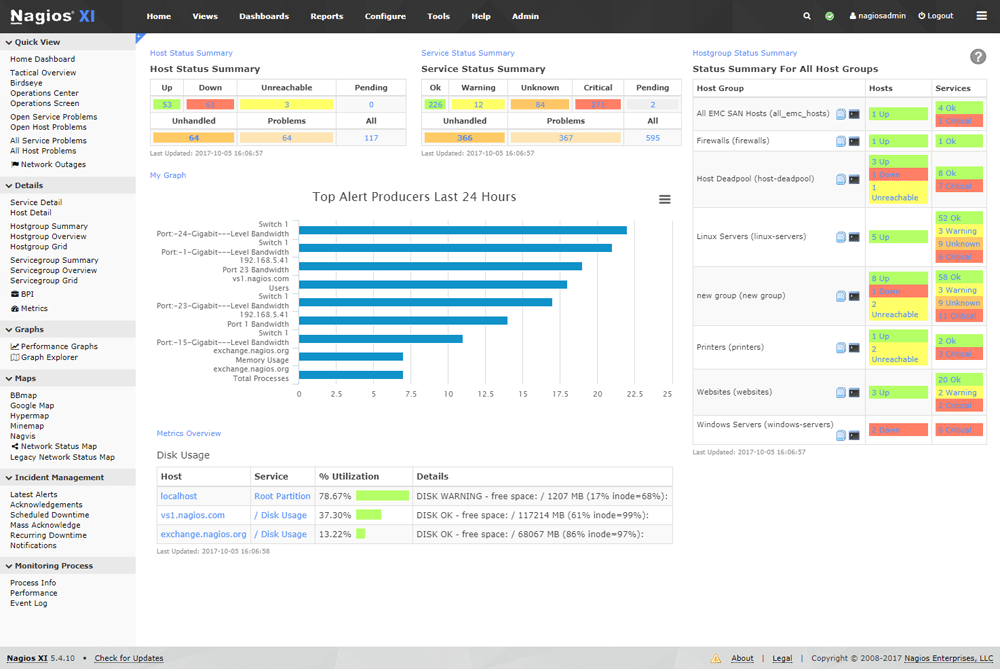

1. Nagios XI

A list of server monitoring software would not be complete without Nagios. It’s a reliable tool for monitoring server health. This Linux-based monitoring system provides real-time monitoring of operating systems, applications, infrastructure performance, and system metrics.

A variety of third-party plugins makes Nagios XI able to monitor all types of in-house applications. Nagios is equipped with a robust monitoring engine and an updated web interface to facilitate excellent monitoring capabilities through visualizations such as graphs.

Getting a central view of your server and network operations is the main benefit of Nagios. Nagios Core is available as a free monitoring system. Nagios XI comes recommended due to its advanced monitoring, reporting, and configuration options.
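Nagios plugins follow a simple convention: they print one status line (optionally followed by `|` and performance data) and exit with 0 for OK, 1 for WARNING, 2 for CRITICAL, or 3 for UNKNOWN. The sketch below is a minimal, hypothetical disk-usage check written to that convention in Python; the 80% and 90% thresholds are arbitrary defaults, not Nagios settings.

```python
import shutil

# Standard Nagios plugin exit codes
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3

def check_disk(path: str = "/", warn_pct: float = 80.0, crit_pct: float = 90.0):
    """Return (exit_code, status_line) for the disk usage of `path`."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        return UNKNOWN, f"DISK UNKNOWN - {exc}"
    used_pct = 100.0 * usage.used / usage.total
    if used_pct >= crit_pct:
        code, label = CRITICAL, "CRITICAL"
    elif used_pct >= warn_pct:
        code, label = WARNING, "WARNING"
    else:
        code, label = OK, "OK"
    # Text after "|" is performance data: value;warn;crit;min;max
    perfdata = f"used_pct={used_pct:.1f}%;{warn_pct};{crit_pct};0;100"
    return code, f"DISK {label} - {used_pct:.1f}% used on {path} | {perfdata}"

def main() -> int:
    code, message = check_disk()
    print(message)
    return code  # Nagios maps this value to the service state
```

Installed as a plugin, the script would end with `sys.exit(main())` so that Nagios receives the exit code.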

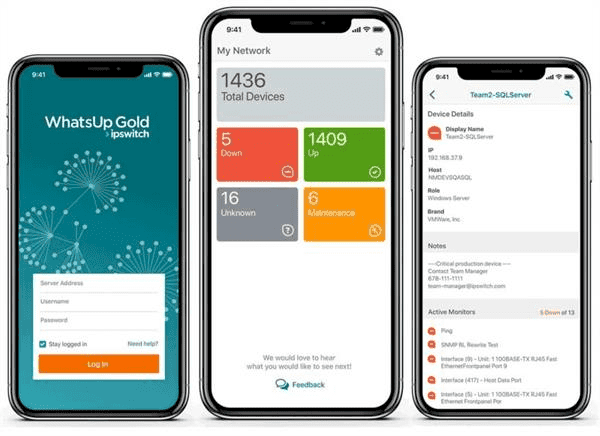

2. WhatsUp Gold

WhatsUp Gold is a well-established monitoring tool for Windows servers. Due to its robust layer 2/3 discovery capabilities, WhatsUp Gold can create detailed interactive maps of the entire networked infrastructure. It can monitor web servers, applications, virtual machines, and traffic flow across Windows, Java, and LAMP environments.

It provides real-time alerts via email and SMS in addition to the monitoring and management capabilities offered in the integrated mobile application. Its integrated REST API makes it possible to integrate monitoring data with other applications and to automate many tasks.

WhatsUp Gold provides specific monitoring solutions for AWS, Azure, and SQL Server environments. These integrate with native interfaces and collect data regarding availability, cost, and many other environment-specific metrics.

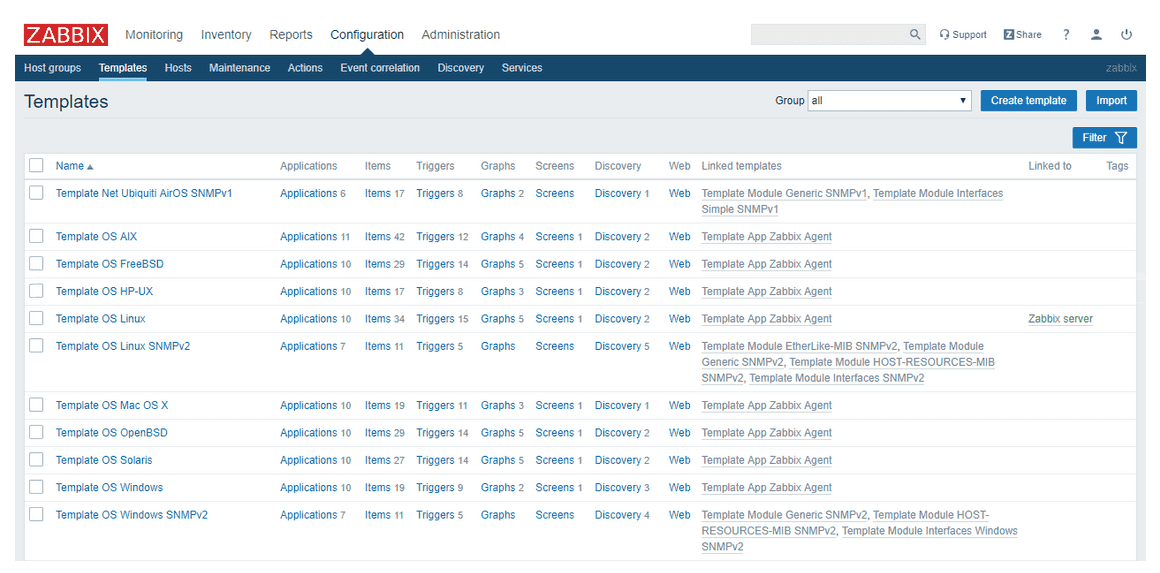

3. Zabbix

Zabbix is a free and open-source Linux server monitoring tool. It is an enterprise-level monitoring solution for servers, networks, cloud services, applications, and services. One of its most significant advantages is that you can configure it directly from the web interface, rather than managing text files as with some other tools, such as Nagios.

Zabbix provides a multitude of metrics like CPU usage, free disk space, temperature, fan state, and network status in its network management software. Also, it provides ready-made templates for popular servers like HP, IBM, Lenovo, Dell, and operating systems such as Linux, Ubuntu, and Solaris.

The monitoring capabilities of Zabbix are enhanced even more through the possibility of setting complex triggers and dependencies for data collection and alerting.

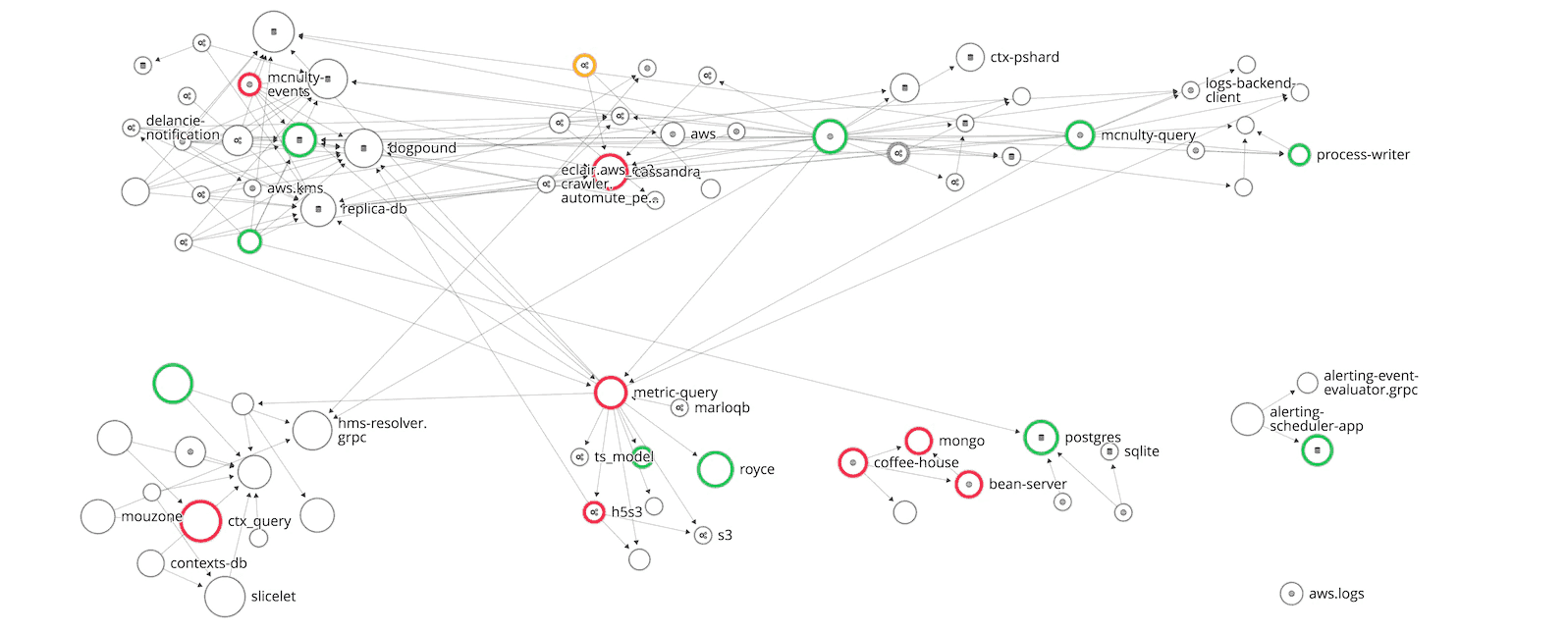

4. Datadog

Datadog is a consolidated monitoring platform for your servers, applications, and stacks. Named a leader in intelligent application and server monitoring by the Forrester Wave in 2019, Datadog boasts a centralized dashboard that brings many metrics together.

Datadog’s monitoring features cover servers and extend into the realm of source control and bug tracking as well. It also tracks many metrics, such as traffic by source and containers in cloud-native environments. Notifications are available by email, Slack, and many other channels.

Mapping dependencies and application architecture across teams has allowed users of Datadog to build a complete understanding of how applications and data flow work across large environments.

5. SolarWinds Server and Application Monitor

SolarWinds monitors your server infrastructure, applications, databases, and security. Its Systems Management Software provides monitoring solutions for servers, virtualization, disk space, server configurations, and backups.

The main advantage here is that SolarWinds Server and Application Monitor lets you get started within minutes, thanks to its vast number (1,200+) of predefined templates for many types of servers and cloud services. These templates can quickly be customized to suit virtually any kind of setup.

SolarWinds application monitoring offers a comprehensive system for virtual servers across on-premises, cloud, and hybrid environments, helping you overcome VM sprawl without having to switch between different tools. Tools are available for capacity planning, event monitoring, and data analysis with alerts and dashboards.

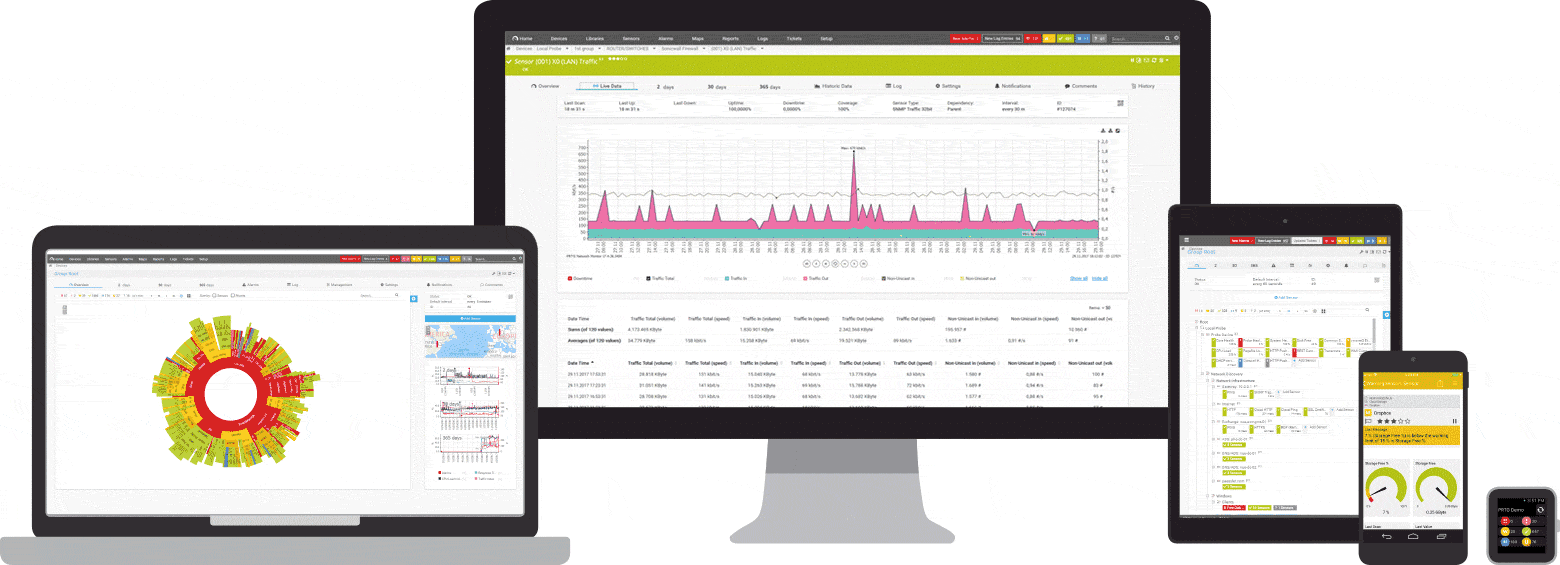

6. Paessler PRTG

Paessler Router Traffic Grapher (PRTG) is server management software that uses SNMP, packet sniffing, and NetFlow. PRTG caters to both Windows servers and Linux environments. A wide range of server monitoring software applications is available for services, networks, cloud, databases, and applications.

The PRTG server monitoring solution caters to web servers, database servers, mail servers, and virtual servers. Cloud monitoring is the strong suit of PRTG, providing a centralized monitoring system for all types of IaaS, SaaS, and PaaS solutions such as Amazon, Docker, and Azure.

PRTG monitors firewalls and IP addresses to keep track of inbound and outbound traffic. It continually monitors your network security, providing regular firewall status updates and automatic notifications through its integrated web and mobile applications.

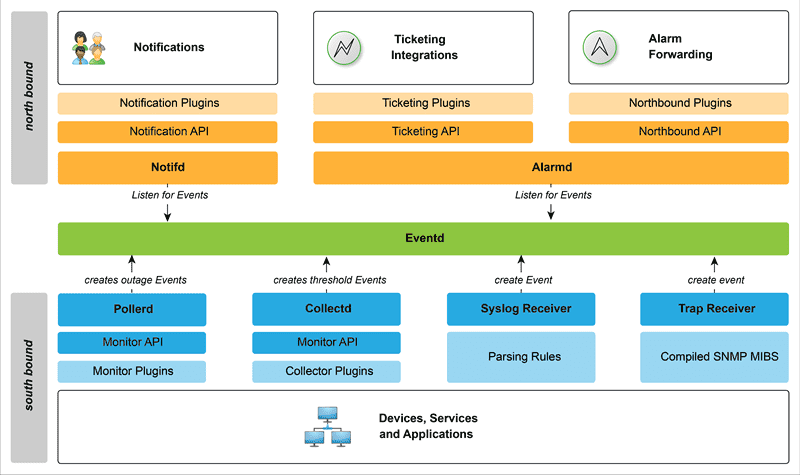

7. OpenNMS

OpenNMS is a fully open-source server monitoring solution published under the AGPLv3 license. It is built for scalability and can monitor millions of devices from a single instance.

It has a flexible and extensible architecture that lets you extend its service polling and performance data collection frameworks. OpenNMS is supported both by a large community and commercially by The OpenNMS Group.

OpenNMS brings together the monitoring of many types of servers and environments by normalizing device-specific messages and distributing them through a powerful REST API. Notifications are available via email, Slack, Jabber, Twitter, and the Java-native notification strategy API. OpenNMS also provides ticketing integrations with RT, JIRA, OTRS, and many others.

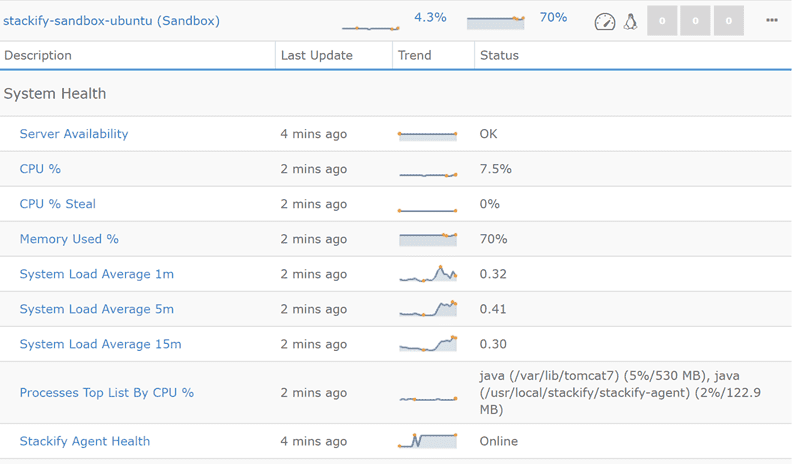

8. Retrace

Retrace includes robust monitoring capabilities and is highly scalable. It is recommended for new teams without much monitoring experience because it provides smart defaults based on your environment, giving you a head start in monitoring servers and applications.

It covers application performance monitoring, error tracking, log management, and application metrics. Retrace notifies relevant users via SMS, email, and Slack alerts based on multiple monitoring thresholds and notification groups.

Custom dashboards allow Retrace to provide both holistic and granular data on server health. Dashboard widgets collect data on CPU usage, disk space, network utilization, and uptime. Retrace supports both Windows and Linux servers.

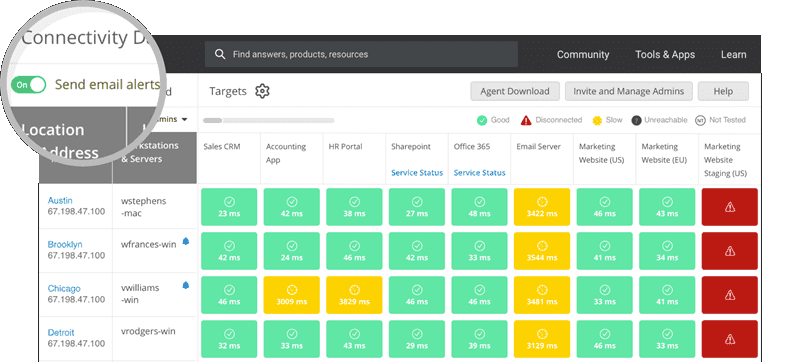

9. Spiceworks Network Monitor

Spiceworks Network Monitor is a simple, free tool for server and network monitoring. The connectivity dashboard can be set up on any server in minutes, and monitoring begins as soon as you configure your application URLs.

You receive real-time insights into slow network connections and overloaded applications, both on-premises and in the cloud, so you can fix issues before they become serious. One disadvantage is the lack of a proper notification mechanism. Spiceworks has promised to address this soon with email alerts for server and application events.

The monitoring solution is fully integrated with the Spiceworks IT management cloud tools suite and also provides free support through online chat and phone.

10. vRealize Hyperic

vRealize Hyperic, an open-source server and network monitoring tool from VMware, monitors a wide range of operating systems, middleware, and applications in both physical and virtual environments.

Its infrastructure and OS application monitoring tools help you understand availability, utilization, events, and changes across every layer of your virtualization stack, from the vSphere hypervisor to guest operating systems.

Middleware monitors collect data on thousands of metrics useful for application performance monitoring. The vRealize Operations Manager application provides centralized monitoring for infrastructure, middleware, and applications.

11. Icinga

Icinga has a simple set of goals: monitor availability, provide access to relevant data, and raise alerts that keep users promptly informed. Its integrated monitoring engine can monitor large environments, including data centers.

The fast web interface gives you access to all relevant data. You can build custom views by grouping and filtering individual elements and combining them in custom dashboards, which lets you act quickly on any issues Icinga identifies.

Notifications arrive via email, SMS, and integrated web and mobile applications. Icinga is fully integrated with VMware environments and fetches data about hosts, virtual servers, databases, and many other metrics and displays them on a clean dashboard.

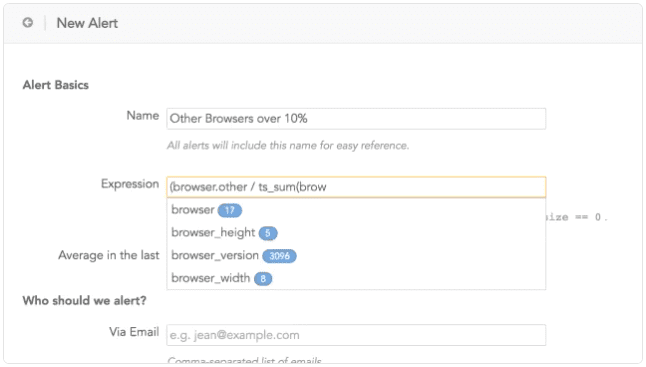

12. Instrumental

Instrumental is a clean and intuitive application that monitors your servers and applications. It provides monitoring across many platforms, such as AWS and Docker, many database types, and application stacks such as .NET, Java, Node.js, PHP, Python, and Ruby.

In addition to its native data collection methods, Instrumental integrates with many other tools, such as Statsite, Telegraf, and StatsD. The built-in query language lets you transform, aggregate, and time-shift data to suit any visualization you require.
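StatsD, one of the integrations listed above, accepts metrics as short text lines in the form `name:value|type`, usually sent over UDP. The sketch below is a generic illustration of that line protocol, not Instrumental’s own agent. It assumes a StatsD-compatible daemon listening on localhost at the conventional UDP port 8125, and the metric names are hypothetical.

```python
import socket

# StatsD metric types: counter, gauge, timer (milliseconds), set.
VALID_TYPES = {"c", "g", "ms", "s"}


def format_statsd(metric: str, value: float, metric_type: str = "c") -> str:
    """Build one StatsD line such as 'web.requests:1|c'."""
    if metric_type not in VALID_TYPES:
        raise ValueError(f"unsupported StatsD type: {metric_type}")
    return f"{metric}:{value}|{metric_type}"


def send_statsd(line: str, host: str = "127.0.0.1", port: int = 8125) -> None:
    """Send one line over UDP; delivery is fire-and-forget, with no acknowledgement."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(line.encode("ascii"), (host, port))


if __name__ == "__main__":
    # Hypothetical metric names; replace them with your own naming scheme.
    send_statsd(format_statsd("web.requests", 1))
    send_statsd(format_statsd("db.query_time", 42, "ms"))
```

Using UDP keeps the application from blocking on the monitoring backend, at the cost of occasionally losing a data point.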

A purposefully designed dashboard interface allows viewing holistic data as well as digging deep into each server and application. Instrumental provides configurable alerts via email, SMS, and HTTP notification based on changes to metrics.

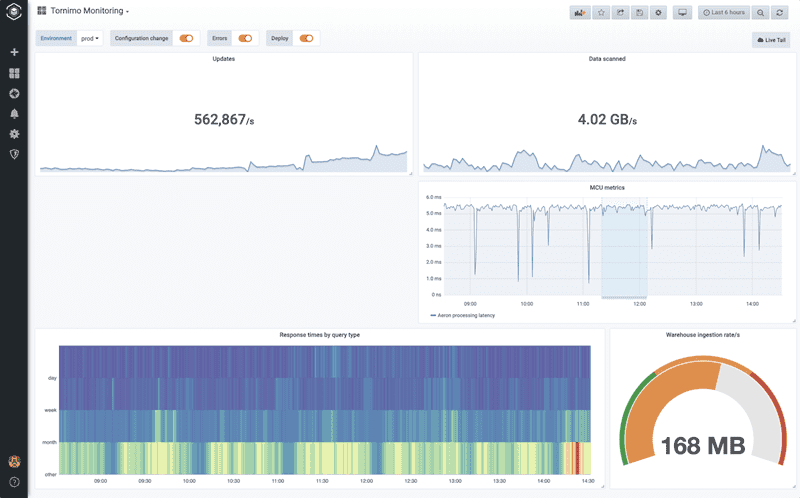

13. Tornimo

Tornimo brings real-time monitoring with unlimited scaling. It is a Graphite-compatible application monitoring platform with a front end built on Grafana dashboards. It also supports migrating from a custom Graphite deployment or from many other compatible SaaS platforms in minutes.
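Graphite compatibility means a client that speaks Graphite’s plaintext protocol should, in principle, be able to send data to such a platform. In that protocol, each metric is one line of the form `<path> <value> <timestamp>`, sent over TCP (Carbon’s usual plaintext port is 2003). This is a generic sketch, not Tornimo’s documented API: the host is hypothetical, and a hosted service may require a different port or authentication, so check the provider’s documentation.

```python
import socket
import time
from typing import List, Optional


def graphite_line(path: str, value: float, timestamp: Optional[int] = None) -> str:
    """Format one metric in Graphite's plaintext protocol: '<path> <value> <timestamp>' plus newline."""
    if not path or " " in path:
        raise ValueError("metric path must be non-empty and contain no spaces")
    ts = int(time.time()) if timestamp is None else int(timestamp)
    return f"{path} {value} {ts}\n"


def send_graphite(lines: List[str], host: str, port: int = 2003) -> None:
    """Send plaintext lines over a single TCP connection."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall("".join(lines).encode("ascii"))


# Example (hypothetical host and metric path):
# send_graphite([graphite_line("servers.web01.cpu.load", 0.75)], host="graphite.example.com")
```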

Tornimo uses a proprietary database system that can handle up to a million metrics as your environment grows. Because it offers consistent response times regardless of data volume, clients trust Tornimo to monitor mission-critical systems.

A significant advantage of Tornimo over many other monitoring tools is that it does not average older data to save on storage. It allows users to leverage older data to identify anomalies with ease.
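Why does averaging older data hide anomalies? The short, generic illustration below (not a model of Tornimo’s storage engine) rolls up one hour of hypothetical per-minute CPU readings into a single hourly mean, and a one-minute spike to 100% nearly disappears.

```python
from statistics import mean
from typing import List


def downsample_mean(points: List[float], bucket: int) -> List[float]:
    """Average consecutive groups of `bucket` points, as a storage rollup would."""
    if bucket < 1:
        raise ValueError("bucket must be at least 1")
    return [mean(points[i:i + bucket]) for i in range(0, len(points), bucket)]


# One hour of per-minute CPU readings (percent) with a single one-minute spike.
raw = [20.0] * 60
raw[30] = 100.0

hourly = downsample_mean(raw, 60)
print(max(raw))     # 100.0: the spike is obvious in the raw data
print(max(hourly))  # roughly 21.3: averaging all but erases it
```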

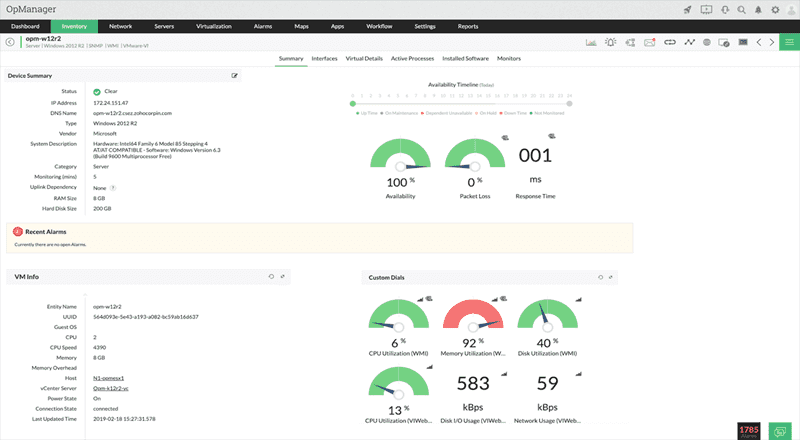

14. ManageEngine OpManager

OpManager from ManageEngine is a trusted server monitoring software that has robust monitoring capabilities for all types of network nodes such as routers and switches, servers, VMs, and almost anything that has an IP address.

With over 2,000 built-in performance monitors, OpManager covers both physical and virtual servers with multi-level thresholds and instant alerts. It provides customizable dashboards to monitor your network at a glance.
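Multi-level thresholds map one reading to escalating severities instead of a single on/off alarm. In OpManager these levels are configured in the product itself. The sketch below is only a generic illustration of the idea, with hypothetical threshold values and a simplified two-level scheme.

```python
def classify(value: float, warning: float, critical: float) -> str:
    """Map a reading to a severity level; assumes higher values are worse."""
    if warning >= critical:
        raise ValueError("warning threshold must be below the critical threshold")
    if value >= critical:
        return "critical"
    if value >= warning:
        return "warning"
    return "ok"


# Hypothetical CPU thresholds in percent.
for cpu in (45.0, 82.0, 97.0):
    print(cpu, classify(cpu, warning=80.0, critical=95.0))
# 45.0 ok
# 82.0 warning
# 97.0 critical
```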

As a server monitoring solution for Windows, Linux, Solaris, and Unix, OpManager supports system health monitoring and process monitoring through SNMP and WMI for many platforms such as VMware, Hyper-V, and Citrix XenServer.

15. ScienceLogic SL1

ScienceLogic’s server management tools let you monitor all your server and network resources by configuration, performance, utilization, and capacity, across a multitude of vendors and server technologies.

Supported platforms include cloud services such as AWS, Azure, Google Cloud, and OpenStack. ScienceLogic also supports hypervisors like VMware, Hyper-V, Xen, and KVM, as well as containers like Docker. In terms of operating systems, it supports Windows, Unix, and Linux.

ScienceLogic’s custom dashboards allow monitoring through ready-made or custom monitoring policies, using health checks and ticket queues associated with predefined events. It uses advanced API connectivity to integrate with cloud services and provide accurate monitoring data.

16. Panopta

Panopta facilitates server and network monitoring for on-premises, cloud, and hybrid servers. Panopta provides a unified view across all your server environments through server agents and native cloud platform integrations.

A comprehensive library of out-of-the-box metrics makes setting up Panopta quick and convenient. You can configure these through reporting features and customizable dashboards for a clear, holistic view. Panopta reduces alert fatigue and false positives by filtering for accurate, actionable information.
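One common way monitoring tools cut false positives is to alert only on a sustained breach rather than a single spike. Panopta’s internal filtering is not described here, so treat the hypothetical `should_alert` helper below as a generic sketch of that technique, with an assumed default of three consecutive samples.

```python
from typing import Sequence


def should_alert(samples: Sequence[float], threshold: float, consecutive: int = 3) -> bool:
    """Fire only when the last `consecutive` samples all exceed `threshold`."""
    if consecutive < 1:
        raise ValueError("consecutive must be at least 1")
    recent = list(samples[-consecutive:])
    return len(recent) == consecutive and all(s > threshold for s in recent)


print(should_alert([50, 97, 60, 55], threshold=90))  # False: one brief spike
print(should_alert([50, 92, 95, 98], threshold=90))  # True: sustained breach
```

A larger `consecutive` value means fewer false alarms but slower detection, so the setting is a trade-off between noise and response time.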

CounterMeasures is a Panopta tool for configuring predefined remedial actions that resolve recurring issues as they are detected. Panopta’s SaaS-delivered monitoring platform gives organizations a single point for monitoring all their infrastructure, without additional equipment and without worrying about operating systems or licenses.

17. Monitis

Monitis is a simplified monitoring tool for servers, applications, and more with a simple sign-up process and no software to be set up. A unified dashboard provides data on uptime and response time, server health, and many other custom metrics.

Instant alerts are supported via email, SMS, Twitter, and phone when any of the pre-defined triggers are activated. Monitis supports alerts even when your network is down. It also provides an API for additional monitoring needs so that users can import metrics and data to external applications.

Monitis provides monitoring capabilities along with reporting that users can share. Users can access these features through both the web interface as well as the integrated mobile applications.

Choosing Server Monitoring Software

The top server monitoring tools we listed have one goal in common – to monitor the uptime and health of your servers and applications. Most of these tools offer free trials or free versions with limited functionality, so make sure to try them out before selecting the best server monitoring tool for your servers.

Looking for application performance monitoring tools? Read our guide on the 7 Best Website Speed and Performance Testing Tools.

If you would like to learn more, bookmark our blog and follow the latest developments on servers, container technology, and many other cloud-related topics.


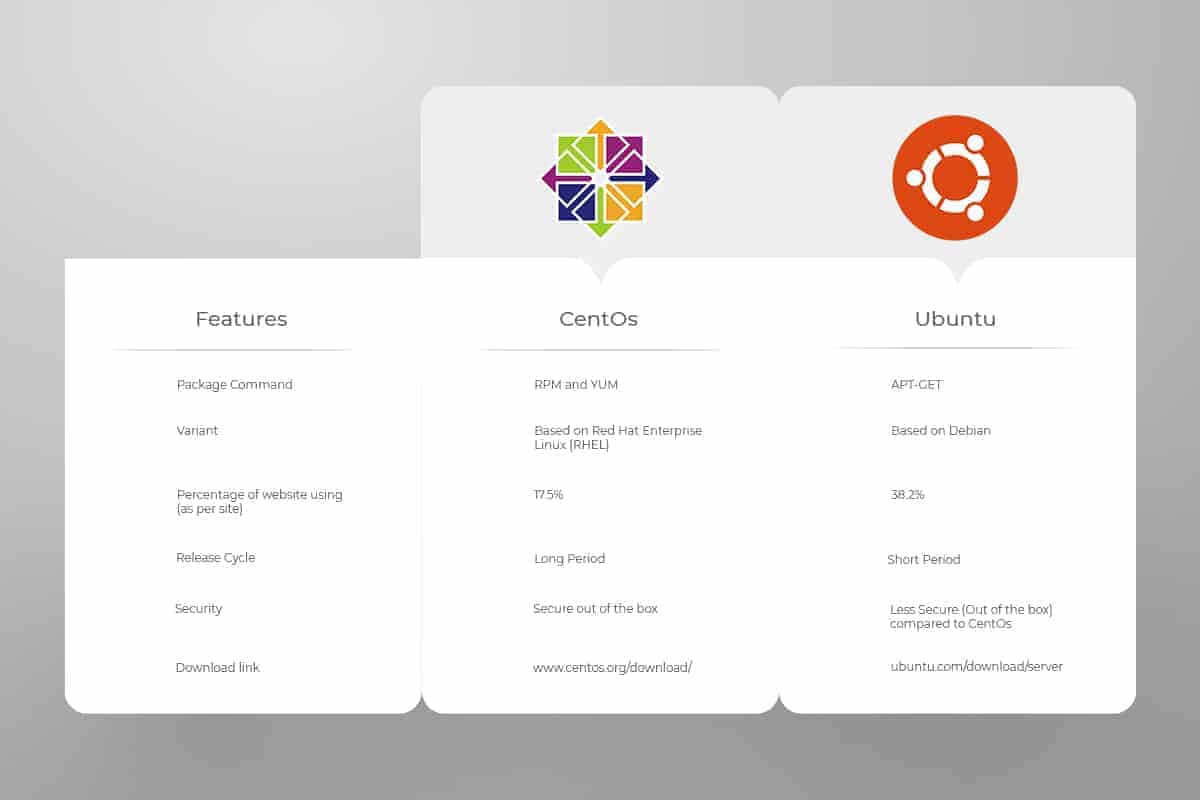

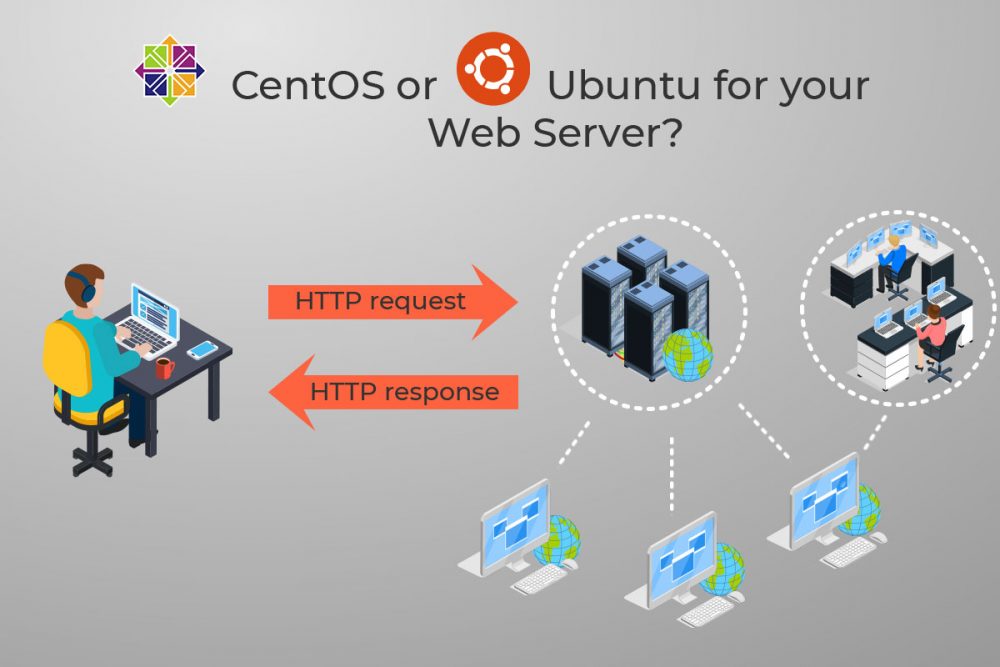

CentOS vs Ubuntu: Choose the Best OS for Your Web Server

Don’t know whether to use CentOS or Ubuntu for your server? Let’s compare both and decide which one you should use on your server or VPS. Once we highlight the strengths and weaknesses of these two principal Linux distributions for running a web server, the choice should become clear.

Linux is an open-source operating system currently powering most of the Internet. There are hundreds of different versions of Linux. For web servers, the two most popular versions are Ubuntu and CentOS. Both are open-source and free community-supported operating systems. You’ll be happy to know these distributions have a ton of community support and, therefore, regularly available updates.

Unlike Windows, Linux is distributed under an open-source license that encourages users to experiment with the code. This flexibility has created loyal online communities dedicated to building and improving the core Linux operating system.

Quick Overview of Ubuntu and CentOS

Ubuntu

Ubuntu is a Linux distribution based on Debian Linux. The word Ubuntu comes from the Nguni Bantu language, and it generally means “I am what I am because of who we all are.” It represents Ubuntu’s guiding philosophy of helping people come together in the community. Canonical, the company behind Ubuntu, set out to make a Linux OS that was easy to use and had excellent community support.

Ubuntu boasts a robust application repository. It is updated frequently and is designed to be intuitive and easy to use. It is also highly customizable, from the graphical interface down to web server packages and internet security.

CentOS

CentOS is a Linux distribution based on Red Hat Enterprise Linux (RHEL). The name CentOS is an acronym for Community Enterprise Operating System. Red Hat Linux has been a stable and reliable distribution since the early days of Linux, and it has mostly been deployed in high-end corporate IT environments. CentOS continues the tradition started by Red Hat, providing an extremely stable and thoroughly tested operating system.

Like Ubuntu, CentOS is highly customizable and stable. Due to its early dominance, many conventions are built around the CentOS architecture. Cutting-edge security measures implemented for corporate use in RHEL quickly carry over to CentOS.

Comparing the Features of CentOS and Ubuntu Servers

One key feature of CentOS and Ubuntu is that they are both free. You can download a copy at no charge and install it on your own cheap dedicated server.

Both distributions can be written to a USB drive and booted without making permanent changes to your operating system. A bootable drive lets you take the system for a test run before you install it.

Basic architecture

CentOS is based on the Red Hat Enterprise Linux architecture, while Ubuntu is based on Debian. This is important when looking at software package management systems. Both versions use a package manager to resolve dependencies, perform installations, and track updates.

Ubuntu uses the apt package manager and installs software from .deb packages. CentOS uses the yum package manager and installs .rpm packages. They both work in much the same way, but .deb packages cannot be installed natively on CentOS, and .rpm packages cannot be installed natively on Ubuntu.

The difference lies in the availability of packages for each system: some packages are not as readily available on Ubuntu as they are on CentOS, and vice versa. When working with your developers, find out their preference, as they usually stick to one package type (.deb or .rpm).

Another difference is the structure of individual software packages. Apache, one of the leading web servers, works a little differently in Ubuntu than in CentOS: the Apache package and service are named apache2 in Ubuntu and httpd in CentOS.
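These naming differences matter when you script server setup. The sketch below reads the standard `/etc/os-release` file to decide whether a machine belongs to the Debian/Ubuntu or the Red Hat/CentOS family, then builds (but does not run) the Apache install and start commands. Assumptions: the system uses systemd, and the short ID list is a simplification. Newer RHEL-family releases ship dnf, with yum kept as a compatible command name. The helper names are hypothetical.

```python
from typing import Dict, List

INSTALL_CMD = {"debian": ["apt-get", "install", "-y"], "rhel": ["yum", "install", "-y"]}
APACHE_NAME = {"debian": "apache2", "rhel": "httpd"}  # package and service name


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse the KEY=value lines of /etc/os-release, dropping surrounding quotes."""
    info: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip().strip('"').strip("'")
    return info


def distro_family(info: Dict[str, str]) -> str:
    """Classify a system as 'debian' (Ubuntu) or 'rhel' (CentOS) from ID and ID_LIKE."""
    for candidate in [info.get("ID", "")] + info.get("ID_LIKE", "").split():
        if candidate in ("debian", "ubuntu"):
            return "debian"
        if candidate in ("rhel", "centos", "fedora"):
            return "rhel"
    raise ValueError(f"unrecognized distribution: {info.get('ID', '?')}")


def apache_commands(info: Dict[str, str]) -> List[List[str]]:
    """Return the install and service-start commands for Apache on this system."""
    family = distro_family(info)
    name = APACHE_NAME[family]
    return [INSTALL_CMD[family] + [name], ["systemctl", "start", name]]
```

On a real server, pass the contents of `/etc/os-release` to `parse_os_release`, then review the commands before running them with `sudo`.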

Software

If you’re strictly going by the number of packages, Ubuntu has a definitive edge: its repository lists tens of thousands of individual software packages available for installation, while CentOS lists only a few thousand.

The other side of this argument is that many graphical server tools, like cPanel, are written solely for Red Hat-based systems. While Ubuntu has similar tools, some of the most widely used tools in the industry are only available on CentOS.

Stability, security, and updates

Ubuntu is updated frequently. A new version is released every six months. Ubuntu offers LTS (Long-Term Support) versions every two years, which are supported for five years. These different releases allow users to choose whether they want the “latest and greatest” or the “tried-and-true.” Because of the frequent updates, newer Ubuntu releases often include newer software. That can be fun for playing with new options and technology, but it can also create conflicts with existing software and configurations.

CentOS is updated infrequently, partly because its development team is smaller and partly because of the extensive testing each component undergoes before release. CentOS versions are supported for ten years from the date of release and include security and compatibility updates. However, the slow release cycle means a lack of access to third-party software updates. You may need to manually install third-party software or updates if they haven’t made it into the repository. CentOS is reliable and stable. Its core operating system is relatively small and lightweight compared to Windows, which helps improve speed and reduces the disk space the operating system occupies.

Both CentOS and Ubuntu are stable and secure, with patches released regularly.

Support and troubleshooting

If something goes wrong, you’ll want a support path. Like many enterprise IT vendors, Canonical offers paid support for Ubuntu. An additional advantage is that the Ubuntu forums have many expert users, so it’s usually easy to find a solution to common errors or problems.

With a new release coming out every six months, it’s not feasible to offer full support for every version. Regular releases are supported for nine months from the release date. Regular users will probably upgrade to the newest versions as they are released.

Ubuntu also releases LTS (Long-Term Support) versions. These are supported for a full five years from the release date. LTS releases receive ongoing patches and updates, so you can keep an LTS release installed (without needing to upgrade) for five years.

CentOS support is often managed by third-party providers. The project provides excellent documentation, plus forums and developer blogs that can help you resolve an error. In part, CentOS relies on its community of Red Hat users to identify and manage problems.

The CentOS Project is open-source and designed to be freely available. If you need paid support, it’s recommended that you consider paying for Red Hat Enterprise licensing and support. Where CentOS shines is in its dedication to helping its customers. A CentOS operating system is supported for ten years from the date of release.

New operating system releases are published every two years. This frequency can lower the total cost of ownership since you can stretch a single operating system cycle for a full decade. Above, ‘support’ refers both to the ability to get help from developers and the developers’ commitment to patching and updating software.
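To plan upgrades, you can turn these support windows into dates. The sketch below uses the figures given in this article, counted from the release date: 9 months for regular Ubuntu releases, 5 years for Ubuntu LTS, and 10 years for CentOS. The release date is hypothetical, and the official lifecycle pages from Canonical and the CentOS Project may list end dates that differ from this estimate.

```python
import calendar
from datetime import date

# Support windows as described in this article, in months from the release date.
SUPPORT_MONTHS = {"ubuntu-regular": 9, "ubuntu-lts": 60, "centos": 120}


def add_months(start: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of the target month."""
    total = start.month - 1 + months
    year, month = start.year + total // 12, total % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def end_of_support(release: date, track: str) -> date:
    """Estimate when a release leaves its standard support window."""
    return add_months(release, SUPPORT_MONTHS[track])


# Hypothetical release date used for all three tracks.
release = date(2020, 4, 15)
for track in SUPPORT_MONTHS:
    print(track, end_of_support(release, track))
# ubuntu-regular 2021-01-15
# ubuntu-lts 2025-04-15
# centos 2030-04-15
```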

Ease of use

Ubuntu has gone to great lengths to make its system user-friendly. An Ubuntu server is more focused on usability. The graphical interface is intuitive and easy to manage, with a handy search function. Running utilities from the command line is straightforward. Most commands will suggest the proper usage, and the sudo command makes it easy to resolve “Permission denied” errors.

While CentOS has some help and community support, Ubuntu has a solid knowledge base, including how-to guides, tutorials, and an active community forum.

Ubuntu uses the apt-get package manager, which has a different syntax from yum, but the functions are about the same. Many of the applications that CentOS servers use, such as cPanel, have similar alternatives for Ubuntu. Finally, Ubuntu offers a more seamless software installation process. You can still tinker under the hood, but the most commonly used software and operating system features are included and updated automatically.

Ubuntu’s regular updates can be a liability. They can conflict with your existing software configuration. It’s not always a good thing to use the latest technology. Sometimes it’s better to let someone else work out the bugs before you install an update.

CentOS is typically for more advanced users. One flaw with CentOS is a steep learning curve. There are fewer how-to guides and community forums available if you run into a problem.

There seems to be less hand-holding in CentOS – most guides presume that you know the basics, like sudo or basic command-line features. These are skills you can learn working with other Red Hat professionals or by taking certifications.

With CentOS built around the Red Hat architecture, many old-school Linux users find it more familiar and comfortable. CentOS is also used widely across the Internet at the server level, so using it can improve cross-compatibility. Many CentOS server utilities, such as cPanel, are built to work only in Red Hat Linux.

CentOS or Ubuntu for Development

CentOS developers take longer to test and approve updates, which is why CentOS releases updates much more slowly than other Linux variants. If you have a strong business need for stability or your environment does not tolerate change well, this can be more helpful than a faster release schedule.

Because of CentOS’s smaller development team and slower release cycle, some software updates are not applied automatically. A newer version of a software application may be released but may not make it into the official repository. If this happens, you may be responsible for manually checking for and installing security updates. Less-experienced users might find this process too challenging.

Ubuntu, as an “out-of-the-box” operating system, includes many different features. There are three different editions of Ubuntu:

- Desktop, for everyday end users

- Server, for web hosting over the Internet or in the cloud

- Core, for other devices (such as cars and smart TVs)

A basic installation of Ubuntu Server should include most of the applications you need to configure your server to host files over a network, along with the latest kernel and operating system features. The Desktop edition adds extra software, such as an open-source office productivity suite.

Ubuntu’s focus on features and usability relies on the release of new versions every six months. This is very helpful if you prefer to use the latest software available. These updates can also become a liability if you have custom software that doesn’t play nicely with newer updates.

Cloud deployment

Ubuntu offers excellent support for container virtualization. It supports cloud deployment and is expanding its market influence relative to CentOS. In June 2019, “Canonical announced full enterprise support for Kubernetes 1.15 kubeadm deployments, its Charmed Kubernetes, and MicroK8s; the popular single-node deployment of Kubernetes.”

CentOS is not being left behind: it competes by offering three private cloud choices, as well as a public cloud platform through AWS. CentOS has a high standard of documentation and gives its users a mature platform to build on.

Gaming Servers

Ubuntu GamePack is an Ubuntu-based distribution custom-designed for gamers. It does not come with games preinstalled; instead, it ships with PlayOnLinux, Wine, Lutris, and the Steam client. It acts as a meeting point where you can play Windows, Linux, console, and Steam games.

It’s a hybrid version of the Ubuntu OS, since it also supports Adobe Flash and Oracle Java, which allows for seamless online gaming. Ubuntu GamePack is optimized for over six thousand Windows and Linux games, which are guaranteed to launch and run on it. If you’re more familiar with Ubuntu, choose the desktop version for gaming.

CentOS is not as popular for gaming as Ubuntu. If you’ve used CentOS for your server, you can try the Fedora-based distribution for gaming. It’s called Fedora Games Spin, and it’s the preferred Linux distribution for gaming servers among CentOS/Red Hat/Fedora Linux users.

Most of the best gaming distros are Debian/Ubuntu-based, but if you’re committed to CentOS, you can run Fedora Games Spin in live mode from USB or DVD media without installing it. It comes with an Xfce desktop environment and over two thousand Linux games, giving you a single platform for playing all Fedora games.

Comparison Table of CentOS and Ubuntu Linux Versions

| Features | CentOS | Ubuntu |
|---|---|---|
| Security | Strong | Good (needs further configuration) |
| Support Considerations | Solid documentation. Active but limited user community. | High-level documentation and large support community |
| Update Cycle | Infrequent | Often |
| System Core | Based on Red Hat | Based on Debian |
| Cloud Interface | CloudStack, OpenStack, OpenNebula | OpenStack |
| Virtualization | Native KVM support | Xen, KVM |
| Stability | High | Solid |
| Package Management | YUM | aptitude, apt-get |
| Platform Focal Point | Targets server market, choice of larger corporations | Targets desktop users |
| Speed Considerations | Excellent (depending on hardware) | Excellent (depending on hardware) |
| File Structure | Identical file/folder structure, system services differ by location | Identical file/folder structure, system services differ by location |
| Ease of Use | Difficult/Expert level | Moderate/User-friendly |
| Manageability | Difficult/Expert level | Moderate/User-friendly |
| Default Applications | Updates as required | Regularly updated |
| Hosting Market Share | 497,233 sites – 17.5% of Linux users | 772,127 sites – 38.2% of Linux users |

Bottom Line on Choosing a Linux Distribution for Your Server

Both CentOS and Ubuntu are free to use. Your decision should reflect the needs of your web server and usage.

If you’re new to server administration, you might lean towards Ubuntu. If you’re a seasoned pro, CentOS might be more appealing. If you like implementing new software and technology as it’s released, Ubuntu might hold the edge for you. If you hate dealing with updates breaking your server, CentOS might be a better fit. Either way, you shouldn’t worry about one being better than the other.

Both are approximately equal in security, stability, and functionality. Let us help you choose the system that will serve your business best.

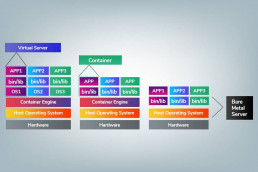

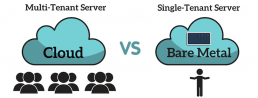

Shared Hosting vs Dedicated Hosting: Make an Informed Choice

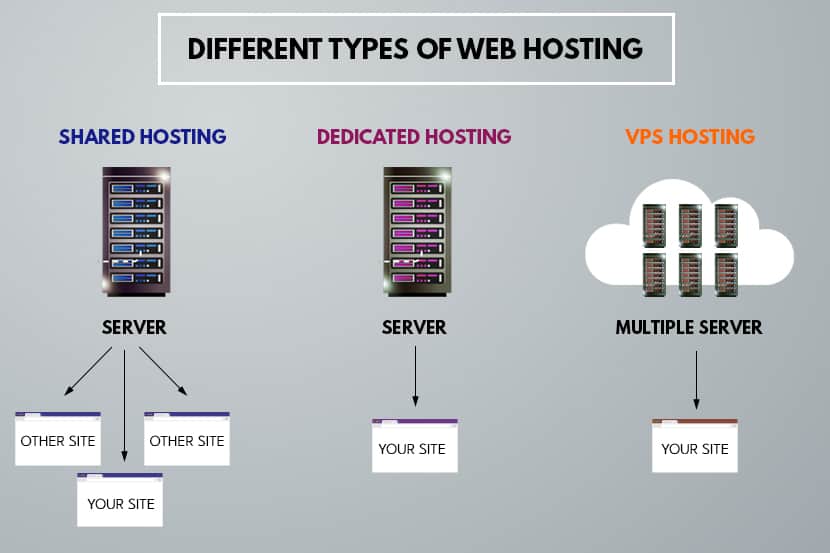

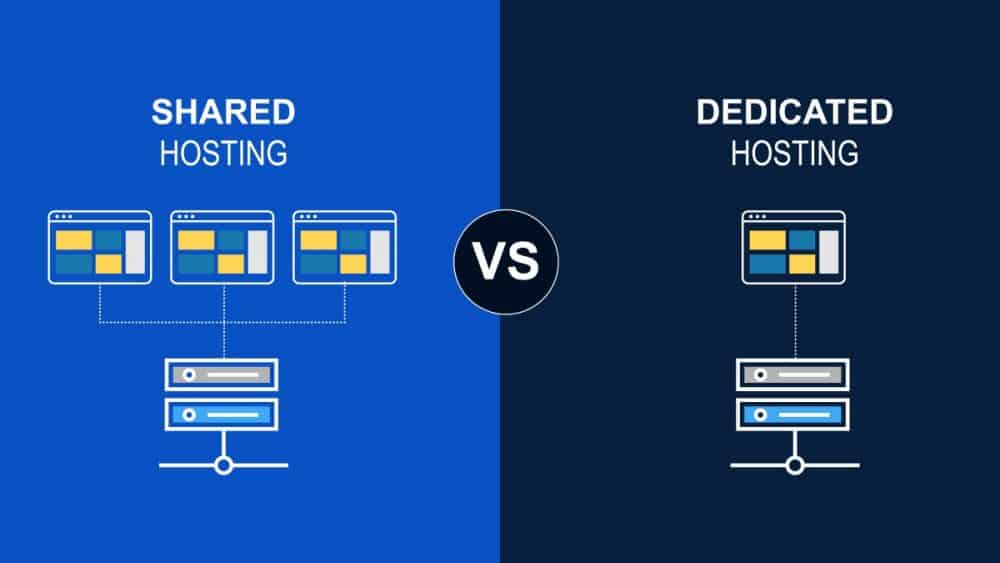

Every provider claims to put unlimited scalability at your disposal, but is that truly the case? In today’s hosting market, it is difficult to tell the difference between shared and dedicated hosting. Mid-sized businesses in particular face tough decisions. Most require dedicated resources but often lack the expertise to manage them, so both shared and dedicated hosting seem like viable options.

This article considers both options and explains the critical differences between dedicated and shared web hosting.

What is Shared Hosting?

As the name implies, shared hosting means you are sharing a physical server with other tenants. That includes all physical resources, such as hard drive space, CPU power, memory, and bandwidth.

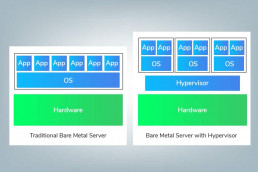

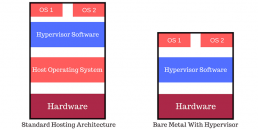

A hypervisor layer segments the server, and each tenant has access to their own isolated virtual environment. You have limited customization options, and the provider manages the environment. It is a more affordable option compared to dedicated hosting.

Below are some of the key characteristics of shared hosting.

Limited Hosting Resources

No matter what providers say, shared hosting always provides limited resources. To be more precise, scaling up is always a problem with shared hosting. Every small and medium-sized business wants to grow, and its IT resources should facilitate, or at least keep pace with, that growth. Whether that is possible with limited shared plans depends on your type of business and individual use case. Generally, shared hosting can only be scaled up to provide more bandwidth and storage.

Note: Scalability is the room to expand your resources, such as bandwidth, drive space, processing power, etc. It is also the ability to install custom software. Scalability provides room to accommodate different use cases.

Latency

Shared hosting involves multiple virtualized environments, so it is more prone to high latency. A hypervisor layer sits between your environment and the underlying bare metal server, which means your software does not run directly on the hardware. As a result, you can expect higher latency. Performance bottlenecks, which are more common in shared environments, may also increase latency.

Note: Computer latency is the delay between a command being run and the desired output. In ordinary everyday operations, computer latency is more popularly called “lag.”
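To compare latency between a shared plan and a dedicated server, you can time the same operation in both environments. The sketch below is a generic Python measurement, not a benchmarking tool from any provider. The workload is a placeholder you would replace with a disk read, database query, or HTTP request, and it reports the median so that a single slow run (for example, during a neighbor’s burst of activity) does not dominate the result.

```python
import statistics
import time
from typing import Callable


def measure_latency_ms(operation: Callable[[], object], runs: int = 20) -> float:
    """Return the median wall-clock latency of `operation`, in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append((time.perf_counter() - start) * 1000)
    return statistics.median(samples)


if __name__ == "__main__":
    # Placeholder workload; swap in the operation you actually care about.
    print(f"{measure_latency_ms(lambda: sum(range(100_000))):.2f} ms")
```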

Limited Customization Options

As noted above, the provider manages shared environments, so customization options are minimal. Once you choose the initial setup, it is challenging to accommodate changing business IT requirements. You also cannot always be sure which hardware components sit beneath all the virtualization layers.

Server Optimization

If your online app or website is running slow, you have fewer options to optimize the environment. Shared plans do not provide enough access to implement server-level speed and optimization options. This is another factor that depends on your use case. Most small businesses don’t mind not having to work on server optimization.

Quick Deployment and Migration

Virtualized environments are great for rapid deployments. Getting a virtual environment up and running within an hour is standard practice, which makes virtual environments ideal for testing and developing online applications.

Additionally, migrating data is often easier when working with virtual machines.

Shared Hosting is Cheaper

It may come with several limitations, but shared hosting is the most affordable option. Having multiple tenants on a single physical server dilutes the price for individual users. Furthermore, you do not need to have your own IT staff to manage the environment. Hence, shared hosting is an excellent choice for businesses that do not have the resources and expertise to manage their own dedicated servers.

No Unique IP Address

Every server has its own IP address, so with shared hosting you might end up sharing an IP address with other tenants. This arrangement can cause problems: if another tenant engages in prohibited activity, blocklist operators might blacklist the shared IP address, affecting you as well. For example, if several tenants use a shared IP address for mass email delivery, that alone can get the address flagged.

Note: Some custom environment setups might require a unique IP address.
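Blocklists that flag shared IP addresses are commonly published as DNS-based blocklists (DNSBLs). You query one by reversing the address’s octets and appending the list’s zone, then checking whether the name resolves. The sketch below uses a hypothetical zone name. Real lists, such as Spamhaus, publish their own zones and usage policies, and some return special codes for rejected queries, so read the list’s documentation before relying on the result.

```python
import ipaddress
import socket


def dnsbl_query_name(ip: str, zone: str) -> str:
    """Build a DNSBL lookup name: the IPv4 octets reversed, followed by the list's zone."""
    octets = str(ipaddress.IPv4Address(ip)).split(".")  # ValueError on malformed input
    return ".".join(reversed(octets)) + "." + zone


def is_listed(ip: str, zone: str) -> bool:
    """True if the list returns an A record for the address.

    Any resolution failure is treated as "not listed", which also hides DNS
    outages, so treat a False result as a hint rather than proof.
    """
    try:
        socket.gethostbyname(dnsbl_query_name(ip, zone))
        return True
    except socket.gaierror:
        return False


# Example with a documentation address and a hypothetical zone:
# is_listed("192.0.2.10", "dnsbl.example.org")
```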

Less Responsibility

You may get less access and customization options with shared hosting, but you also have less responsibility. Namely, the provider is responsible for maintenance and uptime. The IT provider should provide full 24/7 support. The tenant needs only limited technical knowledge.

What is Dedicated Hosting?

With dedicated hosting, a single-tenant organization has exclusive access rights to a dedicated physical server. The fact that dedicated solutions are not shared is what drives their superiority. Customization options are plentiful, and all server resources are always at your disposal.

Organizations can set up dedicated servers on-premises, colocate them in data centers, or rent them.

- On-premises: Hosting on-site is the most expensive option. Organizations that opt to host a server on-site must have highly trained IT staff. You need to pick the configuration, procure hardware, set up the server and configure a high-bandwidth network connection. Upfront costs are high, and network connectivity is often limited.

- Colocation: Colocation is a great option if you need excellent network connectivity, but want to own the equipment. You rent server racks, cooling, power, physical security, and network connectivity. You own the server and access it via SSH. For this option, you need knowledgeable IT staff to manage the server.

- Rent: This is the most affordable dedicated server hosting type. You pick the configuration, but the service provider deploys it. You rent the equipment rather than own it. Upfront costs are minimal, and the provider deals with hardware malfunctions. Organizations that rent do not necessarily need highly trained IT staff.

With dedicated hosting, you know precisely what services you are getting. You pick the hardware and set up the software environment according to your requirements. Below are some of the main characteristics of dedicated hosting.

Stability and Performance

You are not sharing the hardware, so there is no neighbor whose rogue script may affect the stability of your hosting environment. Exclusive control is the deciding factor for many organizations. Web apps need stable and often custom environments, making dedicated hosting the perfect choice.

When it comes to performance, you’ll always get what you pay for. All resources are at your disposal at all times. Whether you opt for a state-of-the-art machine or an affordable low-spec server is up to your budget and use case.

Custom Hosting Environments

From hardware to software, dedicated hosting environments are fully customizable. In terms of hardware, organizations can select the components they see fit. When it comes to software, you can configure any environment necessary for your use case. You can even install a hypervisor and create your own cloud environment. In this case, the sky is the limit.

However, even minor customization requires IT expertise. Whether that is something your organization has in-house is an essential factor when deciding on a platform.

Security & Scalability

Dedicated hosting often comes with precisely configured DDoS protection, IP address blocking, and other server-level security features. RAID configurations also become available, adding another layer of redundancy: if one drive fails, its data can be rebuilt from the remaining drives in the array. Additionally, dedicated hosting is free of other tenants whose misuse may create gaps in security.
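To illustrate how a parity-based RAID level such as RAID 5 can rebuild a failed drive, here is a minimal Python sketch of XOR parity. It is a simplification under stated assumptions: real RAID controllers stripe data in larger chunks and rotate parity across drives, and the drive contents below are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def compute_parity(data_blocks: list[bytes]) -> bytes:
    """Parity block stored alongside the data blocks (RAID 5-style, simplified)."""
    return xor_blocks(data_blocks)


def rebuild_missing(surviving_blocks: list[bytes], parity: bytes) -> bytes:
    """Recover a single lost data block: XOR the parity with every surviving block."""
    return xor_blocks(surviving_blocks + [parity])


# Hypothetical stripe spread across three data drives
drives = [b"WEB1", b"DB01", b"LOGS"]
parity = compute_parity(drives)

# Simulate losing the second drive and rebuilding its contents
lost = drives[1]
rebuilt = rebuild_missing([drives[0], drives[2]], parity)
print(rebuilt == lost)  # True
```

Because XOR is its own inverse, any one missing block can be recovered, but losing two drives at once in this scheme cannot be repaired, which is why RAID complements backups rather than replacing them.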

The scalability of a dedicated server is one of its main advantages. If you carefully select your hosting configuration, there is ample room for growth, which helps prevent downtime caused by server constraints. That is essential if you are running a software-as-a-service (SaaS) application.

Slower Deployment

Deploying a dedicated server is relatively complex. The provider needs to procure parts, build the configuration, and install it in the data center. Common configurations may be ready sooner, but custom hardware and software configurations can take several days to deploy. Nonetheless, organizations that need high-performing hosting and plan ahead will still benefit from dedicated resources. Careful planning for the needs of a growing business is crucial.

Dedicated Hosting Costs

Dedicated hosting may cost up to 15 times more than simple shared solutions. Given the advanced options it provides, the price is often justified: for a business that depends on being online, the cost of downtime can far exceed the extra money spent to avoid it.
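One way to test that trade-off for your own business is a quick break-even calculation. The sketch below assumes hypothetical figures (a $20 shared plan, a dedicated plan at 15 times that price, and $1,000 of lost revenue per hour of outage); substitute your own numbers.

```python
def break_even_downtime_hours(extra_monthly_cost: float, downtime_cost_per_hour: float) -> float:
    """Hours of downtime per month that the pricier plan must prevent to pay for itself."""
    if downtime_cost_per_hour <= 0:
        raise ValueError("downtime_cost_per_hour must be positive")
    return extra_monthly_cost / downtime_cost_per_hour


# Hypothetical prices: a shared plan vs. a dedicated plan costing 15x as much
shared_monthly = 20.0
dedicated_monthly = shared_monthly * 15    # 300.0
extra = dedicated_monthly - shared_monthly  # 280.0

# Hypothetical revenue lost per hour of outage
hours = break_even_downtime_hours(extra, downtime_cost_per_hour=1_000.0)
print(f"Break-even: {hours:.2f} hours of avoided downtime per month")  # 0.28
```

Under these assumptions, the dedicated plan pays for itself if it prevents less than 20 minutes of downtime a month.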

What is a Virtual Private Server (VPS)?

We will briefly cover the ‘in-between’ option. A virtual private server (VPS) is a balance between shared and dedicated hosting. Providers offer VPS instances as private cloud resources, but in practice the underlying hardware is still shared, with firm boundaries between tenants.

In this setup, a physical server is divided among only a handful of tenants. Each instance gets a strictly allocated portion of resources and acts as an independent server. As a result, you have access to more resources than with shared hosting. Scalability is good, and performance, security, and stability are superior to shared hosting. However, raw computing power does not reach the level of dedicated hosting.

Additionally, a VPS offers root access, which gives you more customization options. In turn, you need dedicated IT staff or managed services.

The total cost is lower than any equivalent dedicated option. That is because the cost of all resources and maintenance is ‘shared’ among several tenants.

Key Differences between Shared Hosting, VPS and Dedicated Hosting

| | Shared Hosting | Virtual Private Server | Dedicated Hosting |
|---|---|---|---|
| Suitable for | Websites | Applications, complex and highly visited websites | SaaS, large-scale applications |
| Costs | Low | Mid to high | Very high |
| Managed by | Hosting provider | End-user staff or hosting provider | In most cases by end-user staff |
| Security | Low to medium | High | Very high |
| Performance | Low | Medium to high | Very high |
| Bandwidth | Low | Medium to high | Very high |
| Scalability | Limited | Medium to high | Very high |
| Note | Good choice for rapid deployments or if you are dealing with a limited budget. | More bandwidth and better performance than shared hosting, but for a higher price. Flexibility of dedicated hosting for a lower price. | Complete control and ultimate performance…for the ultimate price. The only choice when performance and security are key to an organization’s success. |

Making an Informed Decision – Use Cases

Planning is key. Before sealing the deal, any deal, think about your long-term goals. Where do you see your organization in 3 to 5 years? What will be your internal and external IT requirements?

The right answers will differ from one business to another, but the common use cases below are a good starting point.

Small e-Commerce Shop or Website

If you are running a small e-commerce shop or website with traffic below six figures, opt for shared hosting. Your customizations are unlikely to require root access, and you do not need the computing power of dedicated hosting. For the traffic you receive and the resources you need, there is no reason to splurge on a dedicated option.

The main reason to consider dedicated hosting or a VPS is if you expect a sharp rise in traffic in the foreseeable future.

Hosting a Software-as-a-Service

Your business provides subscription software to a wide range of users. To keep your business growing, your service must be online at all times with as little downtime as possible. Resources need to be scaled up or down depending on the number of users.
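Scaling resources up or down with the number of users comes down to simple capacity arithmetic. The sketch below is a hedged example that assumes a hypothetical capacity of 2,000 concurrent users per server and a 25% headroom target; real sizing should come from load testing your own application.

```python
import math


def servers_needed(active_users: int, users_per_server: int, headroom: float = 0.25) -> int:
    """Servers required for a user count, keeping spare capacity for spikes.

    headroom=0.25 means each server is planned to run at most 75% full.
    """
    if users_per_server <= 0 or not 0 <= headroom < 1:
        raise ValueError("invalid capacity parameters")
    usable_per_server = users_per_server * (1 - headroom)
    # Always keep at least one server running, even with no active users
    return max(1, math.ceil(active_users / usable_per_server))


# Hypothetical load levels for a growing subscriber base
for users in (1_500, 6_000, 20_000):
    print(users, "users ->", servers_needed(users, users_per_server=2_000), "servers")
```

The headroom parameter keeps spare capacity on every server, so a sudden spike does not saturate the whole fleet before new resources come online.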

The platform of your choice needs to be customizable and able to adapt to whatever your next ten or more releases require. As a SaaS provider, you will also be handling user data, so choose a secure option, such as a PCI DSS v. 2.0 validated service provider.

Most shared hosting offerings will not meet these requirements. Dedicated hosting is the best option for this use case.

Hosting Health Data

Your business handles highly sensitive information such as Protected Health Information (PHI). Collecting, storing, and transmitting medical data must be HIPAA compliant, so security is a major requirement.

You have two options: dedicated hosting or highly secure cloud hosting. Some providers specialize in HIPAA-compliant hosting, so there is an entire slew of options.

Video Streaming and Gaming Service

Bandwidth and performance are very important for your business. You expect constant traffic of up to 10 Gbps. Even slight lag can be the difference between a satisfied customer and one who avoids your service. Slow response times, lag, and poor streaming will hurt your reputation and sales. In such a competitive arena, you need a capable dedicated hosting solution to power your service.
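A 10 Gbps stream translates into a very large monthly transfer volume, which matters when comparing bandwidth allowances between plans. The sketch below assumes the rate is sustained around the clock for a 30-day month, a worst-case simplification, and reports decimal terabytes.

```python
def monthly_transfer_tb(avg_gbps: float, days: int = 30) -> float:
    """Data moved in a period at a sustained rate, in decimal terabytes (10^12 bytes)."""
    bits_per_second = avg_gbps * 1e9
    seconds = days * 24 * 60 * 60
    total_bytes = bits_per_second * seconds / 8  # 8 bits per byte
    return total_bytes / 1e12


print(f"{monthly_transfer_tb(10):,.0f} TB")  # 3,240 TB
```

Real traffic fluctuates, so providers often bill on a percentile of measured throughput rather than total volume; check how a given plan measures usage.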

Providers offer dedicated streaming media servers. Those are highly scalable, secure and reliable platforms for media hosting and streaming.

Large e-Commerce Shop

Downtime is the last thing an online merchant needs. Downtime means lost profit, so stability, an uptime guarantee, site optimization, and security are very important for your business. Load times must be lightning quick, and custom chat support and dynamic content are often requirements.
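Uptime guarantees are easier to compare when converted into minutes of permitted downtime. A minimal sketch, assuming a 30-day measurement period (each provider defines its own window in its service-level agreement):

```python
def allowed_downtime_minutes(uptime_percent: float, days: float = 30) -> float:
    """Maximum downtime per period permitted by an uptime guarantee."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)


# Compare common uptime guarantees over a 30-day period
for sla in (99.0, 99.9, 99.99, 100.0):
    print(f"{sla}% uptime -> {allowed_downtime_minutes(sla):.1f} min per 30 days")
```

Each extra "nine" cuts the permitted downtime tenfold, which is why a seemingly small difference in the guarantee matters for a busy online shop.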

Dedicated hosting will ensure you always have a stable and highly customizable platform for your online business. Shared hosting is not an option. If you want to opt for a cloud solution, choose a VPS or a Managed Private Cloud.

Hosting Service

As a hosting provider, the more resources you can get the better. You have clients of your own who want you to host their website, so shared hosting is out of the picture. You need dedicated hosting with root-level customization options or a VPS. You need easy scalability, system stability and confidence in the underlying infrastructure.

Pros and Cons of Shared vs Dedicated Hosting

| | Pros | Cons |
|---|---|---|
| Shared Hosting | | |
| Virtual Private Server | | |
| Dedicated Hosting | | |

Making the Choice: Shared Server vs Dedicated Server

The use cases above are just a few examples of businesses that must decide between shared and dedicated hosting. If, after examining the pros and cons listed above, you are still unsure what is best for your business, we recommend working with a solutions provider to clarify your needs. Contact phoenixNAP today to get more information.

Recent Posts

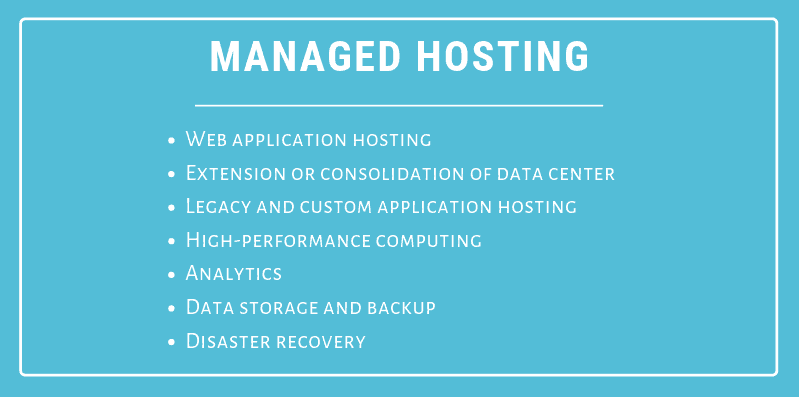

What is Managed Hosting? Top Benefits For Every Business

The cost to buy and maintain server hardware for securely storing corporate data can be high. Find out what managed hosting is and how it can work for your business.

Maintaining servers is not only expensive but also time- and space-consuming. Web hosting solutions let costs scale as your business grows. As the underlying infrastructure that supports IT expands, you’ll need to plan for that and find a solution that caters to increasing demands.

How? Read on, and discover how your organization can benefit from working with a managed services provider.

Managed Server Hosting Defined

Managed IT hosting is a service model where customers rent managed hardware from an MSP, or ‘managed service provider.’ This service is also called managed dedicated hosting or single-tenant hosting. The MSP leases servers, storage, and a dedicated network environment to just one client. It is an option for organizations that want to migrate their infrastructure to the cloud.

Nothing is shared with other clients, including networking and local storage. Clients that opt for managed server hosting receive dedicated monitoring services and operational management, which means the MSP handles all administration, management, and support of the infrastructure. Everything is located in a data center that the provider owns and runs, rather than on the client's premises. This is especially important for a company that has to guarantee information security to its clients.

The main advantage of managed services is that businesses no longer have to worry about server maintenance. As technology continues to develop, companies are finding that outsourcing day-to-day infrastructure and hardware vendor management gives them better value for money, since they do not have to manage it in-house.

The MSP maintains the underlying infrastructure and guarantees support for it. It also provides a convenient web interface that lets clients access their information and data without fear of data loss or compromised security.

Why Work With a Managed Hosting Provider

Any business that wants to store its data securely can benefit from managed hosting. Managed services are a good way for companies to cut costs and raise efficiency.

Network interruptions and server malfunctions cost companies real-time productivity. Whenever hardware or performance issues occur, you risk downtime. As you lose time, you inevitably lose money, especially if you do part of your business online.

A survey by CA Technologies revealed how much impact downtime can have on annual revenues. One key finding was that North American businesses collectively lose $26.5 billion each year to IT downtime and recovery alone.

Researchers explained that most of the financial damage could have been avoided with better recovery strategies and data protection.

What are the Benefits of a Managed Host?

Backup and Disaster Recovery

The number one benefit of hiring an MSP is getting uninterrupted service. They work while you rest. Any problems that may arise are handled on the backend, far away from you and your customer base and rarely become customer-facing issues. Redundant servers, network protection, automated backup solutions, and other server configurations all work together to remove the stress from running your business.

Ability To Scale

Managed hosting also lets you scale and plan more effectively. You spend less money for more expertise: instead of employing a team of technicians, you ‘rent’ experienced, skilled experts from the data center, who are assigned according to your requirements. Additionally, you can predict yearly hardware maintenance costs based on the configuration you choose.

Increased Security

Managed web hosting services also protect you against cyber attacks by backing up your service states, encrypting your information, and quarantining your data flow. Today’s hackers use automation, AI, computer threading, and many other technologies. Countering this in-house could cost tens of thousands of dollars. A managed hosting service lets you pay a fraction of that for considerably more protection.

Redundancy and security increase as you move up service levels. At the highest levels, a managed hosting provider's security is extremely robust.

Lower Operating Costs

One of the biggest benefits of moving to managed hosting is simply that you will be able to significantly reduce the costs of maintaining hardware in-house. Not only will you get to use the infrastructure of the MSP, but also access the expertise of their engineers.

They handle server configuration, storage, and networking requirements. They maintain complex tools, the operating system, and the applications that run on top of the infrastructure. They provide technical support, patching, hardware replacement, security, disaster recovery, and firewalls, all for a fee that greatly undercuts the cost of doing it alone. The MSP provisions everything, allowing you to allocate budget to other areas of your business.

Hosted vs Managed Services

The cost difference between owning hardware and software assets and leasing or licensing them through hosting services is quantifiable.

Each business must do its own assessment of what will work best. Managed service providers encourage their clients to weigh the pros and cons, and they help create personalized plans that suit specific business needs. Such a plan reflects the risks, demands, and financial considerations an enterprise needs to weigh before migrating to the cloud.

There are typically three conventional managed plans to choose from:

- The Basic package

- The Advanced package

- The Custom package

The basic package provides server and network management capabilities, assistance and support when needed, and periodic performance statistics.

The advanced package offers fully managed servers, proactive troubleshooting, availability monitoring, and faster, more meaningful responses.

The custom package is recommended for businesses that need tailored solutions. It includes all advanced features plus additional custom work time.