Dedicated Server Benefits: 5 Advantages for Your Business

You understand the value of your company’s online presence.

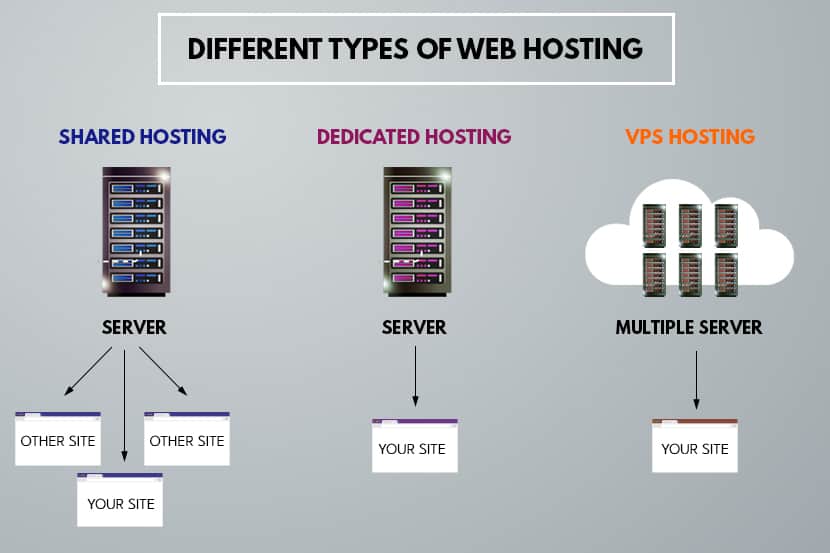

You have your website, but how is it performing? Many business owners do not realize that they share servers with hundreds or even thousands of other websites.

Is it time to take your business to the next level and examine the benefits of using dedicated servers?

You may be looking to expand. Your backend database may be straining under the pressure of all those visitors. To stay ahead of competitors, every effort counts.

Shared server hosting limits how far your growing business can go. In short, you need a dedicated hosting provider. Whether it’s with shared or dedicated hosting, you get what you pay for.

Let’s address the question that is top of your (or your CFO’s) mind.

How much will a dedicated server cost the business?

The answer to that question depends on the following:

- the computing power you need

- the amount of bandwidth you will require

- the quantity of secure data storage and backup

A dedicated server is more expensive than a shared web hosting arrangement, but the benefits are worth the increased cost.

Why? A dedicated server supplies more of what you need. It is in a completely different league. Its power helps you level the playing field. You can be more competitive in the growing world of eCommerce.

Five Dedicated Server Benefits

A dedicated server combines power and scalability. With a dedicated server, your business realizes a compound return on its monthly investment in the following ways:

1. Exclusive use of dedicated resources

When you have your own dedicated server, you get the entire web server for your exclusive use. This is a significant advantage when comparing shared hosting vs. dedicated hosting.

The server’s disk space, RAM, bandwidth, etc., now belong to you.

- You have exclusive use of all the CPU, RAM, and bandwidth. At peak business times, you continue to get peak performance.

- You have root access to the server. You can add your own software and configure settings and access the server logs. Root access is the key advantage of dedicated servers. Again, it goes back to exclusivity.

So, within the limits of propriety, the way you decide to use your dedicated hosting plan is your business.

You can run your applications or implement special server security measures. You can even use a different operating system from your provider. In short, you can drive your website the way you drive your business—in a flexible, scalable, and responsive way.

2. Flexibility managing your growing business

A dedicated server can accommodate your growing business needs. With a dedicated server, you can decide on your own server configuration. As your business grows, you can add more or modify existing services and applications. You remain more flexible when new opportunities arise or unexpected markets materialize.

This is scalability you can tailor to your needs. If you need more processing, storage, or backup, the dedicated server is your platform.

Also, today’s consumers have higher expectations. They want the convenience of quick access to your products. A dedicated server serves your customers with fast page loading and better user experience. If you serve them, they will return.

3. Improved reliability and performance

Reliability is one of the benefits of exclusivity. A dedicated server provides peak performance and reliability.

That reliability also means that server crashes are far less likely. Your website has extra resources during periods of high-volume traffic. If your front end includes videos and image displays, you have the bandwidth you need. Speed and performance are second only to good website design. The power of a dedicated server contributes to the optimum customer experience.

Managed dedicated web hosting is a powerful solution for businesses. It comes with a higher cost than shared hosting. But you get high power, storage, and bandwidth to host your business.

A dedicated server provides a fast foothold on the web without upfront capital expenses. You have exclusive use of the server, and it is yours alone. Don’t overlook technical assistance advantages. You or your IT teams oversee your website.

Despite that vigilance, sometimes you need outside help. Many dedicated hosting solutions come with equally watchful technicians. With managed hosting, someone at the server end is available for troubleshooting around the clock.

4. Security through data separation

Dedicated servers permit access only to your company.

The server infrastructure includes firewalls and security monitoring.

This means greater security against:

- Malware and hacks: The host’s network monitoring, secure firewalls, and strict access control free you to concentrate on your core business.

- Denial-of-service attacks: Data separation isolates your dedicated server from the hosting company’s services and data belonging to other customers. That separation helps ensure quick recovery from backend exploits.

You can also implement your own higher levels of security. You can install your applications to run on the server. Those applications can include new layers of security and access control.

This adds a level of protection to your customer and proprietary business data. You safeguard your customer and business data, again, through separation.

5. No capital or upfront expense

Upfront capital expense outlays are no longer the best way to finance technology. Technology advances outpace their supporting platforms in a game of expensive leapfrog. Growing businesses need to reserve capital for other areas.

Hosting providers provide reasonable fees while providing top of the line equipment.

A dedicated hosting provider can serve many clients. The cost of that service is a fraction of what you would pay to do it in-house. Plus, you get the bonuses of physical security and technical support.

What to Consider When Evaluating Dedicated Server Providers

Overall value

Everyone has a budget, and it’s essential to choose a provider that fits within that budget. However, price should not be the first or only consideration. Simply choosing the lowest-priced option can often cost you more in the long run. Take a close look at what the provider offers in the other six categories we’ll cover in this guide, and then ask yourself if the overall value aligns with the price you’ll pay to run the reliable business you strive for.

Reputation

What are other people and businesses saying about a provider? Is it good, bad, or is there nothing at all? A great way to know if a provider is reliable and worthy of your business is to learn from others’ experiences. Websites like Webhostingtalk.com provide a community where others are talking about hosting and hosting related topics. One way to help decide on a provider is to ask the community for feedback. Of course, you can do some due diligence ahead of time by simply searching the provider’s name in the search function.

Reliability

Businesses today demand to be online and available to their customers 24 hours a day, seven days a week, and 365 days a year. Anything less means you’re probably losing money. Sure, choosing the lowest-priced hosting probably seems like a good idea at first, but you have to ask yourself whether the few dollars you save per month are worth the headache and lost revenue if your website is offline. The answer is generally no.

Support

You only need it when you need it. Even if you’re administering your own server, having reliable, 24×7 support available when you need it is critical. The last thing you want is to have a hard time reaching someone when you need them most. Look for service providers that offer multiple support channels such as live chat, email, and phone.

Service level agreements

SLAs are the promises your provider is making to you in exchange for your payment(s). With the competitiveness of the hosting market today, you shouldn’t choose a provider that doesn’t offer important Service Level Agreements covering uptime, support response times, hardware replacement, and deployment times.
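To make an uptime commitment concrete, it can help to translate the percentage into the downtime it actually permits. The following is a minimal Python sketch of that arithmetic. The function name and the sample percentages are assumptions chosen for illustration and are not figures from any particular provider.

```python
def allowed_downtime_minutes(uptime_percent: float, days: int = 30) -> float:
    """Return the downtime (in minutes) an uptime SLA permits over a period."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    total_minutes = days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)


if __name__ == "__main__":
    # Sample SLA levels chosen for illustration only.
    for sla in (99.0, 99.9, 99.99):
        minutes = allowed_downtime_minutes(sla)
        print(f"{sla}% uptime allows about {minutes:.1f} minutes of downtime per 30 days")
```

Comparing the result for two candidate providers shows how much extra downtime a seemingly small difference in the uptime percentage allows.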

Flexibility

Businesses go through several phases of their lifecycle. It’s important to find a provider that can meet each phase’s needs and allow you to quickly scale up or down based on your needs at any given time. Whether you’re getting ready to launch and need to keep your costs low, or are a mature business looking to expand into new areas, a flexible provider that can meet these needs will allow you to focus on your business and not worry about finding someone to solve your infrastructure headaches.

Hardware quality

Business applications demand 24×7 environments and therefore need to run on hardware that can support those demands. It’s important to make sure that the provider you select offers server-grade hardware, not desktop-grade hardware, which is built for only 40-hour work weeks. The last thing you want is for components to start failing, causing your services to be unavailable to your customers.

Advantages of Dedicated Servers: Ready to Make the Move?

Dedicated hosting provides flexibility, scalability, and better management of your own and your customers’ growth. It also offers reliability and peak performance, which ensure the best customer experience.

Include the option of on-call, around-the-clock server maintenance, and you have found the hosting solution your business is looking for.


Comprehensive Guide to Intelligent Platform Management Interface (IPMI)

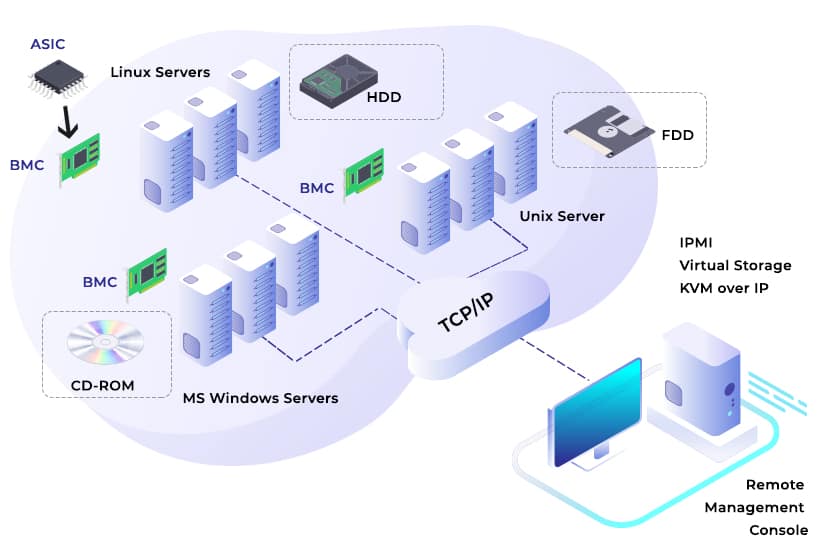

Intelligent Platform Management Interface (IPMI) is one of the most used acronyms in server management. IPMI became popular due to its acceptance as a standard monitoring interface by hardware vendors and developers.

So what is IPMI?

The short answer is that it is a hardware-based solution used for securing, controlling, and managing servers. The comprehensive answer is what this post provides.

What is IPMI Used For?

IPMI refers to a set of computer interface specifications used for out-of-band management. Out-of-band means managing a system through a dedicated channel that is independent of its operating system and primary network connection, so you do not need physical access to the machine. IPMI supports remote monitoring and does not need permission from the computer’s operating system.

IPMI runs on separate hardware attached to a motherboard or server. This separate hardware is the Baseboard Management Controller (BMC). The BMC acts like an intelligent middleman. BMC manages the interface between platform hardware and system management software. The BMC receives reports from sensors within a system and acts on these reports. With these reports, IPMI ensures the system functions at its optimal capacity.

IPMI collaborates with standard specification sets such as the Intelligent Platform Management Bus (IPMB) and the Intelligent Chassis Management Bus (ICMB). These specifications work hand-in-hand to handle system monitoring tasks.

Alongside these standard specification sets, IPMI monitors vital parameters that define the working status of a server’s hardware. IPMI monitors power supply, fan speed, server health, security details, and the state of operating systems.

You can compare the services IPMI provides to the automobile on-board diagnostic tool your vehicle technician uses. With an on-board diagnostic tool, a vehicle’s computer system can be monitored even with its engine switched off.

Use the IPMItool utility for managing IPMI devices. For instructions and IPMItool commands, refer to our guide on how to install IPMItool on Ubuntu or CentOS.

Features and Components of Intelligent Platform Management Interface

IPMI is a vendor-neutral standard specification for server monitoring. It comes with the following features which help with server monitoring:

- A Baseboard Management Controller – This is the micro-controller component central to the functions of an IPMI.

- Intelligent Chassis Management Bus – An interface protocol that supports communication across chassis.

- Intelligent Platform Management Bus – A communication protocol that facilitates communication between controllers.

- IPMI Memory – The memory is a repository for an IPMI sensor’s data records and system event logs.

- Authentication Features – This supports the process of authenticating users and establishing sessions.

- Communications Interfaces – These interfaces define how IPMI messages are sent. IPMI sends messages via a direct out-of-band local-area network (LAN) or a sideband LAN. It can also communicate through virtual local-area networks (VLANs).

Comparing IPMI Versions 1.5 & 2.0

The three major versions of IPMI are v1.0, first released in 1998, v1.5, and v2.0. Today, both v1.5 and v2.0 are still in use, and they come with different features that define their capabilities.

Starting with v1.5, its features include:

- Alert policies

- Serial messaging and alerting

- LAN messaging and alerting

- Platform event filtering

- Updated sensors and event types not available in v1.0

- Extended BMC messaging in channel mode

The updated version, v2.0, comes with added updates which include:

- Firmware Firewall

- Serial over LAN

- VLAN support

- Encryption support

- Enhanced authentication

- SMBus system interface

Analyzing the Benefits of IPMI

IPMI’s ability to manage many machines in different physical locations is its primary value proposition. The option of monitoring and managing systems independent of a machine’s operating system is one significant benefit other monitoring tools lack. Other important benefits include:

Predictive Monitoring – Unexpected server failures lead to downtime. Downtime stalls an enterprise’s operations and could cost $250,000 per hour. IPMI tracks the status of a server and provides advance warnings about possible system failures. IPMI monitors predefined thresholds and sends alerts when they are exceeded. The actionable intelligence IPMI provides thus helps reduce downtime.

Independent, Intelligent Recovery – When system failures occur, IPMI recovers operations to get them back on track. Unlike other server monitoring tools and software, IPMI is always accessible and facilitates server recoveries. IPMI can help with recovery in situations where the server is off.

Vendor-neutral Universal Support – IPMI does not rely on any proprietary hardware. Most hardware vendors integrate support for IPMI, which eliminates compatibility issues. IPMI delivers its server monitoring capabilities in ecosystems with hardware from different vendors.

Agent-less Management – IPMI does not rely on an agent to manage a server’s operating system. With it, you can adjust settings such as the BIOS without logging in to or seeking permission from the server’s OS.
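The predictive monitoring described above comes down to comparing sensor readings against predefined thresholds and raising an alert when a value falls outside its range. The following Python sketch illustrates only that comparison logic. The sensor names, limits, and sample readings are hypothetical, and a real deployment would obtain readings from the BMC (for example, through IPMItool) rather than from a hard-coded dictionary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    """Acceptable range for one sensor (hypothetical values)."""
    low: float
    high: float


# Hypothetical sensor names and limits, for illustration only.
THRESHOLDS = {
    "cpu_temp_c": Threshold(low=5.0, high=85.0),
    "fan_rpm": Threshold(low=1000.0, high=12000.0),
    "psu_volts": Threshold(low=11.4, high=12.6),
}


def find_alerts(readings: dict[str, float]) -> list[str]:
    """Return one alert message per reading that falls outside its range."""
    alerts = []
    for name, value in readings.items():
        limit = THRESHOLDS.get(name)
        if limit is None:
            continue  # No threshold defined for this sensor.
        if value < limit.low:
            alerts.append(f"{name}={value} is below the minimum {limit.low}")
        elif value > limit.high:
            alerts.append(f"{name}={value} is above the maximum {limit.high}")
    return alerts


if __name__ == "__main__":
    # Sample readings: the CPU is too hot and the fan is too slow.
    sample = {"cpu_temp_c": 91.0, "fan_rpm": 800.0, "psu_volts": 12.1}
    for message in find_alerts(sample):
        print("ALERT:", message)
```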

The Risks and Disadvantages of IPMI

Using IPMI comes with its risks and a few disadvantages. These disadvantages center on security and usability. User experiences have shown the weaknesses include:

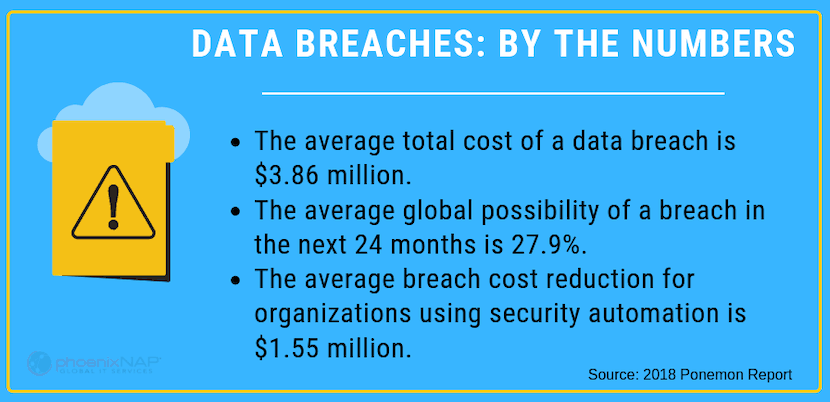

Cybersecurity Challenges – IPMI communication protocols sometimes leave loopholes that can be exploited by cyber-attacks, and successful breaches are expensive as statistics show. The IPMI installation and configuration procedures used can also leave a dedicated server vulnerable and open to exploitation. These security challenges led to the addition of encryption and firmware firewall features in IPMI version 2.0.

Configuration Challenges – Configuring IPMI may be challenging in situations where older network settings are incorrect. In cases like this, clearing the network configuration through the system’s BIOS can solve the problem.

Updating Challenges – Installing update patches may sometimes lead to network failure. Switching ports on the motherboard may also cause malfunctions. In these situations, rebooting the system can resolve the issue that caused the network to fail.

Server Monitoring & Management Made Easy

Intelligent Platform Management brings ease and versatility to the task of server monitoring and management. By 2022, experts expect the IPMI market to hit the $3 billion mark. phoenixNAP bare metal servers come with IPMI, which gives you access to the IPMI of every server you use. Get started by signing up today.


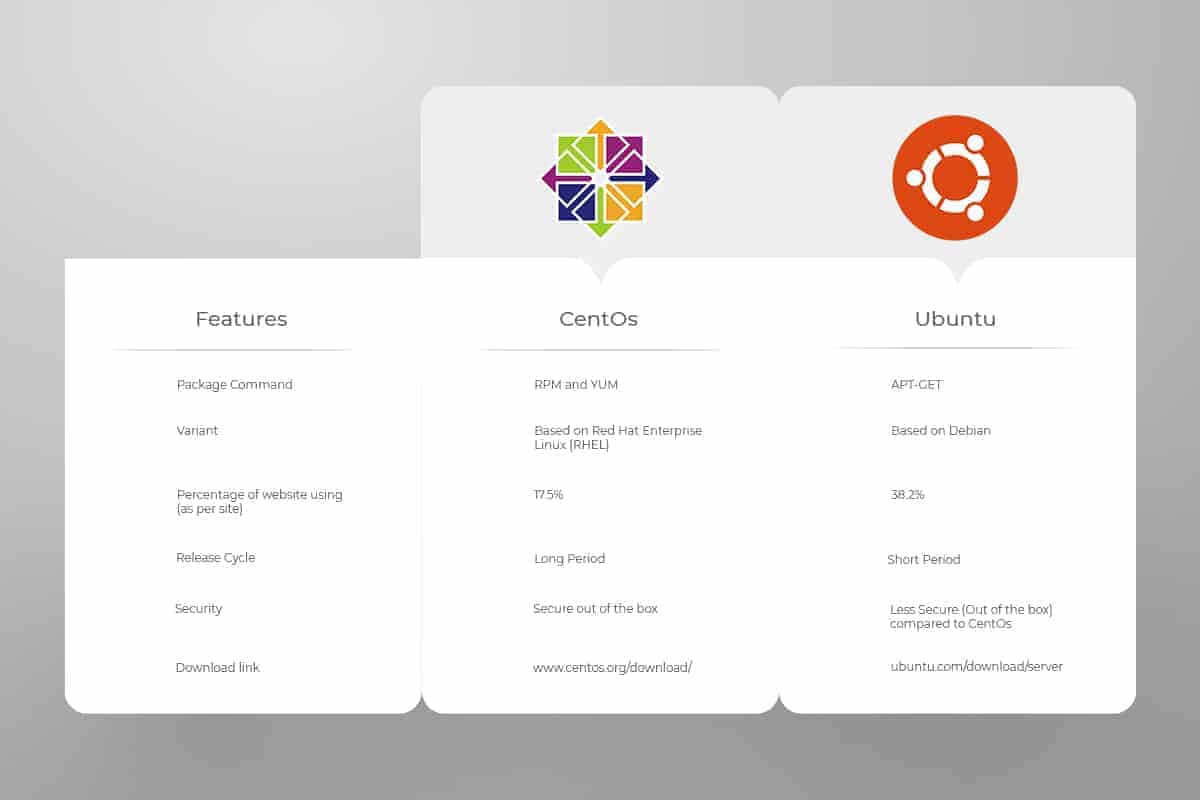

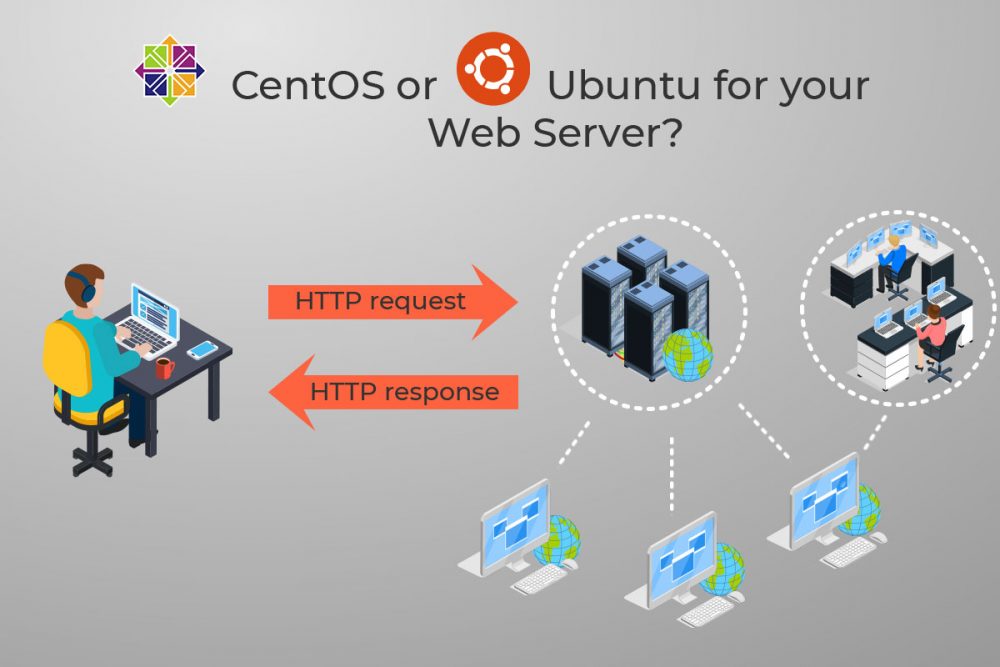

CentOS vs Ubuntu: Choose the Best OS for Your Web Server

Don’t know whether to use CentOS or Ubuntu for your server? Let’s compare both and decide which one you should use on your server/VPS. In highlighting the two principal Linux distributions’ strengths and weaknesses for running a web server, the choice should become clear.

Linux is an open-source operating system currently powering most of the Internet. There are hundreds of different versions of Linux. For web servers, the two most popular versions are Ubuntu and CentOS. Both are open-source and free community-supported operating systems. You’ll be happy to know these distributions have a ton of community support and, therefore, regularly available updates.

Unlike Windows, Linux has an open-source license that encourages users to experiment with the code. This flexibility has created loyal online communities dedicated to building and improving the core Linux operating system.

Quick Overview of Ubuntu and CentOS

Ubuntu

Ubuntu is a Linux distribution based on Debian Linux. The word Ubuntu comes from the Nguni Bantu language, and it generally means “I am what I am because of who we all are.” It represents Ubuntu’s guiding philosophy of helping people come together in the community. Canonical, the Ubuntu developers, sought to make a Linux OS that was easy to use and had excellent community support.

Ubuntu boasts a robust application repository. It is updated frequently and is designed to be intuitive and easy to use. It is also highly customizable, from the graphical interface down to web server packages and internet security.

CentOS

CentOS is a Linux distribution based on Red Hat Enterprise Linux (RHEL). The name CentOS is an acronym for Community Enterprise Operating System. Red Hat Linux has been a stable and reliable distribution since the early days of Linux. It’s been mostly implemented in high-end corporate IT applications. CentOS continues the tradition started by Red Hat, providing an extremely stable and thoroughly-tested operating system.

Like Ubuntu, CentOS is highly customizable and stable. Due to its early dominance, many conventions are built around the CentOS architecture. Cutting-edge corporate security measures implemented in RHEL carry over readily to CentOS.

Comparing the Features of CentOS and Ubuntu Servers

One key feature for CentOS and Ubuntu is that they are both free. You can download a copy for no charge and install it on your own cheap dedicated server.

Each distribution can be downloaded and written to a USB drive, which you can boot into without making permanent changes to your operating system. A bootable drive allows you to take the system for a test run before you install it.

Basic architecture

CentOS is based on the Red Hat Enterprise Linux architecture, while Ubuntu is based on Debian. This is important when looking at software package management systems. Both versions use a package manager to resolve dependencies, perform installations, and track updates.

Ubuntu uses the apt package manager and installs software from .deb packages. CentOS uses the yum package manager and installs .rpm packages. They both work about the same, but .deb packages cannot be installed on CentOS – and vice-versa.

The difference is in the availability of packages for both systems. Some packages are not as readily available on Ubuntu as they are on CentOS. When working with your developers, find out their preference, as they usually tend to stick to just one package type (.deb or .rpm).

Another detail is the structure of individual software packages. When installing Apache, one of the leading web server packages, the service works a little differently in Ubuntu than in CentOS. The Apache service in Ubuntu is labeled apache2, while the same service in CentOS is labeled httpd.
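The differences described in this section can be captured in a small lookup table. The Python sketch below only builds the commands as lists and does not run them. The helper names are hypothetical, and the restart command assumes a systemd-based release, which the article itself does not specify.

```python
# Mapping taken from the comparison above; the helper names are hypothetical.
DISTRO_INFO = {
    "ubuntu": {"package_manager": "apt", "package_format": ".deb", "apache": "apache2"},
    "centos": {"package_manager": "yum", "package_format": ".rpm", "apache": "httpd"},
}


def apache_install_command(distro: str) -> list[str]:
    """Build (but do not run) the command that installs Apache on a distro."""
    info = DISTRO_INFO[distro.lower()]
    return ["sudo", info["package_manager"], "install", "-y", info["apache"]]


def apache_restart_command(distro: str) -> list[str]:
    """Build the restart command, assuming a systemd-based release."""
    info = DISTRO_INFO[distro.lower()]
    return ["sudo", "systemctl", "restart", info["apache"]]


if __name__ == "__main__":
    for distro in DISTRO_INFO:
        print(distro, "install:", " ".join(apache_install_command(distro)))
        print(distro, "restart:", " ".join(apache_restart_command(distro)))
```

Keeping the distribution-specific names in one place like this is one way to write automation that works on both systems.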

Software

If you’re strictly going by the number of packages, Ubuntu has a definite edge. The Ubuntu repository lists tens of thousands of individual software packages available for installation. CentOS only lists a few thousand.

The other side of this argument is that many graphical server tools like cPanel are written solely for Red-Hat-based systems. While there are similar tools in Ubuntu, some of the most widely-used tools in the industry are only available in CentOS.

Stability, security, and updates

Ubuntu is updated frequently. A new version is released every six months. Ubuntu offers LTS (Long-Term Support) versions every two years, which are supported for five years. These different releases allow users to choose whether they want the “latest and greatest” or the “tried-and-true.” Because of the frequent updates, Ubuntu often includes newer software into newer releases. That can be fun for playing with new options and technology, but it can also create conflicts with existing software and configurations.

CentOS is updated infrequently, in part because the developer team for CentOS is smaller. It’s also due to the extensive testing on each component before release. CentOS versions are supported for ten years from the date of release and include security and compatibility updates. However, the slow release cycle means a lack of access to third-party software updates. You may need to manually install third-party software or updates if they haven’t made it into the repository. CentOS is reliable and stable. As a core operating system, it is relatively small and lightweight compared to its Windows counterpart. This helps improve speed and reduces the disk space the operating system takes up.

Both CentOS and Ubuntu are stable and secure, with patches released regularly.

Support and troubleshooting

If something goes wrong, you’ll want to have a support path. Ubuntu has paid support options, like many enterprise IT companies. One additional advantage, though, is that there are many expert users in the Ubuntu forums. It’s usually easy to find a solution to common errors or problems.

With a new release coming out every six months, it’s not feasible to offer full support for every version. Regular releases are supported for nine months from the release date. Regular users will probably upgrade to the newest versions as they are released.

Ubuntu also releases LTS or Long-Term Support versions. These are supported for a full five years from the release date. Releases have ongoing patches and updates, so you can keep an LTS release installed (without needing to upgrade) for five years.

Third-party providers often handle CentOS support. The project provides excellent documentation, plus forums and developer blogs that can help you resolve an error. In part, CentOS relies on its community of Red Hat users to know and manage problems.

The CentOS Project is open-source and designed to be freely available. If you need paid support, it’s recommended that you consider paying for Red Hat Enterprise licensing and support. Where CentOS shines is in its dedication to helping its customers. A CentOS operating system is supported for ten years from the date of release.

New operating system releases are published every two years. The long support window can lower the total cost of ownership since you can stretch a single operating system cycle for a full decade. Above, ‘support’ refers both to the ability to get help from developers and the developers’ commitment to patching and updating software.
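The support periods mentioned in this section (nine months for a regular Ubuntu release, five years for an Ubuntu LTS release, and ten years for a CentOS release) can be turned into end-of-support dates with some simple date arithmetic. The Python sketch below is illustrative only: the labels, helper names, and sample date are assumptions, and it measures every period from the release date.

```python
import calendar
from datetime import date

# Support periods in months, as stated in the text above.
SUPPORT_MONTHS = {
    "ubuntu-regular": 9,
    "ubuntu-lts": 5 * 12,
    "centos": 10 * 12,
}


def add_months(start: date, months: int) -> date:
    """Return the date `months` after `start`, clamping to the month's last day."""
    index = start.month - 1 + months
    year = start.year + index // 12
    month = index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def end_of_support(release_type: str, released: date) -> date:
    """Estimate when support ends for a release published on `released`."""
    return add_months(released, SUPPORT_MONTHS[release_type])


if __name__ == "__main__":
    # Sample release date, used only to show the arithmetic.
    released = date(2020, 4, 23)
    for release_type in SUPPORT_MONTHS:
        print(release_type, "->", end_of_support(release_type, released))
```

Running the same calculation for the releases you are considering shows how much longer one installation can stay in service before an upgrade is required.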

Ease of use

Ubuntu has gone to great lengths to make its system user-friendly. An Ubuntu server is more focused on usability. The graphical interface is intuitive and easy to manage, with a handy search function. Running utilities from the command-line is straightforward. Most commands will suggest the proper usage, and the sudo command is easy to use to resolve “Access denied” errors.

Where CentOS has some help and community support, Ubuntu has a solid support knowledge base. This support includes both how-to guides and tutorials, as well as an active community forum.

Ubuntu uses the apt-get package manager, which uses a different syntax from yum, but the functions are about the same. Many of the applications that CentOS servers use, such as cPanel, have similar alternatives available for Ubuntu. Finally, Ubuntu Linux offers a more seamless software installation process. You can still tinker under the hood, but the most commonly-used software and operating system features are included and updated automatically.

Ubuntu’s regular updates can be a liability. They can conflict with your existing software configuration. It’s not always a good thing to use the latest technology. Sometimes it’s better to let someone else work out the bugs before you install an update.

CentOS is typically for more advanced users. One flaw with CentOS is a steep learning curve. There are fewer how-to guides and community forums available if you run into a problem.

There seems to be less hand-holding in CentOS – most guides presume that you know the basics, like sudo or basic command-line features. These are skills you can learn working with other Red Hat professionals or by taking certifications.

With CentOS built around the Red Hat architecture, many old-school Linux users find it more familiar and comfortable. CentOS is also used widely across the Internet at the server level, so using it can improve cross-compatibility. Many CentOS server utilities, such as cPanel, are built to work only in Red Hat Linux.

CentOS or Ubuntu for Development

CentOS developers take longer to test and approve updates. That’s why CentOS releases updates much more slowly than other Linux variants. If you have a strong business need for stability or your environment is not very tolerant of change, this can be more helpful than a faster release schedule.

Due to the lower and slower support for CentOS, some software updates are not applied automatically. A newer version of a software application may be released but may not make it into the official repository. If this happens, it can leave you responsible for manually checking and installing security updates. Less-experienced users might find this process too challenging.

Ubuntu, as an “out-of-the-box” operating system, includes many different features. There are three different versions of Ubuntu:

- Desktop, which is for basic end-users;

- Server, which is for web hosting over the Internet or in the cloud;

- Core, which is for other devices (like cars, smart TVs, etc.).

A basic installation of Ubuntu Server should include most of the applications you need to configure your server to host files over a network. It also adds extra software, such as open-source office productivity software, as well as the latest kernel and operating system features.

Ubuntu’s focus on features and usability relies on the release of new versions every six months. This is very helpful if you prefer to use the latest software available. These updates can also become a liability if you have custom software that doesn’t play nicely with newer updates.

Cloud deployment

Ubuntu offers excellent support for container virtualization. It provides support for cloud deployment and is expanding its influence in the market compared to CentOS. In June 2019, “Canonical announced full enterprise support for Kubernetes 1.15 kubeadm deployments, its Charmed Kubernetes, and MicroK8s; the popular single-node deployment of Kubernetes.”

CentOS is not being left behind and competes by offering three private cloud choices. It also provides a public cloud platform through AWS. CentOS has a high standard of documentation and provides its users with a mature platform so that CentOS users can apply its features further.

Gaming Servers

Ubuntu has a pack custom-designed for gamers called the Ubuntu GamePack. It’s based on Ubuntu. It does not come with games preinstalled. Instead, it comes preinstalled with PlayOnLinux, Wine, Lutris, and the Steam client. It’s like a software intersection where Windows, Linux, console, and Steam games can be played.

It’s a hybrid version of the Ubuntu OS since it also supports Adobe Flash and Oracle Java. It allows for the seamless play of online games. Ubuntu GamePack is optimized for over six thousand Windows and Linux games, which are guaranteed to launch and function in the Ubuntu GamePack. If you’re more familiar with Ubuntu, then choose the desktop version for gaming.

CentOS is not as popular for gaming as Ubuntu. If you’ve used CentOS for your server, then you can try the Fedora-based distribution for gaming. It’s called Fedora Games Spin, and it’s the preferred Linux distribution for gaming servers for CentOS/RedHat/Fedora Linux users.

Most of the best gaming distros are Debian/Ubuntu-based, but if you’re committed to CentOS, you can run Fedora Games Spin in live mode from USB or DVD media without installing it. It comes with an Xfce desktop environment and has over two thousand Linux games. It’s a single platform that allows you to play all Fedora games.

Comparison Table of CentOS and Ubuntu Linux Versions

| Features | CentOS | Ubuntu |
| --- | --- | --- |
| Security | Strong | Good (needs further configuration) |
| Support Considerations | Solid documentation. Active but limited user community. | High-level documentation and large support community |
| Update Cycle | Infrequent | Often |
| System Core | Based on Red Hat | Based on Debian |
| Cloud Interface | CloudStack, OpenStack, OpenNebula | OpenStack |
| Virtualization | Native KVM Support | Xen, KVM |
| Stability | High | Solid |
| Package Management | YUM | aptitude, apt-get |
| Platform Focal Point | Targets server market, choice of larger corporations | Targets desktop users |
| Speed Considerations | Excellent (depending on hardware) | Excellent (depending on hardware) |
| File Structure | Identical file/folder structure, system services differ by location | Identical file/folder structure, system services differ by location |
| Ease of Use | Difficult/Expert Level | Moderate/User-friendly |
| Manageability | Difficult/Expert Level | Moderate/User-friendly |
| Default applications | Updates as required | Regularly updated |
| Hosting Market Share | 497,233 sites – 17.5% of Linux users | 772,127 sites – 38.2% of Linux users |

Bottom Line on Choosing a Linux Distribution for Your Server

Both CentOS and Ubuntu are free to use. Your decision should reflect the needs of your web server and usage.

If you’re new to server administration, you might lean towards Ubuntu. If you’re a seasoned pro, CentOS might be more appealing. If you like implementing new software and technology as it’s released, Ubuntu might hold the edge for you. If you hate dealing with updates breaking your server, CentOS might be a better fit. Either way, you shouldn’t worry about one being better than the other.

Both are approximately equal in security, stability, and functionality. Let us help you choose the system that will serve your business best.

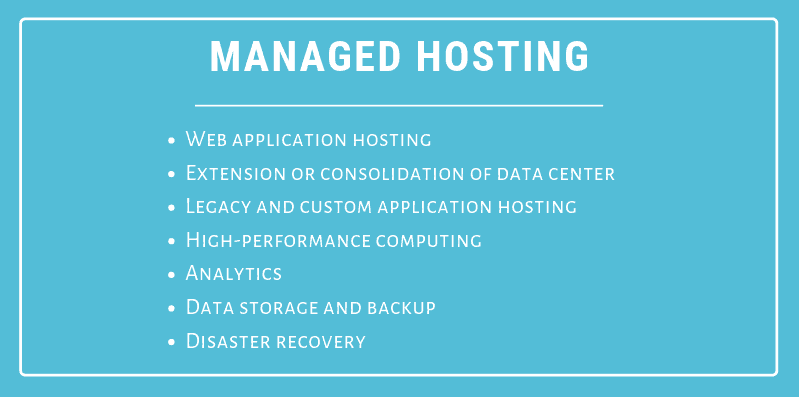

What is Managed Hosting? Top Benefits For Every Business

The cost to buy and maintain server hardware for securely storing corporate data can be high. Find out what managed hosting is and how it can work for your business.

Maintaining servers is not only expensive but also time- and space-intensive. Web hosting solutions exist to scale costs as your business grows. As the underlying infrastructure that supports IT expands, you’ll need to plan for that and find a solution that caters to increasing demands.

How? Read on, and discover how your organization can benefit from working with a managed services provider.

Managed Server Hosting Defined

Managed IT hosting is a service model where customers rent managed hardware from an MSP, or ‘managed service provider.’ This service is also called managed dedicated hosting or single-tenant hosting. The MSP leases servers, storage, and a dedicated network environment to just one client. It is an option for those who want to migrate their infrastructure to the cloud.

There are no shared environments, such as networking or local storage. Clients that opt for managed server hosting receive dedicated monitoring services and operational management, which means the MSP handles all the administration, management, and support of the infrastructure. It’s all located in a data center which is owned and run by the provider, instead of being located with the client. This feature is especially crucial for a company that has to guarantee information security to its clients.

The main advantage of using managed services is that it allows businesses the freedom to not worry about their server maintenance. As technology continues to develop, companies are finding that by outsourcing day-to-day infrastructure and hardware vendor management, they gain value for money since they do not have to manage it in-house.

The MSP guarantees support to the client for the underlying infrastructure and maintains it. Additionally, it provides a convenient web-interface allowing the client to access their information and data, without fear of data loss or jeopardizing security.

Why Work With a Managed Hosting Provider

Any business that wants to store its data securely can benefit from managed hosting. Managed services are a good way to cut costs and raise efficiency for companies that need:

Network interruptions and server malfunctions cost companies in terms of real-time productivity. Whenever hardware or performance issues occur, you may be at risk of downtime. As you lose time, you inevitably lose money, especially if you do a portion of your business online.

A survey by CA Technologies revealed just how much impact downtime can have on annual revenues. One of the key findings reported that each year North American businesses are collectively losing $26.5 billion due to IT downtime and recovery alone.

Researchers explained that most of the financial damage could have been avoided with better recovery strategies and data protection.

What are the Benefits of a Managed Host?

Backup and Disaster Recovery

The number one benefit of hiring an MSP is getting uninterrupted service. They work while you rest. Any problems that may arise are handled on the backend, far away from you and your customer base and rarely become customer-facing issues. Redundant servers, network protection, automated backup solutions, and other server configurations all work together to remove the stress from running your business.

Ability To Scale

Managed hosting also allows you to scale and plan more effectively. You spend less money for more expertise. Instead of employing a team of technicians, you ‘rent’ experienced and skilled experts from the data center, who are assigned according to your requirements. Additionally, you have the benefit of predicting yearly costs for hardware maintenance, according to the configuration chosen.

Increased Security

Managed web hosting services also protect you against cyber attacks by backing up your service states, encrypting your information, and quarantining your data flow. Today’s hackers use automation, AI, computer threading, and many other technologies. To counter this in-house, you would have to spend tens of thousands. The managed hosting service allows you to pay a fraction of this for far more protection.

Redundancy and security increase as you move up service levels. At the highest levels, security on a managed hosting provider is virtually impenetrable.

Lower Operating Costs

One of the biggest benefits of moving to managed hosting is simply that you will be able to significantly reduce the costs of maintaining hardware in-house. Not only will you get to use the infrastructure of the MSP, but also access the expertise of their engineers.

They provide server configuration, storage, and networking requirements. They ensure the maintenance of complex tools, the operating system, and applications that are run on top of the infrastructure. They provide technical support, patching, hardware replacement, security, disaster recovery, and firewalls, all at a fee that greatly undercuts the costs of having to do it alone. The MSP provisions everything, allowing you to allot budget to other areas of your business.

Hosted vs Managed Services

The difference between owning hardware and software assets and leasing or licensing them through a hosting service is quantifiable.

Each business must do its own assessment of what will work best. Managed service providers encourage their clients to weigh both the pros and the cons. They will also help create personalized plans that suit specific business needs. This plan would reflect the risks, demands and financial plans an enterprise needs to consider before migrating to the cloud.

Typically there are three (3) conventional managed plans to choose from:

- The Basic package

- The Advanced package

- The Custom package

The basic package provides server and network management capabilities, assistance and support when needed, and periodical performance statistics.

The advanced package offers fully managed servers, proactive troubleshooting, availability monitoring, and faster, more meaningful responses.

The custom package is recommended as the best fit for business solutions. It includes all advanced features with additional custom work time.

It is essential to note that each plan is implemented differently, tailored to the client individually.

Future of Managed Server Hosting

In 2010, the market size for cloud computing and hosting was $24.63 billion. In 2018, it was $117.96 billion. By 2020, some experts predict it will eclipse the $340 billion mark. The market has been growing exponentially for a decade now. It doesn’t look like it will slow down any time soon.

What has stimulated such a flourishing market over the years, is the ability to scale. When you invest in managed hosting services, you are sharing the cost of setup, maintenance, and security with thousands of other businesses across the world. Hence, companies enjoy greater security benefits than what could be procured by one company alone. The advantages are simply mathematical. Splitting costs saves your business capital.

Every company looking to compete and exist online should be aware of the importance of keeping its data secure and available.

It is now virtually impossible to maintain a fully secure server and management center in-house. Managed dedicated hosting services make this available to you immediately and at a reasonable cost, since it scales with you. You pay only for what you need. And you have a set of experts to hold accountable for service misfires.

Hosting Solution That Grows With You

Web servers have more resources than ever. Web hosts have more power than ever too. What does this mean for you? Why does managed hosting with an MSP work? It’s because they offer a faster and more reliable service! However, you will need to partner with a team that knows how to access this power.

Ready to Try a Managed Host?

Take the opportunity now and let us help you determine if managed hosting or one of our other cloud platforms is a good fit for your business. Find out how we can make the cloud work for you.

Recent Posts

Colocation Pricing: Definitive Guide to the Costs of Colocation

Server colocation is an excellent option for businesses that want to streamline server operations. Companies can outsource power and bandwidth costs by leasing space in a data center while keeping full control over hardware and data. The cost savings in power and networking alone can be worth moving your servers offsite, but there are other expenses to consider.

This guide outlines the costs of colocation and helps you better understand how data centers price colocation.

12 Colocation Pricing Considerations Before Selecting a Provider

1. Hardware – You Pick, Buy and Deploy

With colocation server hosting, you are not leasing a dedicated server. You are deploying your own equipment, so you need to buy hardware. As opposed to leasing, that might seem expensive as you are making a one-time upfront purchase. However, there is no monthly fee afterward, like with dedicated servers. Above all, you have full control and select all hardware components.

Prices vary greatly; entry-level servers start as low as $600. However, it would be reasonable to opt for more powerful configurations that cost $1000+. On top of that, you may need to pay for OS licenses. Using open-source solutions like CentOS reduces costs.

Many colocation providers offer hardware-as-a-service in conjunction with colocation. You get the equipment you need without any upfront expenses. If you need the equipment as a long-term investment, look for a lease-to-own agreement. At the end of the contract term, the equipment belongs to you.

When owning equipment, it is also a good idea to have a backup for components that fail occasionally. Essential backup components are hard drives and memory.

2. Rack Capacity – Colocation Costs Per Rack

Colocation pricing is greatly determined by the required physical space rented. Physical space is measured either in rack units (U) or per square foot. One U is equivalent to 1.75 inches in height and may cost $50 – $300 per month.

For example, each 19-inch wide component is designed to fit in a certain number of rack units. Most servers take up one to four rack units of space. Usually, colocation providers set a minimum of a ¼ rack of space. Some may set a 1U minimum, but selling a single U is rare nowadays.
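As a rough illustration of the rack-unit arithmetic above, the sketch below adds up the space a set of servers needs and applies the $50 to $300 per U range quoted earlier. The function name and the example deployment are hypothetical, and flat per-U pricing is an assumption; real quotes often come with a minimum such as a quarter rack.

```python
RACK_UNIT_INCHES = 1.75  # height of one rack unit (U), as stated in the text


def rack_space_estimate(server_heights_u, price_per_u_low=50, price_per_u_high=300):
    """Return total U, total height in inches, and a (low, high) monthly cost range.

    Assumes simple per-U pricing; the default prices are the range quoted above.
    """
    total_u = sum(server_heights_u)
    height_in = total_u * RACK_UNIT_INCHES
    cost_range = (total_u * price_per_u_low, total_u * price_per_u_high)
    return total_u, height_in, cost_range


# Hypothetical deployment: two 1U servers, one 2U server, one 4U storage node.
total_u, height_in, (low, high) = rack_space_estimate([1, 1, 2, 4])
print(f"{total_u}U, {height_in} inches, ${low} to ${high} per month")
```

The wide gap between the low and high figures is the reason to compare several providers before committing to a rack size.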

When evaluating a colocation provider, consider square footage, cabinet capacity, and power (kW) availability per rack. Costs will rise if a private cage or suite is required.

Another consideration is that racks come in different sizes. If you are unsure of the type of rack your equipment needs, opt for the standard 42U rack. If standard dimensions don’t work for you, most providers accept custom orders. You pick the dimensions and power capacity. This will increase costs but provides full control over your deployment.

3. Colo Electrical Power Costs – Don’t Skip Redundant Power

The reliability and cost of electricity is a significant consideration for your hosting costs. There are several different billing methods. Per-unit billing costs a certain amount per kilowatt (or per kilovolt-amp). You may be charged for a committed amount, with an extra fee for any usage over that amount. Alternatively, you may pay a metered fee for data center power usage. Different providers have different service levels and power costs.
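To make the committed-amount model concrete, here is a minimal sketch of how such a bill could be computed. The function name, rates, and kW figures are hypothetical and only illustrate the idea; actual contracts define their own rates and measurement rules.

```python
def monthly_power_bill(committed_kw, used_kw, rate_per_kw, overage_rate_per_kw):
    """Committed-amount billing: pay for the full commitment,
    plus a separate (usually higher) rate for any usage above it."""
    overage_kw = max(0.0, used_kw - committed_kw)
    return committed_kw * rate_per_kw + overage_kw * overage_rate_per_kw


# Hypothetical figures: 4 kW committed at $150/kW, overage billed at $200/kW.
under = monthly_power_bill(4, 3.2, 150, 200)  # usage below the commitment
over = monthly_power_bill(4, 5.0, 150, 200)   # 1 kW above the commitment
print(f"Under commitment: ${under:.2f}, over commitment: ${over:.2f}")
```

Note that in this model you pay for the committed amount even when you use less, which is why it pays to size the commitment carefully.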

High-quality colocation providers offer redundancy or A/B power. Some offer it at an additional charge, while others include it by default and roll it into your costs. Redundancy costs little but gives you peace of mind. To avoid potential downtime, opt for a colocation provider that offers risk management.

Finally, consider the needs of your business against the cost of electricity and maximum uptime. If you expect to see massive fluctuations in electrical usage during your contract’s life, let the vendor know upfront. Some providers offer modular pricing that will adjust costs to anticipated usage over time.

4. Setup Fees – Do You Want to Outsource?

Standard colocation Service Level Agreements (SLAs) assume that you will deploy equipment yourself. However, providers offer remote hands onsite and hardware deployment.

You can ship the equipment, and the vendor will deploy it. This is the so-called Rack and Stack service, for which the vendor charges a one-time setup fee. This is a good option if you do not have enough IT staff. Another reason might be that the location is so far away that the costs of sending your IT team exceed the costs of outsourcing. Depending on whether you outsource this task, deployment may cost from $500 to $3,000.

5. Remote Hands – Onsite Troubleshooting

Colocation rates typically do not include support. It is up to your IT team to deploy, set up, and manage hardware. However, many vendors offer a range of managed services for an additional fee.

Those may include replacing malfunctioning hardware, monitoring, management, patching, and DNS and SSL management, among others. Vendors will charge by the hour for remote hands.

There are many benefits to having managed services. However, that increases costs and moves you towards a managed hosting solution.

6. Interconnectivity – Make Your Own Bandwidth Blend

The main benefit of colocating is the ability to connect directly to an Internet Service Provider (ISP). Your main office may be in an area limited to a 50 Mbps connection. Data centers contract directly with the ISP to get hundreds or thousands of megabits per second. They also invest in high-end fiber optic cables for maximum interconnectivity. Their scale and expertise help achieve a better price than in-house networks.

The data center itself usually has multiple redundant ISP connections. When leasing racks at a carrier-neutral data center, you can opt to create your own bandwidth blend. That means if one internet provider goes down, you can transfer your critical workloads to a different provider and maintain service.

Lastly, you may have Amazon Web Services (AWS) infrastructure you need to connect with. If so, search for a data center that serves as an official AWS Direct Connect location. Amazon handpicks data centers and provides premium services.

7. Speed and Latency – Application Specific

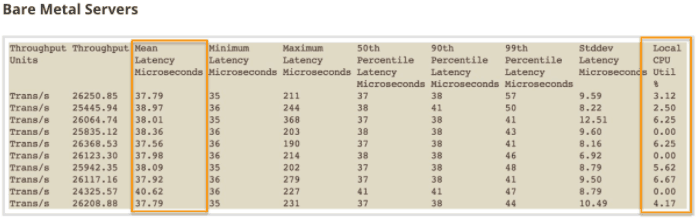

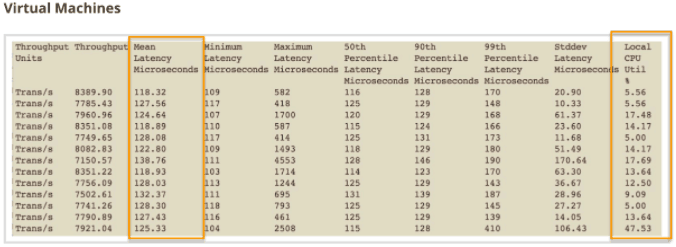

Speed is a measure of how fast signals travel through a network. It can also refer to the time it takes for a server to respond to a query. As the cost of fiber networking decreases, hosts achieve ever-faster speeds. Look for transfer rates, measured in Gbps. You will usually see numbers like 10 Gbps (10 gigabits per second) or 100 Gbps (100 gigabits per second). These are a measure of how fast data travels across the network.
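For a feel of what those transfer rates mean in practice, the sketch below estimates how long a transfer takes at a given link speed. It is an idealized calculation that ignores protocol overhead and congestion, and the function name and the 1 TB example are assumptions made for illustration.

```python
def transfer_seconds(size_bytes, link_gbps):
    """Ideal transfer time: bits to send divided by the link rate in bits per second."""
    return (size_bytes * 8) / (link_gbps * 1e9)


one_terabyte = 10**12  # decimal terabyte, in bytes
for gbps in (10, 100):
    minutes = transfer_seconds(one_terabyte, gbps) / 60
    print(f"1 TB over {gbps} Gbps: about {minutes:.1f} minutes")
```

Real-world throughput will be lower than the nominal rate, so treat these figures as a best case.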

A second speed factor is the server response time in milliseconds (ms). This measures the time between a request and a server reply. 50 milliseconds is a fast response time, 70ms is good, and anything over 200ms might lag noticeably. This factor is also determined by geo-location. Data travels fast, but the further you are from the server, the longer it takes to respond. For example, 70 milliseconds is a good response time for cross-continent points of communication. However, such speeds are below par for close points of communication.

In the end, server response time requirements can differ significantly between different use cases. Consider whether your deployment needs the lowest possible latency. If not, you can get away with higher server response times.
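The effect of distance on response time can be approximated with a short calculation. The sketch below assumes that light travels through fiber at roughly 200,000 km per second (about two thirds of its speed in a vacuum) and that the cable runs in a straight line. Both are simplifications, so the result is a lower bound rather than a prediction. The function name and distances are hypothetical.

```python
FIBER_KM_PER_S = 200_000  # assumption: roughly 2/3 of the speed of light in a vacuum


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time from signal propagation alone.

    Ignores routing detours, switching, and server processing time.
    """
    return 2 * distance_km / FIBER_KM_PER_S * 1000


# Hypothetical distances between a user and a data center.
for km in (100, 1_000, 4_000):
    print(f"{km} km: at least {min_round_trip_ms(km):.1f} ms round trip")
```

Even under these ideal assumptions, a server thousands of kilometers away cannot respond in a handful of milliseconds, which is why latency-sensitive workloads benefit from a nearby facility.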

8. Colocation Bandwidth Pricing – Burstable Billing

Bandwidth is a measure of the volume of data that transmits over a network. Depending on your use case, bandwidth needs might be significant. Colocation providers work with clients to determine their bandwidth needs before signing a lease.

Most colocation agreements bill the 95th percentile in a given month. Providers also call this burstable billing. Burstable billing is calculated by sampling usage at five-minute intervals throughout the month. Vendors ignore the top 5% of samples, and the highest remaining sample sets the billable usage. In other words, vendors expect your usage will be at or below that amount 95% of the time. As a result, most networks are over-provisioned, and clients can exceed the set amount without advance planning.
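The 95th percentile method is easier to follow with a small example. The sketch below sorts a set of five-minute samples, discards the top 5%, and bills the highest remaining value. The function name and sample data are hypothetical, and providers may differ in details such as rounding or whether inbound and outbound traffic are measured separately.

```python
import math


def billable_95th_percentile(samples_mbps):
    """Sort the samples, ignore the top 5%, and return the highest remaining one."""
    if not samples_mbps:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_mbps)
    keep = math.ceil(len(ordered) * 95 / 100)  # number of samples that count
    return ordered[keep - 1]


# Hypothetical data: 100 samples, mostly 40 Mbps with a few 900 Mbps bursts.
five_bursts = [40] * 95 + [900] * 5
six_bursts = [40] * 94 + [900] * 6
print(billable_95th_percentile(five_bursts))  # bursts fall inside the ignored top 5%
print(billable_95th_percentile(six_bursts))   # one burst too many raises the billed rate
```

In the first case the bursts are free; in the second, a single extra burst sample pushes the billable rate up to the burst level. A real month contains thousands of samples, but the principle is the same.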

9. Location – Disaster-Free

Location can profoundly affect the cost of hosting. For example, real estate prices impact every data center’s expenses, which are passed along to clients. A data center in an urban area is more expensive than one in a rural area due to several factors.

A data center may charge more for convenience if they are in a central location, near an airport, or easily accessed. Another factor is the cost of travel. You may get a great price on a colocation host that is halfway across the state. That might work if you can arrange a service contract with the vendor to manage your equipment. However, if employees are required onsite, the travel costs might offset savings.

Urban data centers tend to offer more carriers onsite and provide far more significant and cheaper connectivity. However, that makes the facility more desirable and may drive up costs. On the other hand, in rural data centers, you may spend less overall for colocation but more on connectivity. For end-clients, this means a balancing act between location, connectivity, and price.

Finally, location can be a significant factor in Disaster Recovery if you are looking for a colocation provider that is less prone to natural disasters. Natural disasters such as floods, tornados, hurricanes, lightning strikes, and fires seem to be quite common nowadays. However, many locations are less prone to natural disasters. Good data centers go the extra mile to protect the facility even if such disasters occur. You can expect higher fees at a disaster-free data center. But it’s worth the expense if you are looking for a Disaster Recovery site for your backups.

Before choosing a colocation provider, ask detailed questions in the Request for Proposal (RFP). Verify if there was a natural disaster in the last ten years. If yes, determine if there was downtime due to the incident.

10. Facilities and Operations – Day-to-Day Costs

Each colocation vendor has its own day-to-day operating costs. Facilities and operations costs are rolled into a monthly rate and generally cover things like critical environment equipment, facility upkeep and repair, and critical infrastructure.

Other benefits that will enhance your experience are onsite parking, office space, conference rooms, food and beverage services, etc. Some vendors offer such amenities as standard, others charge, while low-end facilities do not provide them at all.

11. Compliance

Compliance refers to special data-handling requirements. For example, some data centers are HIPAA compliant, which is required for a medical company. Such facilities may be more sought after and thus more expensive.

Just bear in mind that a HIPAA-compliant data center doesn’t necessarily mean that your deployment will be compliant too. You need to make sure that you manage equipment in line with HIPAA regulations.

12. Security

You should get a sense of the level of security included with the colocation fee. Security is critical for the data center. In today’s market, 24/7 video surveillance, a perimeter fence, key card access, mantraps, biometric scans, and many more security features should come as standard.

The Final Word: Colocation Data Center Pricing Factors

The most important takeaway is that colocation hosting should match your business needs. Take a few minutes to learn about your provider and how they operate their data center.

Remember, many of the colocation hosting costs are clear and transparent, like power rates and lease fees. Other considerations are less obvious, like the risk of potential downtime and high latency. Pay special attention to the provider’s Service Level Agreement (SLA). Every service guaranteed is listed in the SLA, including uptime guarantees.


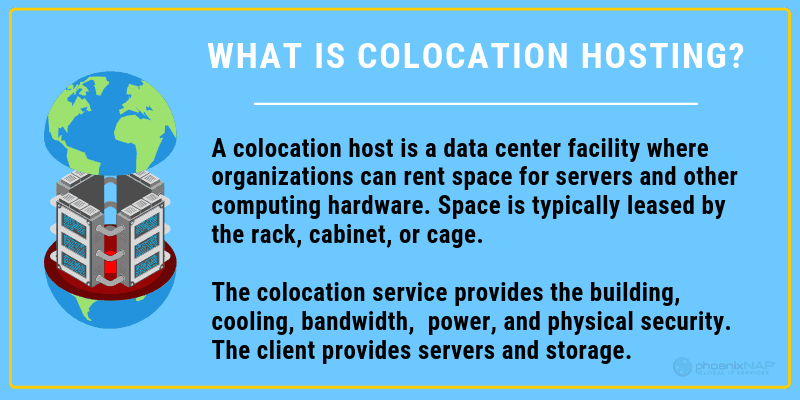

What is Colocation Hosting? How Does it Work?

When your company is in the market for a web hosting solution, there are many options available.

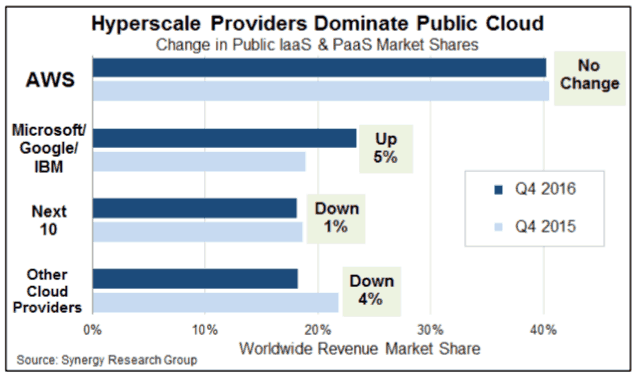

Colocation is popular among businesses seeking the benefits of a larger internal IT department without incurring the costs of a managed service provider.

What is Colocation Hosting?

Colocation hosting is a type of service a data center offers, in which it leases space and provides housing for servers. The clients own the servers and claim full authority over the hardware and software. However, the storage facility is responsible for maintaining a secure server environment.

Colocation services are not the same as cloud services. Colocation clients own their hardware and lease space. Cloud clients do not own hardware but lease it from the provider.

Colocation hosting should not be confused with managed (dedicated) services, in which the data center also assumes management and maintenance control over the servers. With colocation hosting, clients are responsible for supplying, maintaining, and managing their servers.

How does Server Colocation Hosting Work?

Maintaining and managing servers begins by ensuring the environment allows them to work at full capacity. However, this is the main problem businesses with “server closets” deal with. If companies are incapable of taking on such responsibilities on-premises, they will search for a data center that offers colocation services.

Colocation as a service works for businesses that already own hardware and software but are unable to provide the conditions to house them. The clients, therefore, lease space from service providers who offer housing for hardware, as well as environmental management.

Clients move their hardware to a data center, set up, and configure their servers. There is no physical contact between the provider and the clients’ hardware unless they specifically request additional assistance known as remote hands.

While the hardware is hosted, the data center assumes all responsibility for environmental management, such as cooling, a reliable power supply, on-premises security, and protection against natural disasters.

What is Provided by the Colocation Host?

The hosting company’s responsibilities typically include:

Security

The hosting company secures and authorizes access to the physical location. The security measures include installing equipment such as cameras, biometric locks, and identification for any personnel on site. Clients are responsible for securing their servers against cyber-attacks. The provider ensures no one without authorization can come close to the hardware.

Power

The data center is responsible for electricity and any other utilities required by the servers. This also includes energy backups, such as generators, in case of a power outage. Getting and using power efficiently is an essential component. Data centers can provide a power supply infrastructure that guarantees the highest uptime.

Cooling

Servers and network equipment generate a considerable amount of heat. Hosts provide advanced redundant cooling systems, so servers run optimally. Proper cooling can prevent damage and extend the life of your hardware.

Storage

A data center leases physical space for your servers. You can store your hardware in any of three options:

- Stand-alone cabinets: Each cabinet can house several servers in racks. Providers usually lease entire cabinets, and some may even offer partial cabinets.

- Cages: A cage is a separated, locked area in which server cabinets are stored. Cages physically isolate access to the equipment inside and can be built to house as many cabinets as the customer may need.

- Suites: These are secure, fully enclosed rooms in the colocation data center.

Disaster Recovery

The host needs to have a disaster recovery plan. It starts by building a data center away from disaster-prone areas. Also, this means reinforcing the site against disruption. For example, a host uses a backup generator in case of a power outage, or they might contract with two or more internet providers if one goes down.

Compliance

Healthcare facilities, financial services, and other businesses that deal with sensitive, confidential information need to adhere to specific compliance rules. They need unique configuration and infrastructure that are in line with official regulations.

Clients can manage setting up compliant servers. However, the environment in which they are housed also needs to be compliant. Providing such settings is challenging and expensive. For this reason, customers turn to data centers. For example, a company that stores patients’ medical records requires a HIPAA compliant hosting provider.

Benefits of Colocation Hosting


Colocation hosting is an excellent solution for companies with existing servers. However, some clients are a better fit for colocation than others.

Reduced Costs

One of the main advantages of colocation hosting is reduced power and network costs. Building a high-end server environment is expensive and challenging. A colocation provider allows you to reap the benefits of such a facility without investing in all the equipment. Clients colocate servers to a secure, first-class infrastructure without spending money on creating one.

Additionally, colocation services allow customers to organize their finances with a predictable hosting bill. Reduced costs and consistent expenses contribute to stabilizing businesses and free capital for other IT investments.

Expert Support

By moving servers to a data center, clients ensure full-time expert support. Colocation hosting providers specialize in the day-to-day operation of the facility, relieving your IT department from these duties. With power, cooling, security, and network hardware handled, your business can focus on hardware and software maintenance.

Scalability and Room to Grow

Colocation hosting also has the advantage of providing flexible resources that clients can scale according to their needs without having to make recurring capital investments. Allowing customers to expand to support their market growth is an essential feature if you want to develop into a successful, profitable business.

Availability 24/7/365

Customers turn to colo hosting because it assures their data is always available to them and their users. What they seek is consistent uptime, which is the time when the server is operational. Providers have emergency services and infrastructure redundancy that contribute to better uptime, as well as a service level agreement. The contract assures that if things are not working as required, customers are protected.
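An uptime percentage in an SLA is easier to judge once it is converted into allowed downtime. The sketch below does that conversion for a 30-day month. The function name and the percentages shown are hypothetical examples, not figures from any particular provider.

```python
def allowed_downtime_minutes(uptime_percent, days=30):
    """Minutes of downtime per period that still satisfy the uptime guarantee."""
    total_minutes = days * 24 * 60
    return total_minutes * (100 - uptime_percent) / 100


# Hypothetical SLA levels for comparison.
for sla in (99.0, 99.9, 99.99):
    minutes = allowed_downtime_minutes(sla)
    print(f"{sla}% uptime allows about {minutes:.1f} minutes of downtime per 30 days")
```

Each additional "nine" cuts the permitted downtime by a factor of ten, so small differences in the quoted percentage matter more than they appear to.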

Although the servers may be physically inaccessible, clients have full control over them. Remote customers access and work on their hardware via management software or with the assistance of remote hands. With the remote hands service, in-house technicians from the data center carry out management and maintenance tasks on the client’s behalf. With their help, clients can avoid frequent journeys to the facility.

Clearly defined service level agreements (SLAs)

A colo service provider will have clear service level agreements. An SLA is an essential asset that you need to agree upon with your provider to identify which audits, processes, reporting, and resolution response times and definitions are included with the service. Trusted providers have flexible SLAs and are open to negotiating specific terms and conditions.

Additional Considerations of Colocating Your Hosting

Limited Physical Access

Clients who need frequent physical access to servers need to take into account the obligations that come with moving servers to an off-site location. Customers can access the facility, but only if they are located nearby or are willing to travel. Therefore, if they need frequent physical access, they should find a provider located nearby or near an airport.

Clients may consider a host in a region different from their home office. It is essential to consider travel fees as a factor.

Managing and Maintaining

Clients who need a managed server environment may not have their needs met by colocation hosting alone. A colocation host only manages the data center. Any server configuration or maintenance is the client’s responsibility. If you need more hands-on service, consider managed services in addition to colocation. However, bear in mind that managed services come with additional costs.

High Cost for Small Businesses

Small businesses may not be big enough to benefit from colocation. Most hosts have a minimum amount of space clients need to lease. Therefore, a small business running one or two machines could spend more on hosting than they can save. Hardware-as-a-Service, virtual servers, or even outsourced IT might be a better solution for small businesses.

Is a Colocation Hosting Provider a Good Fit?

Colocation is an excellent solution for medium to large businesses without an existing server environment.

Leveraging the shared bandwidth of the colocation provider gives your business the capacity it needs without the costs of on-premises hosting. Colocation helps enterprises reduce the costs of power and bandwidth and improve uptime through redundant infrastructure and security. With server colocation hosting, the client cooperates with the data center.

Now you can make the best choice for your business’s web hosting needs.


What is a Meet-Me Room? Why They are Critical in a Data Center

Meet-me rooms are an integral part of a modern data center. They provide a reliable low-latency connection with reduced network costs essential to organizations.

What is a Meet-me Room?

A meet-me room (MMR) is a secure place where customers can connect to one or more carriers. This area enables cable companies, ISPs, and other providers to cross-connect with tenants in the data center. An MMR contains cabinets and racks with carriers’ hardware that allows quick and reliable data transfer. MMRs physically connect hundreds of different companies and ISPs located in the same facility. This peering process is what makes the internet exchange possible.

The meet-me room eliminates the round trip that traffic would otherwise have to take and keeps the data inside the facility. Packets do not have to travel to the ISP’s main network and back. By eliminating local loops, data exchange becomes more secure and less costly.

Data Exchange and How it Works

Sending data out to the Internet requires a connection to an Internet Service Provider (ISP).

When two organizations are geographically far apart, the data exchange occurs through a global ISP. Hence, if one system wants to communicate with the other, it first needs to exchange the information with the ISP. Then, the ISP routes the packets to the target system. This process is necessary when two systems are located in different countries or continents. In these cases, a global ISP is crucial for the uninterrupted flow of traffic between the parties.

However, when two organizations are geographically close to each other, they can physically connect. A meet-me room in a data center or carrier hotel enables the two systems to exchange information directly.

Benefits of a Meet-me Room

All colocation data centers house an MMR. Most data centers are carrier neutral. Being carrier neutral means there is a wide selection of network providers for tenants to choose from. When there are more carriers, the chances are higher for customers to contract with that data center. The main reason is that by having multiple choices for providers, customers can improve flexibility and redundancy and optimize their connection.

The benefits of meet-me rooms include:

- Reduced latency: High-bandwidth, direct connection decreases the number of network hops to a minimum. By eliminating network hops, latency is reduced substantially.

- Reduced cost: By connecting directly through a meet-me room, carriers bypass local loop charges. With many carriers in one place, customers may find more competitive rates.

- Quick expansion: MMRs are an excellent method to provide more fiber connection options for tenants. Carrier neutral data centers can bring more carriers and expand their offering.

Security and Restricted Access

Meet-me rooms are monitored and secure areas within a data center typically encased in fire-rated walls. These areas have restricted access, and unescorted visits are impossible. Multi-factor authentication prevents unauthorized personnel from entering the MMR space.

Cameras record every activity in the room. With a 24/7 surveillance system and biometric scans, security breaches are extremely rare.

Meet-me Room Design

The design and size of meet-me rooms can vary significantly in different colocation and data centers. For example, phoenixNAP’s MMR is a 3000 square foot room with a dedicated cross-connect room. Generally, MMRs should provide sufficient expansion space for new carriers. Potential clients avoid leasing space within a data center that cannot accommodate new ISPs.

One of the things MMRs should offer is 45U cabinets for carriers and network providers’ equipment. MMRs do not always have both AC and DC power options. If the facility only provides one type of power, the design should offer more space for additional carrier equipment.

Cooling is an essential part of every MMR. Data centers and colocation providers take into consideration what type of equipment carriers will install in the meet-me room. High-performance cooling units make sure the MMR temperature always stays within acceptable ranges.

Entrance for Carriers

Network carriers enter a data center’s meet-me room by running a fiber cable from the street to the cross-connect room. One of the possible ways is to use meet-me vaults, sometimes referred to as meet-me boxes. These infrastructure points are essential for secure carrier access to the facility. When appropriately designed, each plays a significant role in bringing a high number of providers to the data center.

Vaults

A meet-me vault is a concrete box for carriers’ fiber optic entry into the facility. Achieving maximum redundancy requires more than one vault in large data centers or carrier hotels.

Meet-me vaults are buried underground at the perimeter of a data center. The closer the meet-me vaults are to the providers’ cable network, the lower the costs are to connect to the facility’s infrastructure. Multiple points of entry and well-positioned meet-me vaults attract more providers. In turn, colocation pricing is also lower for potential customers of the data center.

The design itself allows dozens of providers to bring high-bandwidth connections without sharing the ducts. From meet-me vaults, cables go into the cross-connect room through reinforced trenches.

Cross-Connect Room

A cross-connect room (CCR) is a highly secure location within a data center where carriers connect to customers. In these cases, the fiber may go from the CCR to the carrier’s equipment in the meet-me room or other places in the data center. The primary purpose is to establish cross-connects between tenants and different service providers.

Access to cross-connect rooms is tightly restricted. With carriers’ hardware located in the meet-me rooms, CCRs are a strong fiber entry point.

The Most Critical Room in a Data Center

Meet-me rooms are a critical point for uninterrupted Internet exchange and ensure smooth transmission of data between tenants and carriers. Enterprises benefit by establishing a direct connection with their partners and service providers.

Data Center Security: Physical and Digital Layers of Protection

Data is a commodity that requires an active data center security strategy to manage it properly. A single breach in the system can cause havoc for a company and have long-term effects.

Are your critical workloads isolated from outside cyber security threats? That’s the first guarantee you’ll want to know if your company uses (or plans to use) hosted services.

Breaches into trusted data centers are happening more often. The public notices when news breaks about advanced persistent threat (APT) attacks succeeding.

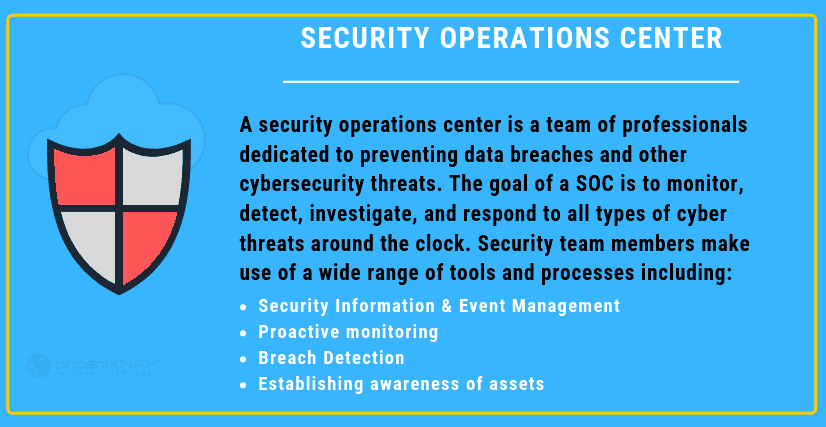

To stop this trend, service providers need to adopt a Zero Trust Model. From the physical structure to the networked racks, each component is designed with this in mind.

Zero Trust Architecture

The Zero Trust Model treats every transaction, movement, or iteration of data as suspicious. It’s one of the latest intrusion detection methods.

The system tracks network behavior and data flows from a command center in real time. It checks anyone extracting data from the system and alerts staff or revokes rights from accounts when an anomaly is detected.

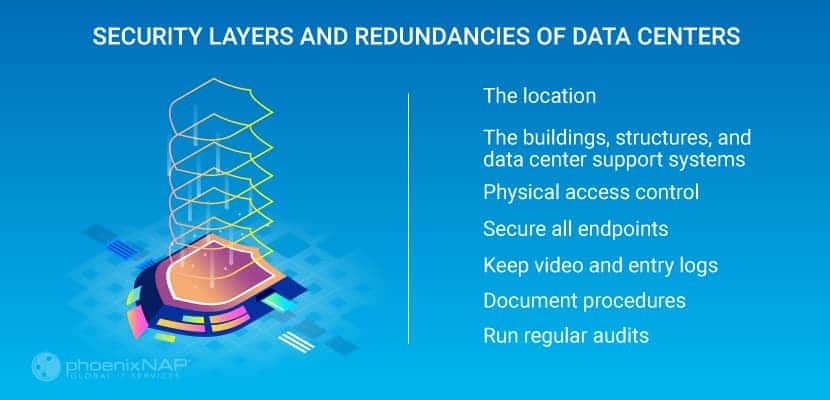

Security Layers and Redundancies of Data Centers

Keeping your data safe requires security controls and system checks built layer by layer into the structure of a data center: the physical building itself, the software systems, and the personnel involved in daily tasks.

You can separate the layers into physical and digital.

Data Center Physical Security Standards

Location

Assessing whether a data center is secure starts with the location.

A trusted data center’s design will take into account:

- Geological activity in the region

- High-risk industries in the area

- Any risk of flooding

- Other risks of force majeure

You can mitigate some of the risks listed above by having barriers or extra redundancies in the physical design. Given the harmful effects these events would have on the operations of the data center, it’s best to avoid them altogether.

The Buildings, Structures, and Data Center Support Systems

The design of the structures that make up the data center needs to reduce any access control risks. The fencing around the perimeter, the thickness and material of the building’s walls, and the number of entrances it has all affect the security of the data center.

Some key factors will also include:

- Server cabinets fitted with a lock.

- Buildings need more than one supplier for both telecom services and electricity.

- Extra power backup systems like UPS and generators are critical infrastructure.

- The use of mantraps. This involves having an airlock between two separate doors, with authentication required for both doors.

- Room for future expansion within the same boundary.

- Support systems separated from the white spaces. This allows authorized staff members to perform their tasks and stops maintenance and service technicians from gaining unsupervised entry.

Physical Access Control

Controlling the movement of visitors and staff around the data center is crucial. If you have biometric scanners on all doors – and log who had access to what and when – it’ll help to investigate any potential breach in the future.

Fire escapes and evacuation routes should only allow people to exit the building. There should be no handles on the outside, which prevents re-entry. Opening any safety door should sound an alarm.

All vehicle entry points should use reinforced bollards to guard against vehicular attacks.

Secure All Endpoints

Any device connected to a data center network, be it a server, tablet, smartphone, or laptop, is an endpoint.

Data centers give out rack and cage space to clients whose security standards may be dubious. If the customer doesn’t secure the server correctly, the entire data center might be at risk. Attackers are going to try to take advantage of unsecured devices connected to the internet.

For example, most customers want remote access to the power distribution unit (PDU) so they can remotely reboot their servers. Security is a significant concern in such use cases. It is up to facility providers to be aware of and secure all devices connected to the internet.

Maintain Video and Entry Logs

All logs, including video surveillance footage and entry logs, should be kept on file for a minimum of three months. Some breaches are identified when it is already too late, but records help identify vulnerable systems and entry points.
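A retention rule like this can be enforced by checking a record's age before it is purged. The sketch below is only illustrative: it approximates "three months" as 90 days, and the `RETENTION` constant and `can_purge` function are hypothetical names, not part of any particular logging product.

```python
from datetime import datetime, timedelta

# Assumption: the three-month minimum is approximated as 90 days.
RETENTION = timedelta(days=90)


def can_purge(record_time: datetime, now: datetime) -> bool:
    """Return True only when the record is older than the retention window."""
    return now - record_time > RETENTION


if __name__ == "__main__":
    now = datetime(2024, 6, 1)
    # An entry log from mid-January is past the window and may be purged.
    print(can_purge(datetime(2024, 1, 15), now))
    # Footage from May is still inside the window and must be kept.
    print(can_purge(datetime(2024, 5, 1), now))
```

Passing `now` in explicitly, rather than reading the clock inside the function, keeps the check easy to test and audit.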

Document Security Procedures

Having strict, well-defined, and documented procedures is of paramount importance. Something as simple as a regular delivery needs to be well planned down to its core details. Do not leave anything open for interpretation.

Run Regular Security Audits

Audits may range from daily security checkups and physical walkthroughs to quarterly PCI and SOC audits.

Physical audits are necessary to validate that the actual conditions conform to reported data.

Digital Layers of Security in a Data Center

Alongside all the physical controls, software and networks make up the rest of the security and access models for a trusted data center.

There are layers of digital protection that aim to prevent security threats from gaining access.

Intrusion Detection and Prevention Systems

This system checks for advanced persistent threats (APT). It focuses on finding those that have succeeded in gaining access to the data center. APTs are typically sponsored attacks, and the hackers will have a specific goal in mind for the data they have collected.

Detecting this kind of attack requires real-time monitoring of the network and system activity for any unusual events.

Unusual events could include:

- An increase of users with elevated rights accessing the system at odd times

- An increase in service requests, which might lead to a distributed denial-of-service (DDoS) attack

- Large datasets appearing or moving around the system

- Extraction of large datasets from the system

- Increase in phishing attempts to crucial personnel

To deal with this kind of attack, intrusion detection and prevention systems (IDPS) use baselines of normal system states. Any abnormal activity gets a response. IDPS now use artificial neural networks or machine learning technologies to find these activities.
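The baseline idea can be illustrated with a very simple statistical check. This is a minimal sketch, not how any specific IDPS works: it swaps the neural network or machine learning model for a plain standard-deviation threshold, and the `is_anomalous` function, the threshold of 3, and the sample login counts are all assumptions made for illustration.

```python
from statistics import mean, stdev


def is_anomalous(baseline: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag a value that lies more than `threshold` standard deviations from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is abnormal.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


if __name__ == "__main__":
    # Hypothetical hourly counts of privileged logins during normal operation.
    baseline_logins = [4, 5, 6, 5, 4, 6, 5, 5]
    print(is_anomalous(baseline_logins, 5))   # a typical hour
    print(is_anomalous(baseline_logins, 40))  # a sudden spike in privileged logins
```

In practice the response to a flagged value would be an alert or an automated action such as revoking access; the sketch only covers the detection step.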

Security Best Practices for Building Management Systems

Building management systems (BMS) have grown in line with other data center technologies. They can now manage every facet of a building’s systems. That includes access control, airflow, fire alarm systems, and ambient temperature.

A modern BMS comes equipped with many connected devices. They send data or receive instructions from a decentralized control system. The devices themselves may be a risk, as well as the networks they use. Anything that has an IP address is hackable.

Secure Building Management Systems

Security professionals know that the easiest way to take a data center off the map is by attacking its building management systems.

Manufacturers may not have security in mind when designing these devices, so patches are necessary. Something as insignificant as a sprinkler system can destroy hundreds of servers if set off by a cyber-attack.

Segment the System

Segmenting the building management systems from the main network is no longer optional. Still, even with such precautionary measures, attackers can find a way to breach the primary data network.

During the infamous Target data breach, the building management system was on a physically separate network. However, that only slowed down the attackers as they eventually jumped from one network to another.

This leads us to another critical point – monitor lateral movement.

Lateral Movement

Lateral movement is a set of techniques attackers use to move around devices and networks and gain higher privileges. Once attackers infiltrate a system, they map all devices and apps in an attempt to identify vulnerable components.

If the threat is not detected early on, attackers may gain privileged access and, ultimately, wreak havoc. Monitoring for lateral movement limits the time data center security threats are active inside the system.
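One simple signal of lateral movement is a host that suddenly starts talking to internal machines it has never contacted before. The sketch below assumes connection records are available as `(source, destination)` pairs and that a map of each host's usual peers exists; the function name, host names, and the limit of three new peers are hypothetical.

```python
from collections import defaultdict


def flag_lateral_movement(connections, known_peers, max_new_peers=3):
    """Return the sources that contacted more than `max_new_peers` hosts outside their usual peers."""
    new_peers = defaultdict(set)
    for src, dst in connections:
        if dst not in known_peers.get(src, set()):
            new_peers[src].add(dst)
    return sorted(src for src, peers in new_peers.items() if len(peers) > max_new_peers)


if __name__ == "__main__":
    # Hypothetical hosts: a web server and a building management controller.
    known_peers = {"web-01": {"db-01"}, "bms-01": {"hvac-01"}}
    connections = [
        ("web-01", "db-01"),
        ("bms-01", "hvac-01"),
        ("bms-01", "db-01"),
        ("bms-01", "web-01"),
        ("bms-01", "pos-01"),
        ("bms-01", "pos-02"),
    ]
    print(flag_lateral_movement(connections, known_peers))
```

Here the building management controller reaches four hosts it does not normally contact, so it is the only source flagged for review.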

Even with these extra controls, it is still possible that unknown access points can exist within the BMS.

Secure at the Network Level

The increased use of virtualization-based infrastructure has brought about a new level of security challenges. To this end, data centers are adopting a network-level approach to security.

Network-level encryption uses cryptography at the network data transfer layer, which is in charge of connectivity and routing between endpoints. The encryption is active during data transfer, and this type of encryption works independently from any other encryption, making it a standalone solution.

Network Segmentation

It is good practice to segment network traffic at the software level. This means classifying all traffic into different segments based on endpoint identity. Each segment is isolated from all others, thus acting as an independent subnet.

Network segmentation simplifies policy enforcement. Furthermore, it contains any potential threats in a single subnet, preventing them from attacking other devices and networks.
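As a rough illustration of segment isolation, the sketch below maps endpoints to segments and allows traffic only inside one segment. It assumes the endpoint's IP address stands in for its identity, and the segment names and address ranges are made up for the example; real software-defined segmentation typically relies on richer identity attributes.

```python
import ipaddress

# Hypothetical segments, each acting as an independent subnet.
SEGMENTS = {
    "tenant-a": ipaddress.ip_network("10.0.1.0/24"),
    "tenant-b": ipaddress.ip_network("10.0.2.0/24"),
    "bms": ipaddress.ip_network("10.0.99.0/24"),
}


def segment_of(address: str):
    """Return the name of the segment containing the address, or None if it is unknown."""
    ip = ipaddress.ip_address(address)
    for name, network in SEGMENTS.items():
        if ip in network:
            return name
    return None


def is_allowed(src: str, dst: str) -> bool:
    """Allow traffic only when both endpoints belong to the same known segment."""
    src_segment = segment_of(src)
    return src_segment is not None and src_segment == segment_of(dst)


if __name__ == "__main__":
    print(is_allowed("10.0.1.10", "10.0.1.20"))  # same tenant segment
    print(is_allowed("10.0.99.5", "10.0.1.20"))  # BMS device reaching a tenant
```

Denying unknown sources by default means an unclassified device cannot talk to anything until it is assigned to a segment.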

Virtual Firewalls

Although the data center will have a physical firewall as part of its security system, it may also have a virtual firewall for its customers. Virtual firewalls watch upstream network activity outside of the data center’s physical network. This helps in finding packet injections early without using essential firewall resources.

Virtual firewalls can be part of a hypervisor or live on their own virtualized machines in a bridged mode.

Traditional Threat Protection Solutions

Well-known threat protection solutions include:

- Virtual private networks (VPNs) and encrypted communications

- Content, packet, network, spam, and virus filtering

- Traffic or NetFlow analyzers and isolators

Combining these technologies will help make sure that data is safe while remaining accessible to the owners.

Data Center Security Standards

The classification system sets standards for data centers’ controls that ensure availability. As security can affect the uptime of the system, it forms part of their Tier Classification Standard.