5 Cloud Deployment Models: Learn the Differences

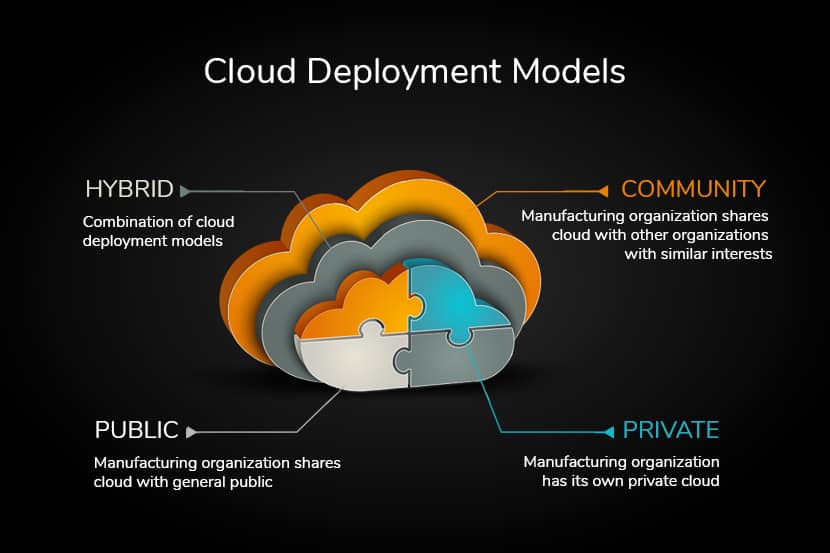

The demand for cloud computing has given rise to different types of cloud deployment models. These models are based on similar technology, but they differ in scalability, cost, performance, and privacy.

It is not always clear which cloud model is an ideal fit for a business. Decision-makers must factor in computing and business needs, and they need to know what different deployment types can offer.

Read on to learn about the five main cloud deployment models and find the best choice for your business.

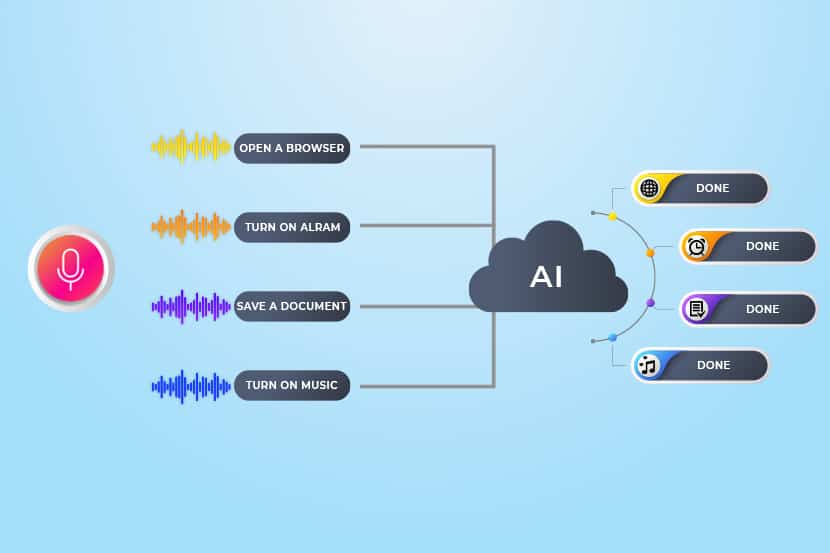

What is Cloud Deployment?

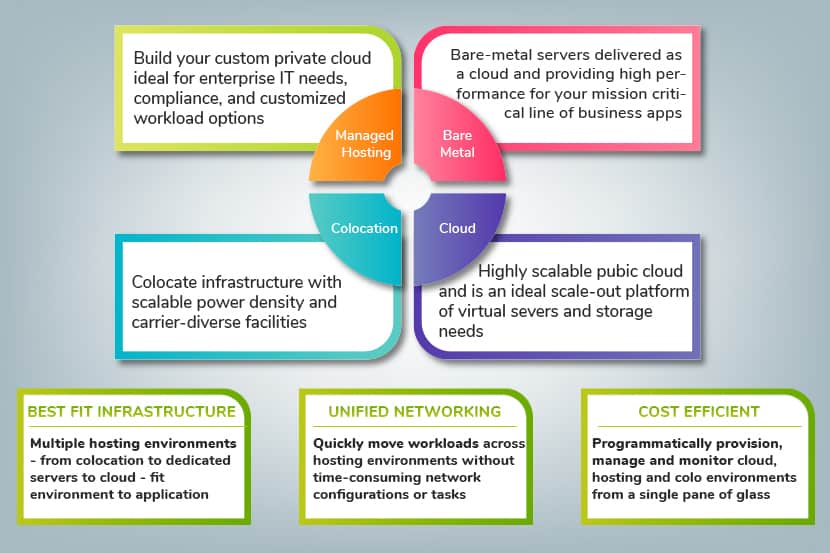

Cloud deployment is the process of building a virtual computing environment. It typically involves the setup of one of the following platforms:

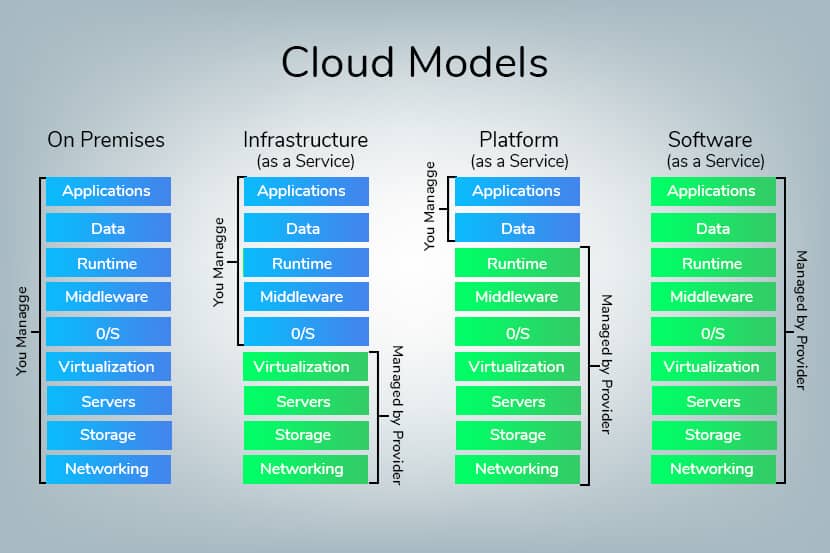

- SaaS (Software as a Service)

- PaaS (Platform as a Service)

- IaaS (Infrastructure as a Service)

Deploying to the cloud provides organizations with flexible and scalable virtual computing resources.

A cloud deployment model is the type of architecture a cloud system is implemented on. These models differ in terms of management, ownership, access control, and security protocols.

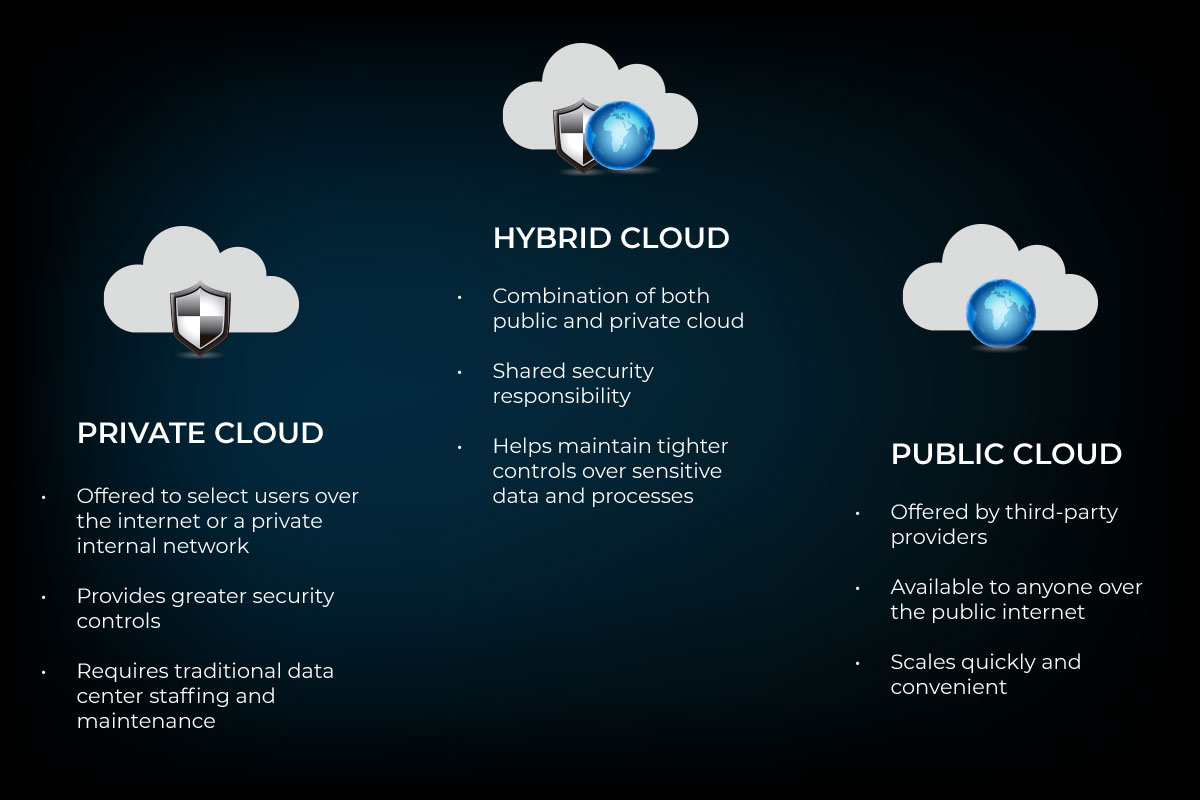

The five most popular cloud deployment models are public, private, virtual private (VPC), hybrid, and community cloud.

Comparison of Cloud Deployment Models

Here is a comparative table that provides an overview of all five cloud deployment models:


| | Public | Private | VPC | Community | Hybrid |
|---|---|---|---|---|---|
| Ease of setup | Very easy to set up, the provider does most of the work | Very hard to set up as your team creates the system | Easy to set up, the provider does most of the work (unless the client asks otherwise) | Easy to set up because of community practices | Very hard to set up due to interconnected systems |
| Ease of use | Very easy to use | Complex and requires an in-house team | Easy to use | Relatively easy to use as members help solve problems and establish protocols | Difficult to use if the system was not set up properly |
| Data control | Low, the provider has all control | Very high as you own the system | Low, the provider has all control | High (if members collaborate) | Very high (with the right setup) |
| Reliability | Prone to failures and outages | High (with the right team) | Prone to failures and outages | Depends on the community | High (with the right setup) |
| Scalability | Low, most providers offer limited resources | Very high as there are no other system tenants | Very high as there are no other tenants in your segment of the cloud | Fixed capacity limits scalability | High (with the right setup) |
| Security and privacy | Very low, not a good fit for sensitive data | Very high, ideal for corporate data | Very low, not a good fit for sensitive data | High (if members collaborate on security policies) | Very high as you keep the data on a private cloud |
| Setup flexibility | Little to no flexibility, service providers usually offer only predefined setups | Very flexible | Less than a private cloud, more than a public one | Little flexibility, setups are usually predefined to an extent | Very flexible |
| Cost | Very inexpensive | Very expensive | Affordable | Members share the costs | Cheaper than a private model, pricier than a public one |
| Demand for in-house hardware | No | In-house hardware is not a must but is preferable | No | No | In-house hardware is not a must but is preferable |

The following sections explain cloud deployment models in further detail.

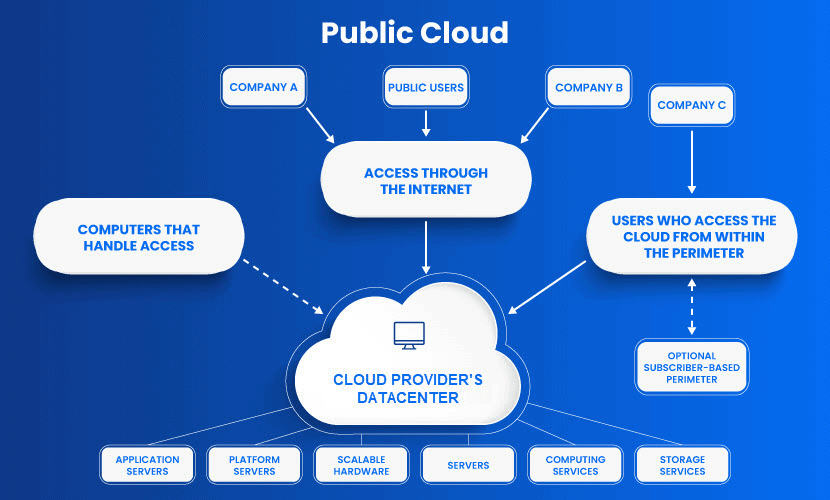

Public Cloud

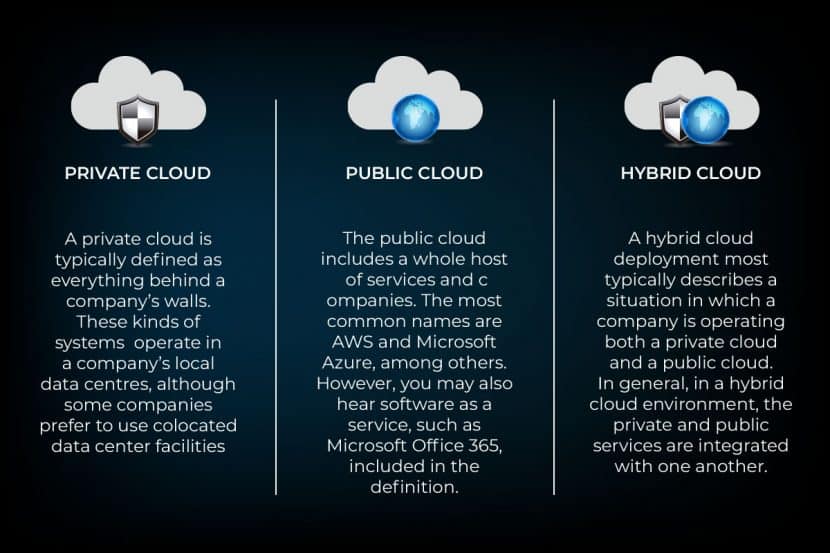

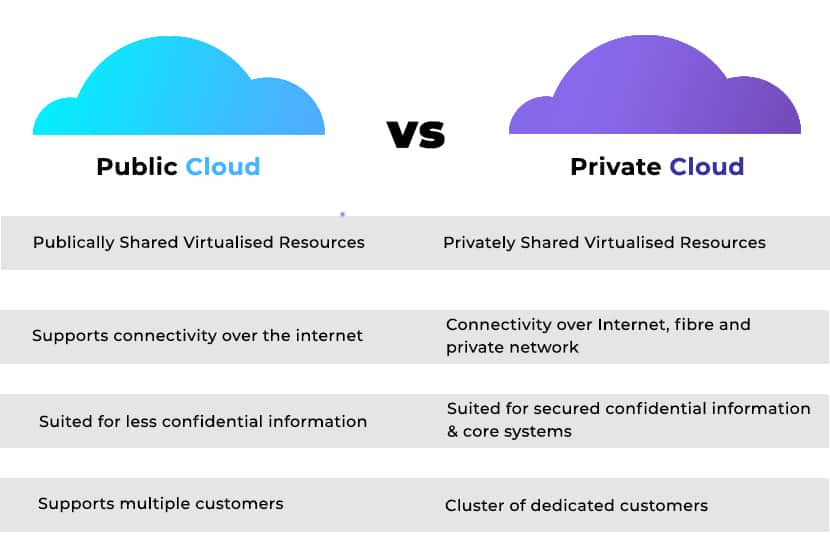

The public cloud model is the most widely used cloud service. This cloud type is a popular option for web applications, file sharing, and non-sensitive data storage.

The service provider owns and operates all the hardware needed to run a public cloud. Providers keep devices in massive data centers.

The public cloud delivery model plays a vital role in development and testing. Its virtual environment is cheap, easy to configure, and quick to deploy, which makes it a good fit for test environments.

Advantages of Public Cloud

Benefits of the public cloud include:

- Low cost: Public cloud is the cheapest model on the market. Besides the small initial fee, clients only pay for the services they are using, so there is no unnecessary overhead.

- No hardware investment: Service providers fund the entire infrastructure.

- No infrastructure management: A client does not need a dedicated in-house team to make full use of a public cloud.

Disadvantages of Public Cloud

The public cloud does have some drawbacks:

- Security and privacy concerns: As anyone can ask for access, this model does not offer ideal protection against attacks. The size of public clouds also leads to vulnerabilities.

- Reliability: Public clouds are prone to outages and malfunctions.

- Poor customization: Public offerings have little to no customization. Clients can pick the operating system and the sizing of the VM (storage and processors), but they cannot customize ordering, reporting, or networking.

- Limited resources: Public clouds have incredible computing power, but you share the resources with other tenants. There is always a cap on how many resources you can use, which leads to scalability issues.

Private Cloud

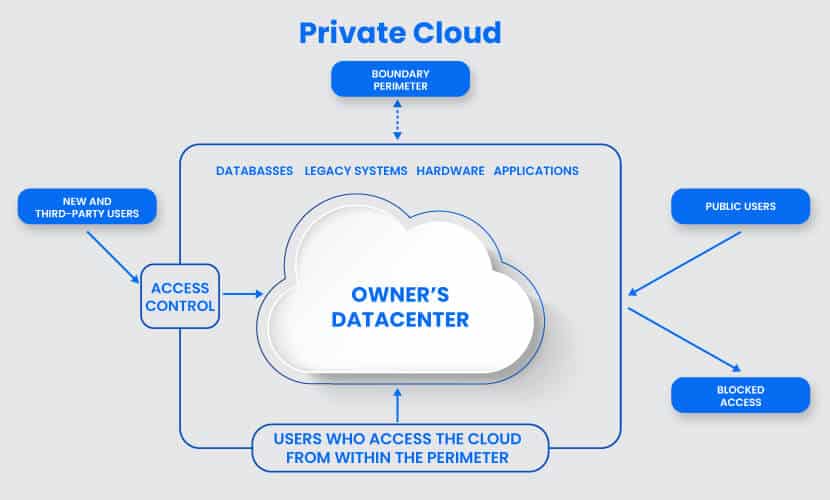

Whereas a public model is available to anyone, a private cloud belongs to a specific organization. That organization controls the system and manages it in a centralized fashion. While a third party (e.g., service provider) can host a private cloud server (a type of colocation), most companies choose to keep the hardware in their on-premises data center. From there, an in-house team can oversee and manage everything.

The private cloud deployment model is also known as the internal or corporate model.

Advantages of Private Cloud

Here are the main reasons why organizations are using a private cloud:

- Customization: Companies get to customize their solution per their requirements.

- Data privacy: Only authorized internal personnel can access data. Ideal for storing corporate data.

- Security: A company can separate sets of resources on the same infrastructure. Segmentation leads to high levels of security and access control.

- Full control: The owner controls the service integrations, IT operations, rules, and user practices. The organization is the exclusive owner.

- Legacy systems: This model supports legacy applications that cannot function on a public cloud.

Disadvantages of Private Cloud

- High cost: The main disadvantage of private cloud is its high cost. You need to invest in hardware and software, plus set aside resources for in-house staff and training.

- Fixed scalability: Scalability depends on your choice of the underlying hardware.

- High maintenance: Since a private cloud is managed in-house, it requires high maintenance.

Virtual Private Cloud (VPC)

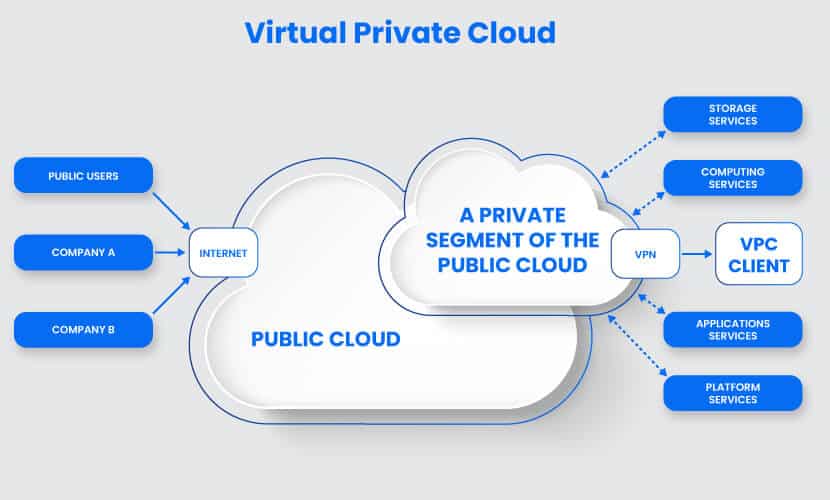

A VPC customer has exclusive access to a segment of a public cloud. This deployment is a compromise between a private and a public model in terms of price and features.

Access to a virtual private platform is typically given through a secure connection (e.g., VPN). Access can also be restricted by the user’s physical location by employing firewalls and IP address whitelisting.

See phoenixNAP's Virtual Private Data Center offering to learn more about this cloud deployment model.

Advantages of Virtual Private Cloud

Here are the positives of VPCs:

- Cheaper than private clouds: A VPC does not cost nearly as much as a full-blown private solution.

- More well-rounded than a public cloud: A VPC has better flexibility, scalability, and security than what a public cloud provider can offer.

- Maintenance and performance: Less maintenance than in the private cloud, more security and performance than in the public cloud.

Disadvantages of Virtual Private Cloud

The main weaknesses of VPCs are:

- It is not a private cloud: While there is some versatility, a VPC is still very restrictive when it comes to customization.

- Typical public cloud problems: Outages and failures are commonplace in a VPC setup.

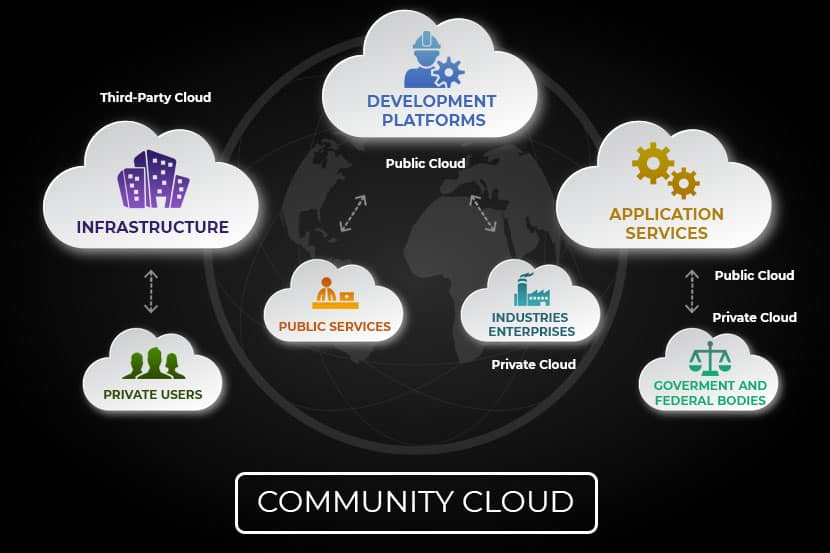

Community Cloud

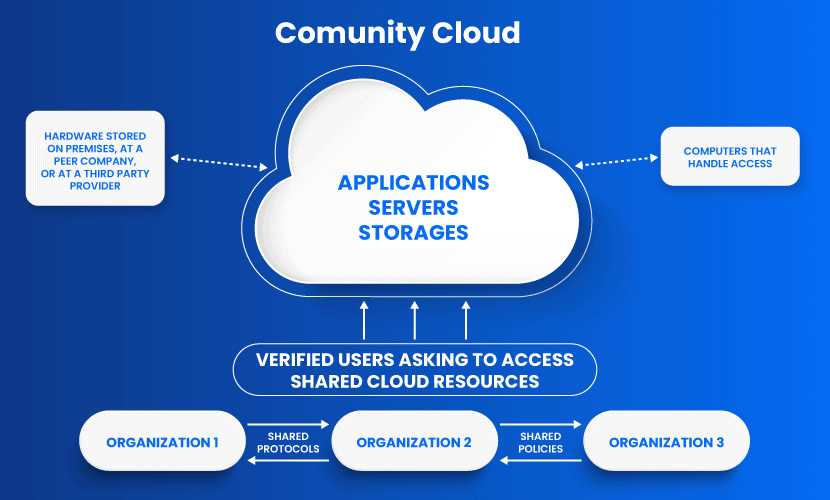

The community cloud deployment model operates as a public cloud. The difference is that this system only allows access to a specific group of users with shared interests and use cases.

This type of cloud architecture can be hosted on-premises, at a peer organization, or by a third-party provider. A combination of all three is also an option.

Typically, all organizations in a community have the same security policies, application types, and legislative issues.

Advantages of Community Cloud

Here are the benefits of a community cloud solution:

- Cost reductions: A community cloud is cheaper than a private one, yet it offers comparable performance. Multiple companies share the bill, which additionally lowers the cost of these solutions.

- Setup benefits: Configuration and protocols within a community system meet the needs of a specific industry. A collaborative space also allows clients to enhance efficiency.

Disadvantages of Community Cloud

The main disadvantages of community cloud are:

- Shared resources: Limited storage and bandwidth capacity are common problems within community systems.

- Still uncommon: This is the latest deployment model of cloud computing. The trend is still catching on, so the community cloud is currently not an option in every industry.

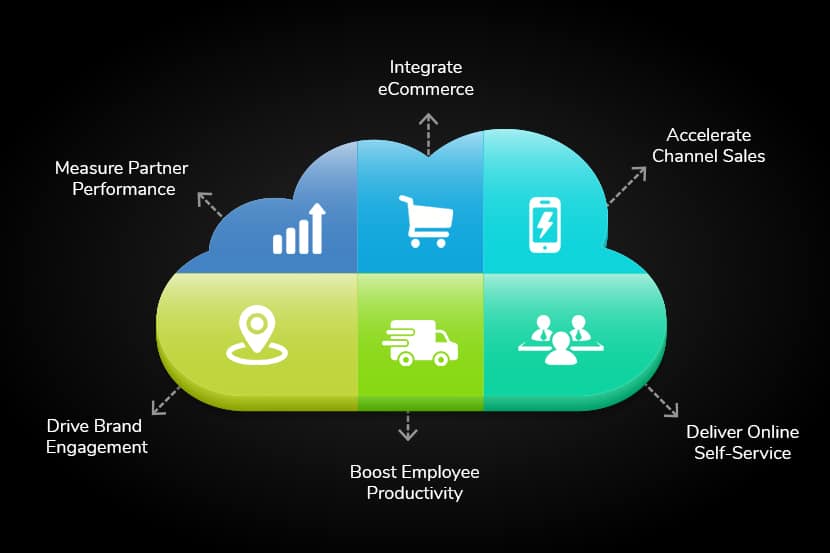

Hybrid Cloud

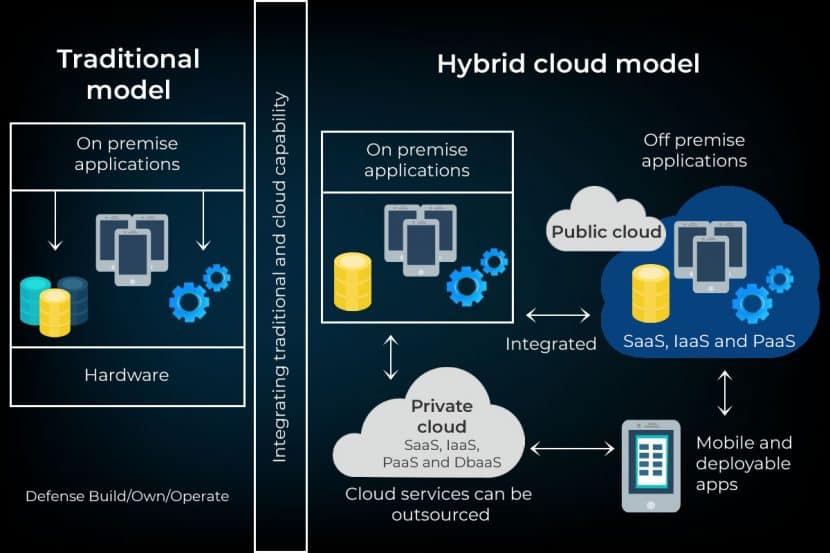

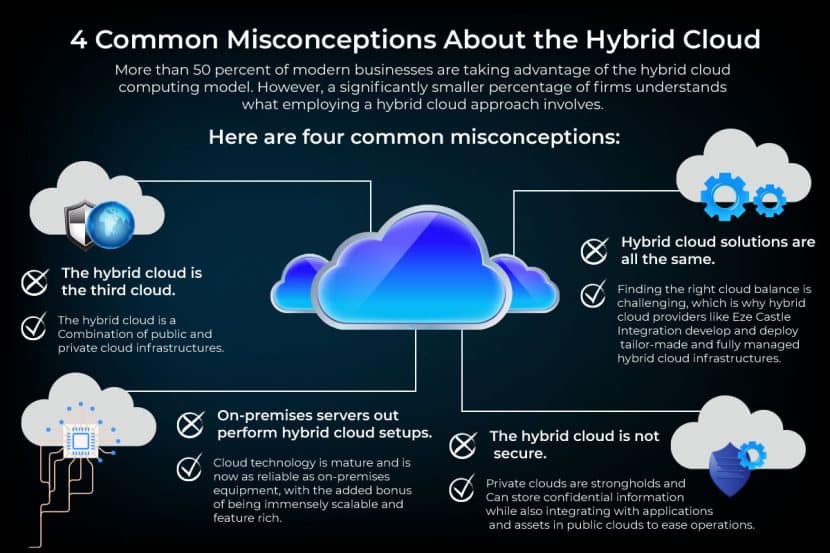

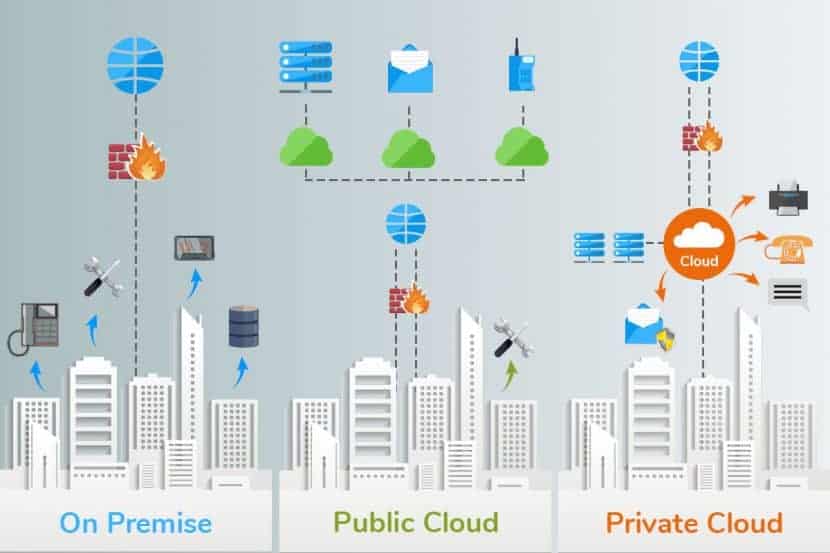

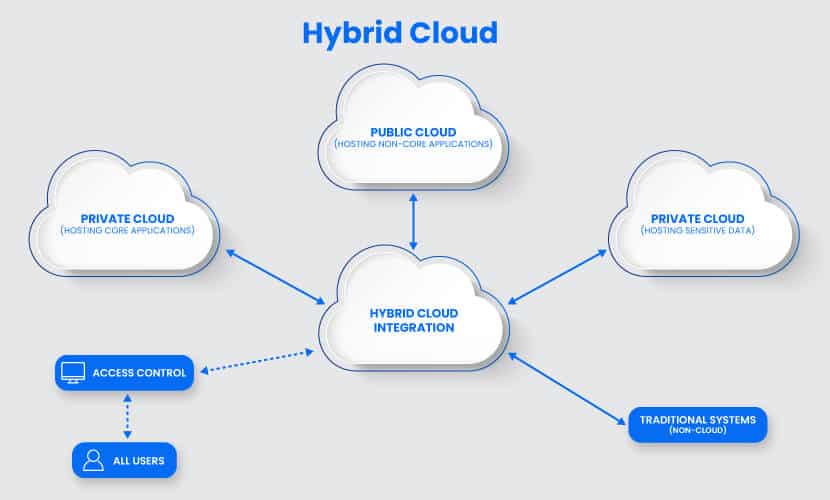

A hybrid cloud is a combination of two or more infrastructures (private, community, VPC, public cloud, and dedicated servers). Every model within a hybrid is a separate system, but they are all a part of the same architecture.

A typical deployment model example of a hybrid solution is when a company stores critical data on a private cloud and less sensitive information on a public cloud. Another use case is when a portion of a firm’s data cannot legally be stored on a public cloud.

The hybrid cloud model is often used for cloud bursting. Cloud bursting allows an organization to run applications on-premises but “burst” into the public cloud in times of heavy load. It is an excellent option for organizations with versatile use cases.
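The cloud bursting idea can be sketched in a few lines of Python. This is a minimal illustration rather than a real scheduler: the function name `split_load` and the capacity figures are assumptions made up for the example. Demand is served on-premises up to a fixed capacity, and only the overflow goes to the public cloud.

```python
def split_load(demand: int, on_prem_capacity: int) -> dict:
    """Split incoming demand between on-premises and public cloud.

    Requests are served on-premises up to capacity; only the overflow
    "bursts" to the public cloud.
    """
    if demand < 0 or on_prem_capacity < 0:
        raise ValueError("demand and capacity must be non-negative")
    on_prem = min(demand, on_prem_capacity)
    return {"on_prem": on_prem, "public_cloud": demand - on_prem}


# Hypothetical numbers: normal load stays on-premises,
# while a spike overflows to the public cloud.
normal = split_load(demand=800, on_prem_capacity=1000)
spike = split_load(demand=1500, on_prem_capacity=1000)
```

In this sketch the public cloud is used only while demand exceeds local capacity, which is why bursting suits workloads with occasional peaks.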

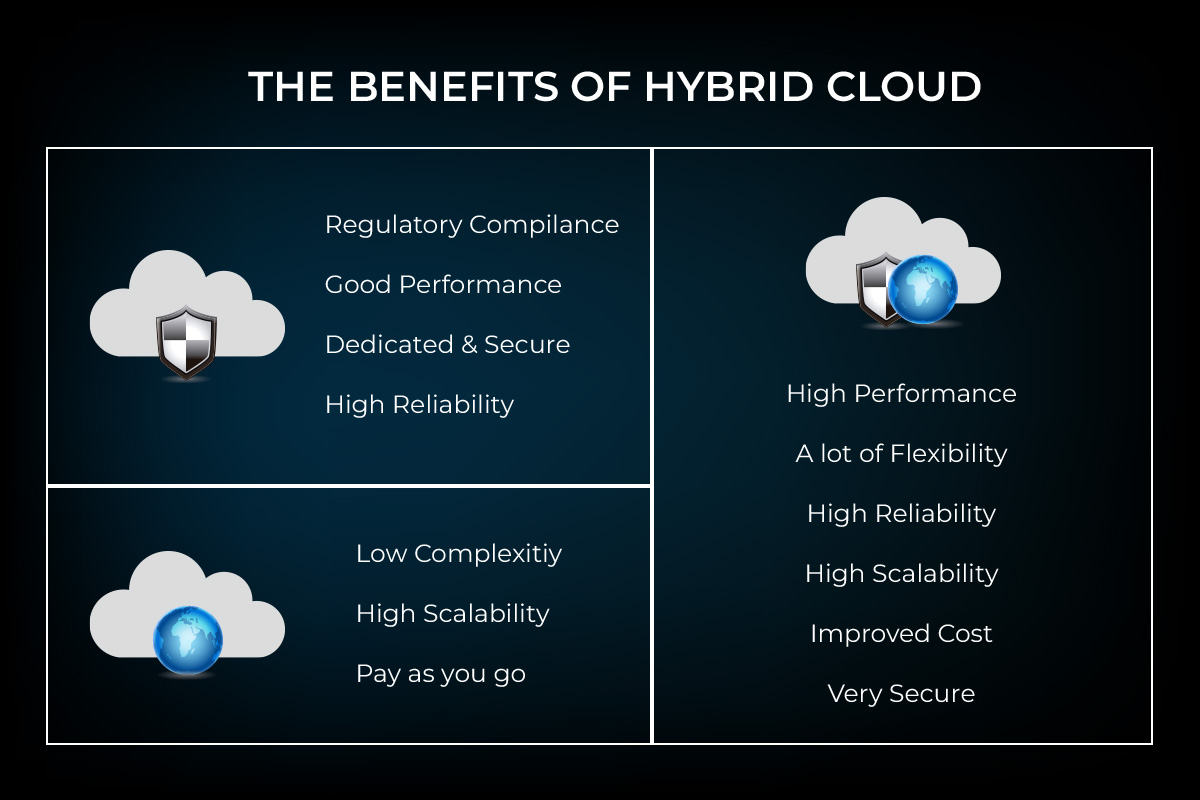

Advantages of Hybrid Cloud

Here are the benefits of a hybrid cloud system:

- Cost-effectiveness: A hybrid solution lowers operational costs by using a public cloud for most workflows.

- Security: It is easier to protect a hybrid cloud from attackers due to segmented storage and workflows.

- Flexibility: This cloud model offers high levels of setup flexibility. Clients can create custom-made solutions that fit their needs entirely.

Disadvantages of Hybrid Cloud

The disadvantages of hybrid solutions are:

- Complexity: A hybrid cloud is complex to set up and manage as you combine two or more different cloud service models.

- Specific use case: A hybrid cloud makes sense only if an organization has versatile use cases or needs to separate sensitive and non-sensitive data.

How to Choose Between Cloud Deployment Models

To choose the best cloud deployment model for your company, start by defining your requirements for:

- Scalability: Is your user activity growing? Does your system run into sudden spikes in demand?

- Ease of use: How skilled is your team? How much time and money are you willing to invest in staff training?

- Privacy: Are there strict privacy rules surrounding the data you collect?

- Security: Do you store any sensitive data that does not belong on a public server?

- Cost: How many resources can you spend on your cloud solution? How much capital can you pay upfront?

- Flexibility: How flexible (or rigid) are your computing, processing, and storage needs?

- Compliance: Are there any notable laws or regulations in your country or industry? Do you need to adhere to compliance standards?

Answers to these questions will help you pick between a public, private, virtual private, community, or hybrid cloud.

Typically, a public cloud is ideal for small and medium businesses, especially if they have limited demands. The larger the organization, the more sense a private cloud or Virtual Private Cloud starts to make.

For bigger businesses that wish to minimize costs, there are compromise options like VPCs and hybrids. If your niche has a community offering, that option is worth exploring.

Invest Wisely in Enterprise Cloud Computing Services

Each cloud deployment model offers a unique value to a business. Now that you have a strong understanding of every option on the market, you can make an informed decision and pick the one with the highest ROI.

If security is your top priority, learn more about Data Security Cloud, the safest cloud option on the market.

Recent Posts

Automating Server Provisioning in Bare Metal Cloud with MAAS (Metal-as-a-Service) by Canonical

As part of the effort to build a flexible, cloud-native ready infrastructure, phoenixNAP collaborated with Canonical on enabling nearly instant OS installation. Canonical’s MAAS (Metal-as-a-Service) solution allows for automated OS installation on phoenixNAP’s Bare Metal Cloud, making it possible to set up a server in less than two minutes.

Bare Metal Cloud is a cloud-native ready IaaS platform that provides access to dedicated hardware on demand. Its automation features, DevOps integrations, and advanced network options enable organizations to build a cloud-native infrastructure that supports frequent releases, agile development, and CI/CD pipelines.

Through MAAS integration, Bare Metal Cloud provides a critical capability for organizations looking to streamline their infrastructure management processes.

What is MAAS?

Allowing for self-service, remote OS installation, MAAS is a popular cloud-native infrastructure management solution. Its key features include automatic discovery of network devices, zero-touch deployment on major OSs, and integration with various IaC tools.

Built to enable API-driven server provisioning, MAAS has a robust architecture that allows for easy infrastructure coordination. Its primary components are Region and Rack, which work together to provide high-bandwidth services to multiple racks and ensure availability. The architecture also contains a central PostgreSQL database, which deals with operator requests.

Through tiered infrastructure, standard protocols such as IPMI and PXE, and integrations with popular IaaS tools, MAAS helps create powerful DevOps environments. Bare Metal Cloud leverages its features to enable nearly instant provisioning of dedicated servers and deliver a cloud-native ready IaaS platform.

How MAAS Works on Bare Metal Cloud

The integration of MAAS with Bare Metal Cloud allows for server provisioning in under 120 seconds and a high level of infrastructure scalability. Rather than building a server automation system from scratch, phoenixNAP relied on MAAS to shorten go-to-market timeframes and ensure an excellent experience for Bare Metal Cloud users.

Designed to bring the cloud experience to bare metal platforms, MAAS enables Bare Metal Cloud users to get full control over their physical servers while having cloud-like flexibility. They can use a command line interface (CLI), a web user interface (web UI), and a REST API to query server properties, deploy operating systems, run custom scripts, and reboot servers.

“phoenixNAP’s Bare Metal Cloud demonstrates the full potential of MAAS,” explained Adam Collard, Engineering Manager, Canonical. “We are excited to support phoenixNAP’s growth in the ecosystem and look forward to working with them to accelerate customer deployments.”

Bare Metal Cloud Features and Usage

The capabilities of MAAS enabled phoenixNAP to automate the server provisioning process and accelerate deployment timeframes of its Bare Metal Cloud. The integration also helped ensure advanced application security and control with consistent performance.

“Incredibly robust and reliable, MAAS is one of the fundamental components of our Bare Metal Cloud,” said Ian McClarty, President of phoenixNAP. “By enabling us to automate OS installation and lifecycle processes for various instance types, MAAS helped us accelerate time to market. We can now offer lightning-fast physical server provisioning to organizations looking to optimize their infrastructure for agile development lifecycles and CI/CD pipelines. Working with the Canonical team was a pleasure at every step of the process, and we look forward to new joint projects in future.”

Bare Metal Cloud is designed with automation in focus and integrates with the most popular IaC tools. It allows for simple server deployment in under 120 seconds, which is enabled by MAAS OS installation automation capabilities. In addition, it includes a range of features designed to support modern IT demands and DevOps approaches to infrastructure creation and management.

Bare Metal Cloud Features

- Single-tenant, non-virtualized environment

- Fully automated, API-driven server provisioning

- Integrations with Terraform, Ansible, and Pulumi

- SDK available on GitHub

- Pay-per-use and reserved instances billing models

- Dedicated hardware — no “noisy neighbors”

- Global scalability

- Cutting edge hardware and network technologies

- Built with market proven and well-established technology partners

- Suited for developers and business critical production environments alike

Looking to deploy a Kubernetes cluster on Bare Metal Cloud?

Download our free white paper titled Automating the Provisioning of Kubernetes Cluster on Bare Metal Servers in Public Cloud.


Infrastructure as Code with Terraform on Bare Metal Cloud

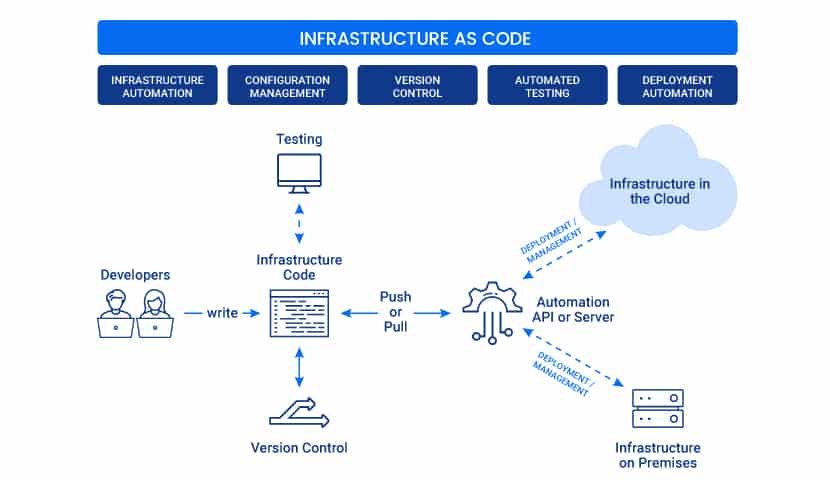

Infrastructure as Code (IaC) simplifies the process of managing virtualized cloud resources. With the introduction of cloud-native dedicated servers, it is now possible to deploy physical machines with the same level of flexibility.

phoenixNAP’s cloud-native dedicated server platform, Bare Metal Cloud (BMC), was designed with IaC compatibility in mind. BMC is fully integrated with HashiCorp Terraform, one of the most widely used IaC tools in DevOps. This integration allows users to leverage a custom-built Terraform provider to deploy BMC servers in minutes with just a couple of lines of code.

Why Infrastructure as Code?

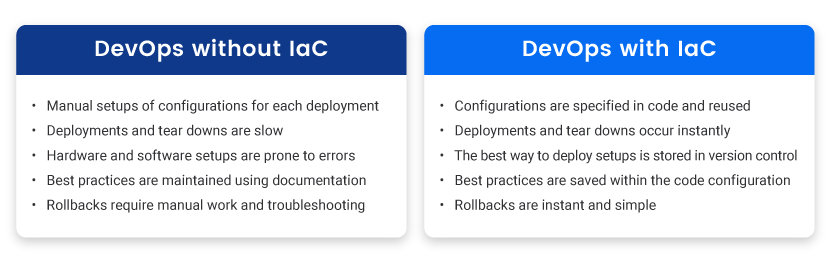

Infrastructure as Code is a method of automating the process of deploying and managing cloud resources through human-readable configuration files. It plays a pivotal role in DevOps, where speed and agility are of the essence.

Before IaC, sys admins deployed everything by hand. Every server, database, load balancer, or network had to be configured manually. Teams now utilize various IaC engines to spin up or tear down hundreds of servers across multiple providers in minutes.

While there are many powerful IaC tools on the market, Terraform stands out as one of the most prominent players in the IaC field.

The Basics of Terraform

Terraform by HashiCorp is an infrastructure as code engine that allows DevOps teams to safely deploy, modify, and version cloud-native resources. Its open source tool is free to use, but most teams choose to use it with Terraform Cloud or Terraform Enterprise, which enable collaboration and governance.

To deploy with Terraform, developers define the desired resources in a configuration file, which is written in HashiCorp Configuration Language (HCL). Terraform then analyzes that file to create an execution plan. Once confirmed by the user, it executes the plan to provision precisely what was defined in the configuration file.

Terraform identifies differences between the desired state and the existing state of the infrastructure. This mechanism plays an essential role in a DevOps pipeline, where maintaining consistency across multiple environments is crucial.
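The comparison between desired and existing state can be illustrated with a small Python sketch. It is a simplified model of the idea only, not Terraform's actual planning logic. The function name `plan`, the resource names, and the `s1.c1.medium` type are hypothetical.

```python
def plan(desired: dict, existing: dict) -> dict:
    """Compare desired and existing resources and list the actions needed."""
    create = sorted(name for name in desired if name not in existing)
    delete = sorted(name for name in existing if name not in desired)
    update = sorted(
        name for name in desired
        if name in existing and desired[name] != existing[name]
    )
    return {"create": create, "update": update, "delete": delete}


# Hypothetical resource names and attributes, for illustration only.
desired_state = {
    "web-1": {"type": "s1.c1.small", "location": "PHX"},
    "web-2": {"type": "s1.c1.small", "location": "PHX"},
}
existing_state = {
    "web-1": {"type": "s1.c1.medium", "location": "PHX"},
    "old-db": {"type": "s1.c1.small", "location": "PHX"},
}

actions = plan(desired_state, existing_state)
```

Here `web-2` would be created, `web-1` updated, and `old-db` removed, so that the existing infrastructure converges on what the configuration describes.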

Deploying Bare Metal Cloud Servers with Terraform

Terraform maintains a growing list of providers that support its software. Providers are custom-built plugins from various service providers that users initialize in their configuration files.

phoenixNAP has its own Terraform provider – pnap. Any Bare Metal Cloud user can use it to deploy and manage BMC servers without using the web-based Bare Metal Cloud Portal. The source code for the phoenixNAP provider and documentation is available on the official Terraform provider page.

Terraform Example Usage with Bare Metal Cloud

To start deploying BMC servers with Terraform, create a BMC account, and install Terraform on your local system or remote server. Before running Terraform, gather necessary authentication data and store it in the config.yaml file. You need the clientId and clientSecret, both of which can be found on your BMC account.

Once everything is set up, start defining your desired BMC resources. To do so, create a Terraform configuration file and declare that you want to use the pnap provider:

    terraform {
      required_providers {
        pnap = {
          source  = "phoenixnap/pnap"
          version = "0.6.0"
        }
      }
    }

    provider "pnap" {
      # Configuration options
    }

The provider block is reserved for provider-level configuration options. The desired state of your BMC infrastructure is described in separate resource blocks.

To deploy the most basic Bare Metal Cloud server configuration, s1.c1.small, with an Ubuntu OS in the Phoenix data center, add the following resource block:

    resource "pnap_server" "My-First-BMC-Server" {
      hostname = "your-hostname"
      os       = "ubuntu/bionic"
      type     = "s1.c1.small"
      location = "PHX"
      ssh_keys = [
        "ssh-rsa..."
      ]
      #action = "powered-on"
    }

The action argument denotes a power action to perform on the server. Supported values are reboot, reset, powered-on, powered-off, and shutdown. Every argument you include needs a corresponding value, but the action argument is optional and does not need to be defined.

To deploy this Bare Metal Cloud instance, run the terraform init CLI command to initialize the working directory and download the pnap provider. Then run terraform plan to review the execution plan and terraform apply to provision the server.

Your Terraform configurations should be stored in a file with a .tf extension. While Terraform uses a domain-specific language for defining configurations, users can also write configuration files in JSON. In that case, the file extension needs to be .tf.json.
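As a rough illustration of the JSON variant, the Python sketch below builds the same server resource as nested JSON objects and prints it. The nesting (block type, then resource type, then resource name, then arguments) follows Terraform's JSON configuration syntax. The file name mentioned in the comment is an assumption, and the values are the placeholders from the example above.

```python
import json

# Same arguments as the HCL resource block, expressed as nested JSON objects:
# block type -> resource type -> resource name -> arguments.
config = {
    "resource": {
        "pnap_server": {
            "My-First-BMC-Server": {
                "hostname": "your-hostname",
                "os": "ubuntu/bionic",
                "type": "s1.c1.small",
                "location": "PHX",
                "ssh_keys": ["ssh-rsa..."],
            }
        }
    }
}

# Save the printed text in a file such as main.tf.json
# (the name is an assumption; the .tf.json extension is what matters).
tf_json = json.dumps(config, indent=2)
print(tf_json)
```

Generating the JSON form can be convenient when configurations are produced by scripts rather than written by hand.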

Benefits of Using Terraform with Bare Metal Cloud


All Terraform configuration files are reusable, scalable, and can be versioned for easier team collaboration on BMC provisioning schemes.

Whether you need to deploy one or hundreds of servers, Terraform and BMC will make it happen. There are no limits to how many servers you can define in your configuration files. You can also use other providers alongside phoenixNAP.

For easier management of complex setups, Terraform has a feature called modules — containers that allow you to define the architecture of your environment in an abstract way. Modules are reusable chunks of code that can call other modules that contain one or more infrastructure objects.

Collaborating on BMC Configurations with Terraform Cloud

Once you’ve learned how to write and provision Terraform configurations, you’ll want to set up a method that allows your entire DevOps team to work more efficiently on deploying new and modifying existing BMC resources.

You can store Terraform configurations in a version control system and run them remotely from Terraform Cloud for free. This helps you reduce the chance of deploying misconfigured resources, improves oversight, and ensures every change is executed reliably from the cloud.

You can also leverage Terraform Cloud’s remote state storage. Terraform state files map Terraform configurations with resources deployed in the real world. Using Terraform Cloud to store state files ensures your team is always on the same page.

Another great advantage of Terraform is that all configuration files are reusable. This makes replicating the same environment multiple times extremely easy. By maintaining consistency across multiple environments, teams can deliver quality code to production faster and safer.

Automate Your Infrastructure

This article gave you a broad overview of how to leverage Terraform’s flexibility to interact with your Bare Metal Cloud resources programmatically. By using the phoenixNAP Terraform provider and Terraform Cloud, you can quickly deploy, configure, and decommission multiple BMC instances with just a couple of lines of code.

This automated approach to infrastructure provisioning improves the speed and agility of DevOps workflows. BMC, in combination with Terraform Cloud, allows teams to focus on building software rather than wasting time waiting around for their dedicated servers to be provisioned manually.


What is Quantum Computing & How Does it Work?

Technology giants like Google, IBM, Amazon, and Microsoft are pouring resources into quantum computing. The goal of quantum computing is to create the next generation of computers and overcome classic computing limits.

Despite the progress, there are still unknown areas in this emerging field.

This article is an introduction to the basic concepts of quantum computing. You will learn what quantum computing is and how it works, as well as what sets a quantum device apart from a standard machine.

What is Quantum Computing? Defined

Quantum computing is an approach to computation based on quantum mechanics, the branch of physics that studies atomic and subatomic particles. Quantum computers perform certain computations at speeds and levels an ordinary computer cannot handle.

These are the main differences between a quantum device and a regular desktop:

- Different architecture: Quantum computers have a different architecture than conventional devices. For example, instead of traditional silicon-based memories or processors, they use different technology platforms, such as superconducting circuits and trapped atomic ions.

- Computationally intensive use cases: A casual user might not have much use for a quantum computer. The computation-heavy focus and complexity of these machines make them suitable for corporate and scientific settings in the foreseeable future.

Unlike a standard computer, its quantum counterpart can perform multiple operations simultaneously. These machines also store more states per unit of data and operate on more efficient algorithms.

Incredible processing power makes quantum computers capable of solving complex tasks and searching through unsorted data.

What is Quantum Computing Used for? Industry Use Cases

The adoption of more powerful computers benefits every industry. However, some areas already stand out as excellent opportunities for quantum computers to make a mark:

- Healthcare: Quantum computers help develop new drugs at a faster pace. DNA research also benefits greatly from using quantum computing.

- Cybersecurity: Quantum programming can advance data encryption. The new Quantum Key Distribution (QKD) system, for example, uses light signals to detect cyber attacks or network intruders.

- Finance: Companies can optimize their investment portfolios with quantum computers. Improvements in fraud detection and simulation systems are also likely.

- Transport: Quantum computers can lead to progress in traffic planning systems and route optimization.

What are Qubits?

The key behind a quantum computer’s power is its ability to create and manipulate quantum bits, or qubits.

Like the binary bit of 0 and 1 in classic computing, a qubit is the basic building block of quantum computing. Whereas regular bits can either be in the state of 0 or 1, a qubit can also be in the state of both 0 and 1.

Here is the state of a qubit q0:

$q_0 = a|0\rangle + b|1\rangle$, where $a^2 + b^2 = 1$

The likelihood of q0 being 0 when measured is $a^2$. The probability of it being 1 when measured is $b^2$. Due to this probabilistic nature, a qubit can be both 0 and 1 at the same time.

For a qubit q0 where a = 1 and b = 0, q0 is equivalent to a classical bit of 0. There is a 100% chance to get to a value of 0 when measured. If a = 0 and b = 1, then q0 is equivalent to a classical bit of 1. Thus, the classical binary bits of 0 and 1 are a subset of qubits.
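The relationship between the amplitudes and the measurement probabilities can be checked with a short Python sketch. It assumes real-valued amplitudes a and b, as in the formula above. The function name `measurement_probabilities` and the 0.6 and 0.8 example values are chosen for illustration.

```python
import math


def measurement_probabilities(a: float, b: float) -> tuple:
    """Return (P(0), P(1)) for a qubit a|0> + b|1> with real amplitudes."""
    if not math.isclose(a * a + b * b, 1.0, abs_tol=1e-9):
        raise ValueError("amplitudes must satisfy a^2 + b^2 = 1")
    return a * a, b * b


classical_zero = measurement_probabilities(1.0, 0.0)  # behaves like bit 0
classical_one = measurement_probabilities(0.0, 1.0)   # behaves like bit 1
mixed = measurement_probabilities(0.6, 0.8)           # roughly 0.36 and 0.64
```

The first two calls reproduce the classical bits described above, while the third shows a qubit that is measured as 1 more often than as 0.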

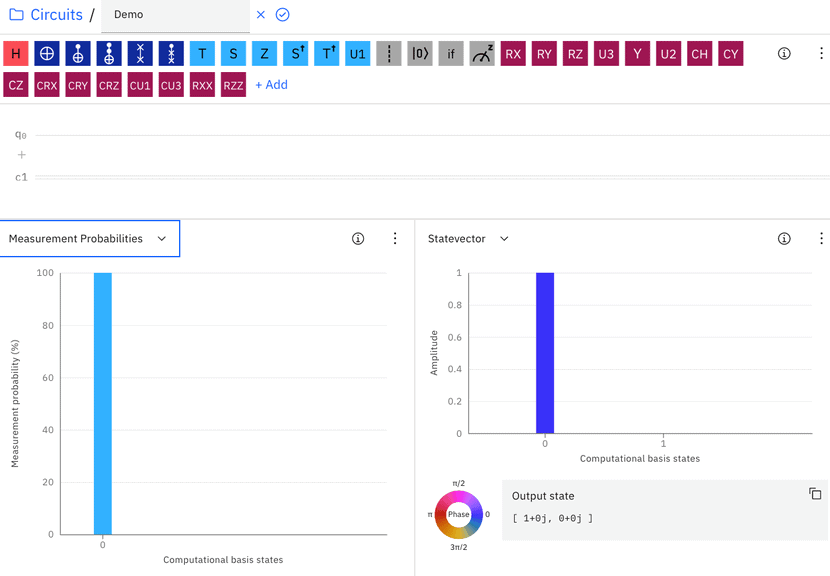

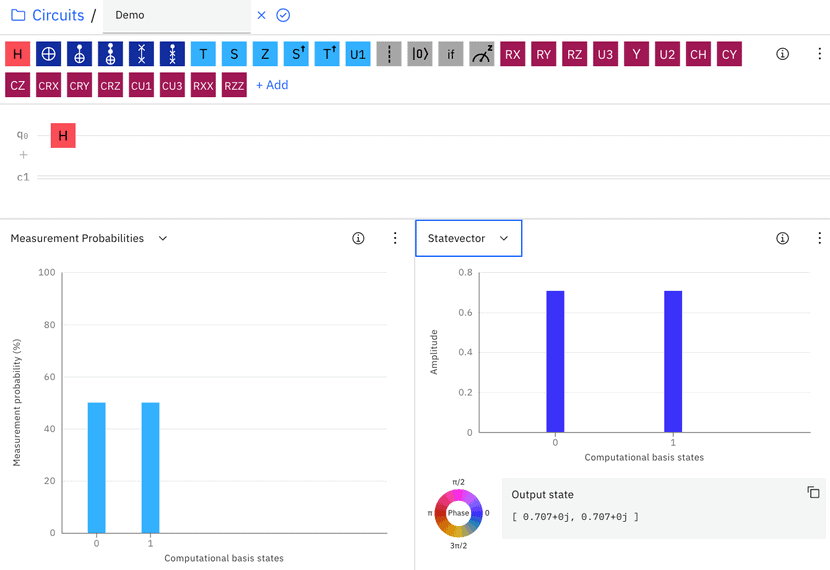

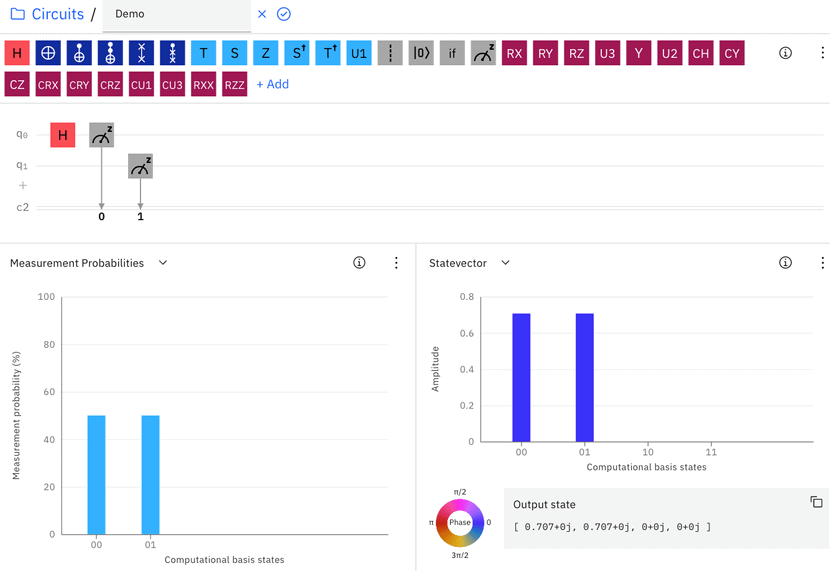

Now, let’s look at an empty circuit in the IBM Circuit Composer with a single qubit q0 (Figure 1). The “Measurement probabilities” graph shows that q0 has a 100% chance of being measured as 0. The “Statevector” graph shows the values of a and b, which correspond to the 0 and 1 “computational basis states” column, respectively.

In the case of Figure 1, a is equal to 1 and b to 0. So, q0 has a probability of $1^2 = 1$ to be measured as 0.

A connected group of qubits provides more processing power than the same number of binary bits. The difference in processing is due to two quantum properties: superposition and entanglement.

Superposition in Quantum Computing

When both a and b lie strictly between 0 and 1, the qubit is in a so-called superposition state. In this state, the qubit can jump to either 0 or 1 when measured. The probabilities of getting 0 and 1 are $a^2$ and $b^2$, respectively.

The Hadamard gate is one of the basic gates in quantum computing. It moves the qubit from a non-superposition state of 0 or 1 into a superposition state in which there is a 0.5 probability of measuring 0 and a 0.5 probability of measuring 1.

Let’s look at the effect of adding the Hadamard Gate (shown as a red H) on q0 where q0 is currently in a non-superposition state of 0 (Figure 2). After passing the Hadamard gate, the “Measurement Probabilities” graph shows that there is a 50% chance of getting a 0 or 1 when q0 is measured.

The “Statevector” graph shows the values of a and b, which are both $\sqrt{0.5} \approx 0.707$. The probability of the qubit being measured as 0 or as 1 is $0.707^2 \approx 0.5$ in each case, so q0 is now in a superposition state.
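The effect of the Hadamard gate on the amplitudes can be reproduced numerically. The sketch below uses the standard Hadamard transformation, which maps (a, b) to ((a + b)/√2, (a − b)/√2). That formula is not stated in this article, and the function and variable names are illustrative.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (a, b)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


zero = (1.0, 0.0)                    # non-superposition state of 0
plus = hadamard(zero)                # both amplitudes are about 0.707
probs = tuple(x * x for x in plus)   # both probabilities are about 0.5
back = hadamard(plus)                # a second Hadamard returns to state 0
```

Applying the gate twice brings the qubit back to where it started, which shows that the gate itself is reversible. The 50/50 randomness only appears once the qubit is measured.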

What Are Measurements?

When we measure a qubit in a superposition state, the qubit jumps to a non-superposition state. A measurement changes the qubit and forces it out of superposition to the state of either 0 or 1.

If a qubit is in a non-superposition state of 0 or 1, measuring it will not change anything. In that case, the qubit is already in a state of 100% being 0 or 1 when measured.

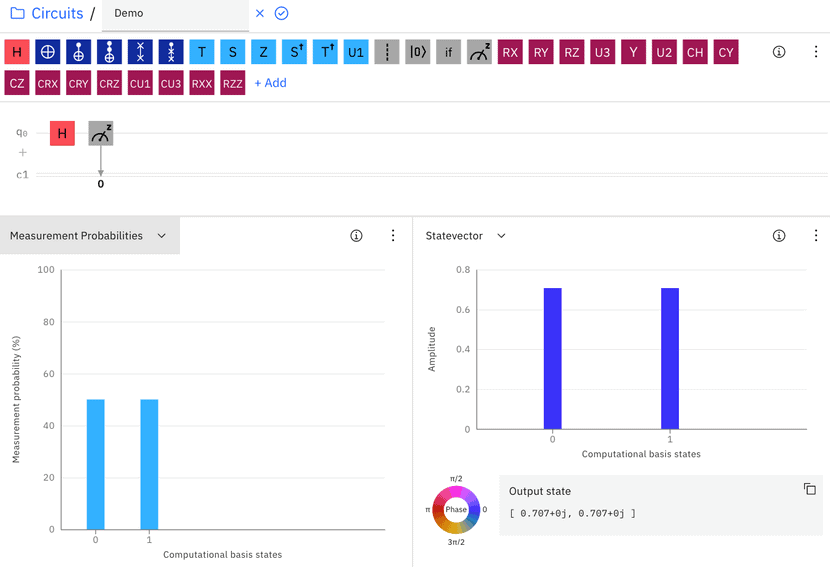

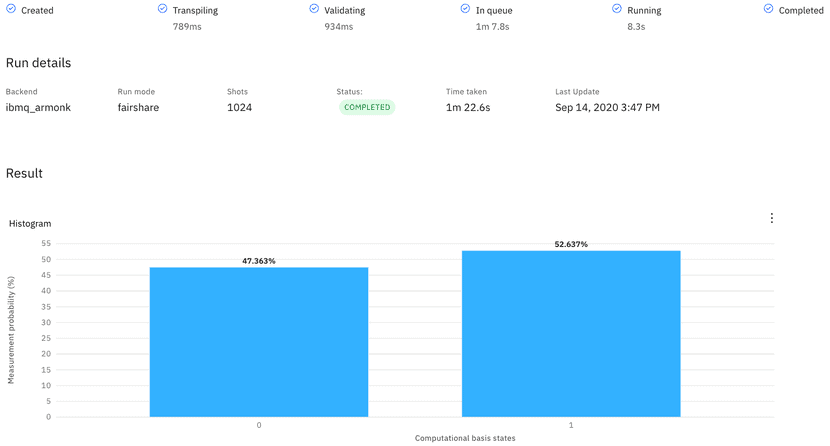

Let us add a measurement operation into the circuit (Figure 3). We measure q0 after the Hadamard gate and output the value of the measurement to bit 0 (a classical bit) in c1:

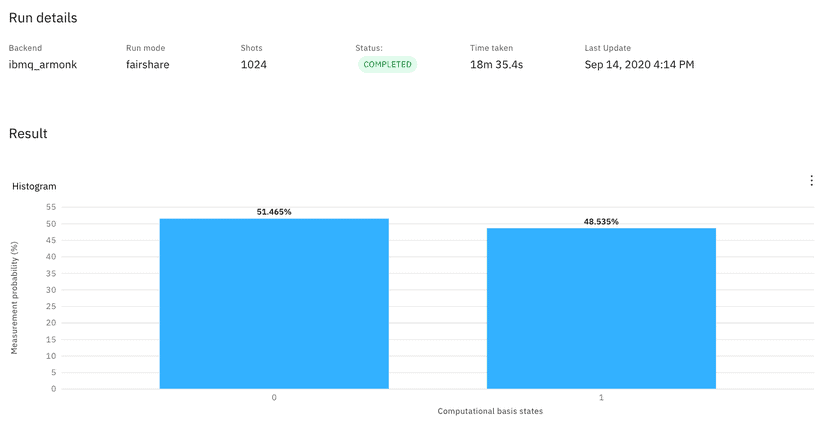

To see the results of the q0 measurement after the Hadamard Gate, we send the circuit to run on an actual quantum computer called “ibmq_armonk.” By default, there are 1024 runs of the quantum circuit. The result (Figure 4) shows that about 47.4% of the time, the q0 measurement is 0. The other 52.6% of the time, it is measured as 1.

The second run (Figure 5) yields a different distribution of 0 and 1, but still close to the expected 50/50 split.
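The run-to-run variation can be imitated on a classical machine by sampling. The Python sketch below is an idealized model: it draws 1024 outcomes with a 0.5 probability of 0 and does not model the noise of real quantum hardware. The function name `run_shots` and the seeds are arbitrary choices for the example.

```python
import random


def run_shots(p_zero: float, shots: int = 1024, seed=None) -> dict:
    """Simulate repeated measurements of a qubit with P(0) = p_zero."""
    rng = random.Random(seed)
    counts = {"0": 0, "1": 0}
    for _ in range(shots):
        outcome = "0" if rng.random() < p_zero else "1"
        counts[outcome] += 1
    return counts


# Two independent runs of 1024 shots each; the seeds are arbitrary.
first = run_shots(0.5, seed=1)
second = run_shots(0.5, seed=2)
print(first, second)
```

Each run gives slightly different counts that hover around an even split, which matches the behavior seen in the two hardware runs.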

Entanglement in Quantum Computing

If two qubits are in an entangled state, the measurement of one qubit instantly “collapses” the value of the other. The same effect happens even if the two entangled qubits are far apart.

If we measure a qubit (as either 0 or 1) in an entangled state, we also learn the value of the other qubit. There is no need to measure the second qubit. If we measure the other qubit after the first one, the probability of getting the expected result is 1.

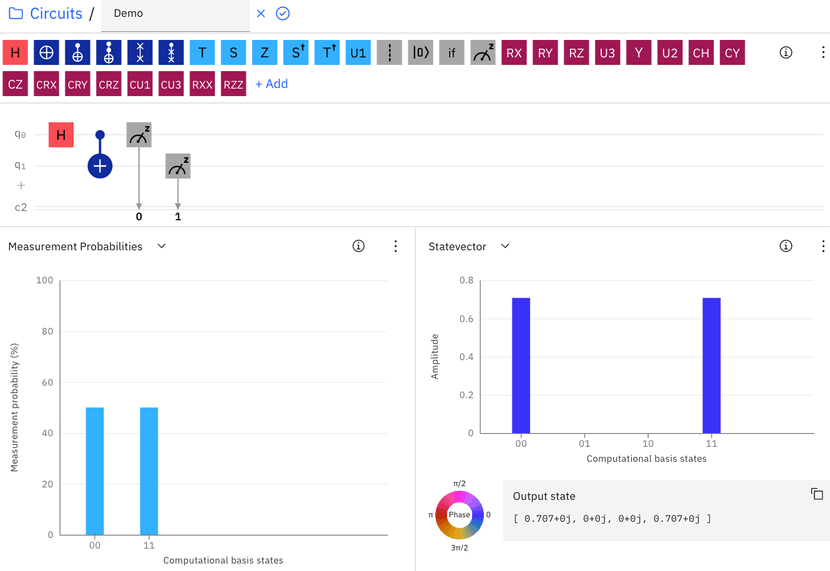

Let us look at an example. The CNOT gate is a quantum operation that can put two unentangled qubits into an entangled state. To demonstrate this, we first add another qubit, q1, which is initialized to 0 by default. Before the CNOT gate, the two qubits are not entangled, so q0 has a 0.5 chance of being 0 or 1 due to the Hadamard gate, while q1 is always 0. The “Measurement Probabilities” graph (Figure 6) shows that (q1, q0) is (0, 0) or (0, 1) with a probability of 50% each:

Then we add the CNOT gate (shown as a blue dot and the plus sign) that takes the output of q0 from the Hadamard gate and q1 as inputs. The “Measurement Probabilities” graph now shows that there is a 50% chance of (q1, q0) being (0, 0) and 50% of being (1, 1) when measured (Figure 7):

There is zero chance of getting (0, 1) or (1, 0). Once we determine the value of one qubit, we know the other’s value because the two must be equal. In such a state, q0 and q1 are entangled.
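The probabilities above can be checked by hand with a small state-vector calculation. The following sketch is an illustration in plain Python rather than the circuit composer used for the figures; the function names and the dictionary layout keyed by (q1, q0) are assumptions chosen for readability. It applies the Hadamard gate to q0 and then a CNOT with q0 as the control and q1 as the target:

```python
import math

def hadamard_on_q0(state):
    """Apply H to q0. `state` maps (q1, q0) bit pairs to amplitudes."""
    s = 1 / math.sqrt(2)
    out = {(q1, q0): 0.0 for q1 in (0, 1) for q0 in (0, 1)}
    for (q1, q0), amp in state.items():
        out[(q1, 0)] += s * amp
        out[(q1, 1)] += s * amp * (-1 if q0 == 1 else 1)
    return out

def cnot_q0_controls_q1(state):
    """Flip q1 whenever the control qubit q0 is 1."""
    return {(q1 ^ q0, q0): amp for (q1, q0), amp in state.items()}

# Both qubits start at 0, so all amplitude sits on (q1, q0) = (0, 0).
state = {(0, 0): 1.0, (0, 1): 0.0, (1, 0): 0.0, (1, 1): 0.0}
state = cnot_q0_controls_q1(hadamard_on_q0(state))

probabilities = {bits: abs(amp) ** 2 for bits, amp in state.items()}
for bits, p in sorted(probabilities.items()):
    print(bits, round(p, 3))
```

Only (0, 0) and (1, 1) end up with a non-zero probability, which is the signature of the entangled state described above.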

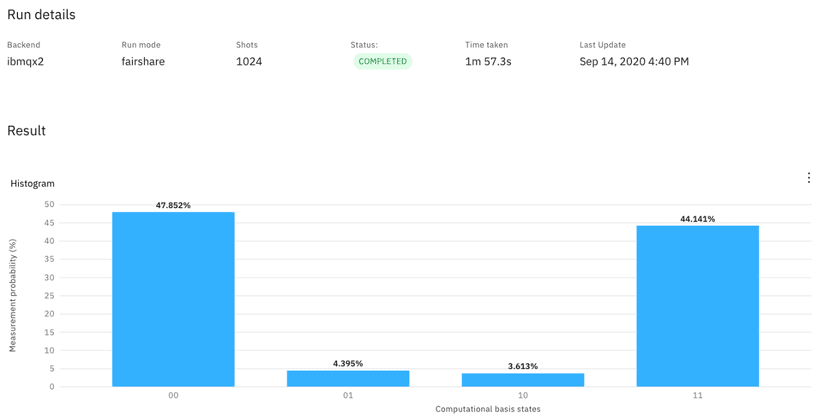

Let us run this on an actual quantum computer and see what happens (Figure 8):

We are close to a 50/50 distribution between the ‘00’ and ‘11’ states. We also see unexpected occurrences of ‘01’ and ‘10’ due to the quantum computer’s high error rates. While error rates for classical computers are almost non-existent, high error rates are the main challenge of quantum computing.

The Bell Circuit is Only a Starting Point

The circuit shown in the ‘Entanglement’ section is called the Bell Circuit. Even though it is basic, that circuit shows a few fundamental concepts and properties of quantum computing, namely qubits, superposition, entanglement, and measurements. The Bell Circuit is often cited as the Hello World program for quantum computing.

By now, you probably have many questions, such as:

- How do we physically represent the superposition state of a qubit?

- How do we physically measure a qubit, and why would that force a qubit into 0 or 1?

- What exactly is the |0> and |1> in the formulation of qubit?

- Why do a² and b² correspond to the chance of a qubit being measured as 0 and 1?

- What are the mathematical representations of the Hadamard and CNOT gates? Why do gates put qubits into superposition and entanglement states?

- Can we explain the phenomenon of entanglement?

There are no shortcuts to learning quantum computing. The field touches on complex topics spanning physics, mathematics, and computer science.

There is an abundance of good books and video tutorials that introduce the technology. These resources typically cover prerequisite concepts like linear algebra, quantum mechanics, and binary computing.

In addition to books and tutorials, you can also learn a lot from code examples. Solutions to financial portfolio optimization and vehicle routing, for example, are great starting points for learning about quantum computing.

The Next Step in Computer Evolution

Quantum computers have the potential to exceed even the most advanced supercomputers. Quantum computing can lead to breakthroughs in science, medicine, machine learning, construction, transport, finances, and emergency services.

The promise is apparent, but the technology is still far from being applicable to real-life scenarios. New advances emerge every day, though, so expect quantum computing to cause significant disruptions in years to come.

Recent Posts

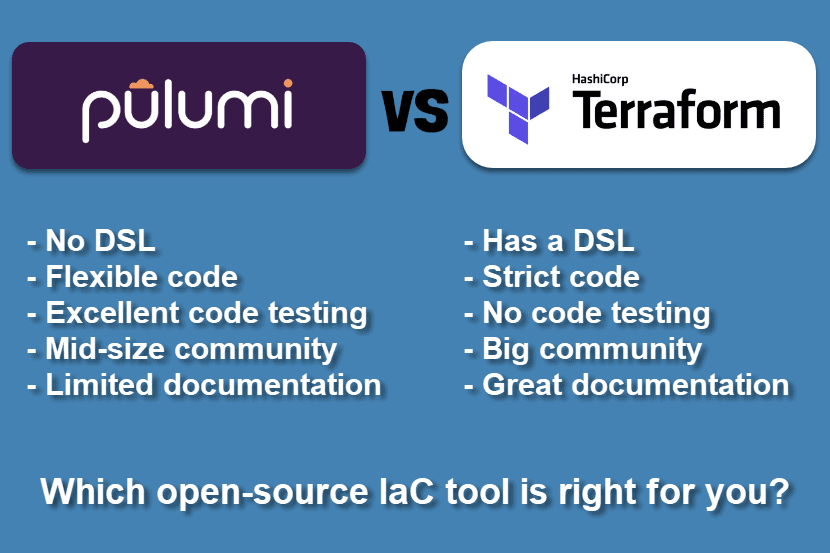

Pulumi vs Terraform: Comparing Key Differences

Terraform and Pulumi are two popular Infrastructure as Code (IaC) tools used to provision and manage virtual environments. Both tools are open source, widely used, and provide similar features. However, it isn’t easy to choose between Pulumi and Terraform without a detailed comparison.

Below is an examination of the main differences between Pulumi and Terraform. The article analyzes which tool performs better in real-life use cases and offers more value to an efficient software development life cycle.

Key Differences Between Pulumi and Terraform

- Pulumi does not have a domain-specific language. Developers build infrastructure in Pulumi with general-purpose languages such as Go, .NET, JavaScript, etc. Terraform, on the other hand, uses its own HashiCorp Configuration Language (HCL).

- Terraform follows a strict code guideline. Pulumi is more flexible in that regard.

- Terraform is well documented and has a vibrant community. Pulumi has a smaller community and is not as well documented.

- Terraform is easier for state file troubleshooting.

- Pulumi provides superior built-in testing support due to not using a domain-specific language.

What is Pulumi?

Pulumi is an open-source IaC tool for designing, deploying and managing resources on cloud infrastructure. The tool supports numerous public, private, and hybrid cloud providers, such as AWS, Azure, Google Cloud, Kubernetes, phoenixNAP Bare Metal Cloud, and OpenStack.

Pulumi is used to create traditional infrastructure elements such as virtual machines, networks, and databases. The tool is also used for designing modern cloud components, including containers, clusters, and serverless functions.

Although Pulumi programs are written in imperative programming languages, the tool is used for declarative IaC. The user defines the desired state of the infrastructure, and Pulumi builds up the requested resources.

What is Terraform?

Terraform is a popular open-source IaC tool for building, modifying, and versioning virtual infrastructure.

The tool works with all major cloud providers. Terraform provisions everything from low-level components, such as storage and networking, to high-level resources, such as DNS entries. Building environments with Terraform is user-friendly and efficient. Users can also manage multi-cloud or multi-offering environments with this tool.

How to Install Terraform?

Learn how to get started with Terraform in our guide How to Install Terraform on CentOS/Ubuntu.

Terraform is a declarative IaC tool. Users write configuration files to describe the needed components to Terraform. The tool then generates a plan describing the required steps to reach the desired state. If the user agrees with the outline, Terraform executes the configuration and builds the desired infrastructure.
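The plan step can be pictured as a comparison between the desired configuration and the state the tool already knows about. The sketch below is a simplified illustration in Python, not Terraform's actual planning algorithm; the function name `make_plan`, the resource names, and the instance sizes are all hypothetical.

```python
def make_plan(desired, current):
    """Compare the desired configuration with the recorded state and list the required actions."""
    plan = []
    for name, config in sorted(desired.items()):
        if name not in current:
            plan.append(("create", name))
        elif current[name] != config:
            plan.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            plan.append(("delete", name))
    return plan

# Hypothetical desired configuration and currently recorded state.
desired = {"web": {"size": "t2.micro"}, "db": {"size": "t2.small"}}
current = {"web": {"size": "t2.nano"}, "cache": {"size": "t2.micro"}}

plan = make_plan(desired, current)
for action, name in plan:
    print(f"{action}: {name}")
```

The user reviews the listed actions first, and only an approved plan is executed.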

Pulumi vs Terraform Comparison

While both tools serve the same purpose, Pulumi and Terraform differ in several ways. Here are the most prominent differences between the two infrastructure as code tools:

1. Unlike Terraform, Pulumi Does Not Have a DSL

To use Terraform, a developer must learn a domain-specific language (DSL) called HashiCorp Configuration Language (HCL). HCL has the reputation of being easy to start with but hard to master.

In contrast, Pulumi allows developers to use general-purpose languages such as JavaScript, TypeScript, .NET, Python, and Go. Familiar languages allow familiar constructs, such as for loops, functions, and classes. All these functionalities are available with HCL too, but their use requires workarounds that complicate the syntax.

The lack of a domain-specific language is the main selling point of Pulumi. By allowing users to stick with what they know, Pulumi cuts down on boilerplate code and encourages the best programming practices.

2. Different Types of State Management

With Terraform, state files are by default stored on the local hard drive in the terraform.tfstate file. With Pulumi, users sign up for a free account on the official website, and state files are stored online.

By enabling users to store state files via a free account, Pulumi offers many functionalities. There is a detailed overview of all resources, and users have insight into their deployment history. Each deployment provides an analysis of configuration details. These features enable efficient managing, viewing, and monitoring activities.

What's a State File?

State files help IaC tools map out the configuration requirements to real-world resources.

To enjoy similar benefits with Terraform, you must move away from the default local hard drive setup. To do that, use a Terraform Cloud account or rely on a third-party cloud storing provider. Small teams of up to five users can get a free version of Terraform Cloud.

Pulumi requires a paid account for any setup with more than a single developer. Pulumi’s paid version offers additional benefits. These include team sharing capabilities, Git and Slack integrations, and support for features that integrate the IaC tool into CI/CD deployments. The team account also enables state locking mechanisms.

3. Pulumi Offers More Code Versatility

Once the infrastructure is defined, Terraform guides users to the desired declarative configuration. The code is always clean and short. Problems arise when you try to implement certain conditional situations as HCL is limited in that regard.

Pulumi allows users to write code with a standard programming language, so numerous methods are available for reaching the desired parameters.

4. Terraform is Better at Structuring Large Projects

Terraform allows users to split projects into multiple files and modules to create reusable components. Terraform also enables developers to reuse code files for different environments and purposes.

Pulumi structures the infrastructure as either a monolithic project or micro-projects. Different stacks act as different environments. When using higher-level Pulumi extensions that map to multiple resources, there is no way to deserialize the stack references back into resources.

5. Terraform Provides Better State File Troubleshooting

When using an IaC tool, running into a corrupt or inconsistent state is inevitable. An inconsistent state is usually caused by a crash during an update, a bug, or drift introduced by a bad manual change.

Terraform provides several commands for dealing with a corrupt or inconsistent state:

- refresh handles drift by adjusting the known state to match the real infrastructure state.

- state {rm,mv} is used to modify the state file manually.

- import finds an existing cloud resource and imports it into your state.

- taint/untaint marks individual resources as requiring recreation.

Pulumi also offers several CLI commands in the case of a corrupt or inconsistent state:

- refresh works in the same way as Terraform’s refresh.

- state delete removes the resource from the state file.

Pulumi has no equivalent of taint/untaint. For any failed update, a user needs to edit the state file manually.
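To make the idea behind a refresh operation concrete, the sketch below compares a recorded state with what actually exists and updates the record accordingly. It is a conceptual illustration in Python, not the implementation used by either tool; the function name `refresh` and the resource data are hypothetical.

```python
def refresh(state, real):
    """Return a copy of `state` updated to match `real`, plus the names that drifted."""
    refreshed, drifted = {}, []
    for name, recorded in state.items():
        actual = real.get(name)
        if actual is None:
            drifted.append(name)  # resource was deleted outside the tool
            continue
        if actual != recorded:
            drifted.append(name)  # resource was changed by hand
        refreshed[name] = dict(actual)
    return refreshed, drifted

# Hypothetical recorded state versus the real infrastructure.
state = {"web": {"size": "t2.micro"}, "db": {"size": "t2.small"}}
real = {"web": {"size": "t2.large"}}

refreshed, drifted = refresh(state, real)
print("drifted resources:", drifted)
print("refreshed state:", refreshed)
```

After the refresh, the recorded state reflects the manual resize of the web server and no longer lists the database that was removed by hand.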

6. Pulumi Offers Better Built-In Testing

As Pulumi uses common programming languages, the tool supports unit tests with any framework supported by the user’s programming language of choice. For integration tests, Pulumi only supports writing tests in Go.

Terraform does not offer official testing support. To test an IaC environment, users must rely on third-party libraries like Terratest and Kitchen-Terraform.
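When infrastructure is described in a general-purpose language, parts of it can be checked with an ordinary unit test framework. The sketch below uses Python's built-in `unittest` module. The helper `build_ingress_rules` and its rule format are hypothetical and do not call the Pulumi SDK; the point is only to show the kind of check a team might automate.

```python
import unittest

def build_ingress_rules(admin_cidr):
    """Hypothetical helper that returns the ingress rules for a web server."""
    return [
        {"protocol": "tcp", "from_port": 22, "to_port": 22, "cidr_blocks": [admin_cidr]},
        {"protocol": "tcp", "from_port": 443, "to_port": 443, "cidr_blocks": ["0.0.0.0/0"]},
    ]

class IngressRulesTest(unittest.TestCase):
    def test_ssh_is_not_open_to_the_world(self):
        rules = build_ingress_rules("10.0.0.0/8")
        ssh_rules = [r for r in rules if r["from_port"] <= 22 <= r["to_port"]]
        self.assertTrue(ssh_rules)
        for rule in ssh_rules:
            self.assertNotIn("0.0.0.0/0", rule["cidr_blocks"])

# Run the test case programmatically and keep the result for inspection.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(IngressRulesTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

A test like this fails as soon as someone opens SSH to every address, before any resource is deployed.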

7. Terraform Has Better Documentation and a Bigger Community

When compared to Terraform, the official Pulumi documentation is still limited. The best resources for the tool are the examples found on GitHub and the Pulumi Slack.

The size of the community also plays a significant role in terms of helpful resources. Terraform has been a widely used IaC tool for years, so its community grew with its popularity. Pulumi’s community is still nowhere close to that size.

8. Deploying to the Cloud

Pulumi allows users to deploy resources to the cloud from a local device. By default, Terraform requires the use of its SaaS platform to deploy components to the cloud.

If a user wishes to deploy from a local device with Terraform, the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY variables need to be added to the Terraform Cloud environment. This process is not a natural fit with federated SSO accounts for Amazon Web Services (AWS). Security concerns over a third-party system having access to your cloud are also worth noting.

The common workaround is to use Terraform Cloud solely for storing state information. This option, however, comes at the expense of other Terraform Cloud features.

| | Pulumi | Terraform |
|---|---|---|
| Publisher | Pulumi | HashiCorp |
| Method | Push | Push |
| IaC approach | Declarative | Declarative |
| Price | Free for one user, three paid packages for teams | Free for up to five users, two paid packages for larger teams |
| Written in | TypeScript, Python, Go | Go |
| Source | Open | Open |
| Domain-Specific Language (DSL) | No | Yes (HashiCorp Configuration Language) |
| Main advantage | Code in a familiar programming language, great out-of-the-box GUI | Pure declarative IaC tool, works with all major cloud providers, lets you create infrastructure building blocks |
| Main disadvantage | Still unpolished, documentation lacking in places | HCL limits coding freedom and needs to be mastered to use advanced features |
| State files management | State files are stored via a free account | State files are by default stored on a local hard drive |
| Community | Mid-size | Large |
| Ease of use | The use of JavaScript, TypeScript, .NET, Python, and Go keeps IaC familiar | HCL is a complex language, albeit with a clean syntax |
| Modularity | Problematic with higher-level Pulumi extensions | Ideal due to reusable components |
| Documentation | Limited, with best resources found on Pulumi Slack and GitHub | Excellent official documentation |
| Code versatility | As users write code in different languages, there are multiple ways to reach the desired state | HCL leaves little room for versatility |
| Deploying to the cloud | Can be done from a local device | Must be done through the SaaS platform |
| Testing | Test with any framework that supports the used programming language | Must be performed via third-party tools |

Using Pulumi and Terraform Together

It is possible to run IaC by using both Pulumi and Terraform at the same time. Using both tools requires some workarounds, though.

Pulumi supports consuming local or remote Terraform state from Pulumi programs. This support helps with the gradual adoption of Pulumi if you decide to continue managing a subset of your virtual infrastructure with Terraform.

For example, you might decide to keep your VPC and low-level network definitions written in Terraform to avoid disrupting the infrastructure. Using the state reference support, you can design high-level infrastructure with Pulumi and still consume the Terraform-powered VPC information. In that case, the co-existence of Pulumi and Terraform is easy to manage and automate.
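To give a sense of what consuming Terraform state involves, the sketch below reads the output values from a state document with Python's `json` module. It is an illustration only and does not use Pulumi's own state reference support. The function name `read_terraform_outputs`, the trimmed state document, and the IDs in it are made up for this example.

```python
import json

def read_terraform_outputs(state_text):
    """Return {output_name: value} from the JSON text of a Terraform state file."""
    state = json.loads(state_text)
    return {name: entry["value"] for name, entry in state.get("outputs", {}).items()}

# A trimmed, made-up state document; real state files contain many more fields.
example_state = """
{
  "version": 4,
  "outputs": {
    "vpc_id": {"value": "vpc-0123456789abcdef0", "type": "string"},
    "subnet_ids": {"value": ["subnet-aaa", "subnet-bbb"], "type": ["list", "string"]}
  },
  "resources": []
}
"""

outputs = read_terraform_outputs(example_state)
print(outputs["vpc_id"])
print(outputs["subnet_ids"])
```

Higher-level infrastructure code can then take the VPC and subnet identifiers as inputs without redefining the network itself.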

Conclusion: Both are Great Infrastructure as Code Tools

Both Terraform and Pulumi offer similar functionalities. Pulumi is a less rigid tool focused on functionality. Terraform is more mature, better documented, and has strong community support.

However, what sets Pulumi apart is its fit with the DevOps culture.

By expressing infrastructure with popular programming languages, Pulumi bridges the gap between Dev and Ops. It provides a common language between development and operations teams. In contrast, Terraform reinforces silos across departments, pushing development and operations teams further apart with its domain-specific language.

From that point of view, Pulumi is a better fit for standardizing the DevOps pipeline across the development life cycle. The tool reinforces uniformity and leads to quicker software development with less room for error.

What Is Infrastructure as Code? Benefits, Best Practices, & Tools

Infrastructure as Code (IaC) enables developers to provision IT environments with several lines of code. Unlike manual infrastructure setups that require hours or even days to configure, it takes minutes to deploy an IaC system.

This article explains the concepts behind Infrastructure as Code. You will learn how IaC works and how automatic configurations enable teams to develop software with higher speed and reduced cost.

What is Infrastructure as Code (IaC)?

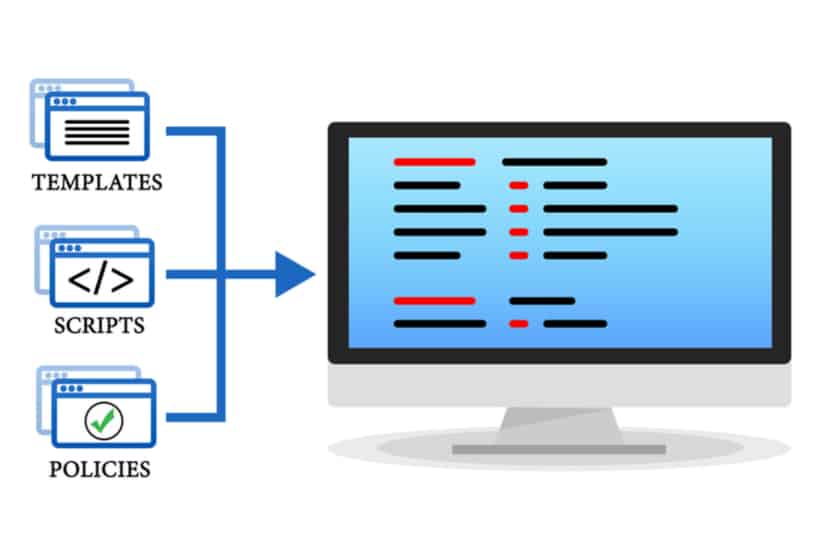

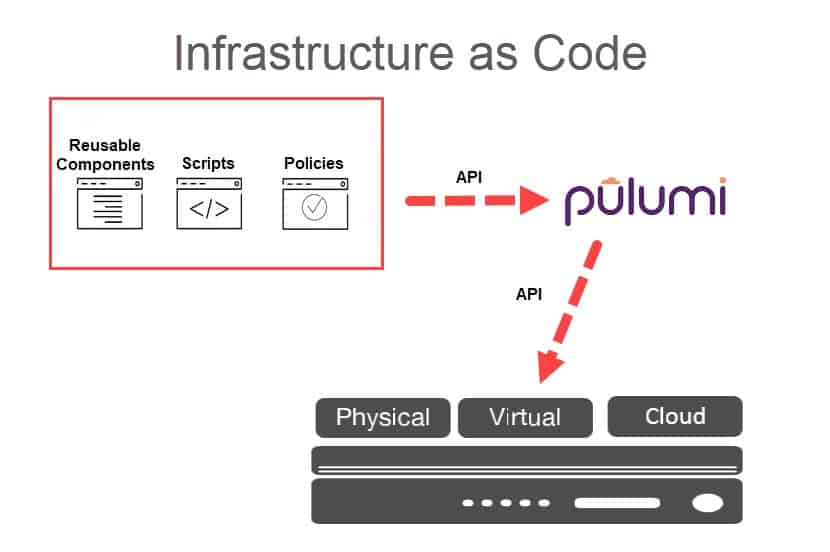

Infrastructure as Code is the process of provisioning and configuring an environment through code instead of manually setting up the required devices and systems. Once code parameters are defined, developers run scripts, and the IaC platform builds the cloud infrastructure automatically.

Such automatic IT setups enable teams to quickly create the desired cloud setting to test and run their software. Infrastructure as Code allows developers to generate any infrastructure component they need, including networks, load balancers, databases, virtual machines, and connection types.

How Infrastructure as Code Works

Here is a step-by-step explanation of how creating an IaC environment works:

- A developer defines the configuration parameters in a domain-specific language (DSL).

- The instruction files are sent to a master server, a management API, or a code repository.

- The IaC platform follows the developer’s instructions to create and configure the infrastructure.

With IaC, users don’t need to configure an environment every time they want to develop, test, or deploy software. All infrastructure parameters are saved in the form of files called manifests.

Like all code files, manifests are easy to reuse, edit, copy, and share. Manifests make building, testing, staging, and deploying infrastructure quicker and more consistent.

Developers codify the configuration files and store them in version control. If someone edits a file, pull requests and code review workflows can check the correctness of the changes.

What Issues Does Infrastructure as Code Solve?

Infrastructure as Code solves the three main issues of manual setups:

- High price

- Slow installs

- Environment inconsistencies

High Price

Manually setting up each IT environment is expensive. You need dedicated engineers for setting up the hardware and software. Network and hardware technicians require supervisors, so there is more management overhead.

With Infrastructure as Code, a centrally managed tool sets up an environment. You pay only for the resources you consume, and you can quickly scale your resources up and down.

Slow Installs

To manually set up an infrastructure, engineers first need to rack the servers. They then manually configure the hardware and network to the desired settings. Only then can engineers start to meet the requirements of the operating system and the hosted application.

This process is time-consuming and prone to mistakes. IaC reduces the setup time to minutes and automates the process.

Environment Inconsistencies

Whenever several people are manually deploying configurations, inconsistencies are bound to occur. Over time, it gets difficult to track and reproduce the same environments. These inconsistencies lead to critical differences between development, QA, and production environments. Ultimately, the differences in settings inevitably cause deployment issues.

Infrastructure as Code ensures continuity as environments are provisioned and configured automatically with no room for human error.

The Role of Infrastructure as Code in DevOps

Infrastructure as Code is essential to DevOps. Agile processes and automation are possible only if there is a readily available IT infrastructure to run and test the code.

With IaC, DevOps teams enjoy better testing, shorter recovery times, and more predictable deployments. These factors are vital for quick-paced software delivery. Uniform IT environments lower the chances of bugs arising in the DevOps pipeline.

The IaC approach lets DevOps teams provision every aspect of the needed infrastructure. Engineers create servers; deploy operating systems, containers, and application configurations; and set up data storage, networks, and component integrations.

IaC can also be integrated with CI/CD tools. With the right setup, the code can automatically move app versions from one environment to another for testing purposes.

Benefits of Infrastructure as Code

Here are the benefits an organization gets from Infrastructure as Code:

Speed

With IaC, teams quickly provision and configure infrastructure for development, testing, and production. Quick setups speed up the entire software development lifecycle.

The response rate to customer feedback is also faster. Developers add new features quickly without needing to wait for more resources. Quick turnarounds to user requests improve customer satisfaction.

Standardization

Developers get to rely on system uniformity during the delivery process. IaC avoids configuration drift, a situation in which different servers develop unique settings due to frequent manual updates. Drift leads to deployment issues and security concerns.

IaC prevents configuration drift by provisioning the same environment every time you run the same manifest.
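The property at work here is that applying a manifest is repeatable: running it a second time changes nothing. The sketch below illustrates that idea in Python with an in-memory dictionary standing in for a real environment. The function name `apply_manifest` and the resource names are hypothetical, and no real IaC platform is called.

```python
def apply_manifest(manifest, environment):
    """Bring `environment` in line with `manifest`; return the number of changes made."""
    changes = 0
    for name, config in manifest.items():
        if environment.get(name) != config:
            environment[name] = dict(config)  # create or correct the resource
            changes += 1
    for name in [n for n in environment if n not in manifest]:
        del environment[name]  # remove anything the manifest does not describe
        changes += 1
    return changes

# Hypothetical manifest and an environment that has drifted away from it.
manifest = {"web": {"count": 2}, "db": {"count": 1}}
environment = {"legacy": {"count": 1}}

first_run = apply_manifest(manifest, environment)
second_run = apply_manifest(manifest, environment)
print("changes on first run:", first_run)
print("changes on second run:", second_run)
```

The first run makes every change needed to match the manifest, and the second run makes none because the environment already matches.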

Reusability

DevOps teams can reuse existing IaC scripts in various environments. There is no need to start from scratch every time you need new infrastructure.

Collaboration

Version control allows multiple people to collaborate on the same environment. Thanks to version control, developers work on different infrastructure sections and roll out changes in a controlled manner.

Efficiency

Infrastructure as Code improves efficiency and productivity across the development lifecycle.

Programmers create sandbox environments to develop in isolation. Operations can quickly provision infrastructure for security tests. QA engineers have perfect copies of the production environments during testing. When it is deployment time, developers push both infrastructure and code to production in one step.

IaC also keeps track of all environment build-up commands in a repository. You can quickly go back to a previous instance or redeploy an environment if you run into a problem.

Lower Cost

IaC reduces the costs of developing software. There is no need to spend resources on setting up environments manually.

Most IaC platforms offer a consumption-based cost structure. You only pay for the resources you are actively using, so there is no unnecessary overhead.

Scalability

IaC makes it easy to add resources to existing infrastructure. Upgrades are provisioned quickly and easily, so you can expand during burst periods.

For example, organizations running online services can easily scale up to keep up with user demands.

Disaster Recovery

In the event of a disaster, it is easy to recover large systems quickly with IaC. You just re-run the same manifest, and the system will be back online at a different location if need be.

Infrastructure as Code Best Practices

Use Little to No Documentation

Define specifications and parameters in configuration files. There is no need for additional documentation that gets out of sync with the configurations in use.

Version Control All Configuration Files

Place all your configuration files under source control. Versioning gives flexibility and transparency when managing infrastructure. It also allows you to track, manage, and restore previous manifests.

Constantly Test the Configurations

Test and monitor environments before pushing any changes to production. To save time, consider setting up automated tests to run whenever the configuration code gets modified.

Go Modular

Divide your infrastructure into multiple components and then combine them through automation. IaC segmentation offers many advantages. You control who has access to certain parts of your code. You also limit the number of changes that can be made to manifests.

Infrastructure as Code Tools

IaC tools speed up and automate the provisioning of cloud environments. Most tools also monitor previously created systems and roll back changes to the code.

While they vary in terms of features, there are two main types of Infrastructure as Code tools:

- Imperative tools

- Declarative tools

Imperative Approach Tools

Tools with an imperative approach define commands to enable the infrastructure to reach the desired state. Engineers create scripts that provision the infrastructure one step at a time. It is up to the user to determine the optimal deployment process.

The imperative approach is also known as the procedural approach.

When compared to declarative approach tools, imperative IaC requires more manual work. More tasks are required to keep scripts up to date.

Imperative tools are a better fit for system admins who have a background in scripting.

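```javascript
const aws = require("@pulumi/aws");

// Instance size and machine image for the web server.
let size = "t2.micro";
let ami = "ami-0ff8a91507f77f867";

// Security group that allows inbound SSH (port 22) from any address.
let group = new aws.ec2.SecurityGroup("webserver-secgrp", {
    ingress: [
        { protocol: "tcp", fromPort: 22, toPort: 22, cidrBlocks: ["0.0.0.0/0"] },
    ],
});

// EC2 instance attached to the security group above.
let server = new aws.ec2.Instance("webserver-www", {
    instanceType: size,
    securityGroups: [ group.name ],
    ami: ami,
});

// Export the public IP address and DNS name of the instance.
exports.publicIp = server.publicIp;
exports.publicHostName = server.publicDns;
```

Imperative IaC example (using Pulumi)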

Declarative Approach Tools

A declarative approach describes the desired state of the infrastructure without listing the steps to reach that state. The IaC tool processes the requirements and then automatically configures the necessary software.

While no step-by-step instruction is needed, the declarative approach requires a skilled administrator to set up and manage the environment.

Declarative tools cater to users with strong programming experience.

```hcl
resource "aws_instance" "myEC2" {
  ami             = "ami-0ff8a91507f77f867"
  instance_type   = "t2.micro"
  security_groups = ["sg-1234567"]
}
```

Declarative Infrastructure as Code example (using Terraform)

Popular IaC Tools

The most widely used Infrastructure as Code tools on the market include:

- Terraform: This open-source declarative tool offers pre-written modules that you populate with parameters to build and manage an infrastructure.

- Pulumi: The main advantage of Pulumi is that users can rely on their favorite language to describe the desired infrastructure.

- Puppet: Using Puppet’s Ruby-based DSL, you define the desired state of the infrastructure, and the tool automatically creates the environment.

- Ansible: Ansible enables you to model the infrastructure by describing how the components and systems relate to one another.

- Chef: Chef is the most popular imperative tool on the market. Chef allows users to make “recipes” and “cookbooks” using its Ruby-based DSL. These files specify the exact steps needed to achieve the desired environment.

- SaltStack: What sets SaltStack apart is the simplicity of provisioning and configuring infrastructure components.

Learn more about Pulumi in our article What is Pulumi?.

To see how different tools stack up, read Ansible vs. Terraform vs. Puppet.

Want to Stay Competitive? IaC is Not Optional

Infrastructure as Code is an effective way to keep up with the rapid pace of current software development. In a time when IT environments must be built, changed, and torn down daily, IaC is a requirement for any team wishing to stay competitive.

PhoenixNAP’s Bare Metal Cloud platform supports API-driven provisioning of servers. It’s also fully integrated with Ansible and Terraform, two of the leading Infrastructure as Code tools.

Learn more about Bare Metal Cloud and how it can help propel an organization’s Infrastructure as Code efforts.

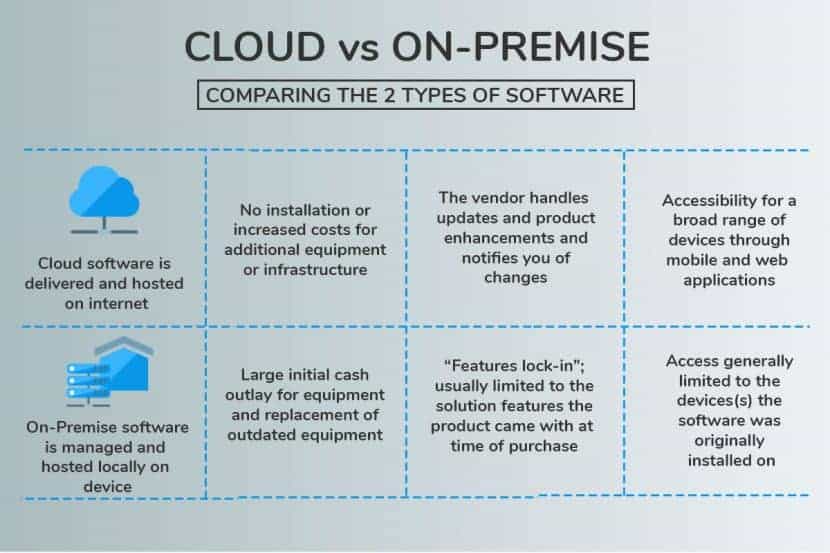

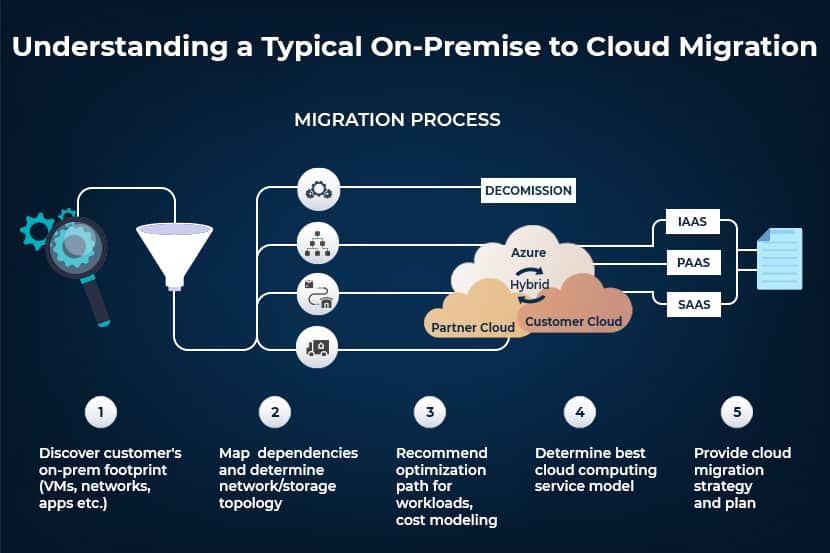

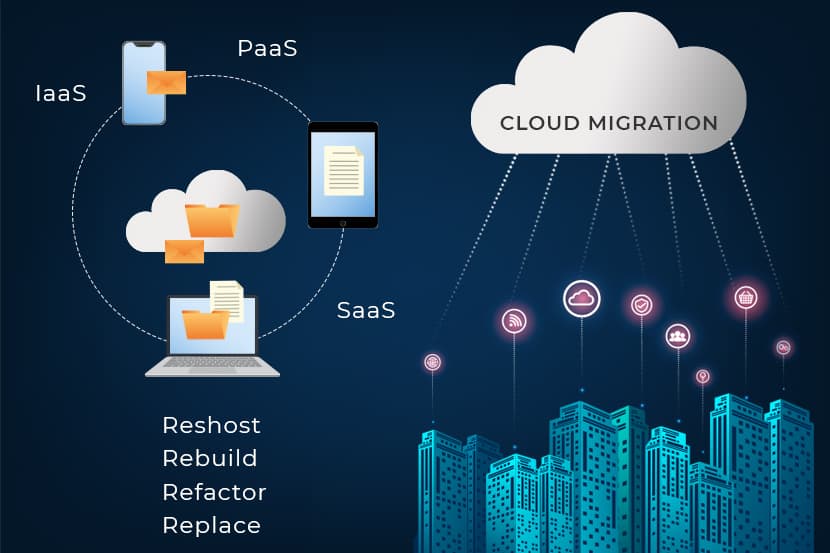

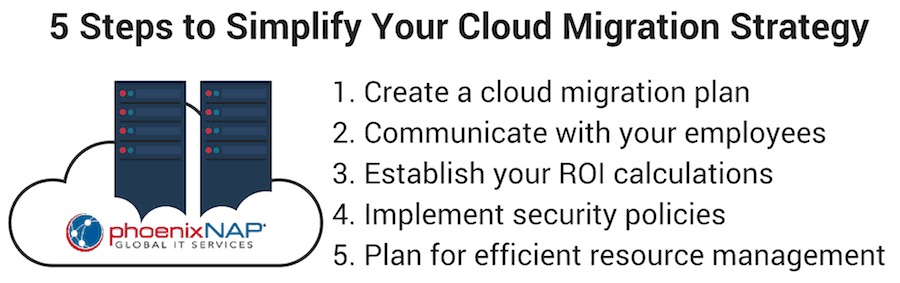

On-Premise vs Cloud: Which is Right for Your Business?

Much has changed since organizations valued on-premise infrastructure as the best option for their applications. Nowadays, most companies are moving towards off-premise possibilities such as cloud and colocation.

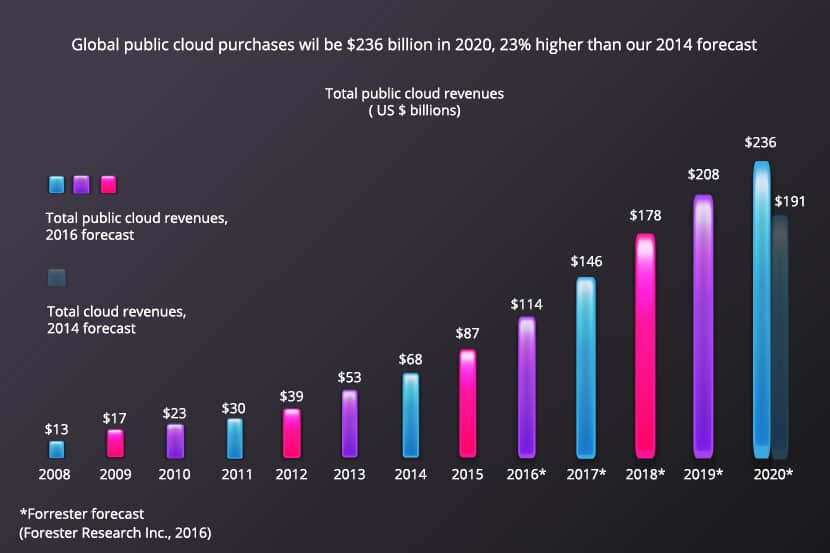

Forrester Inc. reports that global spending on cloud services increased from $17 billion in 2009 to $208 billion in 2019, with growth accelerating in the last five years.

Before a company decides to switch to cloud computing technology, it needs to understand the pros and cons of both options.

To decide which solution is best for your business, make sure you understand what on-premise and cloud computing are and how they work.

What is On-Premise Hosting?

On-premise is the traditional approach in which all the required software and infrastructure for a given application reside in-house. On a larger scale, this could mean the business hosts its own data center on-site.

Running applications on-site includes buying and maintaining in-house servers and infrastructure. Apart from physical space, this solution demands a dedicated IT staff qualified to maintain and monitor servers and their security.

What is Cloud Computing?

Cloud computing is an umbrella term that refers to computing services via the internet. By definition, it is a platform that allows the delivery of applications and services. These services include computing, storage, database, monitoring, security, networking, analytics, and other related operations.

The key characteristic of cloud computing is that you pay for what you use. The cloud service provider also takes care of maintaining its network architecture, giving you the freedom to focus on your application.

Most cloud providers offer much better infrastructure and services than what organizations set up individually. Renting rack space in a data center costs only a fraction of what it would to set up and maintain the in-house infrastructure at such a scale. Also, there are considerable savings on technical staff, upgrades, and licenses.

On-Premise vs. Cloud Comparison

No single option wins for every business purpose when comparing on-premise and cloud computing solutions.

On-site and cloud hosting handle performance, cost, security, compliance, backups, and disaster recovery differently.

| | On-Premise Hosting | Cloud Hosting |
|---|---|---|
| Cost | Higher | Lower |
| Technical Involvement | Extremely high | Low |
| Scalability | Minimal options | Vertical and horizontal scalability |
| Security and Compliance | On-premise hosting security depends entirely on the staff that maintains it | The cloud provider ensures a secure environment. Cheaper options on the market provide less security than on-premise infrastructure |
| Control | Full control and infinite customization options | A hypervisor layer between the infrastructure and the hardware. No direct access to hardware |

A closer look at the major factors will help you decide which one is best for you.

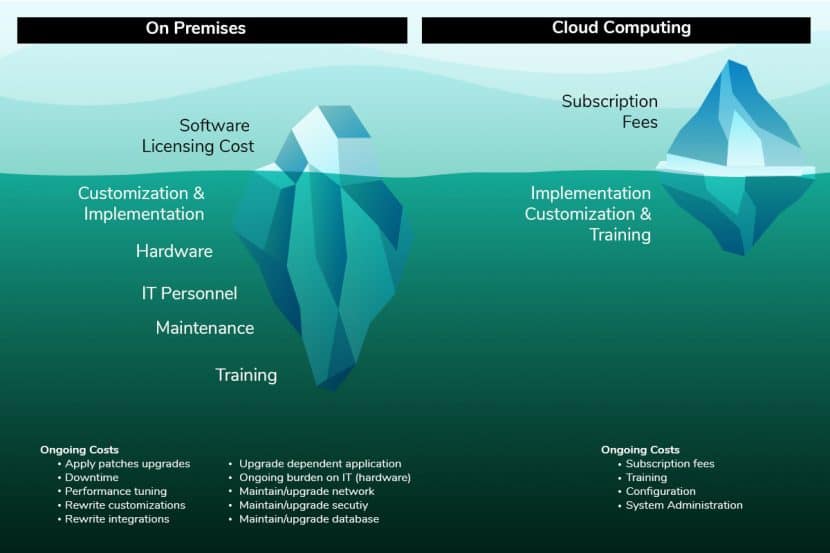

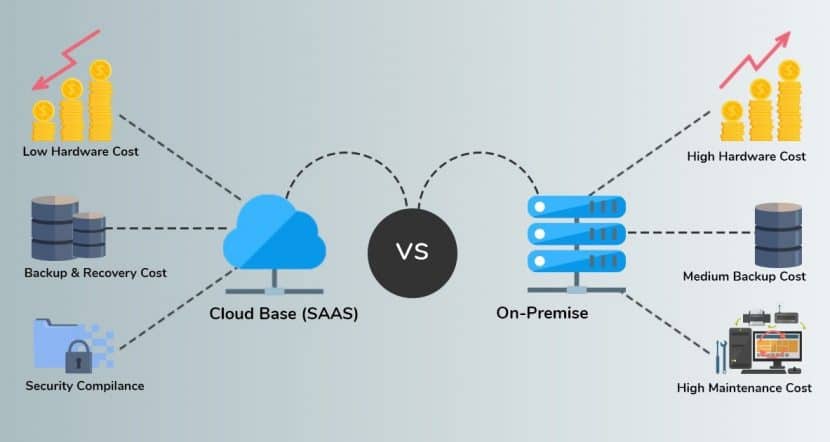

1. Cost

The core difference between on-premise vs. cloud computing is also the very reason for their contrasting pricing models.

With on-premise, the client uses in-house dedicated servers. Therefore, obtaining them requires a considerable upfront investment that includes buying servers, licensing software, and hiring a maintenance team. Additionally, in-house infrastructure is not as flexible when it comes to scaling resources. Not using the full potential of the setup results in unwanted operating costs.

Cloud computing has little to no upfront costs. The infrastructure belongs to the provider, while the client only pays for using the devices on a monthly or annual basis. This is known as the pay-as-you-go model where you only pay for the units you consume and only for the time used. Cloud computing also doesn’t require the cost of investing in a technical team. If not agreed upon otherwise, the provider takes care of maintenance.

Verdict: When it comes to pricing, cloud computing has the upper hand. Not only does it follow a pay-as-you-go model with no upfront investment, but it also makes costs easy to predict over time. On the other hand, in-house hosting is cost-effective when an organization already has servers and a dedicated IT team.
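The trade-off between an upfront investment and pay-as-you-go pricing can be worked through with simple arithmetic. The sketch below is an illustration in Python. Every figure in it (upfront cost, monthly running cost, hourly rate, hours used) is a hypothetical placeholder rather than a real price, and the function names are assumptions for this example.

```python
def on_premise_cost(months, upfront, monthly_running):
    """Cumulative cost of owning the hardware: one-off purchase plus running costs."""
    return upfront + months * monthly_running

def cloud_cost(months, hourly_rate, hours_used_per_month):
    """Cumulative pay-as-you-go cost: only the hours actually used are billed."""
    return months * hourly_rate * hours_used_per_month

def break_even_month(upfront, monthly_running, hourly_rate, hours_used_per_month, horizon=120):
    """First month in which cumulative cloud cost reaches on-premise cost, or None within the horizon."""
    for month in range(1, horizon + 1):
        cloud = cloud_cost(month, hourly_rate, hours_used_per_month)
        if cloud >= on_premise_cost(month, upfront, monthly_running):
            return month
    return None

# Hypothetical figures: a server that runs around the clock versus one used part-time.
always_on = break_even_month(20000, 500, 1.5, 720)
part_time = break_even_month(20000, 500, 1.5, 200)
print("break-even month, always-on workload:", always_on)
print("break-even month, part-time workload:", part_time)
```

With these placeholder numbers, the always-on workload reaches the break-even point in month 35, while the part-time workload never does within the ten-year horizon. Actual results depend entirely on real prices and usage patterns.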

2. Technical Involvement

Another critical factor that affects an organization’s decision is the amount of technical involvement required.

On-premise involves on-location physical resources, as well as on-location staff that is responsible for that infrastructure. It requires full technical involvement in configuring and maintaining servers by a team of experts. Employing people devoted to ensuring your infrastructure is secure and efficient is very costly.

Cloud solutions are usually fully managed by the provider. They require minimum technical expertise from the client. However, service providers allow a certain amount of flexibility in this regard. Outsourcing maintenance allows you to focus on other business aspects. Still, not all companies are willing to hand over their infrastructure and data.

Verdict: Cloud offers a convenient solution for organizations, especially those that don't have the staff or expertise to manage their own infrastructure.

However, organizations often opt for an on-premise solution because they need full control due to security requirements, continuity, and geographic requirements.

3. Scalability

Modern applications are continually evolving due to ever-increasing demand and user requirements. Infrastructure has to be flexible and scalable so that the user experience does not suffer.

On-premise offers little flexibility in this respect because physical servers are in use. If you run your operations on-site, scaling resources usually requires buying and deploying new servers. Scaling without new hardware is possible only in a few cases, such as changing the number of active processors per server, adding memory, or increasing bandwidth.

Cloud computing offers superior scalability options. These include resizing server resources, bandwidth, and internet usage. To save costs, cloud servers can be scaled down or shut down when usage is low. This flexibility is possible because the servers and their resources are virtual, so they can be increased or decreased on demand. Cloud resources are administered through an admin panel or an API.
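
The scale-up and scale-down behavior described above can be sketched as a simple threshold rule. This is only an illustration: the function name, the thresholds, and the server limits are assumptions, and real providers expose their own autoscaling settings through their admin panels and APIs.

```python
def desired_server_count(current, cpu_utilization,
                         scale_up_at=0.75, scale_down_at=0.25,
                         min_servers=1, max_servers=10):
    """Decide how many servers to run based on average CPU utilization.

    cpu_utilization is a fraction between 0.0 and 1.0.
    """
    if not 0.0 <= cpu_utilization <= 1.0:
        raise ValueError("cpu_utilization must be between 0.0 and 1.0")
    if cpu_utilization > scale_up_at:
        # Busy: add one server, but never exceed the configured maximum.
        return min(current + 1, max_servers)
    if cpu_utilization < scale_down_at:
        # Idle: remove one server to save costs, but keep the minimum running.
        return max(current - 1, min_servers)
    # Utilization is within the comfortable band: leave the fleet unchanged.
    return current


print(desired_server_count(current=3, cpu_utilization=0.9))
print(desired_server_count(current=3, cpu_utilization=0.1))
```

On-premise, the same decision would end with a purchase order instead of a return value, which is why scaling there is slower.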

Verdict: Cloud computing is a good option for small and medium businesses that need little computing resources to start with and hope to scale their infrastructure in time.

4. Cloud vs On-Premise Security and Compliance

Compliance and security are the most critical aspects of both on-premise and cloud computing. They are also the most significant barrier to the adoption of these services. Providers have introduced many innovations to secure their platforms, both on-premise and in the cloud.

For example, the introduction of Private Cloud was a significant step towards achieving greater security in the Cloud.

To learn more about Private Cloud, see our article on Public vs Private Cloud solutions.

Owners of in-house infrastructure manage all the security by themselves. They are responsible for the policies they adopt and the type of security they implement. Therefore, the level of security depends on the knowledge of the staff that manages the servers. Furthermore, there is less chance of losing data.

Security becomes more critical with cloud computing workloads. Client applications and data can spread across many servers or even data centers. The provider is responsible for cloud security, including physical security. Providers should offer measures such as biometric access control, strict visiting policies, client screening, and CCTV monitoring. These add another layer of protection in case of a physical attack.

Certain countries and industries require data storage within a particular geographic region. Others require a dedicated server that is owned by the client and not shared with other organizations. In such cases, these requirements are easier to meet with on-premise infrastructure.

It is crucial to ensure that the provider's security protocols satisfy your needs. These may include HIPAA compliance or PCI-compliant hosting.

Verdict: Security experts give on-premise the upper hand. However, there are also benefits to Cloud computing. The provider takes care of the security of both hardware and software. They also possess security certifications that are difficult for individual organizations to obtain.

5. Control

Another deciding factor is how much control you need over setting up the system.

On-premises allows control over all aspects of the build – what kind of servers you want to use, software installations, and how to set up the architecture. It requires more time to set up as you have to consider all aspects of the build.

In contrast, with Cloud computing, there is less control of the underlying infrastructure. Consequently, implementation is much faster and more straightforward as the infrastructure is delivered pre-configured.

Verdict: On-premise offers more control but takes more time to set up, while cloud computing is easier and faster to implement.

Making the Decision

This article considered the critical factors of on-premise versus cloud solutions. Each organization must look into its architecture and make application-specific decisions, giving each application individual assessments.

To sum up, the benefits of adopting off-premise infrastructure include:

- Improved security with many fail-safes and guarantees

- Compliance with regulatory policies

- Cost-savings due to economies of scale

- Reduction of overhead costs

- Better performance through geo-location optimization

- Higher availability

A decision to colocate can later develop into a full cloud migration, where cloud computing is used for scaling and rapid expansion. The reverse is also possible: organizations using cloud services can decide to migrate to dedicated servers at a secure data center.

To get help with your decision-making process, contact one of our experts today.


What is Hadoop? Hadoop Big Data Processing

The evolution of big data has produced new challenges that needed new solutions. As never before in history, servers need to process, sort and store vast amounts of data in real-time.

This challenge has led to the emergence of new platforms, such as Apache Hadoop, which can handle large datasets with ease.

In this article, you will learn what Hadoop is, what its main components are, and how Apache Hadoop helps in processing big data.

What is Hadoop?

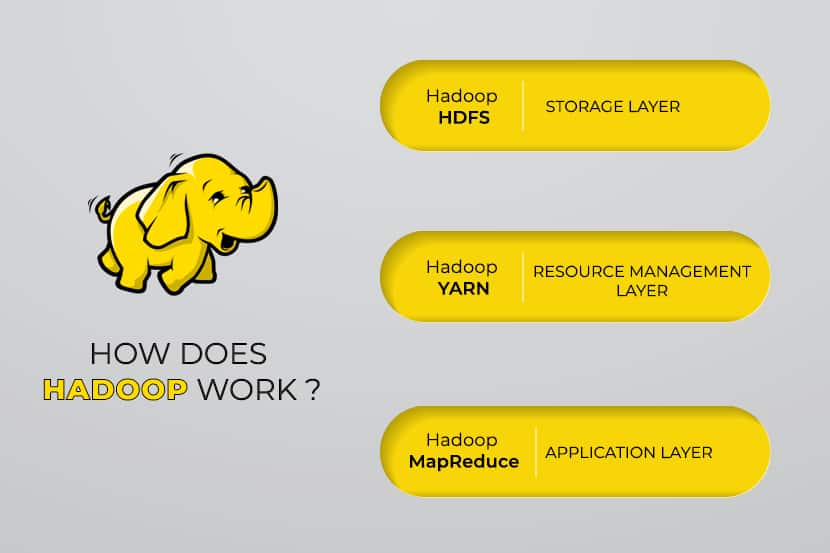

The Apache Hadoop software library is an open-source framework that allows you to efficiently manage and process big data in a distributed computing environment.

Apache Hadoop consists of four main modules:

Hadoop Distributed File System (HDFS)

Data resides in the Hadoop Distributed File System, which is similar to the local file system on a typical computer. HDFS provides better data throughput than traditional file systems.

Furthermore, HDFS provides excellent scalability. You can scale from a single machine to thousands with ease and on commodity hardware.

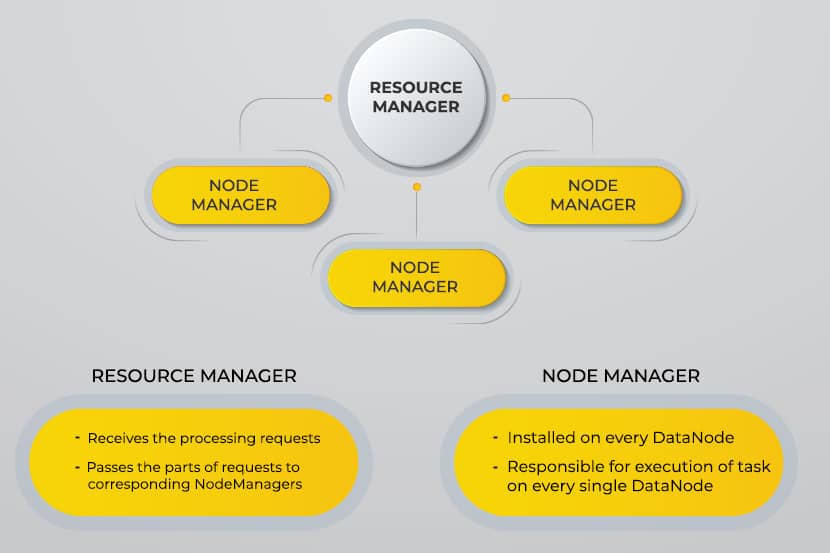

Yet Another Resource Negotiator (YARN)

YARN schedules tasks while managing and monitoring cluster nodes and other resources.

MapReduce

The Hadoop MapReduce module helps programs perform parallel data computation. The Map task of MapReduce converts the input data into key-value pairs. Reduce tasks consume the output of the Map tasks, aggregate it, and produce the result.
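
The classic illustration of this model is a word count. The sketch below imitates the Map, grouping, and Reduce steps in plain Python on a single machine. It does not use the Hadoop API, and the function names are chosen for illustration only.

```python
from collections import defaultdict


def map_task(line):
    """Map: convert one line of input into (word, 1) key-value pairs."""
    return [(word.lower(), 1) for word in line.split()]


def group_by_key(pairs):
    """Collect all values that share a key so one reducer sees them together."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_task(key, values):
    """Reduce: aggregate all values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    # Run the map step on every line, then reduce each group of values.
    pairs = [pair for line in lines for pair in map_task(line)]
    return dict(reduce_task(key, values)
                for key, values in group_by_key(pairs).items())


print(word_count(["big data", "Big clusters process big data"]))
```

In a real cluster, the map calls would run on different nodes and the grouping step would move data across the network, but the shape of the computation stays the same.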

Hadoop Common

Hadoop Common provides the standard Java libraries and utilities that every other module relies on.

To learn how Hadoop components interact with one another, read our article that explains Apache Hadoop Architecture.

Why Was Hadoop Developed?

The World Wide Web grew exponentially during the last decade, and it now consists of billions of pages. Searching for information online became difficult because of the sheer quantity of data. This data became big data, and it poses two main problems:

- Difficulty in storing all this data in an efficient and easy-to-retrieve manner

- Difficulty in processing the stored data

Developers worked on many open-source projects to return web search results faster and more efficiently by addressing the above problems. Their solution was to distribute data and calculations across a cluster of servers to achieve simultaneous processing.

Eventually, Hadoop came to be a solution to these problems and brought along many other benefits, including the reduction of server deployment cost.

How Does Hadoop Big Data Processing Work?

Using Hadoop, we utilize the storage and processing capacity of clusters and implement distributed processing for big data. Essentially, Hadoop provides a foundation on which you build other applications to process big data.

Applications that collect data in different formats store them in the Hadoop cluster via Hadoop’s API, which connects to the NameNode. The NameNode captures the structure of the file directory and the placement of “chunks” for each file created. Hadoop replicates these chunks across DataNodes for parallel processing.
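
As a rough sketch of the bookkeeping involved, the Python below cuts a file into fixed-size chunks (HDFS calls them blocks) and assigns each one to several DataNodes. The round-robin placement and the node names are assumptions made for illustration. The actual placement policy in HDFS is more sophisticated and takes rack topology into account.

```python
def split_into_blocks(file_size, block_size):
    """Return the size of each block that a file of `file_size` bytes is cut into."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    full_blocks, remainder = divmod(file_size, block_size)
    # Every block is full except possibly the last one.
    return [block_size] * full_blocks + ([remainder] if remainder else [])


def place_replicas(num_blocks, data_nodes, replication=3):
    """Map each block id to `replication` distinct DataNodes (round-robin)."""
    if replication > len(data_nodes):
        raise ValueError("not enough DataNodes for the requested replication")
    placement = {}
    for block_id in range(num_blocks):
        placement[block_id] = [
            data_nodes[(block_id + offset) % len(data_nodes)]
            for offset in range(replication)
        ]
    return placement


# A 300 MB file with 128 MB blocks, sizes expressed in MB for readability.
blocks = split_into_blocks(300, 128)
print(blocks)
print(place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"]))
```

The dictionary returned by `place_replicas` plays the role of the metadata the NameNode keeps: it records where each chunk lives, while the data itself sits on the DataNodes.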

MapReduce performs data querying. It maps work out across the DataNodes and then reduces the intermediate results related to the data in HDFS. The name “MapReduce” itself describes what it does. Map tasks run on every node that holds the supplied input files, while reducers run to combine the data and organize the final output.

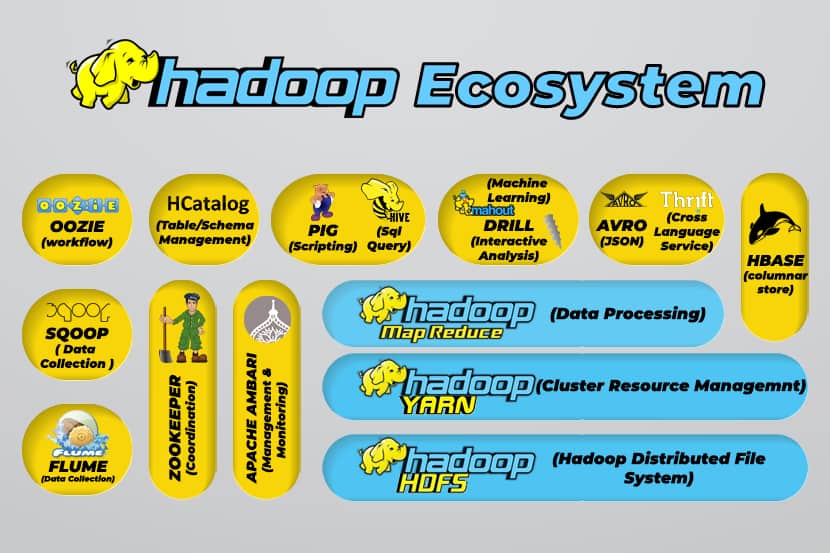

Hadoop Big Data Tools

Hadoop’s ecosystem supports a variety of open-source big data tools. These tools complement Hadoop’s core components and enhance its ability to process big data.

The most useful big data processing tools include:

- Apache Hive

Apache Hive is a data warehouse for processing large sets of data stored in Hadoop’s file system.

- Apache ZooKeeper

Apache ZooKeeper automates failovers and reduces the impact of a failed NameNode.

- Apache HBase

Apache HBase is an open-source non-relational database for Hadoop.

- Apache Flume

Apache Flume is a distributed service for streaming large amounts of log data.

- Apache Sqoop

Apache Sqoop is a command-line tool for migrating data between Hadoop and relational databases.

- Apache Pig

Apache Pig is a platform for developing jobs that run on Hadoop. Its scripting language is called Pig Latin.

- Apache Oozie

Apache Oozie is a scheduling system that facilitates the management of Hadoop jobs.

- Apache HCatalog

Apache HCatalog is a table and storage management layer that lets different data processing tools read and write data in Hadoop.

If you are interested in Hadoop, you may also be interested in Apache Spark. Learn the differences between Hadoop and Spark and their individual use cases.

Advantages of Hadoop

Hadoop is a robust solution for big data processing and is an essential tool for businesses that deal with big data.

The major features and advantages of Hadoop are detailed below:

- Faster storage and processing of vast amounts of data

The amount of data to be stored increased dramatically with the arrival of social media and the Internet of Things (IoT). Storage and processing of these datasets are critical to the businesses that own them.

- Flexibility

Hadoop’s flexibility allows you to save unstructured data types such as text, symbols, images, and videos. In a traditional relational database management system (RDBMS), you need to process the data before storing it. With Hadoop, preprocessing is not necessary: you can store data as it is and decide how to process it later. In this respect, it behaves like a NoSQL database.

- Processing power

Hadoop processes big data through a distributed computing model. Because the work is spread across many nodes, it makes efficient use of the available processing power and completes large jobs quickly.

- Reduced cost

Many teams abandoned their projects before the arrival of frameworks like Hadoop, due to the high costs they incurred. Hadoop is an open-source framework, it is free to use, and it uses cheap commodity hardware to store data.

- Scalability

Hadoop allows you to quickly scale your system without much administration, simply by changing the number of nodes in a cluster.

- Fault tolerance

One of the many advantages of using a distributed data model is its ability to tolerate failures. Hadoop does not depend on hardware to maintain availability. If a device fails, the system automatically redirects the task to another device. Fault tolerance is possible because redundant data is maintained by saving multiple copies of data across the cluster. In other words, high availability is maintained at the software layer.
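
The failover idea behind fault tolerance can be sketched in a few lines of Python. The placement table, node names, and function name below are hypothetical. The sketch only shows how keeping several copies of each piece of data lets the system route a task to a healthy machine.

```python
def pick_node(block_id, placement, failed_nodes):
    """Return the first healthy node that holds a copy of the given block."""
    for node in placement[block_id]:
        if node not in failed_nodes:
            return node
    # Only reached when every copy of the block sits on a failed node.
    raise RuntimeError(f"all replicas of block {block_id} are unavailable")


# Assumed layout: block 0 is stored on three different nodes.
placement = {0: ["dn1", "dn2", "dn3"]}

print(pick_node(0, placement, failed_nodes=set()))
print(pick_node(0, placement, failed_nodes={"dn1"}))
```

When the first node is marked as failed, the task is simply redirected to the next node that holds a copy. Data becomes unreachable only if every replica is lost at the same time.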

The Three Main Use Cases

Processing big data

We recommend Hadoop for vast amounts of data, usually in the range of petabytes or more. It is better suited for massive amounts of data that require enormous processing power. Hadoop may not be the best option for an organization that processes smaller amounts of data in the range of several hundred gigabytes.

Storing a diverse set of data

One of the many advantages of using Hadoop is that it is flexible and supports various data types. Whether the data consists of text, images, or video, Hadoop can store it efficiently. Organizations can choose how they process data depending on their requirements. Hadoop has the characteristics of a data lake, as it provides flexibility over the stored data.

Parallel data processing

The MapReduce algorithm used in Hadoop orchestrates parallel processing of stored data, meaning that you can execute several tasks simultaneously. However, join operations are a poor fit for this model because they require tasks to share data. Hadoop achieves parallelism only as long as the pieces of data can be processed independently of each other.
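
A small Python sketch shows why independence matters. Each chunk of log lines below is processed on its own, so the chunks can be handed to separate workers and the partial results combined at the end. The sample data and function names are made up, and a thread pool on one machine stands in for the nodes of a cluster.

```python
from concurrent.futures import ThreadPoolExecutor


def count_errors(chunk):
    """Process one chunk on its own: count the lines that contain ERROR."""
    return sum(1 for line in chunk if "ERROR" in line)


def parallel_error_count(chunks, workers=4):
    """Run count_errors on every chunk in parallel and add up the results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # No chunk needs data from another chunk, so order and timing
        # of the individual tasks do not affect the final sum.
        partial_counts = list(pool.map(count_errors, chunks))
    return sum(partial_counts)


chunks = [
    ["ERROR disk full", "ok"],
    ["ok"],
    ["ERROR timeout", "ERROR retry failed"],
]
print(parallel_error_count(chunks))
```

If counting errors in one chunk required looking at lines from another chunk, the tasks would have to wait for each other and most of the benefit of running them side by side would be lost.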

What is Hadoop Used for in the Real World

Companies from around the world use Hadoop big data processing systems. A few of the many practical uses of Hadoop are listed below:

- Understanding customer requirements

Hadoop has proven to be very useful in understanding customer requirements. Major companies in the financial industry and social media analyze big data about customer activity to learn what their customers need.

Companies use that data to provide personalized offers to customers. You may have experienced this through advertisements shown on social media and eCommerce sites based on your interests and internet activity.

- Optimizing business processes