8 Types of Firewalls: Guide For IT Security Pros

Are you searching for the right firewall setup to protect your business from potential threats?

Understanding how firewalls work helps you decide on the best solution. This article explains the types of firewalls, allowing you to make an educated choice.

What is a Firewall?

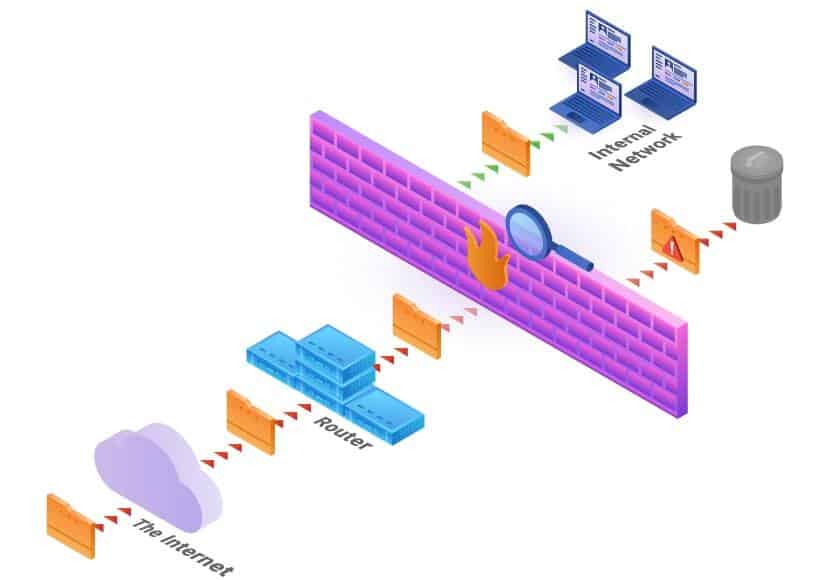

A firewall is a security device that monitors network traffic. It protects the internal network by filtering incoming and outgoing traffic based on a set of established rules. Setting up a firewall is the simplest way of adding a security layer between a system and malicious attacks.

How Does a Firewall Work?

A firewall is placed on the hardware or software level of a system to secure it from malicious traffic. Depending on the setup, it can protect a single machine or a whole network of computers. The device inspects incoming and outgoing traffic according to predefined rules.

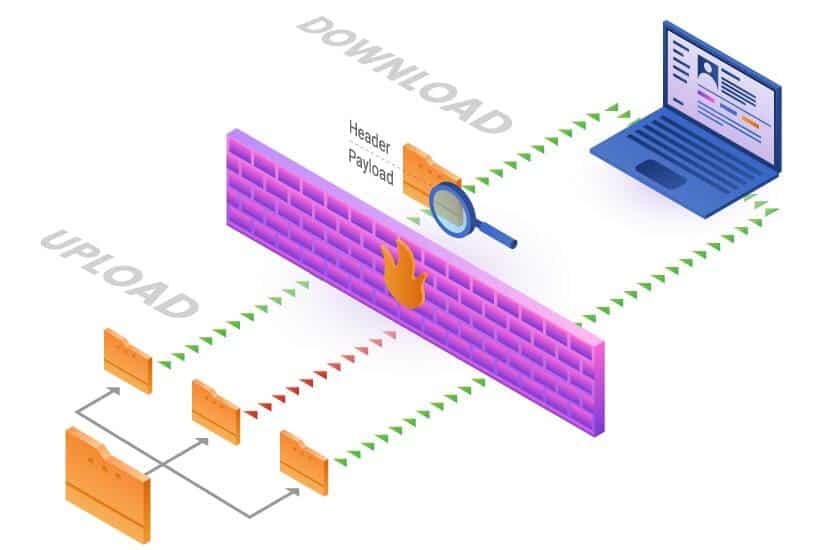

Communication over the Internet consists of requesting and transmitting data between a sender and a receiver. Since data cannot be sent as a whole, it is broken up into manageable data packets, which are reassembled into the original message at the destination. The role of a firewall is to examine data packets traveling to and from the host.

What does a firewall inspect? Each data packet consists of a header (control information) and payload (the actual data). The header provides information about the sender and the receiver. Before the packet can enter the internal network through the defined port, it must pass through the firewall. This transfer depends on the information it carries and how it corresponds to the predefined rules.

For example, the firewall can have a rule that excludes traffic coming from a specified IP address. If it receives data packets with that IP address in the header, the firewall denies access. Similarly, a firewall can deny access to anyone except the defined trusted sources. There are numerous ways to configure this security device. The extent to which it protects the system at hand depends on the type of firewall.
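The two rule styles described above (deny one address, or deny everyone except trusted sources) can be sketched in a few lines of Python. This is only an illustration, not how any particular firewall product is configured: the function name `is_allowed` and its parameters are hypothetical, and the addresses come from the ranges reserved for documentation.

```python
import ipaddress

def is_allowed(source_ip, blocked=(), trusted=None):
    """Return True if a packet from source_ip may pass.

    blocked: networks that are always denied.
    trusted: if given, only these networks are allowed (allowlist mode).
    """
    addr = ipaddress.ip_address(source_ip)
    # A match on the blocklist always wins.
    if any(addr in ipaddress.ip_network(net) for net in blocked):
        return False
    # In allowlist mode, anything not explicitly trusted is denied.
    if trusted is not None:
        return any(addr in ipaddress.ip_network(net) for net in trusted)
    return True

# Rule 1: exclude traffic from one specified IP address.
print(is_allowed("203.0.113.7", blocked=["203.0.113.7/32"]))   # False
print(is_allowed("198.51.100.4", blocked=["203.0.113.7/32"]))  # True

# Rule 2: deny access to anyone except the defined trusted sources.
print(is_allowed("192.0.2.10", trusted=["192.0.2.0/24"]))      # True
print(is_allowed("198.51.100.4", trusted=["192.0.2.0/24"]))    # False
```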

Types of Firewalls

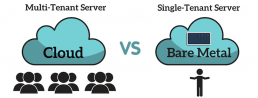

Although they all serve to prevent unauthorized access, the operation methods and overall structure of firewalls can be quite diverse. According to their structure, firewalls fall into three categories – software firewalls, hardware firewalls, or a combination of both. The remaining types of firewalls specified in this list are firewall techniques that can be set up as software or hardware.

Software Firewalls

A software firewall is installed on the host device. Accordingly, this type of firewall is also known as a Host Firewall. Since it is attached to a specific device, it has to utilize its resources to work. Therefore, it is inevitable for it to use up some of the system’s RAM and CPU.

If there are multiple devices, you need to install the software on each one. Since it needs to be compatible with the host, it requires individual configuration for each. Hence, the main disadvantage is the time and knowledge needed to administer and manage firewalls for each device.

On the other hand, the advantage of software firewalls is that they can distinguish between programs while filtering incoming and outgoing traffic. Hence, they can deny access to one program while allowing access to another.

Hardware Firewalls

As the name suggests, hardware firewalls are security devices that represent a separate piece of hardware placed between an internal and external network (the Internet). This type is also known as an Appliance Firewall.

Unlike a software firewall, a hardware firewall has its own resources and doesn’t consume any CPU or RAM from the host devices. It is a physical appliance that serves as a gateway for traffic passing to and from an internal network.

They are used by medium and large organizations that have multiple computers working inside the same network. Utilizing hardware firewalls in such cases is more practical than installing individual software on each device. Configuring and managing a hardware firewall requires knowledge and skill, so make sure there is a skilled team to take on this responsibility.

Packet-Filtering Firewalls

When it comes to types of firewalls based on their method of operation, the most basic type is the packet-filtering firewall. It serves as an inline security checkpoint attached to a router or switch. As the name suggests, it monitors network traffic by filtering incoming packets according to the information they carry.

As explained above, each data packet consists of a header and the data it transmits. This type of firewall decides whether a packet is allowed or denied access based on the header information. To do so, it inspects the protocol, source IP address, destination IP address, source port, and destination port. Depending on how these values match the access control list (rules defining wanted/unwanted traffic), the packets are passed on or dropped.

If a data packet doesn’t match all the required rules, it won’t be allowed to reach the system.

A packet-filtering firewall is a fast solution that doesn’t require a lot of resources. However, it isn’t the safest. Although it inspects the header information, it doesn’t check the data (payload) itself. Because malware can also be found in this section of the data packet, the packet-filtering firewall is not the best option for strong system security.
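To make the header check more concrete, here is a minimal sketch of an access control list evaluated against a packet's protocol, addresses, and ports. The `Packet` and `Rule` classes are hypothetical, and the policy shown (first matching rule wins, anything unmatched is denied) is an assumption for illustration; real devices differ in rule syntax and evaluation order. Note that the payload never appears in the code, which is exactly the weakness described above.

```python
from dataclasses import dataclass
from typing import Optional
import ipaddress

@dataclass(frozen=True)
class Packet:
    protocol: str
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int

@dataclass(frozen=True)
class Rule:
    action: str                       # "allow" or "deny"
    protocol: str = "any"
    src_net: str = "0.0.0.0/0"        # default matches any IPv4 source
    dst_net: str = "0.0.0.0/0"        # default matches any IPv4 destination
    src_port: Optional[int] = None    # None matches any port
    dst_port: Optional[int] = None

    def matches(self, pkt):
        return (
            self.protocol in ("any", pkt.protocol)
            and ipaddress.ip_address(pkt.src_ip) in ipaddress.ip_network(self.src_net)
            and ipaddress.ip_address(pkt.dst_ip) in ipaddress.ip_network(self.dst_net)
            and self.src_port in (None, pkt.src_port)
            and self.dst_port in (None, pkt.dst_port)
        )

def filter_packet(pkt, acl):
    """Return the action of the first matching rule; deny if nothing matches."""
    for rule in acl:
        if rule.matches(pkt):
            return rule.action
    return "deny"

acl = [
    Rule("deny", src_net="203.0.113.0/24"),                              # unwanted source network
    Rule("allow", protocol="tcp", dst_net="192.0.2.10/32", dst_port=443),  # HTTPS to one server
]

print(filter_packet(Packet("tcp", "198.51.100.4", 50000, "192.0.2.10", 443), acl))  # allow
print(filter_packet(Packet("tcp", "203.0.113.9", 50000, "192.0.2.10", 443), acl))   # deny
print(filter_packet(Packet("tcp", "198.51.100.4", 50000, "192.0.2.10", 22), acl))   # deny
```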

PACKET-FILTERING FIREWALLS

| Advantages | Disadvantages | Protection Level | Who is it for: |
|---|---|---|---|
| – Fast and efficient for filtering headers. – Don’t use up a lot of resources. – Low cost. | – No payload check. – Vulnerable to IP spoofing. – Cannot filter application layer protocols. – No user authentication. | – Not very secure as they don’t check the packet payload. | – A cost-efficient solution to protect devices within an internal network. – A means of isolating traffic internally between different departments. |

Circuit-Level Gateways

Circuit-level gateways are a type of firewall that work at the session layer of the OSI model, observing TCP (Transmission Control Protocol) connections and sessions. Their primary function is to ensure the established connections are safe.

In most cases, circuit-level firewalls are built into some type of software or an already existing firewall.

Like packet-filtering firewalls, they don’t inspect the actual data but rather the information about the transaction. Additionally, circuit-level gateways are practical, simple to set up, and don’t require a separate proxy server.
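As a rough illustration of session-level checking, the sketch below accepts traffic for a session only after it has seen the three TCP handshake segments in order (SYN, SYN-ACK, ACK). The `CircuitGateway` class is hypothetical and heavily simplified: it ignores sequence numbers, timeouts, and connection teardown, and it never looks at the data being carried.

```python
class CircuitGateway:
    # Expected handshake order: (sender, TCP flag)
    HANDSHAKE = [("client", "SYN"), ("server", "SYN-ACK"), ("client", "ACK")]

    def __init__(self):
        self.progress = {}  # session id -> number of handshake steps seen so far

    def observe(self, session, sender, flag):
        """Return True if the segment is accepted, False if it is dropped."""
        step = self.progress.get(session, 0)
        if step < len(self.HANDSHAKE):
            if (sender, flag) == self.HANDSHAKE[step]:
                self.progress[session] = step + 1
                return True
            # Out-of-order segment: forget the session and drop it.
            self.progress.pop(session, None)
            return False
        # Handshake complete: relay without inspecting the content.
        return True

gw = CircuitGateway()
print(gw.observe("s1", "client", "SYN"))      # True
print(gw.observe("s1", "server", "SYN-ACK"))  # True
print(gw.observe("s1", "client", "ACK"))      # True
print(gw.observe("s1", "client", "DATA"))     # True  (session established)
print(gw.observe("s2", "client", "DATA"))     # False (no handshake seen)
```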

CIRCUIT-LEVEL GATEWAYS

| Advantages | Disadvantages | Protection Level | Who is it for: |
|---|---|---|---|
| – Resource and cost-efficient. – Provide data hiding and protect against address exposure. – Check TCP handshakes. | – No content filtering. – No application layer security. – Require software modifications. | – Moderate protection level (higher than packet filtering, but not completely efficient since there is no content filtering). | – They should not be used as a stand-alone solution. – They are often used with application-layer gateways. |

Stateful Inspection Firewalls

A stateful inspection firewall keeps track of the state of a connection by monitoring the TCP 3-way handshake. This allows it to keep track of the entire connection – from start to end – permitting only expected return traffic inbound.

When a connection starts and data is requested, the stateful inspection firewall builds a database (state table) and stores the connection information. In the state table, it notes the source IP, source port, destination IP, and destination port for each connection. Using this information, it dynamically creates firewall rules to allow anticipated traffic.

This type of firewall is used as additional security. It enforces more checks and is safer than stateless filters. Unlike stateless packet filtering, stateful firewalls inspect the actual data transmitted across multiple packets instead of just the headers. Because of this, they also require more system resources.
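The state table idea can be sketched as a set of connection tuples: outbound connections are recorded, and inbound packets pass only when they are the mirror image of a recorded connection. The `StatefulFirewall` class below is a hypothetical, minimal model; it leaves out handshake tracking, timeouts, and payload inspection.

```python
class StatefulFirewall:
    def __init__(self):
        # Each entry: (source IP, source port, destination IP, destination port)
        self.state_table = set()

    def outbound(self, src_ip, src_port, dst_ip, dst_port):
        """Record a connection opened from the inside and let it through."""
        self.state_table.add((src_ip, src_port, dst_ip, dst_port))
        return True

    def inbound(self, src_ip, src_port, dst_ip, dst_port):
        """Allow only return traffic: the reply reverses the original endpoints."""
        return (dst_ip, dst_port, src_ip, src_port) in self.state_table

    def close(self, src_ip, src_port, dst_ip, dst_port):
        """Remove the entry once the connection ends."""
        self.state_table.discard((src_ip, src_port, dst_ip, dst_port))

fw = StatefulFirewall()
fw.outbound("10.0.0.5", 51000, "198.51.100.20", 443)

print(fw.inbound("198.51.100.20", 443, "10.0.0.5", 51000))  # True  (expected reply)
print(fw.inbound("203.0.113.9", 443, "10.0.0.5", 51000))    # False (unsolicited)

fw.close("10.0.0.5", 51000, "198.51.100.20", 443)
print(fw.inbound("198.51.100.20", 443, "10.0.0.5", 51000))  # False (connection closed)
```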

STATEFUL INSPECTION FIREWALLS

| Advantages | Disadvantages | Protection Level | Who is it for: |
|---|---|---|---|
| – Keep track of the entire session. – Inspect headers and packet payloads. – Offer more control. – Operate with fewer open ports. | – Not as cost-effective as they require more resources. – No authentication support. – Vulnerable to DDoS attacks. – May slow down performance due to high resource requirements. | – Provide more advanced security as they inspect entire data packets while blocking attacks that exploit protocol vulnerabilities. – Less effective against attacks that exploit stateless protocols. | – Considered the standard network protection for cases that need a balance between packet filtering and application proxy. |

Proxy Firewalls

A proxy firewall serves as an intermediate device between internal and external systems communicating over the Internet. It protects a network by forwarding requests from the original client and masking it as its own. Proxy means to serve as a substitute and, accordingly, that is the role it plays. It substitutes for the client that is sending the request.

When a client sends a request to access a web page, the message is intercepted by the proxy server. The proxy forwards the message to the web server, pretending to be the client. Doing so hides the client’s identification and geolocation, protecting it from any restrictions and potential attacks. The web server then responds and gives the proxy the requested information, which is passed on to the client.
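The request flow above can be modeled without any real networking. In this sketch, `FakeWebServer` and `ForwardProxy` are hypothetical stand-ins: the proxy re-issues the client's request under its own address, so the server only ever records the proxy as the source.

```python
class FakeWebServer:
    """Stand-in for a real web server; records which address contacted it."""

    def __init__(self):
        self.seen_sources = []

    def get(self, source_ip, path):
        self.seen_sources.append(source_ip)
        return f"content of {path}"

class ForwardProxy:
    def __init__(self, proxy_ip, server):
        self.proxy_ip = proxy_ip
        self.server = server

    def handle(self, client_ip, path):
        # client_ip stays inside the proxy; the request goes out
        # with the proxy's own address as the source.
        response = self.server.get(source_ip=self.proxy_ip, path=path)
        # The response is then passed back to the original client.
        return response

server = FakeWebServer()
proxy = ForwardProxy("198.51.100.1", server)

print(proxy.handle("10.0.0.5", "/index.html"))  # content of /index.html
print(server.seen_sources)                      # ['198.51.100.1']
```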

PROXY FIREWALLS

| Advantages | Disadvantages | Protection Level | Who is it for: |
|---|---|---|---|
| – Protect systems by preventing contact with other networks. – Ensure user anonymity. – Unlock geolocational restrictions. | – May reduce performance. – Need additional configuration to ensure overall encryption. – Not compatible with all network protocols. | – Offer good network protection if configured well. | – Used for web applications to secure the server from malicious users. – Utilized by users to ensure network anonymity and for bypassing online restrictions. |

Next-Generation Firewalls

The next-generation firewall is a security device that combines a number of functions of other firewalls. It incorporates packet, stateful, and deep packet inspection. Simply put, NGFW checks the actual payload of the packet instead of focusing solely on header information.

Unlike traditional firewalls, the next-gen firewall inspects the entire data transaction, including TCP handshakes, surface-level (header) inspection, and deep packet inspection.

An NGFW provides adequate protection against malware attacks, external threats, and intrusion. These devices are quite flexible, and there is no clear-cut definition of the functionalities they offer. Therefore, make sure to explore what each specific option provides.
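The difference between header-only filtering and deep packet inspection can be illustrated with a toy check that first applies a header rule and then scans the payload for known byte patterns. Everything here is an assumption made for the example: the blocked port, the two signatures, and the `ngfw_verdict` function are hypothetical, and real NGFWs rely on far richer techniques (stream reassembly, protocol decoding, vendor-maintained signature feeds).

```python
# Hypothetical header rule: block Telnet.
BLOCKED_PORTS = {23}

# Hypothetical payload signatures (a SQL injection probe and a path traversal).
SIGNATURES = (b"' OR '1'='1", b"../../etc/passwd")

def ngfw_verdict(dst_port, payload):
    """Check the header first, then look inside the payload."""
    if dst_port in BLOCKED_PORTS:
        return "deny: header rule"
    lowered = payload.lower()
    for signature in SIGNATURES:
        if signature.lower() in lowered:
            return "deny: payload signature"
    return "allow"

print(ngfw_verdict(23, b"hello"))                   # deny: header rule
print(ngfw_verdict(80, b"GET /?id=1' OR '1'='1"))   # deny: payload signature
print(ngfw_verdict(443, b"GET /index.html"))        # allow
```

A packet-filtering firewall would stop after the first `if`; the loop over the payload is the part that header-based filtering cannot do.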

NEXT-GENERATION FIREWALLS

| Advantages | Disadvantages | Protection Level | Who is it for: |
|---|---|---|---|
| – Integrates deep inspection, antivirus, spam filtering, and application control. – Automatic upgrades. – Monitor network traffic from Layer 2 to Layer 7. | – Costly compared to other solutions. – May require additional configuration to integrate with existing security management. | – Highly secure. | – Suitable for businesses that require PCI or HIPAA compliance. – For businesses that want a package deal security device. |

Cloud Firewalls

A cloud firewall or firewall-as-a-service (FWaaS) is a cloud solution for network protection. Like other cloud solutions, it is maintained and run on the Internet by third-party vendors.

Clients often utilize cloud firewalls as proxy servers, but the configuration can vary according to the demand. Their main advantage is scalability. They are independent of physical resources, which allows scaling the firewall capacity according to the traffic load.

Businesses use this solution to protect an internal network or other cloud infrastructures (IaaS/PaaS).

CLOUD FIREWALLS

| Advantages | Disadvantages | Protection Level | Who is it for: |
|---|---|---|---|
| – Availability. – Scalability that offers increased bandwidth and new site protection. – No hardware required. – Cost-efficient in terms of managing and maintaining equipment. | – A wide range of prices depending on the services offered. – The risk of losing control over security assets. – Possible compatibility difficulties if migrating to a new cloud provider. | – Provide good protection in terms of high availability and having a professional staff taking care of the setup. | – A solution suitable for larger businesses that do not have an in-house security team to maintain and manage the on-site security devices. |

Which Firewall Architecture is Right for Your Business?

When deciding which firewall to choose, there is no need to limit yourself to a single type. Using more than one firewall type provides multiple layers of protection.

Also, consider the following factors:

- The size of the organization. How big is the internal network? Can you manage a firewall on each device, or do you need a firewall that monitors the internal network? These questions are important to answer when deciding between software and hardware firewalls. Additionally, the decision between the two will largely depend on the capabilities of the tech team assigned to manage the setup.

- The resources available. Can you afford to separate the firewall from the internal network by placing it on a separate piece of hardware or even on the cloud? The traffic load the firewall needs to filter and whether it is going to be consistent also play an important role.

- The level of protection required. The number and types of firewalls should reflect the security measures the internal network requires. A business dealing with sensitive client information should ensure that data is protected from hackers by tightening the firewall protection.

Build a firewall setup that fits the requirements considering these factors. Utilize the ability to layer more than one security device and configure the internal network to filter any traffic coming its way. For secure cloud options, see how phoenixNAP ensures cloud data security.

Recent Posts

10 Step Business Continuity Planning Checklist with Sample Template

If you don’t have a Business Continuity Plan in place, then your business and data are already in danger. Believing a business will continue to generate profit in the future without putting safeguards in place is a very risky practice. Ignoring the pitfalls can be catastrophic.

Business continuity as a concept is self-explanatory. Yet, it encompasses much more than an organization’s future profitability. It covers all aspects of a business’s longevity, prosperity and success.

In this article, you will learn how to create an effective business continuity plan to protect your assets.

What is a Business Continuity Plan?

Business continuity planning is the process of creating a system that prevents potential threats to a company and aids in its recovery.

This plan outlines how assets and personnel will be protected during the event of a disaster, and how to function normally through an event. A BCP should include contingencies for human resources, assets and business processes, and any other aspects that could be affected by downtime or failure. The plan consists of input from all key stakeholders and must be finalized in advance.

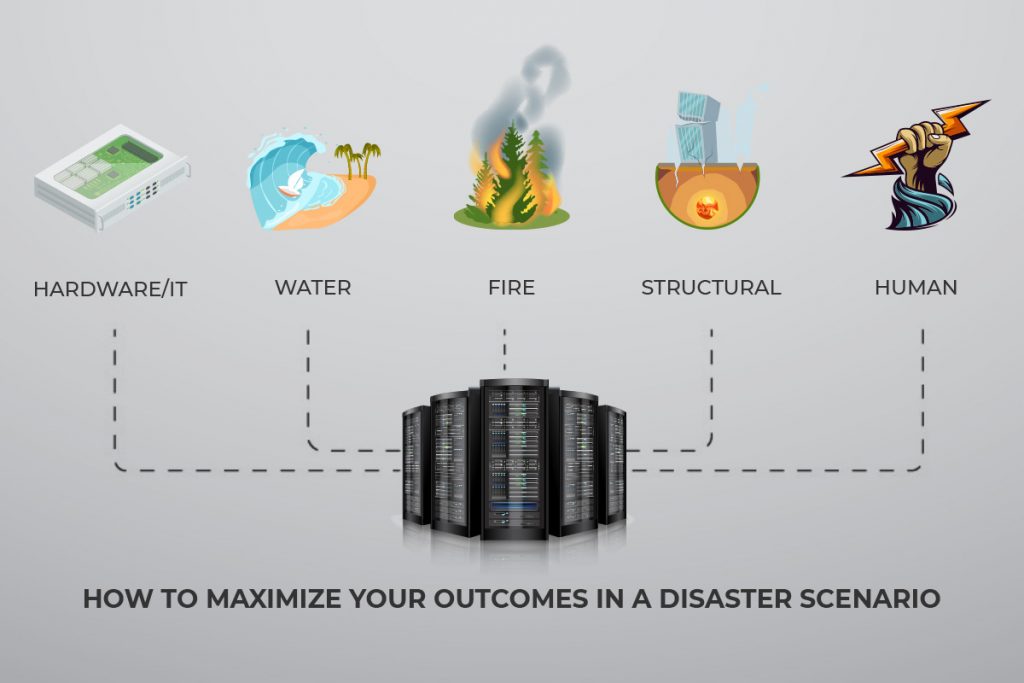

A BCP is an essential part of a company’s risk management strategy. It should be updated as technology and hardware/software get updated. The risks it covers usually include natural disasters (weather-related events, floods, and fires) as well as cyber and virtual attacks. Any and every risk that can affect a company’s operations is defined beforehand by the BCP. A typical plan includes:

- Identifying all potential risks

- Determining the effect of the risk on the company’s normal operations

- Implementing procedures and safeguards for risk mitigation

- Testing the procedures to ensure their success

- Constantly reviewing the processes to make sure they’re up to date

After an organization assesses and identifies its risks, it needs to follow these steps:

- Understanding how these risks will interfere or affect operations

- Setting up procedures and safeguards that mitigate risks and offer rapid solutions

- Establishing how to test solutions to ensure they work, and scheduling those tests regularly

- Ensuring that processes are systematically reviewed to make sure they’re up to date

Business Continuity Checklist

A successful business continuity plan is prepared based on the understanding of the impact of a disaster situation on a business. A business continuity checklist includes certain steps, which we have summarized for you below in point form.

Use this step-by-step guide to prepare your comprehensive preparedness plan. When it comes to disaster recovery, each company’s strategy will vary based on geographical location, the organization’s structure, systems, environments, and the severity of the disaster in question.

-

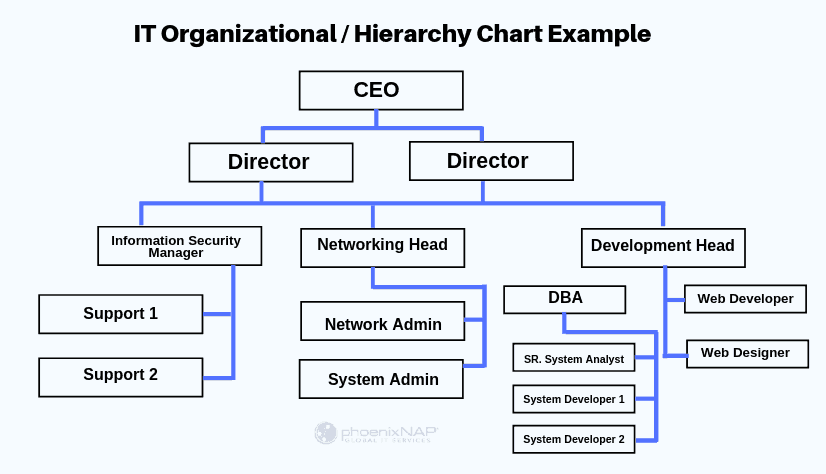

Assemble the Planning Team:

Implementing a BCP requires a dedicated team. Teams should be built with hierarchy in mind, with specific roles and recovery tasks assigned to staff members who are accountable for each.

- Drawing Up the BCP Plan:

Mapping out a strategy is one of the most important components of a business continuity plan. The objectives of the plan should be clearly understood with goals set accordingly. A company should use this opportunity to identify the key processes and the people who will keep it running.

To draw up the plan, companies need to make a list of all the disruptions that could affect a company’s operations. Pinpoint critical functions in everyday business processes and formulate practical recovery strategies for each possible disaster scenario.

- Conduct Business Impact Analysis:

After all the potential threats are identified, they should be thoroughly analyzed. A proper business impact analysis (BIA) should be in place. Extensive lists may need to be prepared, depending on the company’s setup and geographical location.

The list can include floods, hurricanes, fires, volcanic eruptions, and even tsunamis. Apart from these natural disasters, other threats have a much higher probability of occurring. These can include cyberattacks, downtime due to power outages, data corruption, system failures, hardware faults, and other malicious threats to data security.

- Educate and Train:

Handling business continuity requires knowledge beyond that of IT professionals and those with cybersecurity proficiency. Upper management needs to lay out the objectives, requirements, and key components of the plan before the whole team. Develop a comprehensive training program to help the team develop the required skills.

- Isolate Sensitive Info:

Every business works with critical data that carries the highest security priority. Such data, when compromised or leaked, can spell the end for a company or organization. Financial records and other mission-critical information, such as user login credentials, require storage where recovery is convenient and easy. Store data according to its importance to the business.

- Backup Important Data:

Every company has some critical data, which is irreplaceable. Hence, every recovery or backup plan should include creating copies of anything which is not replaceable. In a Managed Service Provider’s (MSP) case, it includes files, data on customer and employee records, business emails, etc. The plan in place should facilitate quick recovery so that businesses can recover tomorrow from any disaster that occurs today.

- Protect Hard Copy Data:

Electronic or digital data is the main focus of modern IT security strategies. There is still an enormous volume of physical documents that businesses need to maintain daily.

For example, a typical MSP involves working with an assortment of tax documents, contracts, and employee files, which are as important as the data saved on the hard drives. Convert documents that can be digitized to minimize the loss of physical documents.

- Designate a Recovery Site:

Disasters have the potential to wipe out a company’s data center completely.

Companies should prepare for the worst, by designating a secondary site which would act as a back-up for the primary site. The second site should be equipped with the required tools and systems to recover affected systems to ensure that the business processes continue.

- Set up a Communications Program:

Communication within the company is vital in times of crisis. Companies should consider drafting sample messages in advance to expedite communications to suppliers and partners in times of crisis.

Business Continuity teams can use a detailed communication plan to coordinate their efforts efficiently.

- Test, Measure, and Update:

Every important business program should be tested and measured for its effectiveness, and business continuity plans are no exceptions. Testing should include running simulations to test the team’s level of preparedness during a crisis. Based on the results, additional modifications and tweaks can be made.

Download Our Sample Business Continuity Plan Template

Benefits of a Business Continuity Plan

A business continuity plan involves identifying and listing out all potential risks and threats a company may face and laying out appropriate policies to mitigate those risks in case of any disaster or crisis. A properly implemented business continuity plan would help any company to remain operational even in the wake of a disaster. Outlined below are some of the greatest advantages of having a business continuity plan in place:

Business Remains Operational During Disaster

Disasters can happen at any time, unannounced. Businesses need to recover from such incidents as quickly as possible to ensure there are no major disruptions in business processes. Business Continuity Plans can help companies remain operational throughout the disaster or the business recovery phase.

Avoid Expensive Downtime

An Aberdeen Group report indicated that downtime could cost small-scale organizations up to $8,600 per hour. If the system is down, businesses lose money and customers, and in certain cases even their reputation is in danger. A proper BCP can prevent lost opportunities during an outage.

Protect Against Different Disasters

Disasters and crises are not limited to events such as fires, tornadoes, or pandemics. A crisis can also arise from hardware failures, power outages, cybercrime, and various forms of human error. Thus, companies need to protect themselves not only from natural disasters but from all other forms of outages and downtime. A BCP mitigates these risks.

Gain a Competitive Advantage

In the event of a national or global crisis, a business’s reputation can be bolstered if it remains up and running while its competitors are down. Clients can look more favorably on the company as they associate a certain level of reliability with it. Putting a BCP in place can help companies stay operational during such times, giving them a clear competitive edge.

Giving Assurance to Employees

It is natural for employees to worry if systems are compromised due to a crisis. This situation makes them worry about how and when to proceed with their delegated tasks, negatively affecting the workflow. This scenario is especially true for customer-centric organizations. Having a BCP in place can help prepare a company’s staff for what to do in such situations and help keep business processes running smoothly. A clear action plan can do wonders for employees, as it increases morale and job satisfaction.

Gain Peace of Mind

Having a detailed, tried, and tested BCP in place can alleviate much of management’s worries and stress, freeing them to focus on other core competencies. Companies can carry on confidently with their operations, knowing that there are measures in place to counter any system outage or downtime. BCPs are thus critical to a company’s longevity, helping it defend against potential risks while enhancing its reputation.

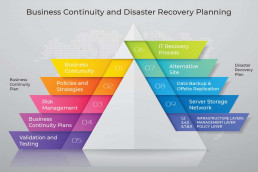

Stages of Developing a Strong BCP

Business Impact Analysis: You will identify resources and functions that are time-sensitive and need an immediate reaction.

GAP analysis: Analyze the business continuity management system you currently have, and evaluate how ready and mature your IT emergency management system is to face evolving threats.

Improvement planning: This analysis will tell you what you need to work on to help improve the maturity of your Business Continuity Management (BCM) and what will help it improve over time.

Recovery: A clear plan needs to be outlined on which steps to take to fully recover critical business functions and get all applications back online smoothly.

Organization: A continuity team should be put in place who will come up with this plan and be responsible for managing all types of disruptions.

Training: The continuity team needs regular training and testing, completing scenarios and exercises that deal with the multitude of threats and disasters your company can face. The team should also regularly review and update the plan and strategies.

What Does a Business Continuity Plan Typically Include?

It’s critical to have a detailed plan for how to run business operations and maintain them for both the interim and possible longer-term disruptions and outages.

A BCP should outline what to do with data backups, equipment and supplies, and backup site locations, and how to reestablish technical productivity and software integrity so that vital business functions can continue. It should give step-by-step instructions to administrators, including all necessary information for backup site providers, key personnel, and emergency responders.

Remember these three keys to creating a successful business continuity plan:

- Disaster recovery: Consolidate a method to recover a data center, possibly at an external site. If the primary site is compromised, it becomes obsolete and inoperable.

- High availability: Ensure that processes are highly available. In case of a local failure, the business can still function with limited access to applications despite the crisis in hardware/software, business processes, or the shutdown of physical facilities.

- Continuity of operations: The main goal is to keep processes and applications running during an outage, and to test them during planned outages. Scheduling backups and planning for maintenance is key to staying active.

Keep up with your competitors! As the Covid-19 crisis has shown, it’s essential to put a Business Continuity Plan in place to defend against every type of disaster using our best practices. Failure to do so can mean financial loss or damage to your company’s reputation. Start preparing, contact us or use our free BCP template to get started today.

What is Data Integrity? Why Your Business Needs to Maintain it

Definition of Data Integrity

Data Integrity is a process to ensure data is accurate and consistent over its lifecycle. Good data is invaluable to companies for planning – but only if the data is accurate.

Data Integrity typically refers to computer data. It can be applied more broadly, though, to any data collection. Even a field technician who makes onsite repairs can collect data. Protocols can still be used to ensure data stays intact.

Threats to the Integrity of Data

There are a few ways that data can be damaged:

- Damage in transit – Data can become damaged during transfer either to a storage device or over a network.

- Hardware failure – Failure in a storage device or other computer hardware can cause corruption.

- Configuration problems – A misconfiguration in a computing system, such as a software or security application, can damage data.

- Human error – People make mistakes, and can accidentally damage data.

- Deliberate breach – A person or software infiltrates a computer and changes data. For example, some malware encrypts data and holds it hostage for payment. A hacker might breach the system and make changes.

The Importance of Data Integrity

Critical business decisions depend on accurate data. As data collection increases, companies use it to measure effectiveness.

If data is damaged, any decisions based on that data are suspect. For example, a business sets a tracking cookie on its web page. This cookie collects the number of page views and sign-ups by visitors. If the cookie is misconfigured, it might show an artificially high sign-up rate. The business might decide to spend less on marketing, leading to less traffic and fewer sign-ups.

Data integrity is crucial because it’s a window into the organization. If that data is damaged, it’s hard to see the details. Worse, manipulated data can lead to bad business decisions.

Aspects of Data Integrity

Who, what, when

Data should carry the time, date, and identity of whoever recorded it. It could include a brief overview, or it might be a timestamp of access to a website. It could be a note from a tech support agent.

Readability and Formatting

The data should be formatted and easy to read. In the case of a tech support agent, use a standard format to document the ticket. For a website, logging should be automatic and meaningful. A field technician should write legibly on forms, and consider transcribing them digitally.

Timely

Log data as it happens. Any delay in recording creates an opportunity for loss. Data should be recorded as it is observed, without interpretation.

Original

Good data is kept in its original format, secured, and backed up. Create reports and interpretations using copies of the original data. This helps reduce the chances of damaging the original.

Accurate

Make sure data follows protocols, and is free from errors. A tech support agent might log a script. A website logger might record data in a standard file type like XML. A field technician should complete all fields on a paper form.

How to ensure data integrity

Validate input

Check input at the time it’s recorded. For example, a contact form on a website might screen for a valid email address. Digital input can be automated, such as electronic forms that allow specific information. Review paper forms and logs and correct any errors.

Input validation can also be used to block cyber attacks, for example by preventing SQL injection. This is one way Data Integrity works together with data security.
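Both ideas can be sketched with Python's standard library: a format check on an email field at the time of entry, and a parameterized query that treats user input strictly as data. The regular expression is a deliberately loose plausibility check rather than a full email validator, and the `users` table and function names are hypothetical.

```python
import re
import sqlite3

# Loose plausibility check: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def is_plausible_email(value):
    return bool(EMAIL_RE.match(value))

def find_user(conn, email):
    # The "?" placeholder keeps the input out of the SQL text itself,
    # so it cannot change the structure of the query.
    query = "SELECT id, email FROM users WHERE email = ?"
    return conn.execute(query, (email,)).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.execute("INSERT INTO users (email) VALUES (?)", ("ana@example.com",))

print(is_plausible_email("ana@example.com"))  # True
print(is_plausible_email("not-an-email"))     # False
print(find_user(conn, "ana@example.com"))     # [(1, 'ana@example.com')]
print(find_user(conn, "' OR '1'='1"))         # []
```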

Validate data

Once collected, the data is in a raw form. Validation checks that the data is correct, meaningful, and secure. Automate digital validation by using scripts to filter and organize data. For paper data, transcribe notes into digital format. Alternatively, physical notes can be reviewed for errors.

Data validation can also happen during transfer, for example when copying to a USB drive or downloading from the internet. This checks that the copy is identical to the original. Network protocols use error-checking, but it’s not foolproof. Validation is an extra step to ensure integrity.
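One common way to confirm that a copy matches the original is to compare cryptographic hashes of the two files. The sketch below uses SHA-256 from Python's standard library; the helper names and file names are hypothetical, and the choice of SHA-256 is an assumption rather than something this article prescribes.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path

def sha256_of(path, chunk_size=65536):
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def copy_and_verify(src, dst):
    """Copy src to dst and report whether the copy is byte-for-byte identical."""
    shutil.copyfile(src, dst)
    return sha256_of(src) == sha256_of(dst)

with tempfile.TemporaryDirectory() as tmp:
    original = Path(tmp) / "report.csv"
    original.write_bytes(b"id,total\n1,42\n")
    print(copy_and_verify(original, Path(tmp) / "report_copy.csv"))  # True
```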

Make backups

A good backup creates a duplicate in a different location. Copying a folder onto a USB drive is one way to create a backup. Storing files in the cloud is another. Even data centers can create backups by mirroring content with a second data center.

Backups should include the original raw data. Reports can always be recreated from the original data. Once lost, raw data is irreplaceable.

Implement access controls

Access to data should be based on business need. Restrict unauthorized users from accessing data. For example, a tech support agent does not need access to client payment card data.

Even with physical paper data, access controls and management are essential. Sensitive physical records should be kept locked and secure. Limiting access reduces the chances of corruption and loss.
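A need-based access policy can be sketched as a simple lookup from role to permitted resources. The role names, resource names, and the `can_access` helper below are hypothetical; a real system would usually rely on the access control features of its database or identity provider.

```python
# Hypothetical mapping of roles to the data each role needs for its job.
ROLE_PERMISSIONS = {
    "support_agent": {"tickets"},
    "billing_clerk": {"tickets", "payment_cards"},
}


def can_access(role: str, resource: str) -> bool:
    """Allow access only when the role is explicitly granted the resource."""
    # Unknown roles get an empty permission set, so the default is to deny.
    return resource in ROLE_PERMISSIONS.get(role, set())


agent_sees_cards = can_access("support_agent", "payment_cards")
clerk_sees_cards = can_access("billing_clerk", "payment_cards")
unknown_role = can_access("contractor", "tickets")
```

Denying by default means a forgotten or misspelled role fails safe instead of exposing data.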

Maintain an audit trail

An audit trail records access and usage of data. For example, a database server might record the username, time, and date for each action in a database. Likewise, a library might keep a ledger of the names and dates of guests.

Audit trails are data and should follow the guidelines in this article. They aren’t typically used unless there’s a problem. The audit trail can help identify the source of data loss. An audit trail might show a username and time stamp for access. This helps identify and stop the problem.
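To make the idea concrete, here is a minimal in-memory sketch of an audit trail that records a username, an action, and a time stamp, then looks up one user's activity. The `AuditEntry` and `AuditTrail` names are assumptions for illustration; production systems normally write audit records to protected, persistent storage.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class AuditEntry:
    """One immutable audit record: who did what, and when."""
    username: str
    action: str
    timestamp: datetime


class AuditTrail:
    """Append-only, in-memory audit trail (hypothetical helper)."""

    def __init__(self) -> None:
        self._entries: List[AuditEntry] = []

    def record(self, username: str, action: str,
               timestamp: Optional[datetime] = None) -> AuditEntry:
        # Default to the current UTC time so entries compare across servers.
        entry = AuditEntry(username, action, timestamp or datetime.now(timezone.utc))
        self._entries.append(entry)
        return entry

    def entries_for(self, username: str) -> List[AuditEntry]:
        """Return every recorded action for one user, oldest first."""
        return [entry for entry in self._entries if entry.username == username]


trail = AuditTrail()
trail.record("alice", "updated customer phone number")
trail.record("bob", "viewed invoices")
trail.record("alice", "deleted ticket 17")

alice_actions = [entry.action for entry in trail.entries_for("alice")]
```

If ticket 17 goes missing, filtering the trail by user or by time narrows down where the loss happened.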

Database Integrity

In database theory, data integrity includes three main points:

- Entity Integrity – Each table needs a unique primary key to distinguish one row from another.

- Referential Integrity – Tables can refer to other tables using a foreign key. Each foreign key value must match an existing row in the referenced table.

- Domain Integrity – The database has pre-set categories and values. This is similar to screening input and reading reports.

Within a database, data integrity has this narrower, technical meaning. These rules govern the inner workings of a database. Even so, the database is still part of an organization. The advice in this article will help your organization create policies on how to keep the database intact.
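The three kinds of database integrity can be illustrated with SQLite, which ships with Python. This is a sketch under assumed table and column names (`customers`, `tickets`, `status`); a primary key enforces entity integrity, a foreign key enforces referential integrity, and a `CHECK` constraint enforces domain integrity.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")

conn.execute("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,          -- entity integrity
        name TEXT NOT NULL
    )
""")
conn.execute("""
    CREATE TABLE tickets (
        ticket_id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL
            REFERENCES customers(customer_id),    -- referential integrity
        status TEXT NOT NULL
            CHECK (status IN ('open', 'closed'))  -- domain integrity
    )
""")

conn.execute("INSERT INTO customers VALUES (1, 'Acme Plumbing')")
conn.execute("INSERT INTO tickets VALUES (100, 1, 'open')")


def is_rejected(statement: str) -> bool:
    """Return True when the database refuses the statement."""
    try:
        conn.execute(statement)
    except sqlite3.IntegrityError:
        return True
    return False


duplicate_key = is_rejected("INSERT INTO customers VALUES (1, 'Duplicate')")
missing_parent = is_rejected("INSERT INTO tickets VALUES (101, 99, 'open')")
bad_status = is_rejected("INSERT INTO tickets VALUES (102, 1, 'maybe')")
```

Each of the three bad inserts violates one rule, so the database refuses all of them and the stored data stays consistent.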

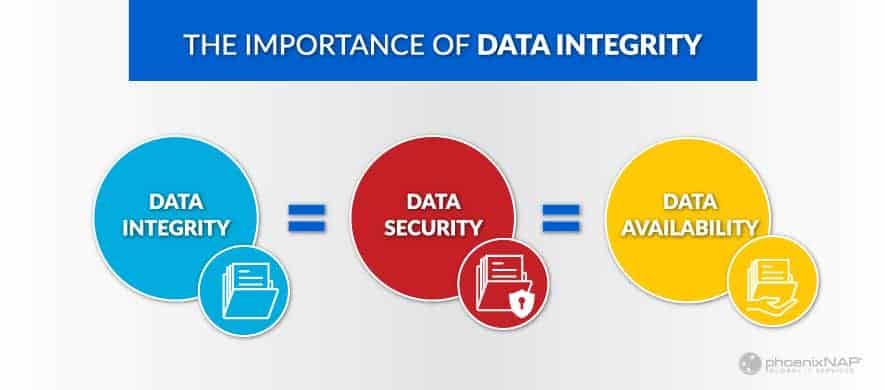

Data Security versus Data Integrity

Data Security is related to Data Integrity, but they are not the same thing. Data Security refers to keeping data safe from unauthorized users. It includes hardware solutions like firewalls and software solutions like authentication. Data Security often goes hand-in-hand with preventing cyber attacks.

Data Integrity is a broader application of policies and solutions to keep data pure and unmodified. It can include Data Security to prevent unauthorized users from modifying data. But it also provides for measures to record, maintain, and preserve data in its original condition.

Conclusion

Data Integrity keeps electronic data intact. After all, reports are only as good as the data they are based on. Data integrity can also apply to information outside the computer world. Whether it’s digital or printed, ensuring data integrity forms the base for good business decisions.

RTO (Recovery Time Objective) vs RPO (Recovery Point Objective)

In this article you will learn:

- What Recovery Time Objective (RTO) and Recovery Point Objective (RPO) are, and why they are critical to your data recovery and protection strategy.

- How disaster recovery planning helps you avoid catastrophic losses and helps your business survive when an emergency strikes.

- How business continuity planning minimizes the loss of revenue while also boosting customer confidence.

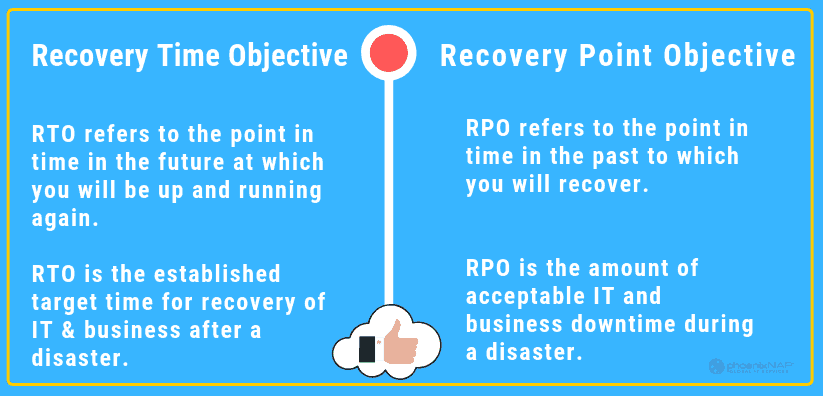

Recovery Time Objective and Recovery Point Objective may sound alike, but they are entirely different metrics in disaster recovery and business continuity management.

Find out how to plan accordingly with the proper resources before you need them. Much like having insurance, you may never use it – or it may save your company.

In this article, we will examine the critical differences between RPO and RTO and clear up any confusion!

RTO: Recovery Time Objective

RTO dictates how quickly your infrastructure needs to be back online after a disaster. Sometimes, we use RTO to define the maximum downtime a company can handle and maintain business continuity. This is often a target time set for service restoration after a disaster. For example, a Recovery Time Objective of 2 hours aims to have everything back up and running within two hours of being notified of a service disruption.

Sometimes, such an RTO is not achievable. A hurricane or a flood can bring down a business, leaving it down for weeks. However, some organizations are more resilient to outages.

For example, a small plumbing company could get by with paperwork orders and invoicing for a week or more. A business with a web-based application that relies on subscriptions might be crippled after only a few hours.

In the case of outsourced IT services, RTO is defined within a Service Level Agreement (SLA). IT and other service providers typically include the following support terms in their SLA:

- Availability: the hours you can call for support.

- Response time: how quickly they contact you after a support request.

- Resolution time: how quickly they will restore the services.

Depending on your business requirements, you may need a shorter RTO. A shorter RTO also costs more. Whatever RTO you choose, it should be cost-effective for your organization.

Businesses can handle RTO internally. If you have an in-house IT department, there should be a goal for resolving technical problems. The ability to fulfill the RTO depends on the severity of the disaster. An objective of one hour is attainable for a server crash. However, it might not be realistic to expect a one-hour solution in case of a natural disaster in the area.

RTO includes more than just the amount of time needed to recover from a disaster. It should also include steps to mitigate or recover from different disasters. The plan needs to contain proper testing for the measures.

RPO: Recovery Point Objective

An RPO measures the acceptable amount of data loss after a disruption of service.

For example, lost sales may become an excessive burden against costs after 18 hours. That threshold may put a company below any sales targets.

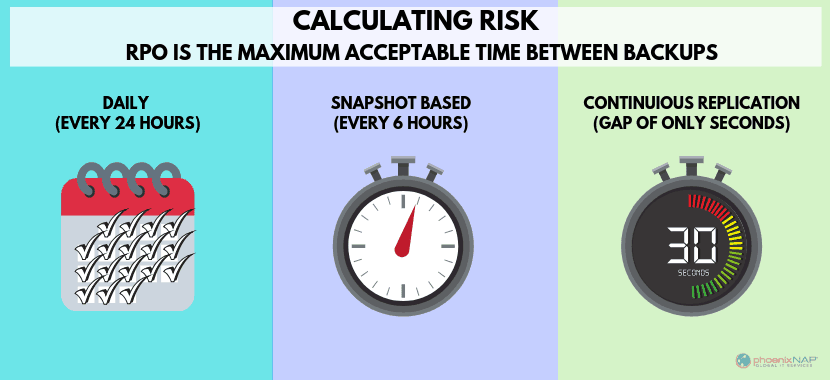

Backups and mirror-copies of data are an essential part of RPO solutions. It is necessary to know how much data is an acceptable loss. Some businesses address this by calculating storage costs versus recovery costs. This helps determine how often to create backups. Other businesses use cloud storage to create a real-time clone of their data. In this scenario, a failover happens in a matter of seconds.

Similar to RTO and acceptable downtime, some businesses have better loss tolerance for data. Losing 18 hours of records is possible for a small plumbing company, but it may not be detrimental to business operations. In contrast, an online billing company may find itself in trouble after only a few minutes’ worth of data loss.

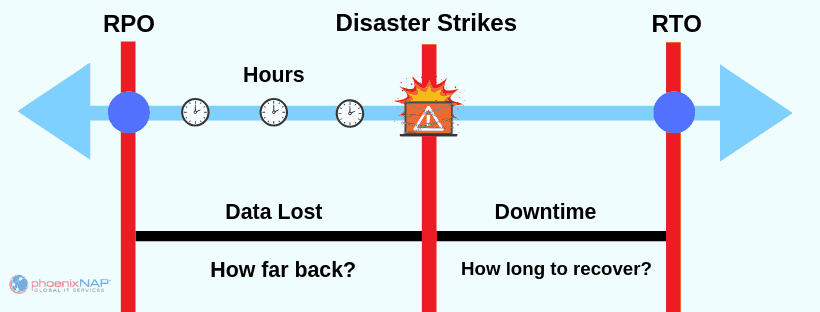

RPO is categorized by time and technology:

- 8-24 hours: These objectives rely on external storage data backups of the production environment. The last available backup serves as a restoration point.

- Up to 4 hours: These objectives require ongoing snapshots of the production environment. In a disaster, getting data back is faster and brings less disruption to your business.

- Near zero: These objectives use enterprise cloud backup and storage solutions to mirror or replicate data. Frequently, these services replicate data in multiple geographic locations for maximum redundancy. The failover and failback are seamless.
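The categories above can be sketched as a small lookup that suggests a backup technique for a target RPO. Several details are assumptions for illustration: the list does not say how small "near zero" is (0.1 hours is used here), it does not cover targets between 4 and 8 hours (they are assigned to snapshots, the stricter option), and targets above 24 hours are treated like the 8-24 hour tier.

```python
def rpo_tier(rpo_hours: float, near_zero_cutoff_hours: float = 0.1) -> str:
    """Suggest a backup technique for a target RPO given in hours."""
    if rpo_hours < 0:
        raise ValueError("RPO cannot be negative")
    if rpo_hours <= near_zero_cutoff_hours:
        # Near zero: mirror or replicate data continuously.
        return "replication"
    if rpo_hours < 8:
        # Up to 4 hours in the list above; 4-8 hours is an assumed extension.
        return "snapshots"
    # 8-24 hours in the list above; longer targets are an assumed extension.
    return "external backups"


billing_platform = rpo_tier(0)
web_shop = rpo_tier(4)
plumbing_company = rpo_tier(18)
```

Adjust the cutoffs to match your own tolerance for data loss and the options your provider offers.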

Both RTO and RPO involve periods of time for the measurements. However, while RTO focuses on bringing hardware and software online, RPO focuses on acceptable data loss.

Calculation of Risk

Both RTO and RPO are calculations of risk. RTO is a calculation of how long a business can sustain a service interruption. RPO is a calculation of how recent the data will be when it is recovered.

Calculating RTO

We base RTO calculation on projection and risk management. A seldom-used application may be just as critical for business continuity as a frequently used one. Hence, the importance of an application does not depend on how often it is used. You need to decide which services can be unavailable, for how long, and whether they are critical to your business.

To calculate RTO, consider these factors:

- The cost per hour of outage

- The importance and priority of individual systems

- Steps required to mitigate or recover from a disaster (including individual components or processes)

- Cost/benefit equation for recovery solutions
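One way to weigh these factors is to add the yearly price of each recovery solution to the downtime cost it would leave you with. The model and every figure below are hypothetical: an outage cost of 1,500 per hour, one incident per year, and three made-up recovery options.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecoveryOption:
    """A candidate recovery solution (all values are illustrative)."""
    name: str
    rto_hours: float    # how long recovery takes with this option
    annual_cost: float  # yearly price of the solution


def expected_annual_cost(option: RecoveryOption,
                         outage_cost_per_hour: float,
                         incidents_per_year: float) -> float:
    """Solution price plus the downtime cost it still leaves you with."""
    downtime_cost = option.rto_hours * outage_cost_per_hour * incidents_per_year
    return option.annual_cost + downtime_cost


options = [
    RecoveryOption("manual restore", rto_hours=24, annual_cost=2_000),
    RecoveryOption("warm standby", rto_hours=4, annual_cost=15_000),
    RecoveryOption("hot failover", rto_hours=0.5, annual_cost=60_000),
]

# Pick the option with the lowest combined cost under these assumptions.
best = min(options, key=lambda option: expected_annual_cost(option, 1_500, 1))
```

With these numbers the middle option wins: the cheapest solution leaves too much downtime, and the fastest one costs more than the downtime it prevents.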

Calculating RPO

Calculating an RPO is also based on risk. In a disaster, a degree of data loss may be unavoidable. RPO becomes a balancing act between the impact of data loss on the business and the cost of mitigation. A few customers who are angry because their orders were lost might be an acceptable loss. In contrast, hundreds of lost transactions might be a massive blow to a business.

Consider these factors when determining your RPO:

- The maximum tolerable amount of data loss that your organization can sustain.

- The cost of lost data and operations

- The cost of implementing recovery solutions

RPO is the maximum acceptable time between backups. If data backups are performed every 6 hours, and a disaster strikes 1 hour after the backup, you will lose only one hour of data. This means you are 5 hours under the projected RPO.
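The arithmetic in this example can be sketched with Python's `datetime` module. The dates and the helper names `data_loss_window` and `rpo_margin` are hypothetical; the 6-hour backup interval and the 1-hour gap come from the example above.

```python
from datetime import datetime, timedelta


def data_loss_window(last_backup: datetime, disaster_time: datetime) -> timedelta:
    """Data created after the last backup is what a disaster destroys."""
    if disaster_time < last_backup:
        raise ValueError("disaster_time must not precede last_backup")
    return disaster_time - last_backup


def rpo_margin(rpo: timedelta, last_backup: datetime,
               disaster_time: datetime) -> timedelta:
    """Positive result: loss stayed under the RPO. Negative: RPO was exceeded."""
    return rpo - data_loss_window(last_backup, disaster_time)


rpo = timedelta(hours=6)                   # backups run every 6 hours
last_backup = datetime(2024, 1, 1, 12, 0)  # illustrative timestamps
disaster = datetime(2024, 1, 1, 13, 0)     # strikes 1 hour after the backup

lost = data_loss_window(last_backup, disaster)
margin = rpo_margin(rpo, last_backup, disaster)
```

The worst case is a disaster just before the next backup, when the loss approaches the full 6-hour RPO and the margin shrinks to zero.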

Disaster Recovery Planning

Disasters come in many forms, such as a hurricane, flood, wildfire, or other natural disaster. A disaster could also refer to a catastrophic failure of assets or infrastructure, like power lines, bridges, or servers.

Disasters include all types of cybersecurity attacks that destroy your data, compromise credit card information, or even disable an entire site.

With so many definitions of disaster, it is helpful to define them in terms of what they have in common. For organizations and IT departments, a disaster is an event that disrupts normal business operation.

Dealing with disasters starts with planning and prevention. Many businesses use cloud solutions in different geographical regions to minimize the risk of downtime. Some install redundant hardware to keep the IT infrastructure running.

A crucial step in data recovery is to develop a Disaster Recovery plan.

Consider the probability of different kinds of disasters. Various disasters may warrant different response plans. For example, in the Pacific Northwest, hurricanes are rare, but earthquakes can occur. In Florida, the reverse is true. Cyber-attacks may be more of a threat to larger businesses with extensive online presence than smaller ones. A DDoS attack might warrant a different response than a data breach.

A Disaster Recovery Plan helps to bring systems and processes online much faster than ad hoc solutions. When everyone plays a specific role, a recovery strategy can proceed quickly. A DR plan also helps put resources in place before you need them. Therefore, response plans improve Recovery Time and Recovery Point Objectives.

Difference Between RTO and RPO is Critical

While the two are closely related, it is essential to understand the differences between Recovery Time Objective and Recovery Point Objective.

RTO refers to the amount of time you need to bring a system back online. RPO is a business calculation for acceptable data loss from downtime.

Improve these metrics and employ a Disaster Recovery plan today.

What is Business Continuity Management (BCM)? Framework & Key Strategies

Business continuity management is a critical process. It ensures your company maintains normal business operations during a disaster with minimal disruption.

BCM works on the principle that good response systems mitigate damages from theoretical events.

What is Business Continuity Management? A Definition

Business continuity management is defined as the advanced planning and preparation an organization undertakes to maintain business functions, or quickly resume them, after a disaster has occurred. It also involves defining potential risks, including fire, flood, or cyber attacks.

Business leaders plan to identify and address potential crises before they happen. They then test those procedures to ensure that they work, and periodically review the process to make sure that it is up to date.

Business Continuity Management Framework

Policies and Strategies

Continuity management is about more than the reaction to a natural disaster or cyber attack. It begins with the policies and procedures developed, tested, and used when an incident occurs.

The policy defines the program’s scope, key parties, and management structure. It needs to articulate why business continuity is necessary, and governance is critical in this phase.

Knowing who is responsible for the creation and modification of a business continuity plan checklist is one component. The other is identifying the team responsible for implementation. Governance provides clarity in what can be a chaotic time for all involved.

The scope is also crucial. It defines what business continuity means for the organization.

Is it about keeping applications operational, products and services available, data accessible, or physical locations and people safe? Businesses need to be clear about what is covered by a plan whether it’s revenue-generating components of the company, external facing aspects, or some other subset of the total organization.

Roles and responsibilities need to be assigned during this phase as well.

These may be roles that are obvious based on job function, or specific, given the type of disruption that may be experienced. In all cases, the policy, governance, scope, and roles need to be broadly communicated and supported.

Business Impact Assessment

The impact assessment is a cataloging process to identify the data your company holds, where it’s stored, how it’s collected, and how it’s accessed. It determines which of those data are most critical and how much downtime is acceptable should that data or those apps be unavailable.

While companies aim for 100 percent uptime, that rate is not always possible, even given redundant systems and storage capabilities. This phase is also the time when you need to calculate your recovery time objective, which is the maximum time it would take to restore applications to a functional state in the case of a sudden loss of service.

Also, companies should know the recovery point objective, which is the age of data that would be acceptable for customers and your company to resume operations. It can also be thought of as the data loss acceptability factor.

Risk Assessment

Risk comes in many forms. A Business Impact Analysis and a Threat & Risk Assessment should be performed.

Threats can include bad actors, internal players, competitors, market conditions, political matters (both domestic and international), and natural occurrences. A key component of your plan is to create a risk assessment that identifies potential threats to the enterprise.

Risk assessment identifies the broad array of risks that could impact the enterprise.

Identifying potential threats is the first step and can be far-reaching. This includes:

- The impact of personnel loss

- Changes in consumer or customer preferences

- Internal agility and ability to respond to security incidents with a plan

- Financial volatility

Regulated companies need to factor in the risk of non-compliance, which can result in hefty financial penalties and fines, increased agency scrutiny and the loss of standing, certification, or credibility.

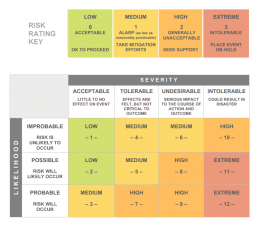

Each risk needs to be articulated and detailed. In the next phase, the organization needs to determine the probability of each risk happening and the potential impact of each one. Likelihood and potential impact are key measures when it comes to risk assessment.

Once the risks have been identified and ranked, the organization needs to determine what its risk tolerance is for each potentiality. What are the most urgent, critical issues that need to be addressed? At this phase, potential solutions need to be identified, evaluated, and priced. With this new information, which includes probability and cost, the organization needs to prioritize which risks will be addressed.

The organization then decides which of the ranked risks to address first. Note that this process is not static. It needs to be regularly discussed to account for new threats that emerge as technologies, geopolitics, and competition evolve.
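A common way to rank risks is to multiply the probability of each one by its estimated impact and sort by the result. The sketch below assumes that simple model; the risk names, probabilities, and cost figures are invented for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Risk:
    """A single identified risk (all figures are illustrative)."""
    name: str
    probability: float  # chance of occurring in a year, from 0 to 1
    impact: float       # estimated cost if it occurs

    @property
    def exposure(self) -> float:
        """Probability times impact, used here as the ranking score."""
        return self.probability * self.impact


def rank_risks(risks: List[Risk]) -> List[Risk]:
    """Return risks ordered from highest to lowest exposure."""
    return sorted(risks, key=lambda risk: risk.exposure, reverse=True)


risks = [
    Risk("key personnel loss", probability=0.20, impact=50_000),
    Risk("ransomware attack", probability=0.10, impact=400_000),
    Risk("regional flood", probability=0.02, impact=1_000_000),
]

ranked_names = [risk.name for risk in rank_risks(risks)]
```

A score like this is only a starting point. Risk tolerance and the price of each mitigation still decide what gets addressed first.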

Validation and Testing

The risks and their impacts need to be continuously monitored, measured and tested. Once mitigation plans are in place, those also should be assessed to ensure they are working correctly and cohesively.

Incident Identification

With business continuity, defining what constitutes an incident is essential. Policy documents should clearly describe each type of event, as well as who or what can declare that an incident has occurred. These triggers should prompt the deployment of the business continuity plan as it is defined and bring the team into action.

Disaster Recovery

What’s the difference between business continuity and disaster recovery? The former is the overarching plan that guides operations and establishes policy. Disaster recovery is what happens when an incident occurs.

Disaster recovery is the deployment of the teams and actions set in motion by an incident. It is the net result of the work done to identify risks and remediate them. Disaster recovery is about specific incident responses, as opposed to broader planning.

After an incident, one fundamental task is to debrief, assess the response, and revise plans accordingly.

Role of Communication & Managing Business Continuity

Communication is an essential component of managing business continuity. Crisis communication is one component, ensuring that there are transparent processes for communicating with customers, consumers, employees, senior-level staff, and stakeholders. Consistent communication strategies are essential during and after an incident. Messaging must be consistent and accurate, and it must come from a unified corporate voice.

Crisis management involves many layers of communication, including the creation of tools to indicate progress, critical needs, and issues. The types of communication may vary across constituencies but should be based on the same sources of information.

Resilience and Reputation Management

The risks of not having a business continuity plan are significant. Without preparation, the company is ill-equipped to address pressing issues.

These risks can leave a company flat-footed and can lead to other significant problems, including:

- Downtime for cloud-based servers, systems, and applications. Even minutes of downtime can result in the loss of substantial revenue.

- Credibility loss to reputation and brand identity. Widespread, consistent, or frequent downtime can erode confidence with customers and consumers. Customer retention can plummet.

- Regulatory compliance can be at risk in industries such as financial services, healthcare, and energy. If systems and data are not operational and accessible, the consequences are severe.

Prepare Today, Establish a Business Continuity Management Program

Managing business continuity is about data protection and integrity, the loss of which can be catastrophic.

It should be part of organizational culture. With a systematic approach to business continuity planning, businesses can expedite the recovery of critical activity.

Upgrade Your Security Incident Response Plan (CSIRP): 7-Step Checklist

In this article you will learn:

- Why every organization needs a cybersecurity incident response policy for business continuity.

- The seven critical security incident response steps (in a checklist) to mitigate data loss.

- What to include in the planning process to ensure business operations are not interrupted.

- How to identify which incidents require attention and when to initiate your response.

- How to use threat intelligence to avoid future incidents.

What if your company’s network was hacked today? The business impact could be massive.

Are you prepared to respond to a data security breach or cybersecurity attack? In 2020, it is far more likely than not that you will go through a security event.

If you have data, you are at risk for cyber threats. Cybercriminals are continually developing new strategies to breach systems. Proper planning is a must. Preparation for these events can decrease the damage and loss to you and your stakeholders.

Having a clear, specific, and current cybersecurity incident response plan is no longer optional.

What is an Incident Response Plan?

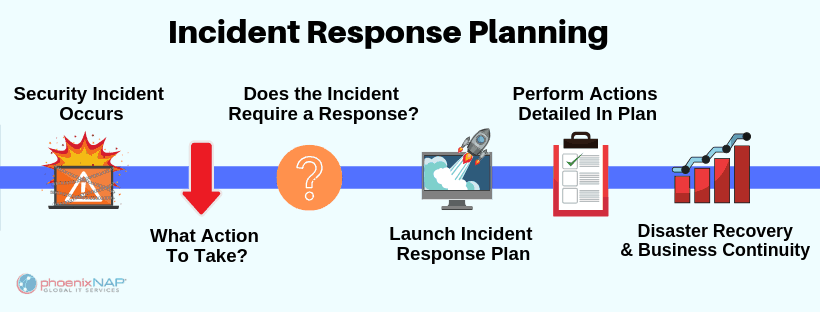

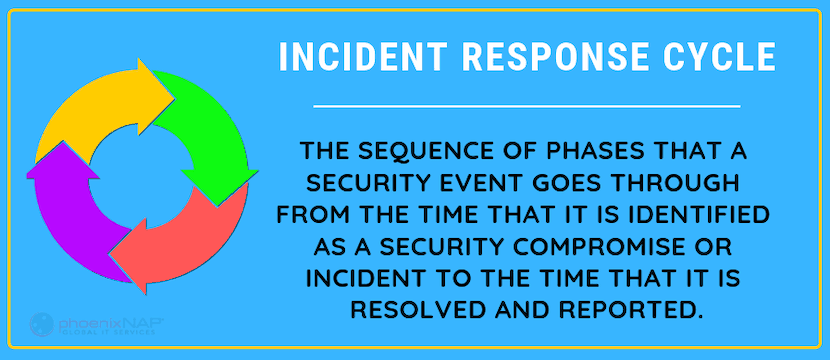

An incident response (IR) plan is the guide for how your organization will react in the event of a security breach.

Incident response is a well-planned approach to addressing and managing reaction after a cyber attack or network security breach. The goal is to minimize damage, reduce disaster recovery time, and mitigate breach-related expenses.

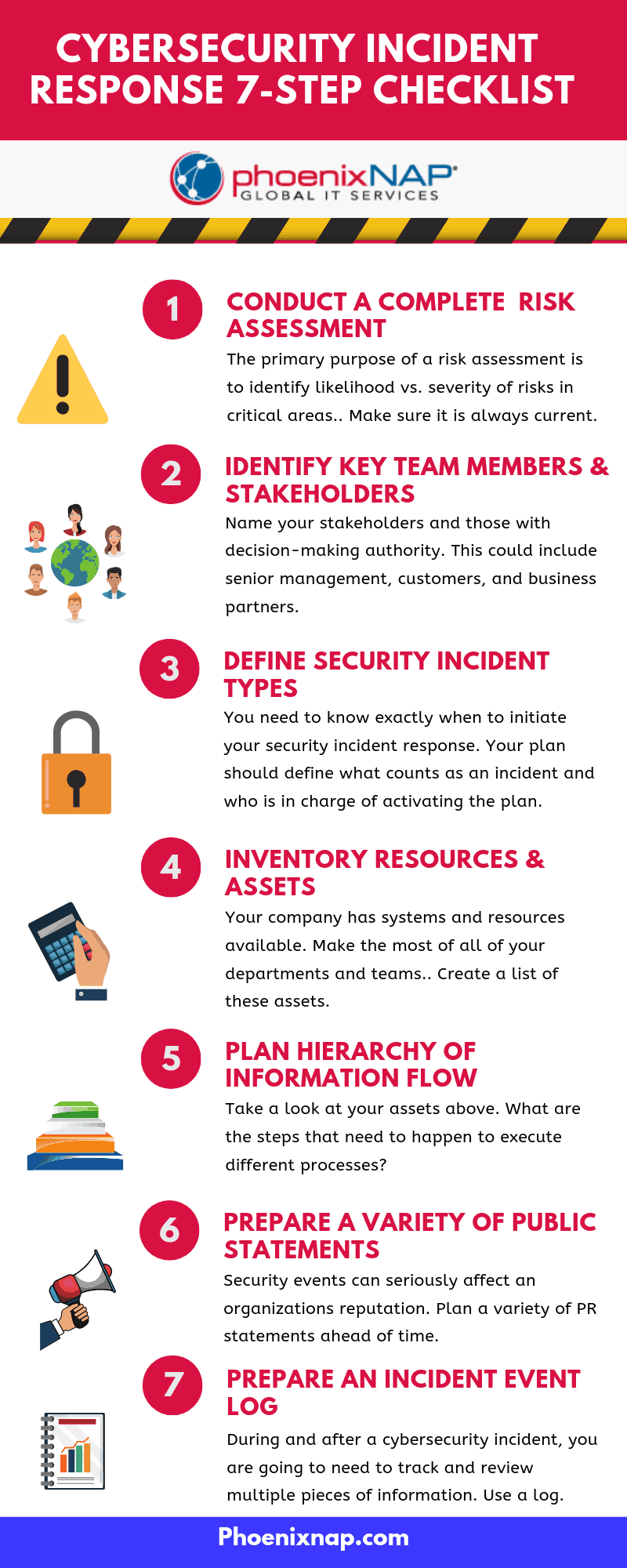

Cybersecurity Incident Response Checklist, in 7 Steps

During a breach, your team won’t have time to interpret a lengthy or tedious action plan.

Keep it simple; keep it specific.

Checklists are a great way to capture the information you need while staying compact, manageable, and distributable. Our checklist is based on the 7 phases of the incident response process, which are broken down in the infographic below.

1. Focus Response Efforts with a Risk Assessment

If you haven’t done a potential incident risk assessment, now is the time. The primary purpose of any risk assessment is to identify likelihood vs. severity of risks in critical areas. If you’ve done a cybersecurity risk assessment, make sure it is current and applicable to your systems today. If it’s out of date, perform another evaluation.

An example of a high-severity risk is a security breach of a privileged account with access to sensitive data. This is especially the case if the number of affected users is high. If the likelihood of this risk is high, then it demands specific contingency planning in your IR plan. The Department of Homeland Security provides an excellent Cyber Incident Scoring System to help you assess risk.

Use your risk assessment to identify and prioritize severe, likely risks. Plan appropriately for medium and low-risk items as well. Doing this will help you avoid focusing all your energy on doomsday scenarios. Remember, a “medium-risk” breach could still be crippling.

2. Identify Key Team Members and Stakeholders

Identify key individuals in your plan now, both internal and external to your CSIRT. Name your stakeholders and those with decision-making authority. This could include senior management, customers, and business partners.

Document the roles and responsibilities of each key person or group. Train them to perform these functions. People may be responsible for sending out a PR statement, activating procedures to contact authorities, or performing containment activities to minimize damage from the breach.

Store multiple forms of contact information both online and offline. Plan to have a variety of contact methods available (don’t rely exclusively on email) in case of system interruptions.

3. Define Incident Types and Thresholds

You need to know exactly when to initiate your IT security incident response. Your response plan should define what counts as an incident and who is in charge of activating the plan.

Know the kinds of cybersecurity attacks that can occur. Stay up to date on the latest trends and new types of data breaches.

Defining potential security incidents can save critical time in the early stages of breach detection. The stronger your CSIRT’s working knowledge of incident types and what they look like, the faster you can invoke a targeted active response.

Educate those outside your CSIRT, including stakeholders. They should also be familiar with these incident definitions and thresholds. Establish a clear communication plan for sharing this information among your CSIRT and other key individuals.
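Incident definitions and thresholds are easier to share when they are written down in a structured form. The sketch below is one hypothetical way to do that; the incident types, metric names, threshold values, and owner roles are all invented and would come from your own risk assessment.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class IncidentDefinition:
    """What counts as an incident, and who activates the plan for it."""
    incident_type: str
    metric: str        # name of the monitored measurement
    threshold: float   # value at which the incident is declared
    activated_by: str  # role responsible for activating the response


# Illustrative definitions only; real values depend on your environment.
DEFINITIONS = [
    IncidentDefinition("credential attack", "failed_logins_per_minute", 50, "security lead"),
    IncidentDefinition("possible DDoS", "requests_per_second", 10_000, "network lead"),
]


def triggered_incidents(observed: Dict[str, float],
                        definitions: List[IncidentDefinition] = DEFINITIONS
                        ) -> List[IncidentDefinition]:
    """Return every definition whose threshold the observed metrics reach."""
    return [
        definition for definition in definitions
        if observed.get(definition.metric, 0) >= definition.threshold
    ]


alerts = triggered_incidents(
    {"failed_logins_per_minute": 72, "requests_per_second": 900}
)
alert_types = [alert.incident_type for alert in alerts]
```

Keeping the responsible role next to each threshold answers both questions at once: whether this is an incident, and who activates the plan.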

4. Inventory Your Resources and Assets

Incident response depends on coordinated action across many departments and groups. You have different systems and resources available, so make the most of all of your departments and response teams.

Create a list of these assets, which can include:

- Business Resources: Team members, security operations center departments, and business partners are all business resources. These can include your legal team, IT, HR, a security partner, or the local authorities.

- Process Resources: A key consideration is to evaluate the processes you can activate depending on the type and severity of a security breach. Partial containment, “watch and wait” strategies, and system shutdowns like web page deactivation are all resources to include in your IR plan.

Once you have inventoried your assets, define how you would use them in a variety of incident types. With careful security risk management of these resources, you can minimize affected systems and potential losses.

5. Recovery Plan Hierarchies and Information Flow

Take a look at your assets above.

What are the steps that need to happen to execute different processes? Who is the incident response manager? Who is the contact for your security partner?

Design a flowchart of authority to define how to get from Point A to Point B. Who has the power to shut down your website for the short term? What steps need to happen to get there?

Flowcharts are an excellent resource for planning the flow of information. NIST has some helpful tools explaining how to disseminate information accurately at a moment’s notice. Be aware that this kind of communication map can change frequently. Make special plans to update these flowcharts after a department restructure or other major transition. You may need to do this outside your typical review process.

6. Prepare Public Statements

Security events can seriously affect an organization’s reputation. Curbing some of the adverse effects around these breaches has a lot to do with public perception. How you interface with the public about a potential incident matters.

Some of the best practices recognized by the IAPP include:

- Use press releases to get your message out.

- Describe how (and with whom) you are solving the problem and what corrective action has been taken.

- Explain that you will publish updates on the root cause as soon as possible.

- Use caution when talking about actual numbers or totalities such as “the issue is completely resolved.”

- Be consistent in your messaging.

- Be open to conversations after the incident in formats like Q&As or blog posts.

Plan a variety of PR statements ahead of time. You may need to send an email to potentially compromised users. You may need to communicate with media outlets. You should have statement templates prepared if you need to provide the public with information about a breach.

How much is too much information? This is an important question to ask as you design your prepared PR statements. For these statements, timing is key – balance fact-checking and accuracy against timeliness.

Your customers are going to want answers fast, but don’t let that rush you into publishing incorrect info. Publicizing wrong numbers of affected clients or the types of data compromised will hurt your reputation. It’s much better to publish metrics you’re sure about than to mop up the mess from a false statement later.

7. Prepare an Incident Event Log

During and after a cybersecurity incident, you are going to need to track and review multiple pieces of information. How, when, and where was the breach discovered and addressed? These details and all supporting info will go into an event log. Prepare a template ahead of time, so it is easy to complete.

This log should include:

- Location, time, and nature of the incident discovery

- Communications details (who, what, and when)

- Any relevant data from your security reporting software and event logs
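A template for such a log can be as simple as a structured record that serializes to JSON. The class and field names below are assumptions based on the three items above, and the sample values are invented.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Communication:
    """One communication during the incident: who, what, and when."""
    who: str
    what: str
    when: str  # ISO 8601 timestamp kept as text for easy serialization


@dataclass
class IncidentLogEntry:
    """Hypothetical event log template covering the items listed above."""
    location: str
    discovered_at: str
    nature: str
    communications: List[Communication] = field(default_factory=list)
    supporting_data: List[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the entry, including nested communications."""
        return json.dumps(asdict(self), indent=2)


entry = IncidentLogEntry(
    location="customer portal web server",
    discovered_at="2024-01-01T13:05:00Z",
    nature="unusual login activity on a privileged account",
)
entry.communications.append(
    Communication("CSIRT lead", "notified legal team", "2024-01-01T13:20:00Z")
)
entry.supporting_data.append("authentication log excerpt")

restored = json.loads(entry.to_json())
```

Filling in a fixed structure during the incident keeps the later review from depending on anyone's memory.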

After an information security incident, this log will be critical. A thorough and effective incident review is impossible without a detailed event log. Security analysts will lean on this log to review the efficacy of your response and lessons learned. This account will also support your legal team and law enforcement both during and after threat detection.

How Often Should You Review Your Incident Response Procedures?

To review the steps in your cybersecurity incident response checklist, you need to test it. Run potential scenarios based on your initial risk assessment and updated security policy.

Perhaps you are in a multi-user environment prone to phishing attacks. Your testing agenda will look different than if you are a significant target for a DDoS attack. At a minimum, annual testing is suggested. But your business may need to conduct these exercises more frequently.

Planning Starts Now For Effective Cyber Security Incident Response

If you don’t have a Computer Security Incident Response Team (CSIRT) yet, it’s time to make one. The CSIRT will be the primary driver for your cybersecurity incident response plan. Critical players should include members of your executive team, human resources, legal, public relations, and IT.

Your plan should be a clear, actionable document that your team can tackle in a variety of scenarios, whether it’s a small containment event or a full-scale front-facing site interruption.

Protecting your organization from cybersecurity attacks is a shared process.

Partnering with the experts in today’s security landscape can make all the difference between a controlled response and tragic loss. Contact PhoenixNAP today to learn more about our global security solutions.

Information Security Risk Management: Plan, Steps, & Examples

Are your mission-critical data, customer information, and personnel records safe from intrusions from cybercriminals, hackers, and even internal misuse or destruction?

If you’re confident that your data is secure, other companies had the same feeling:

- Target, one of the largest retailers in the U.S., fell victim to a massive cyber attack in 2013, with personal information of 110 million customers and 40 million banking records being compromised. This resulted in long-term damage to the company’s image and a settlement of over 18 million dollars.

- Equifax, the well-known credit company, was attacked over a period of months, discovered in July 2017. Cyber thieves made off with sensitive data of over 143 million customers and 200,000 credit card numbers.

These are only examples of highly public attacks that resulted in considerable fines and settlements. Not to mention, damage to brand image and public perception.

Kaspersky Lab’s study of cybersecurity revealed 758 million malicious cyber attacks and security incidents worldwide in 2018, with one third having their origin in the U.S.

How do you protect your business and information assets from a security incident?

The solution is to have a strategic plan, a commitment to Information Security Risk Management.

What is Information Security Risk Management? A Definition

Information Security Risk Management, or ISRM, is the process of managing risks affiliated with the use of information technology.

In other words, organizations need to:

- Identify Security risks, including types of computer security risks.

- Determine business “system owners” of critical assets.

- Assess enterprise risk tolerance and acceptable risks.

- Develop a cybersecurity incident response plan.

Building Your Risk Management Strategy

Risk Assessment

Your risk profile includes analysis of all information systems and determination of threats to your business:

- Network security risks

- Data & IT security risks

- Existing organizational security controls

A comprehensive IT security assessment covers data risks, database security issues, the potential for data breaches, and network and physical vulnerabilities.

Risk Treatment

Risk treatment covers the actions taken to remediate vulnerabilities through multiple approaches:

- Risk acceptance

- Risk avoidance

- Risk management

- Incident management

- Incident response planning

Developing an enterprise solution requires a thorough analysis of security threats to information systems in your business.

Risk assessment and risk treatment are iterative processes that require the commitment of resources in multiple areas of your business: HR, IT, Legal, Public Relations, and more.

Not all risks identified in risk assessment will be resolved in risk treatment. Some will be determined to be acceptable or low-impact risks that do not warrant an immediate treatment plan.
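The split between risks to treat and risks to accept can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the 1 to 5 scales, the likelihood-times-impact score, the `tolerance` threshold, and the sample risks are all assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int      # hypothetical scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Assumed scoring model: likelihood multiplied by impact.
        return self.likelihood * self.impact


def triage(risks, tolerance):
    """Split risks into those to treat (highest score first) and those to accept."""
    treat = sorted(
        (r for r in risks if r.score > tolerance),
        key=lambda r: r.score,
        reverse=True,
    )
    accept = [r for r in risks if r.score <= tolerance]
    return treat, accept


# Illustrative register; the entries are invented for this sketch.
register = [
    Risk("Unpatched web server", likelihood=4, impact=5),
    Risk("Lost visitor badge", likelihood=2, impact=1),
    Risk("Phishing of finance staff", likelihood=3, impact=4),
]

treat, accept = triage(register, tolerance=6)
for risk in treat:
    print(f"TREAT  {risk.name} (score {risk.score})")
for risk in accept:
    print(f"ACCEPT {risk.name} (score {risk.score})")
```

Because assessment and treatment are iterative, a register like this would be re-scored each cycle, and an accepted risk can move into the treatment list when its likelihood or impact changes.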

There are multiple stages to be addressed in your information security risk assessment.

6 Stages of a Security Risk Assessment

A useful guideline for adopting a risk management framework is provided by the U.S. Dept. of Commerce National Institute of Standards and Technology (NIST). This voluntary framework outlines the stages of ISRM programs that may apply to your business.

1. Identify – Data Risk Analysis

This stage is the process of identifying your digital assets that may include a wide variety of information:

- Financial information that must be controlled under Sarbanes-Oxley

- Healthcare records requiring confidentiality under the Health Insurance Portability and Accountability Act (HIPAA)

- Company-confidential information such as product development and trade secrets

- Personnel data that could expose employees to cybersecurity risks such as identity theft

- For those dealing with credit card transactions, data subject to the Payment Card Industry Data Security Standard (PCI DSS)

During this stage, you will evaluate not only the risk potential for data loss or theft but also prioritize the steps to be taken to minimize or avoid the risk associated with each type of data.

The result of the Identify stage is to understand your top information security risks and to evaluate any controls you already have in place to mitigate those risks. The analysis in this stage reveals such data security issues as:

- Potential threats – physical, environmental, technical, and personnel-related

- Controls already in place – secure strong passwords, physical security, use of technology, network access

- Data assets that should or must be protected and controlled

This includes categorizing data for security risk management by the level of confidentiality, compliance regulations, financial risk, and acceptable level of risk.

2. Protection – Asset Management

Once you have an awareness of your security risks, you can take steps to safeguard those assets.

This includes a variety of processes, from implementing security policies to installing sophisticated software that provides advanced data risk management capabilities.

- Provide security awareness training for employees in the proper handling of confidential information.

- Implement access controls so that only those who genuinely need information have access.

- Define security controls required to minimize exposure from security incidents.

- For each identified risk, establish the corresponding business “owner” to obtain buy-in for proposed controls and risk tolerance.

- Create an information security officer position with a centralized focus on data security risk assessment and risk mitigation.

3. Implementation

Your implementation stage includes the adoption of formal policies and data security controls.

These controls will encompass a variety of approaches to data management risks:

- Review identified security threats and existing controls

- Create new controls for threat detection and containment

- Select network security tools for analysis of actual and attempted threats

- Install and implement technology for alerts and for capturing unauthorized access

4. Security Control Assessment

Both existing and new security controls adopted by your business should undergo regular scrutiny.

- Validate that alerts are routed to the right resources for immediate action.

- Ensure that as applications are added or updated, there is a continuous data risk analysis.

- Network security measures should be tested regularly for effectiveness. If your organization includes audit functions, have controls been reviewed and approved?

- Have data business owners (stakeholders) been interviewed to ensure risk management solutions are acceptable? Are they appropriate for the associated vulnerability?

5. Information Security System Authorizations

Now that you have a comprehensive view of your critical data, have defined the threats, and have established controls for your security management process, how do you ensure its effectiveness?

The authorization stage will help you make this determination:

- Are the right individuals notified of on-going threats? Is this done promptly?

- Review the alerts generated by your controls – emails, documents, graphs, etc. Who is tracking response to warnings?

This authorization stage must examine not only who is informed, but what actions are taken, and how quickly. When your data is at risk, the reaction time is essential to minimize data theft or loss.
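One way to check "how quickly" is to compare when each alert was raised with when someone acknowledged it. The sketch below assumes a hypothetical alert record with `raised_at` and `acknowledged_at` fields and a 15-minute target. Neither comes from a specific product, and your own tooling will expose this data differently.

```python
from datetime import datetime, timedelta


def slow_responses(alerts, max_delay):
    """Return IDs of alerts acknowledged too late or never acknowledged."""
    late = []
    for alert in alerts:
        acked = alert["acknowledged_at"]
        if acked is None or acked - alert["raised_at"] > max_delay:
            late.append(alert["id"])
    return late


# Invented sample data for illustration only.
alerts = [
    {"id": "A1", "raised_at": datetime(2024, 1, 1, 9, 0),
     "acknowledged_at": datetime(2024, 1, 1, 9, 5)},
    {"id": "A2", "raised_at": datetime(2024, 1, 1, 9, 0),
     "acknowledged_at": datetime(2024, 1, 1, 10, 30)},
    {"id": "A3", "raised_at": datetime(2024, 1, 1, 9, 0),
     "acknowledged_at": None},
]

late = slow_responses(alerts, max_delay=timedelta(minutes=15))
print("Needs follow-up:", late)
```

A report like this answers both questions from the list above: who is tracking responses, and whether they respond promptly.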

6. Risk Monitoring

Adopting an information risk management framework is critical to providing a secure environment for your technical assets.

Implementing a sophisticated software-driven system of controls and alert management is an effective part of a risk treatment plan.

Continuous monitoring and analysis are critical. Cyber thieves develop new methods of attacking your network and data warehouses daily. To keep pace with this onslaught of activity, you must revisit your reporting, alerts, and metrics regularly.

Create an Effective Security Risk Management Program

Defeating cybercriminals and halting internal threats is a challenging process. Bringing data integrity and availability to your enterprise risk management is essential to your employees, customers, and shareholders.

Create your risk management process and take strategic steps to make data security a fundamental part of conducting business.

In summary, best practices include:

- Implement technology solutions to detect and eradicate threats before data is compromised.

- Establish a security office with accountability.

- Ensure compliance with security policies.

- Make data analysis a collaborative effort between IT and business stakeholders.

- Ensure alerts and reporting are meaningful and effectively routed.

Conducting a complete IT security assessment and managing enterprise risk is essential to identify vulnerability issues.

Develop a comprehensive approach to information security.

PhoenixNAP incorporates infrastructure and software solutions to provide our customers with reliable, essential information technology services:

- High-performance, scalable Cloud services

- Dedicated servers and redundant systems

- Complete software solutions for ISRM

- Disaster recovery services including backup and restore functions

Security is our core focus, providing control and protection of your network and critical data.

Contact our professionals today to discuss how our services can be tailored to provide your company with a global security solution.

Definitive Guide For Preventing and Detecting Ransomware

In this article you will learn:

- Best practices to implement immediately to protect your organization from ransomware.

- Why you should be using threat detection to protect your data from hackers.

- What to do if you become a ransomware victim. Should you pay the ransom? You may be surprised by what the data says.

- Where you should be backing up your data. Hint, the answer is more than one location.

- Preventing ransomware starts with employee awareness.

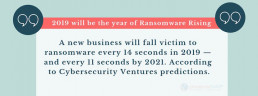

Ransomware has become a lucrative tactic for cybercriminals.

No business is immune from the threat of ransomware.

When your systems come under ransomware attack, it can be a frightening and challenging situation to manage. Once malware infects a machine, it attacks specific files, or even your entire hard drive, and locks you out of your own data.

Ransomware is on the rise, with an increase of nearly 750 percent in the last year.

Cybercrime-related damages are expected to hit $6 trillion by 2021.

The best way to stop ransomware is to be proactive by preventing attacks from happening in the first place. In this article, we will discuss how to prevent and avoid ransomware.

What is Ransomware? How Does it Work?

All forms of ransomware share a common goal: to lock your hard drive or encrypt your files and demand money for access to your data.

Ransomware is one of many types of malware or malicious software that uses encryption to hold your data for ransom.

It is a form of malware that often targets both human and technical weaknesses by attempting to deny an organization the availability of its most sensitive data and/or systems.

These attacks can range from malware that locks a system to full encryption of files and resources until a ransom is paid.

A bad actor uses a phishing attack or another form of hacking to gain entry into a computer system. One way ransomware gets on your computer is through email attachments that you accidentally download. Once your machine is infected, the ransomware encrypts your files and prevents access.

The hacker then makes it clear that the information is stolen and offers to give that information back if the victim pays a ransom.

Victims are often asked to pay the ransom in Bitcoin. If the ransom is paid, the cybercriminals may unlock the data or send a key for the encrypted files. Or, they may not unlock anything after payment, as we discuss later.

How To Avoid & Prevent Ransomware

Ransomware is particularly insidious. Although ransomware often travels through email, it has also been known to take advantage of backdoors or vulnerabilities.

Here are some ways you can avoid falling victim and being locked out of your own data.

1. Back Up Your Systems, Locally & In The Cloud

The first step to take is to always back up your system, both locally and offsite.

This is essential. First, it will keep your information backed up in a safe area that hackers cannot easily access. Secondly, it will make it easier for you to wipe your old system and repair it with backup files in case of an attack.

Failure to back up your system can cause irreparable damage.

Use a cloud backup solution to protect your data. By protecting your data in the cloud, you keep it safe from infection by ransomware. Cloud backups introduce redundancy and add an extra layer of protection.

Keep multiple backups in case the most recent backup was overwritten with ransomware-encrypted files.
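The reason for keeping several backups can be shown with a small sketch that picks the newest backup taken before the infection. The record layout (`taken_at`, `location`) and the sample dates are assumptions made for illustration, and the sketch presumes you can estimate when the infection began.

```python
from datetime import datetime


def latest_clean_backup(backups, infected_at):
    """Return the newest backup taken before the infection, or None if there is none."""
    candidates = [b for b in backups if b["taken_at"] < infected_at]
    return max(candidates, key=lambda b: b["taken_at"], default=None)


# Invented backup catalog: the newest entry was taken after the infection,
# so it may already contain encrypted files.
backups = [
    {"taken_at": datetime(2024, 3, 1, 2, 0), "location": "local"},
    {"taken_at": datetime(2024, 3, 2, 2, 0), "location": "cloud"},
    {"taken_at": datetime(2024, 3, 3, 2, 0), "location": "local"},
]

infected_at = datetime(2024, 3, 2, 14, 0)
restore_point = latest_clean_backup(backups, infected_at)
print(restore_point)
```

With only a single, continuously overwritten backup, the same function would return `None` here, which is the situation the advice above is meant to avoid.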

2. Segment Network Access

Limit the data an attacker can access with network segmentation. With dynamic access control, you help ensure that your entire network is not compromised in a single attack. Segregate your network into distinct zones, each requiring different credentials.
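The idea of zones can be modeled with Python's standard `ipaddress` module. The zone names, address ranges, and allowed flows below are hypothetical. In practice these rules live in firewalls, VLANs, or router access lists, and this sketch only models the decision they make.

```python
import ipaddress

# Hypothetical zones and address ranges, chosen for illustration.
ZONES = {
    "office": ipaddress.ip_network("10.0.1.0/24"),
    "servers": ipaddress.ip_network("10.0.2.0/24"),
    "backups": ipaddress.ip_network("10.0.3.0/24"),
}

# Assumed policy: only these (source zone, destination zone) flows are permitted.
ALLOWED_FLOWS = {("office", "servers"), ("servers", "backups")}


def zone_of(address):
    """Return the name of the zone containing the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, network in ZONES.items():
        if ip in network:
            return name
    return None


def is_allowed(src, dst):
    """Allow traffic within a zone or along an explicitly permitted flow."""
    src_zone, dst_zone = zone_of(src), zone_of(dst)
    if src_zone is None or dst_zone is None:
        return False  # unknown addresses are denied by default
    return src_zone == dst_zone or (src_zone, dst_zone) in ALLOWED_FLOWS


print(is_allowed("10.0.1.15", "10.0.2.8"))  # office to servers
print(is_allowed("10.0.1.15", "10.0.3.4"))  # office to backups
```

Under this assumed policy, an infected office workstation cannot reach the backup zone directly, which limits what a single compromised machine can encrypt.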

3. Early Threat Detection Systems

You can install ransomware protection software that will help identify potential attacks. Unified threat management programs can detect intrusions as they happen and help prevent them. These programs often offer gateway antivirus software as well.

Use a traditional firewall that will block unauthorized access to your computer or network. Couple this with a program that filters web content specifically focused on sites that may introduce malware. Also, use email security best practices and spam filtering to keep unwanted attachments from your email inbox.

Windows offers a function called Group Policy that allows you to define how a group of users can use your system. It can block the execution of files from your local folders. Such folders include temporary folders and the download folder. This stops attacks that begin by placing malware in a local folder that then opens and infects the computer system.
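The logic of such a rule can be illustrated in Python. This is not how Group Policy is configured or enforced. It only models the decision "is this an executable inside a blocked folder?", and the folder paths, user name, and file extensions are assumptions chosen for the example.

```python
from pathlib import PureWindowsPath

# Hypothetical blocked locations: a user's temp and download folders.
BLOCKED_DIRS = [
    PureWindowsPath(r"C:\Users\alice\AppData\Local\Temp"),
    PureWindowsPath(r"C:\Users\alice\Downloads"),
]

# Assumed set of file types treated as executable for this sketch.
EXECUTABLE_SUFFIXES = {".exe", ".bat", ".js", ".scr"}


def execution_blocked(path):
    """Return True if the file is an executable inside a blocked folder."""
    candidate = PureWindowsPath(path)
    if candidate.suffix.lower() not in EXECUTABLE_SUFFIXES:
        return False
    # PureWindowsPath comparisons ignore case, as Windows paths do.
    return any(blocked in candidate.parents for blocked in BLOCKED_DIRS)


print(execution_blocked(r"C:\Users\alice\Downloads\invoice.exe"))
print(execution_blocked(r"C:\Program Files\App\app.exe"))
```

`PureWindowsPath` is used so the sketch runs on any operating system while still following Windows path rules.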

Make sure to download and install any software updates or patches for systems you use. These updates improve how well your computers work, and they also repair vulnerable spots in security. This can help you keep out attackers who might want to exploit software vulnerabilities.

You can even use software designed to detect attacks after they have begun so that the user can take measures to stop them. These measures can include removing the computer from the network, initiating a scan, and notifying the IT department.

4. Install Anti Malware / Ransomware Software

Don’t assume you have the latest antivirus to protect against ransomware. Your security software should consist of antivirus, anti-malware, and anti-ransomware protection.

It is also crucial to regularly update your virus definitions.

5. Run Frequent Scheduled Security Scans

All the security software on your system does no good if you aren’t running scans on your computers and mobile devices regularly.