Achieving business continuity is a primary concern for modern organizations. Downtime can cause significant financial impact and, in some cases, irrecoverable data loss.

The solution to avoiding service disruption and unplanned downtime is employing a high availability architecture.

Because every business is highly dependent on the Internet, every minute counts. That is why company computers and servers must stay operational at all times.

Whether you choose to house your own IT infrastructure or opt for a hosted solution in a data center, high availability must be the first thing to consider when setting up your IT environment.

High Availability Definition

A highly available architecture involves multiple components working together to ensure uninterrupted service during a specific period. This also includes the response time to users’ requests. Namely, available systems have to be not only online, but also responsive.

Implementing a cloud computing architecture that enables this is key to ensuring the continuous operation of critical applications and services. They stay online and responsive even when various component failures occur or when a system is under high stress.

Highly available systems include the capability to recover from unexpected events in the shortest time possible. By moving the processes to backup components, these systems minimize downtime or eliminate it. This usually requires constant maintenance, monitoring, and initial in-depth tests to confirm that there are no weak points.

High availability environments include complex server clusters with system software for continuous monitoring of the system’s performance. The top priority is to avoid unplanned equipment downtime. If a piece of hardware fails, it must not cause a complete halt of service during the production time.

Staying operational without interruptions is especially crucial for large organizations. In such settings, a few minutes lost can lead to a loss of reputation, customers, and thousands of dollars. Highly available computer systems allow glitches as long as the level of usability does not impact business operations.

A highly available infrastructure has the following traits:

- Hardware redundancy

- Software and application redundancy

- Data redundancy

- The single points of failure eliminated

How To Calculate High Availability Uptime Percentage?

Availability is measured by how much time a specific system stays fully operational during a particular period, usually a year.

It is expressed as a percentage. Note that uptime does not necessarily have to mean the same as availability. A system may be up and running, but not available to the users. The reasons for this may be network or load balancing issues.

The uptime is usually expressed by using the grading with five 9’s of availability.

If you decide to go for a hosted solution, this will be defined in the Service Level Agreement (SLA). A grade of “one nine” means that the guaranteed availability is 90%. Today, most organizations and businesses require having at least “three nines,” i.e., 99.9% of availability.

Businesses have different availability needs. Those that need to remain operational around the clock throughout the year will aim for “five nines,” 99.999% of uptime. It may seem like 0.1% does not make that much of a difference. However, when you convert this to hours and minutes, the numbers are significant.

Refer to the table of nines to see the maximum downtime per year every grade involves:

| Availability Level | Maximum Downtime per Year | Downtime per Day |

| One Nine: 90% | 36.5 days | 2.4 hours |

| Two Nines: 99% | 3.65 days | 14 minutes |

| Three Nines: 99.9% | 8.76 hours | 86 seconds |

| Four Nines: 99.99% | 52.6 minutes | 8.6 seconds |

| Five Nines: 99.999% | 5.25 minutes | 0.86 seconds |

| Six Nines: 99.9999% | 31.5 seconds | 8.6 milliseconds |

As the table shows, the difference between 99% and 99.9% is substantial.

Note that it is measured in days per year, not hours or minutes. The higher you go on the scale of availability, the cost of the service will increase as well.

How to calculate downtime? It is essential to measure downtime for every component that may affect the proper functioning of a part of the system, or the entire system. Scheduled system maintenance must be a part of the availability measurements. Such planned downtimes also cause a halt to your business, so you should pay attention to that as well when setting up your IT environment.

As you can tell, 100% availability level does not appear in the table.

Simply put, no system is entirely failsafe. Additionally, the switch to backup components will take some period, be that milliseconds, minutes, or hours.

How to Achieve High Availability

Businesses looking to implement high availability solutions need to understand multiple components and requirements necessary for a system to qualify as highly available. To ensure business continuity and operability, critical applications and services need to be running around the clock. Best practices for achieving high availability involve certain conditions that need to be met. Here are 4 Steps to Achieving 99.999% Reliability and Uptime.

1. Eliminate Single Points of Failure High Availability vs. Redundancy

The critical element of high availability systems is eliminating single points of failure by achieving redundancy on all levels. No matter if there is a natural disaster, a hardware or power failure, IT infrastructures must have backup components to replace the failed system.

There are different levels of component redundancy. The most common of them are:

- The N+1 model includes the amount of the equipment (referred to as ‘N’) needed to keep the system up. It is operational with one independent backup component for each of the components in case a failure occurs. An example would be using an additional power supply for an application server, but this can be any other IT component. This model is usually active/passive. Backup components are on standby, waiting to take over when a failure happens. N+1 redundancy can also be active/active. In that case, backup components are working even when primary components function correctly. Note that the N+1 model is not an entirely redundant system.

- The N+2 model is similar to N+1. The difference is that the system would be able to withstand the failure of two same components. This should be enough to keep most organizations up and running in the high nines.

- The 2N model contains double the amount of every individual component necessary to run the system. The advantage of this model is that you do not have to take into consideration whether there was a failure of a single component or the whole system. You can move the operations entirely to the backup components.

- The 2N+1 model provides the same level of availability and redundancy as 2N with the addition of another component for improved protection.

The ultimate redundancy is achieved through geographic redundancy.

That is the only mechanism against natural disasters and other events of a complete outage. In this case, servers are distributed over multiple locations in different areas.

The sites should be placed in separate cities, countries, or even continents. That way, they are entirely independent. If a catastrophic failure happens in one location, another would be able to pick up and keep the business running.

This type of redundancy tends to be extremely costly. The wisest decision is to go for a hosted solution from one of the providers with data centers located around the globe.

Next to power outages, network failures represent one of the most common causes of business downtime.

For that reason, the network must be designed in such a way that it stays up 24/7/365. To achieve 100% network service uptime, there have to be alternate network paths. Each of them should have redundant enterprise-grade switches and routers.

2. Data Backup and recovery

Data safety is one of the biggest concerns for every business. A high availability system must have sound data protection and disaster recovery plans.

An absolute must is to have proper backups. Another critical thing is the ability to recover in case of a data loss quickly, corruption, or complete storage failure. If your business requires low RTOs and RPOs and you cannot afford to lose data, the best option to consider is using data replication. There are many backup plans to choose from, depending on your business size, requirements, and budget.

Data backup and replication go hand in hand with IT high availability. Both should be carefully planned. Creating full backups on a redundant infrastructure is vital for ensuring data resilience and must not be overlooked.

3. Automatic failover with Failure Detection

In a highly available, redundant IT infrastructure, the system needs to instantly redirect requests to a backup system in case of a failure. This is called failover. Early failure detections are essential for improving failover times and ensuring maximum systems availability.

One of the software solutions we recommend for high availability is Carbonite Availability. It is suitable for any infrastructure, whether it is virtual or physical.

For fast and flexible cloud-based infrastructure failover and failback, you can turn to Cloud Replication for Veeam. The failover process applies to either a whole system or any of its parts that may fail. Whenever a component fails or a web server stops responding, failover must be seamless and occur in real-time.

The process looks like this:

- There is Machine 1 with its clone Machine 2, usually referred to as Hot Spare.

- Machine 2 continually monitors the status of Machine 1 for any issues.

- Machine 1 encounters an issue. It fails or shuts down due to any number of reasons.

- Machine 2 automatically comes online. Every request is now routed to Machine 2 instead of Machine 1. This happens without any impact to end users. They are not even aware there are any issues with Machine 1.

- When the issue with the failed component is fixed, Machine 1 and Machine 2 resume their initial roles

The duration of the failover process depends on how complicated the system is. In many cases, it will take a couple of minutes. However, it can also take several hours.

Planning for high availability must be based on all these considerations to deliver the best results. Each system component needs to be in line with the ultimate goal of achieving 99.999 percent availability and improve failover times.

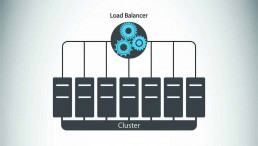

4. Load Balancing

A load balancer can be a hardware device or a software solution. Its purpose is to distribute applications or network traffic across multiple servers and components. The goal is to improve overall operational performance and reliability.

It optimizes the use of computing and network resources by efficiently managing loads and continuously monitoring the health of the backend servers.

How does a load balancer decide which server to select?

Many different methods can be used to distribute load across a server pool. Choosing the one for your workloads will depend on multiple factors. Some of them include the type of application that is served, the status of the network, and the status of the backend servers. A load balancer decides which algorithm to use according to the current amount of incoming requests.

Some of the most common load balancing algorithms are:

- Round Robin. With Round Robin, the load balancer directs requests to the first server in line. It will move down the list to the last one and then start from the beginning. This method is easy to implement, and it is widely used. However, it does not take into consideration if servers have different hardware configurations and if they can overload faster.

- Least Connection. In this case, the load balancer will select the server with the least number of active connections. When a request comes in, the load balancer will not assign a connection to the next server on the list, as is the case with Round Robin. Instead, it will look for one with the least current connections. Least connection method is especially useful to avoid overloading your web servers in cases where sessions last for a long time.

- Source IP hash. This algorithm will determine which server to select according to the source IP address of the request. The load balancer creates a unique hash key using the source and destination IP address. Such a key enables it always to direct a user’s request to the same server.

Load balancers indeed play a prominent role in achieving a highly available infrastructure. However, merely having a load balancer does not mean that you have a high system availability.

If a configuration with a load balancer only routes the traffic to decrease the load on a single machine, that does not make a system highly available.

By implementing redundancy for the load balancer itself, you can eliminate it as a single point of failure.

In Closing: Implement High Availability Architecture

No matter what size and type of business you run, any kind of service downtime can be costly without a cloud disaster recovery solution.

Even worse, it can bring permanent damage to your reputation. By applying a series of best practices listed above, you can reduce the risk of losing your data. You also minimize the possibilities of having production environment issues.

Your chances of being offline are higher without a high availability system.

From that perspective, the cost of downtime dramatically surpasses the costs of a well-designed IT infrastructure. In recent years, hosted and cloud computing solutions have become more popular than in-house solutions support. The main reason for this is the fact it reduces IT costs and adds more flexibility.

No matter which solution you go for, the benefits of a high availability system are numerous:

- You save money and time as there is no need to rebuild lost data due to storage or other system failures. In some cases, it is impossible to recover your data after an outage. That can have a disastrous impact on your business.

- Less downtime means less impact on users and clients. If your availability is measured in five nines, that means almost no service disruption. This leads to better productivity of your employees and guarantees customer satisfaction.

- The performance of your applications and services will be improved.

- You will avoid fines and penalties if you do not meet the contract SLAs due to a server issue.