How AI and Voice Technology are Changing Business

When the first version of Siri came out, it struggled to understand natural language: the patterns, expressions, colloquialisms, and accents that all had to be translated into computational algorithms.

Voice technology has improved extensively over the last few years. These changes are all thanks to the implementation of Artificial Intelligence (AI). AI has made voice technology much more adaptive and efficient.

This article focuses on the impact that AI and voice technology have on businesses enabling voice technology services.

AI and Voice Technology

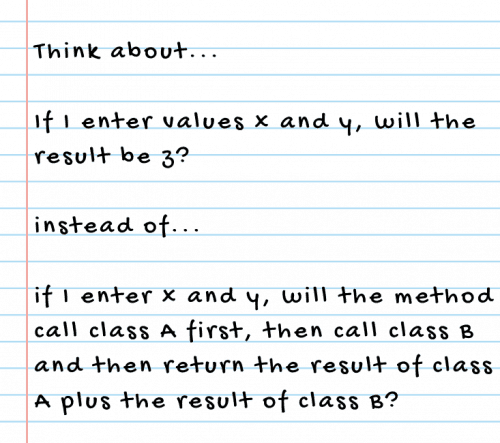

The human brain is complex. Despite this, there are limits to what it can do. For a programmer to think of all the possible eventualities is impractical at best. In traditional software development, developers instruct software on what function to execute and in which circumstances.

It’s a time-consuming and monotonous process. It is not uncommon for developers to make small mistakes that become noticeable bugs once a program is released.

With AI, developers instruct the software on how to function and learn. As the AI algorithm learns, it finds ways to make the process more efficient. Because AI can process data a lot faster than we can, it can come up with innovative solutions based on the previous examples that it accesses.

The revolution of voice tech powered by AI is dramatically changing the way many businesses work. AI, in essence, is little more than a smart algorithm. What makes it different from other algorithms is its ability to learn. We are now moving from a model of programming to teaching.

Traditionally, programmers write code to tell the algorithm how to behave from start to finish. Now programmers can dispense with tedious processes. All they need to do is to teach the program the tasks it needs to perform.

The Rise of AI and Voice Technology

Voice assistants can now do a lot more than just run searches. They can help you book appointments and flights, play music, take notes, and much more. Apple offers Siri, Microsoft has Cortana, Amazon uses Alexa, and Google created Google Assistant. With so many choices and usages, is it any wonder that 40% of us use voice tech daily?

They’re also now able to understand not only the question you’re asking but the general context. This ability allows voice tech to offer better results.

Before voice technology, communication with devices happened via typing or graphical interfaces. Now, sites and applications can harness the power of smart voice technologies to enhance their services in ways previously unimagined. It’s the reason voice-compatible products are on the rise.

Search engines have also had to keep up, because optimization used to target text-based search queries only. As voice assistant technology advances, that is starting to change. In 2019, Amazon sold over 100 million devices, including Echo and third-party gadgets, with Alexa built-in.

According to Google, 20% of all searches are voice, and by 2020 that number could rise to 50%. That means that, for businesses looking to grow, voice technology is one major area to consider, as global voice commerce is expected to be worth $40B by 2022.

How Voice Technology Works

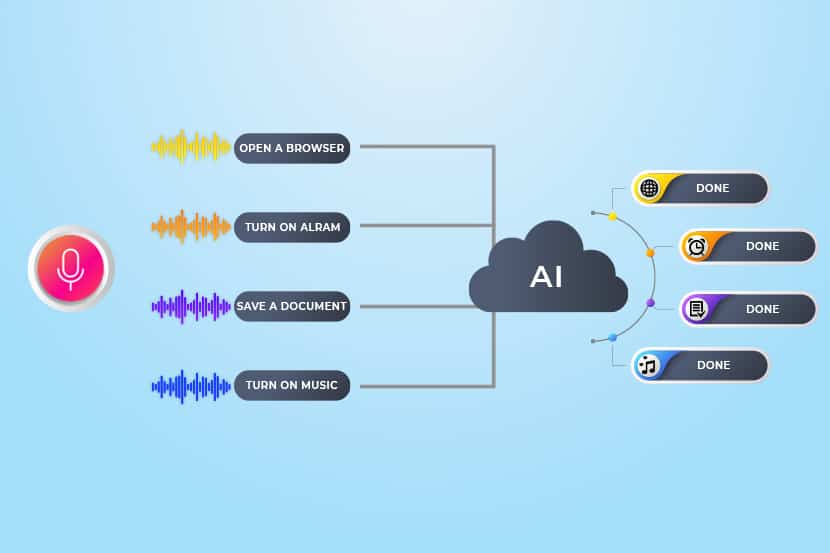

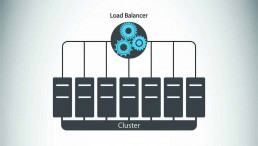

Voice technology requires two different interfaces. The first is between the end-user and the endpoint device in use. The second is between the endpoint device and the server.

It’s the server that contains the “personality” of your voice assistant. Be it a bare metal server or on the cloud, voice technology is powered by computational resources. It’s where all the AI’s background processes run, despite giving you the feeling that the voice assistant “lives” on your devices.

It seems logical, considering how fast your assistant answers your question. The truth is that your phone alone doesn’t have the required processing power or space to run the full AI program. That’s why your assistant is inaccessible when the internet is down.

How Does AI in Voice Technology Work?

Say, for example, that you want to search for more information on a particular country. You simply voice your request. Your request then relays to the server. That’s when AI takes over. It uses machine learning algorithms to run searches across millions of sites to find the precise information that you need.

To find the best possible information for you, the AI must also analyze each site very quickly. This rapid analysis enables it to determine whether or not the website pertains to the search query and how credible the information is.

If the site is deemed worthy, it shows up in search results. Otherwise, the AI discards it.

The AI goes one step further and watches how you react. Did you navigate off the site straight away? If so, the technology takes it as a sign that the site didn’t match the search term. When someone else uses similar search terms in the future, AI remembers that and refines its results.

Over time, as the AI learns more and more, it becomes more capable of producing accurate results. At the same time, the AI learns all about your preferences. Unless you say otherwise, it’ll focus on search results in the area or country where you live. It determines what music you like and what settings you prefer, and it makes recommendations. This intelligent programming allows that simple voice assistant to improve its performance every time you use it.
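The feedback loop described above can be illustrated with a toy sketch. It is not how any real assistant ranks results: the `FeedbackRanker` class, the halving penalty, and the example sites are all assumptions made purely for illustration.

```python
from collections import defaultdict


class FeedbackRanker:
    """Toy ranker: sites that users leave immediately lose score for that query."""

    def __init__(self, penalty: float = 0.5):
        self.penalty = penalty  # hypothetical factor applied after a bounce
        # (query, site) -> learned weight, starting at 1.0
        self.weights = defaultdict(lambda: 1.0)

    def rank(self, query: str, candidates: dict) -> list:
        """Order candidate sites by base relevance times the learned weight."""
        return sorted(
            candidates,
            key=lambda site: candidates[site] * self.weights[(query, site)],
            reverse=True,
        )

    def record_visit(self, query: str, site: str, bounced: bool) -> None:
        """Lower the weight of a site the user navigated away from at once."""
        if bounced:
            self.weights[(query, site)] *= self.penalty


ranker = FeedbackRanker()
# Hypothetical base relevance scores for two made-up sites
candidates = {"site-a.example": 0.9, "site-b.example": 0.8}

first = ranker.rank("capital of france", candidates)
ranker.record_visit("capital of france", first[0], bounced=True)
second = ranker.rank("capital of france", candidates)
```

In this sketch, the site that was abandoned drops below the other one the next time the same query is ranked, which is the kind of refinement the paragraph describes.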

Servers Power Artificial Intelligence

Connectivity issues, the program’s speed, and the ability of servers to manage all this information are all concerns of voice technology providers.

Companies that offer these services need to run enterprise-level servers. The servers must be capable of storing large amounts of data and processing it at high speed. The alternative is cloud computing hosted off-premises by third-party providers, which reduces overhead costs and increases the growth potential of your services and applications.

Alexa and Siri are complex programs, but why would they need so much space on a server? After all, they’re individual programs; how much space could they need? That’s where it becomes tricky.

According to Statista, in 2019, there were 3.25 billion active virtual assistant users globally. Forecasts say that the number will be 8 billion by the end of 2023.

The assistant adapts to the needs of each user. That essentially means that it has to adjust to a possible 3.25 billion permutations of the underlying system. The algorithm learns as it goes, so all that information must pass through the servers.

Each user expects their personal settings to be stored. So, the servers must accommodate the new information while also keeping the old.

This ever-growing capacity is why popular providers run large server farms. This is where the on-premise versus cloud computing debate takes on greater meaning.

Takeaway

Without the computational advances made in AI, voice technology would not be possible. The permutations in the data alone would be too much for humans to handle.

Artificial intelligence is redefining tech apps with voice technologies in a variety of businesses. Voice technology is very compatible with AI and will keep improving as machine learning grows.

The incorporation of voice technology using AI in the cloud can provide fast processing and improve businesses dramatically. Businesses can have voice assistants that handle customer care and simultaneously learn from those interactions, teaching themselves how to serve your clients better.

How to Leverage Object Storage with Veeam Backup Office 365

Introduction

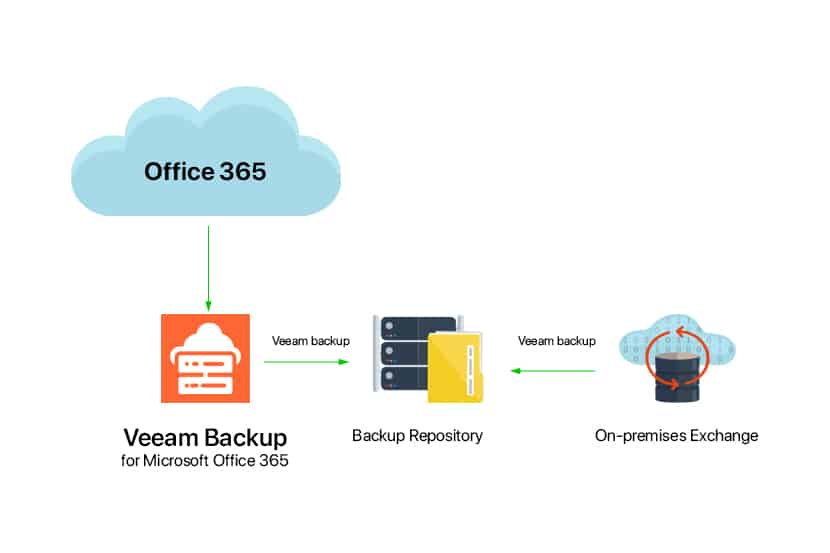

phoenixNAP Managed Backup for Microsoft Office 365 solution powered by Veeam has gained popularity amongst Managed Service Providers and Office 365 administrators in recent years.

Following the publication of our KB article, How To Install & Configure Veeam Backup For Office 365, we wanted to shed light on how one can leverage Object Storage as a target to offload bulk Office 365 backup data. Object Storage support has been introduced in the recent release of Veeam Backup for Office 365 v4 as of November 2019. It has significantly increased the product’s ability to offload backup data to cloud providers.

Unlike other Office 365 backup products, VBO offers the flexibility to be deployed in different scenarios: on-premises, as a hybrid cloud solution, or as a cloud service. phoenixNAP has now made it easier for Office 365 Tenants to leverage Object Storage, and for MSPs to increase margins as part of their Managed Backup service offerings. Its simple deployment, lower storage cost, and ability to scale infinitely have made Veeam Backup for Office 365 a top performer amongst its peers.

In this article, we will discuss the importance of backing up Office 365, briefly explain the Object Storage architecture, and present the steps required to configure Object Storage as a backup repository for Veeam Backup for Office 365.

You may have different considerations in the way the product should be configured. Nonetheless, this blog will focus on leveraging Object Storage as a backup target for Office 365 data. Since Veeam Backup for Office 365 can be hosted in many ways, this blog will remain deployment-neutral as the process required to add Object Storage target repository is common to all deployment models.

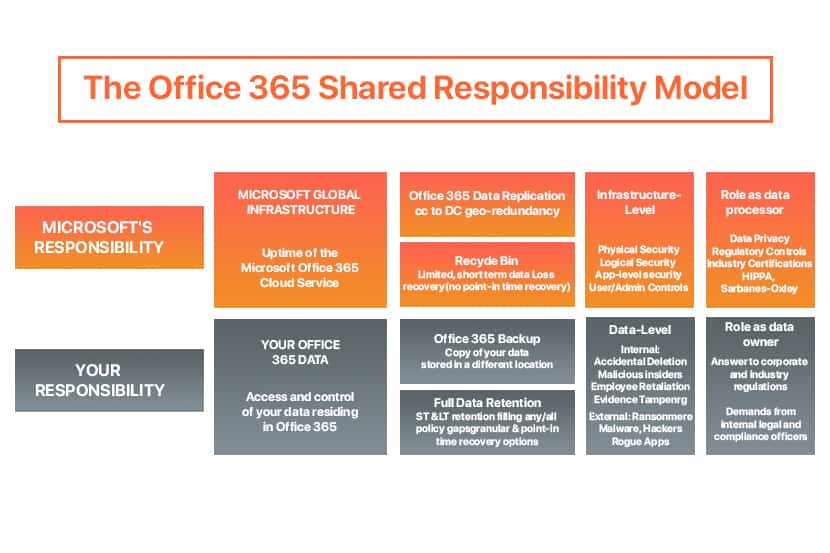

Why Should We Backup Office 365?

A misconception that frequently surfaces when Office 365 backup is mentioned is that, since Office 365 data resides in the Microsoft cloud, it is already taken care of. To some extent it is: Microsoft goes a long way to keep the service highly available and provides some data retention capabilities. However, Microsoft makes it clear that, under the Shared Responsibility Model and the GDPR, the data owner/controller remains responsible for Office 365 data. And even if Microsoft did take full responsibility, would you really want to put all your eggs in one basket?

Office 365 is not limited to email communication through Exchange Online. It is also the service behind SharePoint Online, OneDrive, and Teams, which organizations commonly use to store important corporate data, collaborate, and support their distributed remote workforce. At phoenixNAP, we’re here to help you get the most out of Veeam Backup for Office 365 and assist you in:

- Recovering from accidental deletion

- Overcoming retention policy gaps

- Fighting internal and external security threats

- Meeting legal and compliance requirements

This further solidifies our reason why you should also opt for Veeam Backup for Office 365 and leverage phoenixNAP Object Storage to secure and maintain a solid DRaaS as part of your Data Protection Plan.

Object Storage

What is object storage?

Object Storage is another type of data storage architecture that is best used to store a significant amount of unstructured data. Whereas File Storage data is stored in a hierarchical way to retain the original structure but is complex to scale and expensive to maintain, Object Storage stores data as objects typically made up of the data itself, a variable amount of metadata and unique identifiers which makes it a smart and cost-effective way to store data.
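To make the object model above more concrete, here is a minimal sketch of an object as data plus metadata plus a unique identifier, kept in a flat namespace. The `StoredObject` and `FlatObjectStore` names are hypothetical and exist only for this illustration; they do not reflect how any particular provider or Veeam product implements object storage.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    """One object: the data itself, free-form metadata, and a unique identifier."""
    data: bytes
    metadata: dict = field(default_factory=dict)
    object_id: str = field(default_factory=lambda: str(uuid.uuid4()))


class FlatObjectStore:
    """Flat namespace: objects are looked up by identifier, not by folder path."""

    def __init__(self):
        self._objects = {}

    def put(self, data: bytes, **metadata) -> str:
        """Store the data with its metadata and return the generated identifier."""
        obj = StoredObject(data=data, metadata=metadata)
        self._objects[obj.object_id] = obj
        return obj.object_id

    def get(self, object_id: str) -> StoredObject:
        return self._objects[object_id]

    def find(self, **criteria) -> list:
        """Return ids of objects whose metadata matches every given key/value."""
        return [
            oid for oid, obj in self._objects.items()
            if all(obj.metadata.get(k) == v for k, v in criteria.items())
        ]


store = FlatObjectStore()
oid = store.put(b"mailbox export", owner="alice", kind="mailbox")
store.put(b"site export", owner="bob", kind="sharepoint")
matches = store.find(kind="mailbox")  # lookup by metadata, no directory tree involved
```

Because there is no hierarchy to maintain, adding more objects only grows the flat index, which is one way to picture why this layout scales more easily than a file tree.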

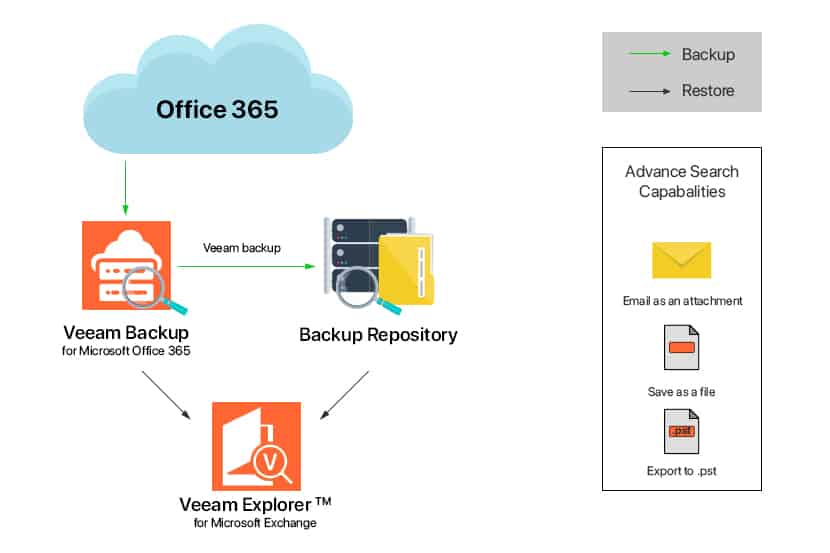

Cache helps reduce costs by cutting down on expensive operations, especially when reading data from and writing data to object storage repositories. With the help of the cache, Veeam Explorer can open backups in Object Storage and use metadata to obtain the structure of the backup data objects. This allows the end-user to navigate through backup data without downloading any of it from Object Storage. Large chunks of data are first compressed and then saved to Object Storage. This process is handled by the Backup Proxy server and allows for a smarter way to store data. When using object storage, metadata and cache both reside locally, while backup data is transferred to and stored in Object Storage.

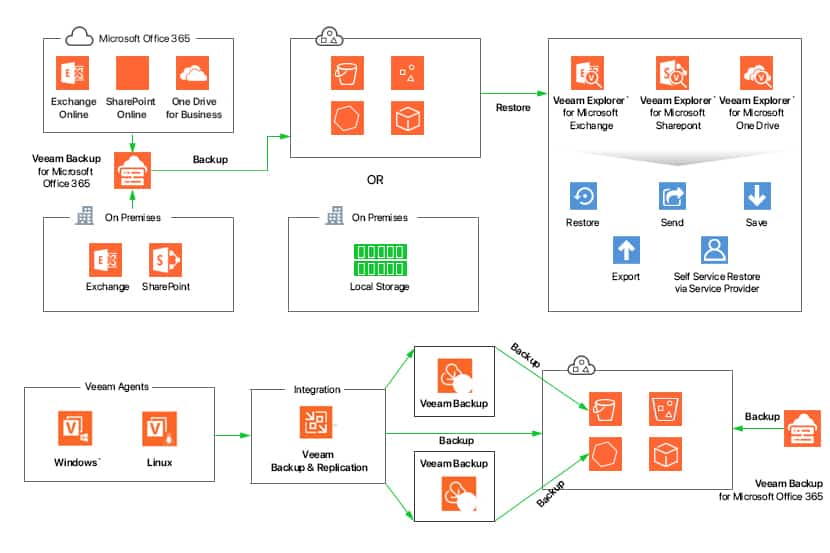

In this article, we’ll be speaking on how Object Storage is used as a target for VBO Backups, but one must point out that as explained in the picture below, other Veeam products are also able to interface with Object Storage as a backup repository.

Why should we consider using it?

With the right infrastructure and continuous upkeep, Office 365 administrators and MSPs can design an on-premises Object Storage repository to directly store or offload O365 backup data as needed. To fully benefit from it, however, Object Storage in the cloud is the ideal destination for Office 365 backups due to its simpler deployment, unlimited scalability, and lower costs:

- Simple Deployment

As shown further down in this article, only a few steps are required to set up an Object Storage repository in the cloud. With a few prerequisites and proper planning, one can have this repository up and running in no time by following a simple wizard to create an Object Storage repository and present it as a backup repository.

- Easily Scalable

While the ability to scale and design VBO server roles as needed is already a great benefit, the ability to offload to Object Storage at a cloud provider makes backup data growth easier to handle and highly redundant.

- Lower Cost Capabilities

An object-based architecture is the most effective way for organizations to store large amounts of data. Since it uses a flat architecture, it consumes disk space more efficiently and so keeps costs relatively low, without the overhead of traditional file architectures. Additionally, with the help of retention policies and storage limits, VBO provides good ways to keep costs under control.

Veeam Backup for Microsoft Office 365 is licensed per user account and supports a variety of licensing options such as Subscription or Rental based licenses. In order to use Object Storage as a backup target, a storage account from a cloud service provider is required but other than that, feel free to start using it!

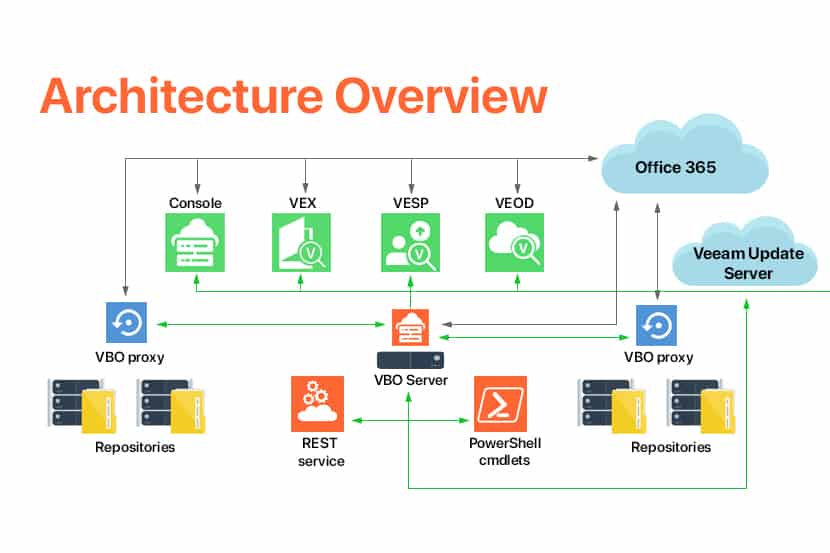

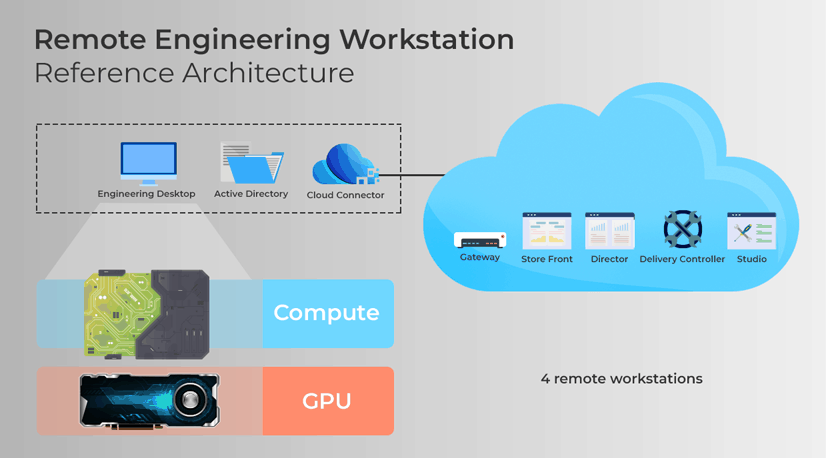

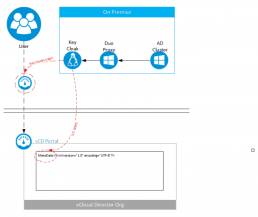

VBO Deployment Models

For the benefit of this article, we won’t be digging in too much detail on the various deployment models that exist for VBO, but we believe that you ought to know about the various models that exist when opting for VBO.

VBO can run on-premises, private cloud, and public cloud environments. O365 tenants have the flexibility to choose from different designs based on their current requirements and host VBO wherever they deem right. In any scenario, a local primary backup repository is required as this will be the direct storage repository for backups. Object Storage can then be leveraged to offload bulk backup data to a cheaper and safer storage solution provided by a cloud service provider like phoenixNAP to further achieve disaster recovery objectives and data protection.

In some instances, it might be required to run and store VBO in different infrastructures for full disaster recovery (DR) purposes. Both O365 tenants and MSPs are able to leverage the power of the cloud by collaborating with a VCSP like phoenixNAP to provide them the ability to host and store VBO into a completely different infrastructure while providing self-service restore capabilities to end-users. For MSPs, this is a great way to increase revenue by offering managed backup service plans for clients.

The prerequisites and how these components work for each environment are very similar, hence for the benefit of this article the following Object Storage configuration is generally the same for each type of deployment.

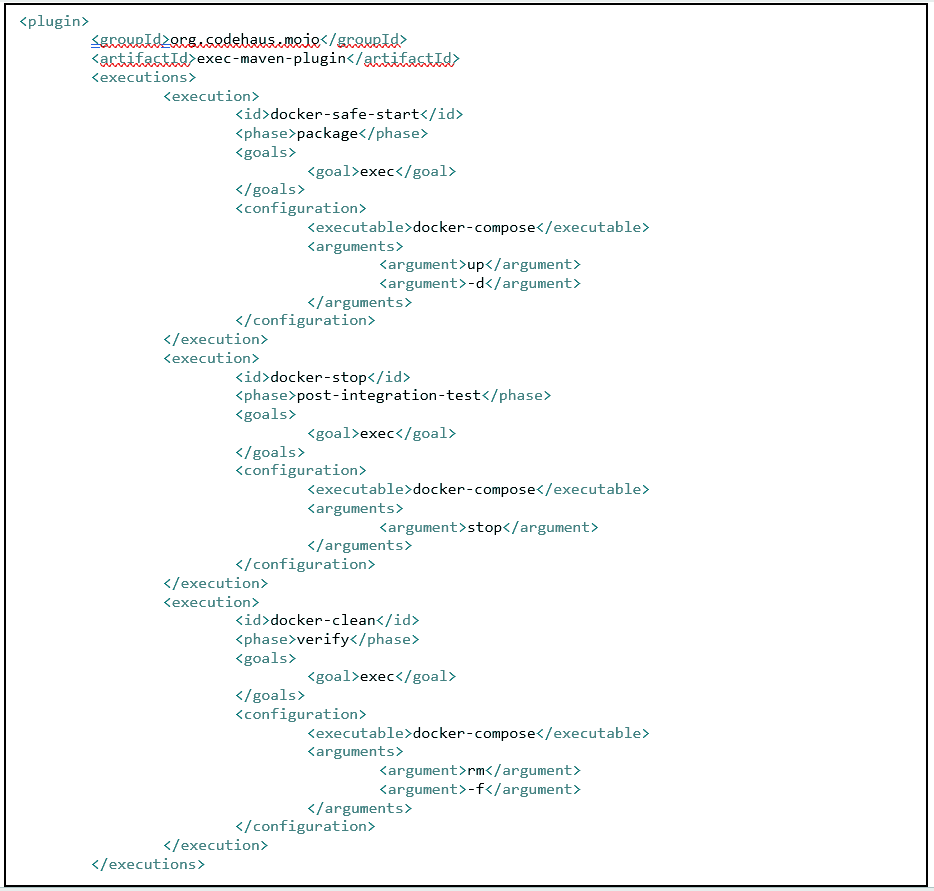

Configuring Object Storage in Veeam Backup for Office 365

As explained in the previous section, although there are different ways on how one can deploy VBO, the procedure to configure and set up Object Storage repository is quite similar in any case, hence no specific attention will be given to a particular deployment model during the following configuration walk-through.

This section assumes that the initial configuration listed below has already been completed:

- Defined Policy-based settings and retention requirements according to Data Protection Plan and Service Costs

- Object Storage cloud account details and credentials in hand

- Office 365 prerequisite configurations to connect with VBO

- Hosted and Deployed VBO

- Installed and Licensed VBO

- Created an Organization in VBO

With these in place, you are in a position to complete the remaining steps, which are covered in the following sections:

- Adding S3 Compatible Object Storage Repository*

- Adding Local Backup Repository

- Secure Object Storage

- Restore Backup Data

* When opting for Object Storage, it is a suggested best practice to set up the S3 Object Storage configuration in advance. This will come in handy when you are asked for the Object Storage repository option while adding the Local Backup Repository.

Adding S3 Compatible Object Storage Repository

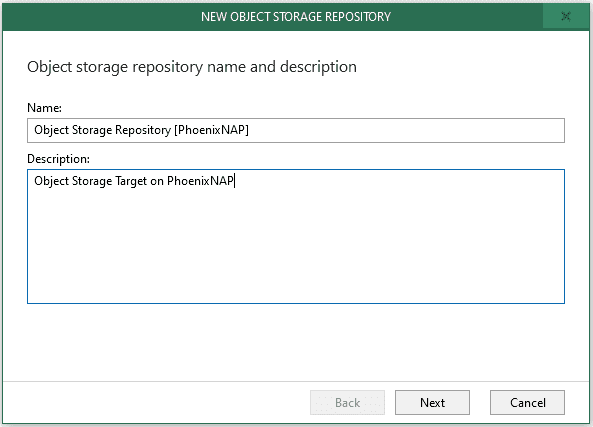

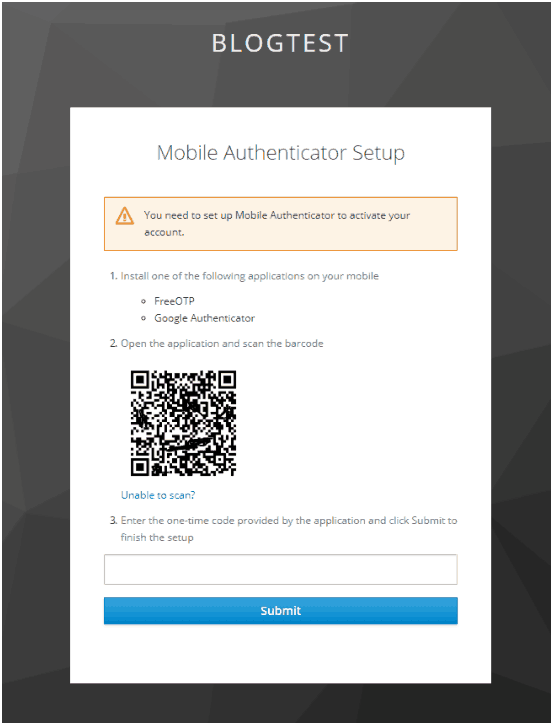

Step 1. Launch New Object Storage Repository Wizard

Right-click Object Storage Repositories, select Add object storage.

Step 2. Specify Object Storage Repository Name

Enter a Name for the Object Storage Repository and optionally a Description. Click Next.

Step 3. Select Object Storage Type

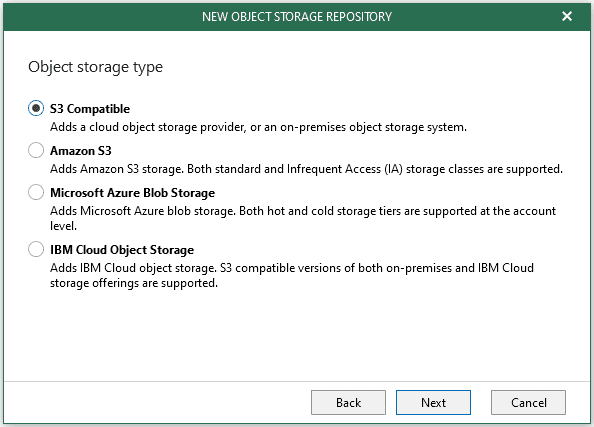

On the new Object storage type page, select S3 Compatible (phoenixNAP compatible). Click Next.

Step 4. Specify Object Storage Service Point and Account

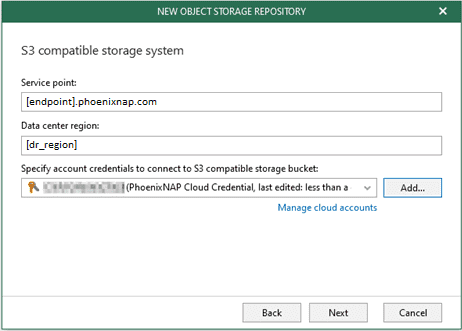

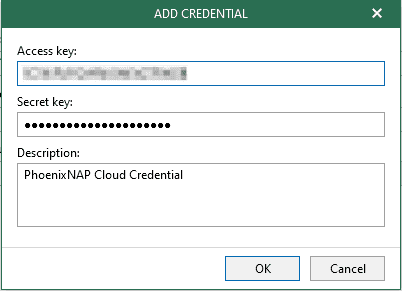

Specify the Service Point and the Datacenter region. Click Add to specify the credentials to connect with your cloud account.

If you already have a credentials record that was configured beforehand, select the record from the drop-down list. Otherwise, click Add and provide your access and secret keys, as described in Adding S3-Compatible Access Key. You can also click Manage cloud accounts to manage existing credentials records.

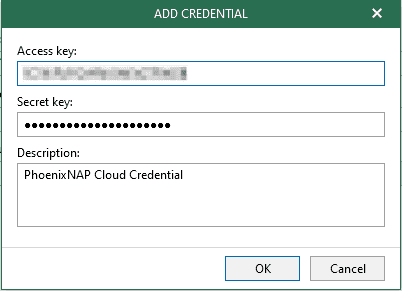

Enter the Access key, the Secret key, and a Description. Click OK to confirm.

Step 5. Specify Object Storage Bucket

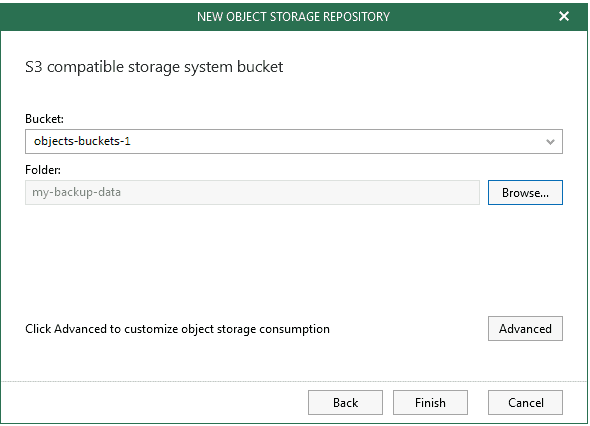

Finalize by selecting the Bucket to use and click Browse to specify the folder to store the backups. Click New folder to create a new folder and click OK to confirm.

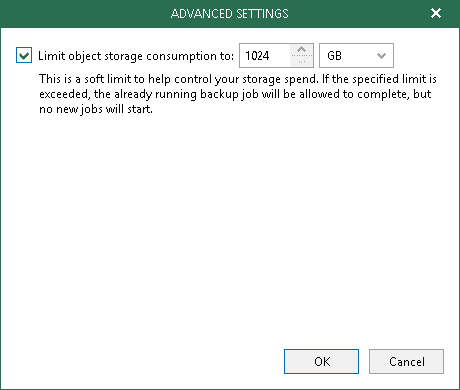

Clicking Advanced lets you specify the storage consumption soft limit to keep costs under control. This will be the global retention storage policy for Object Storage. As a best practice, this consumption value should be lower than the Object Storage repository amount you’re entitled to from the cloud provider in order to leave room for additional service data.

Click OK followed by Finish.
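As a rough aid for choosing the soft limit mentioned above, the arithmetic can be sketched as follows. The `soft_limit_gb` helper and the 10% headroom are assumptions for illustration only; the article does not prescribe a specific margin, so pick one that suits your provider plan.

```python
def soft_limit_gb(entitled_gb: int, headroom_percent: int = 10) -> float:
    """Return a consumption soft limit that leaves headroom below the entitlement.

    headroom_percent is a hypothetical margin reserved for additional service data.
    """
    if not 0 < headroom_percent < 100:
        raise ValueError("headroom_percent must be between 0 and 100")
    return entitled_gb * (100 - headroom_percent) / 100


# Example: a 1000 GB entitlement with 10% kept free for service data
limit = soft_limit_gb(1000, headroom_percent=10)
```

The resulting value is what you would enter as the soft limit in the Advanced dialog, staying below what the cloud provider actually grants.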

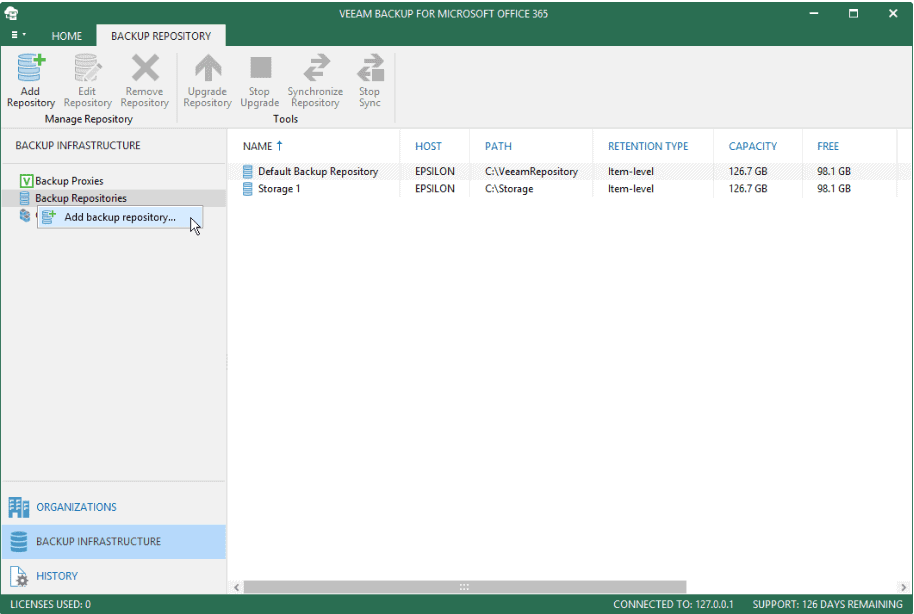

Adding Local Backup Repository

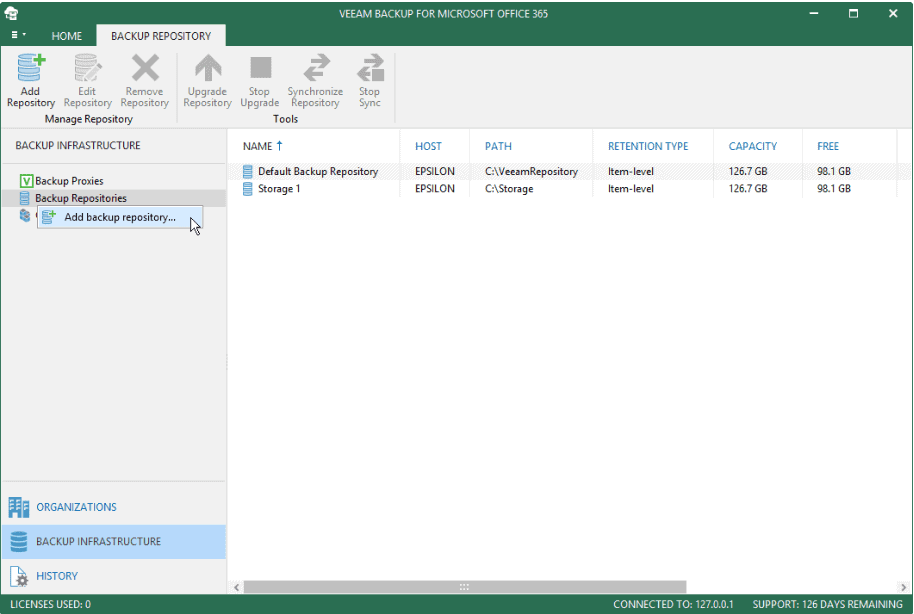

Step 1. Launch New Backup Repository Wizard

Open the Backup Infrastructure view.

In the inventory pane, select the Backup Repositories node.

On the Backup Repository tab, click Add Repository on the ribbon.

Alternatively, in the inventory pane, right-click the Backup Repositories node and select Add backup repository.

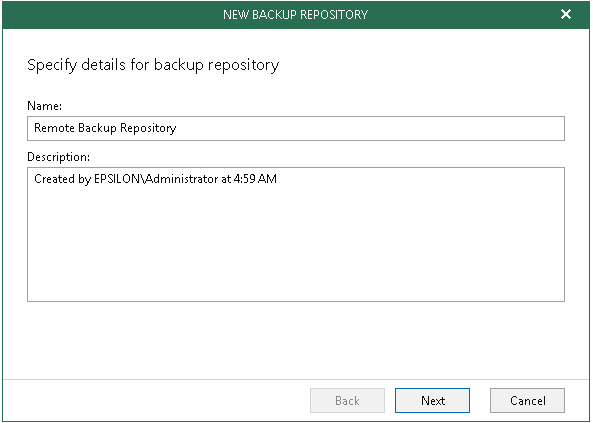

Step 2. Specify Backup Repository Name

Specify Backup Repository Name and Description then click Next.

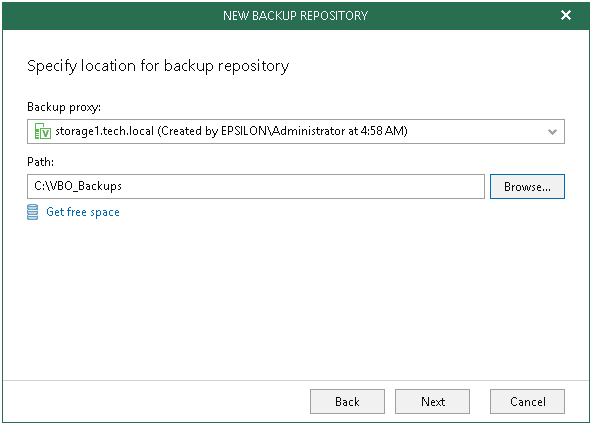

Step 3. Specify Backup Proxy Server

When planning to extend a backup repository with object storage, this directory will only include a cache consisting of metadata. The actual data will be compressed and backed up directly to object storage that you specify in the next step.

Specify the Backup Proxy to use and the Path to the location to store the backups. Click Next.

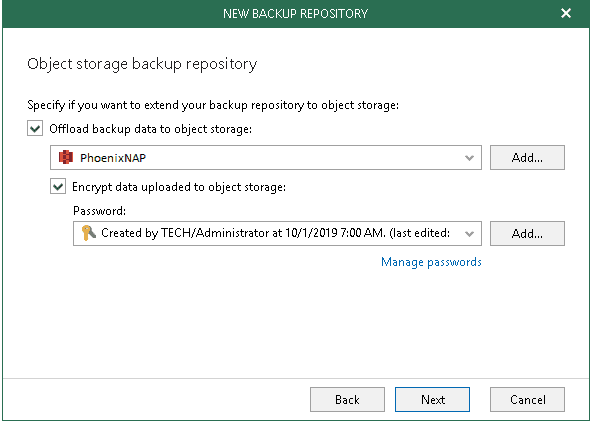

Step 4. Specify Object Storage Repository

At this step of the wizard, you can optionally extend a backup repository with object storage to back up data directly to the cloud.

To extend a backup repository with object storage, do the following:

- Select the Offload backup data to the object storage checkbox.

- In the drop-down list, select an object storage repository to which you want to offload your data.

Make sure that an object storage repository has been added to your environment in advance. Otherwise, click Add and follow the steps of the wizard, as described in Adding Object Storage Repositories.

- To offload data in encrypted form, select Encrypt data uploaded to object storage and provide a password.

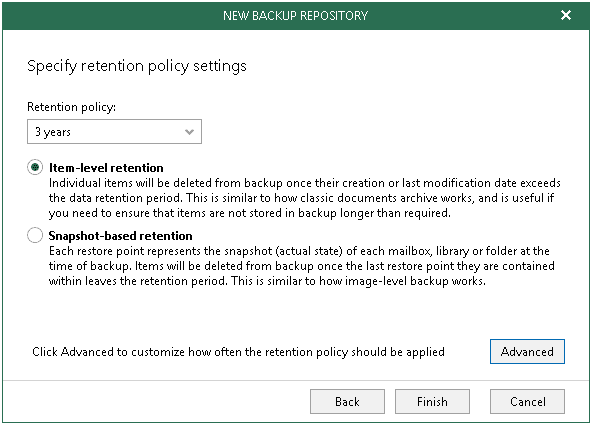

Step 5. Specify Retention Policy Settings

At this step of the wizard, specify retention policy settings.

Depending on how retention policies are configured, VBO automatically removes obsolete restore points from Object Storage. A service task calculates the age of offloaded restore points. When that age exceeds the specified retention period, the task purges the obsolete restore points from Object Storage.

- In the Retention policy drop-down list, specify how long your data should be stored in a backup repository.

- Choose a retention type:

- Item-level retention.

Select this type if you want to keep an item as long as its creation time or last modification time is within the retention coverage.

- Snapshot-based retention.

Select this type if you want to keep an item as long as its latest restore point is within the retention coverage.

- Click Advanced to specify when to apply a retention policy. You can select to apply it on a daily basis, or monthly. For more information, see Configuring Advanced Settings.

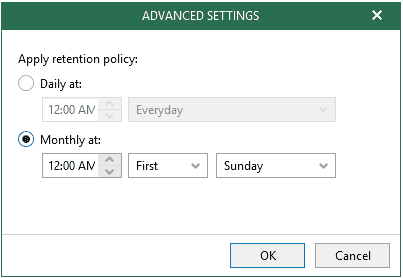
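The difference between the two retention types can be sketched in a few lines. This is a simplified model of the rules as described above, not VBO’s actual implementation; the `BackupItem` class and both function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class BackupItem:
    last_modified: datetime         # creation or last modification time of the item
    latest_restore_point: datetime  # most recent backup that contains the item


def keep_item_level(item: BackupItem, now: datetime, retention: timedelta) -> bool:
    """Item-level: keep while the item's own timestamp is within the retention period."""
    return now - item.last_modified <= retention


def keep_snapshot_based(item: BackupItem, now: datetime, retention: timedelta) -> bool:
    """Snapshot-based: keep while the latest restore point is within the retention period."""
    return now - item.latest_restore_point <= retention


now = datetime(2020, 6, 1)
retention = timedelta(days=365)

# An old email that has not changed in years but was backed up yesterday
old_email = BackupItem(
    last_modified=datetime(2018, 1, 15),
    latest_restore_point=datetime(2020, 5, 31),
)

item_level_keeps = keep_item_level(old_email, now, retention)
snapshot_keeps = keep_snapshot_based(old_email, now, retention)
```

Under these assumptions, the same item is dropped by item-level retention but kept by snapshot-based retention, which is worth keeping in mind when estimating storage consumption.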

Configuring Advanced Settings

After you click Advanced, the Advanced Settings dialog appears in which you can select either of the following options:

- Daily at:

Select this option if you want a retention policy to be applied on a daily basis and choose the time and day.

- Monthly at:

Select this option if you want a retention policy to be applied on a monthly basis and choose the time and day, which can be the first, second, third, fourth or even the last one in the month.
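To see which calendar date a monthly setting such as "first Monday" or "last Friday" resolves to, a small helper can be used. This sketch assumes the monthly option refers to the n-th occurrence of a weekday in the month; the `nth_weekday` function is hypothetical and is not part of VBO.

```python
import calendar
from datetime import date


def nth_weekday(year: int, month: int, weekday: int, occurrence: int) -> date:
    """Return the date of the n-th given weekday in a month.

    weekday uses the calendar constants (calendar.MONDAY is 0);
    occurrence is 1 to 4, or -1 for the last one in the month.
    """
    days = [
        d for d in calendar.Calendar().itermonthdates(year, month)
        if d.month == month and d.weekday() == weekday
    ]
    return days[-1] if occurrence == -1 else days[occurrence - 1]


first_monday = nth_weekday(2020, 6, calendar.MONDAY, 1)
last_monday = nth_weekday(2020, 6, calendar.MONDAY, -1)
```

Checking a few upcoming months this way can help confirm that the retention job will not collide with other scheduled maintenance.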

Securing Object Storage

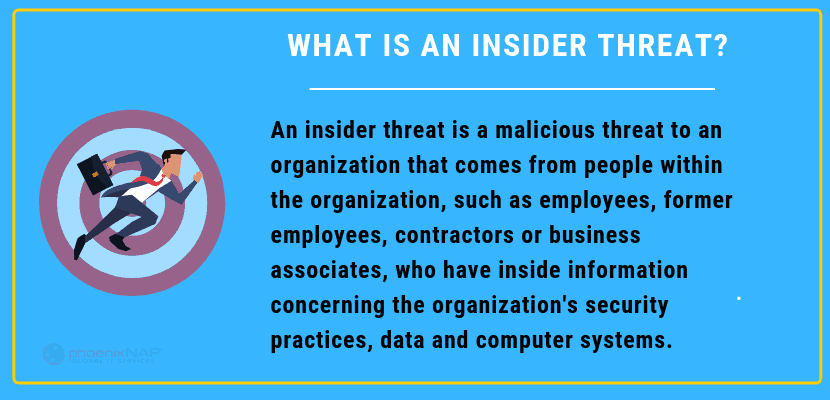

To ensure Backup Data is kept safe and secure from any possible vulnerabilities, one must make sure to secure the backup application itself, and its communication channels. Veeam has made this possible by continuously implementing key security measures to address and mitigate any possible threats while providing us with some great security functionalities to interface with Object Storage.

VBO v4 provides the same level of protection for your data regardless of the deployment model used. Communications between VBO components are always encrypted, and all communication between Microsoft Office 365 and VBO is encrypted by default. When using object storage, data can be protected with optional encryption at rest.

VBO v4 also introduces a Cloud Credential Manager which lets us create and maintain a solid list of credentials provided by any of the Cloud Service Providers. These records allow us to connect with the Object Storage provider to store and offload backup data. Credentials will consist of access and secret keys and work with any S3-Compatible Object Storage.

Password Manager lets us manage encryption passwords with ease. One can create passwords to protect encryption keys that are used to encrypt data being transferred to object storage repositories. To encrypt data, VBO uses the AES-256 specification.
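For readers wondering how a password relates to a 256-bit key, the general idea can be sketched with Python’s standard library. This is only an illustration of password-based key derivation: the article does not describe how VBO derives or protects its keys, and the function name, salt size, and iteration count below are assumptions.

```python
import hashlib
import os


def derive_aes256_key(password: str, salt: bytes, iterations: int = 200_000) -> bytes:
    """Stretch a password into a 32-byte (256-bit) key using PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations, dklen=32
    )


salt = os.urandom(16)  # random salt; it must be stored to derive the same key again
key = derive_aes256_key("example-passphrase", salt)
```

The practical takeaway is the same as in the product: whoever holds the password can recover the key, so the password itself needs to be stored and managed carefully.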

Restoring from Object Storage

Restoring backup data from Object Storage is just as easy as if you’re restoring from any traditional storage repositories. As explained earlier in this article, Veeam Explorer is the tool used to open and navigate through backups without the need to download any of it.

Veeam Explorer uses metadata to obtain the structure of the backup data objects. Once the backup data to restore has been identified, you may choose any of the available restore options as required. When leveraging Object Storage in the cloud, one can also host Veeam Explorer locally and use it to restore Office 365 backup data from the cloud.

Where Does phoenixNAP Come into Play?

- Pricing as low as $2 per mailbox

- Hosting S3-Compatible Object Storage

- Hosting Veeam Backup for Office 365 – Backup Proxy Server on our secure cloud platform

- VCSP information

For more information, please look at our product pages and use the form to request additional details, or send an e-mail to sales@phoenixnap.com.

Abbreviations Table

| Abbreviation | Meaning |
| --- | --- |
| DRaaS | Disaster Recovery as a Service |
| GDPR | General Data Protection Regulation |
| MSP | Managed Service Provider |
| O365 | Microsoft Office 365 |
| VBO | Veeam Backup for Office 365 |
| VCC | Veeam Cloud Connect |
| VCSP | Veeam Cloud & Service Provider |

Test Driven vs Behavior Driven Development: Key Differences

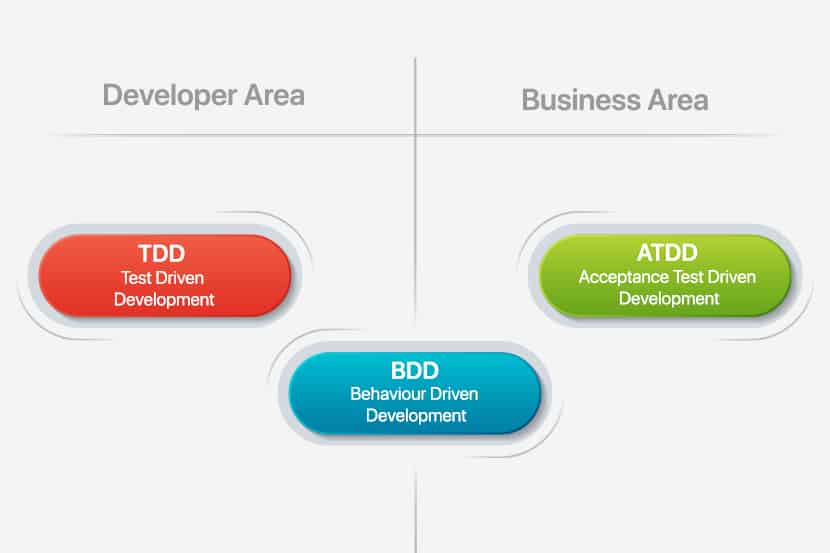

Test-driven development (TDD) and Behavior-driven development (BDD) are both test-first approaches to Software Development. They share common concepts and paradigms, rooted in the same philosophies. In this article, we will highlight the commonalities, differences, pros, and cons of both approaches.

What is Test-driven development (TDD)

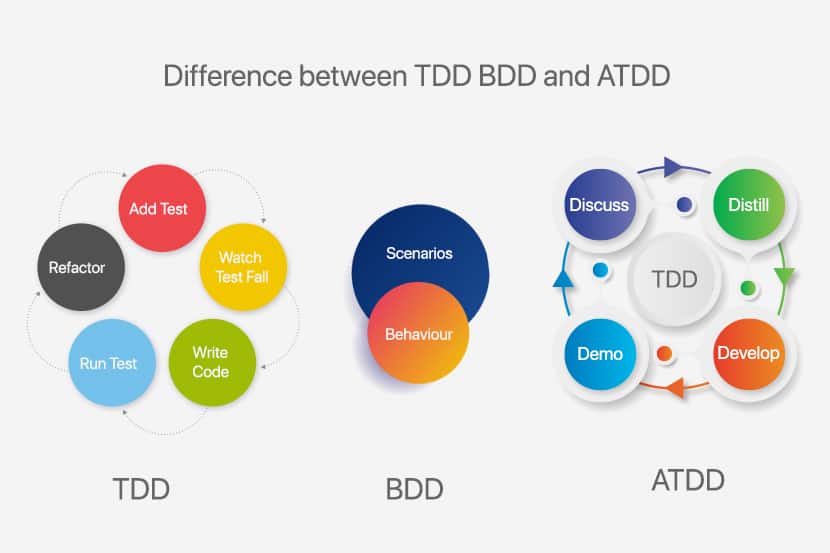

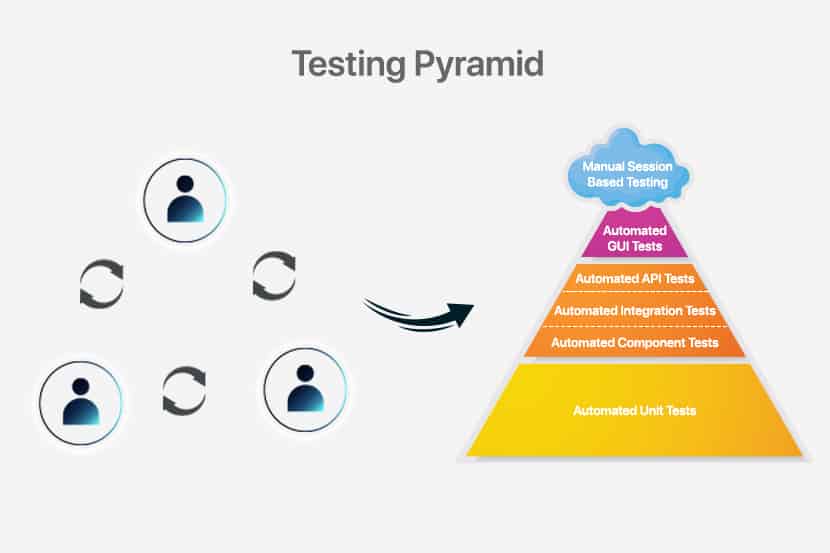

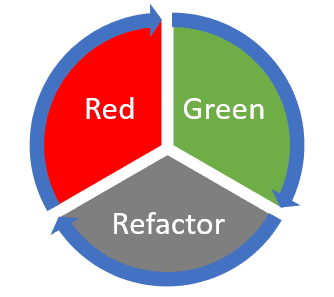

Test-driven development (TDD) is a software development process that relies on the repetition of a short development cycle: requirements turn into very specific test cases. The code is written to make the test pass. Finally, the code is refactored and improved to ensure code quality and eliminate any technical debt. This cycle is well-known as the Red-Green-Refactor cycle.

What is Behavior-driven development (BDD)

Behavior-driven development (BDD) is a software development process that encourages collaboration among all parties involved in a project’s delivery. It encourages the definition and formalization of a system’s behavior in a common language understood by all parties and uses this definition as the seed for a TDD-based process.
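As a small illustration, a behavior agreed on in plain language can be carried into a test using the Given/When/Then structure commonly used in BDD. The `Cart` class and the scenario are made up for this sketch, and the test is written in plain Python rather than with any particular BDD framework.

```python
class Cart:
    """Hypothetical system under test."""

    def __init__(self):
        self.items = {}

    def add(self, name: str, price: int) -> None:
        self.items[name] = price

    def total(self) -> int:
        return sum(self.items.values())


def test_customer_sees_total_for_items_in_cart():
    # Given a customer with an empty cart
    cart = Cart()
    # When they add a book costing 12 and a pen costing 3
    cart.add("book", 12)
    cart.add("pen", 3)
    # Then the cart total is 15
    assert cart.total() == 15
```

The comments mirror the sentence the whole team agreed on, so a non-technical reader can still follow what the test verifies.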

Key Differences Between TDD and BDD

| | TDD | BDD |
| --- | --- | --- |
| Focus | Delivery of a functional feature | Delivering on expected system behavior |
| Approach | Bottom-up or Top-down (Acceptance-Test-Driven Development) | Top-down |
| Starting Point | A test case | A user story/scenario |
| Participants | Technical Team | All Team Members including Client |
| Language | Programming Language | Lingua Franca |
| Process | Lean, Iterative | Lean, Iterative |
| Delivers | A functioning system that meets our test criteria | A system that behaves as expected and a test suite that describes the system’s behavior in common human language |
| Avoids | Over-engineering, low test coverage, and low-value tests | Deviation from intended system behavior |
| Brittleness | Change in implementation can result in changes to test suite | Test suite only needs to change if the system behavior is required to change |
| Difficulty of Implementation | Relatively simple for Bottom-up, more difficult for Top-down | Bigger learning curve for all parties involved |

Test-Driven Development (TDD)

In TDD, we have the well-known Red-Green-Refactor cycle. We start with a failing test (red) and implement as little code as necessary to make it pass (green). This process is also known as Test-First Development. TDD also adds a Refactor stage, which is equally important to overall success.

The TDD approach was discovered (or perhaps rediscovered) by Kent Beck, one of the pioneers of Unit Testing and later TDD, Agile Software Development, and eventually Extreme Programming.

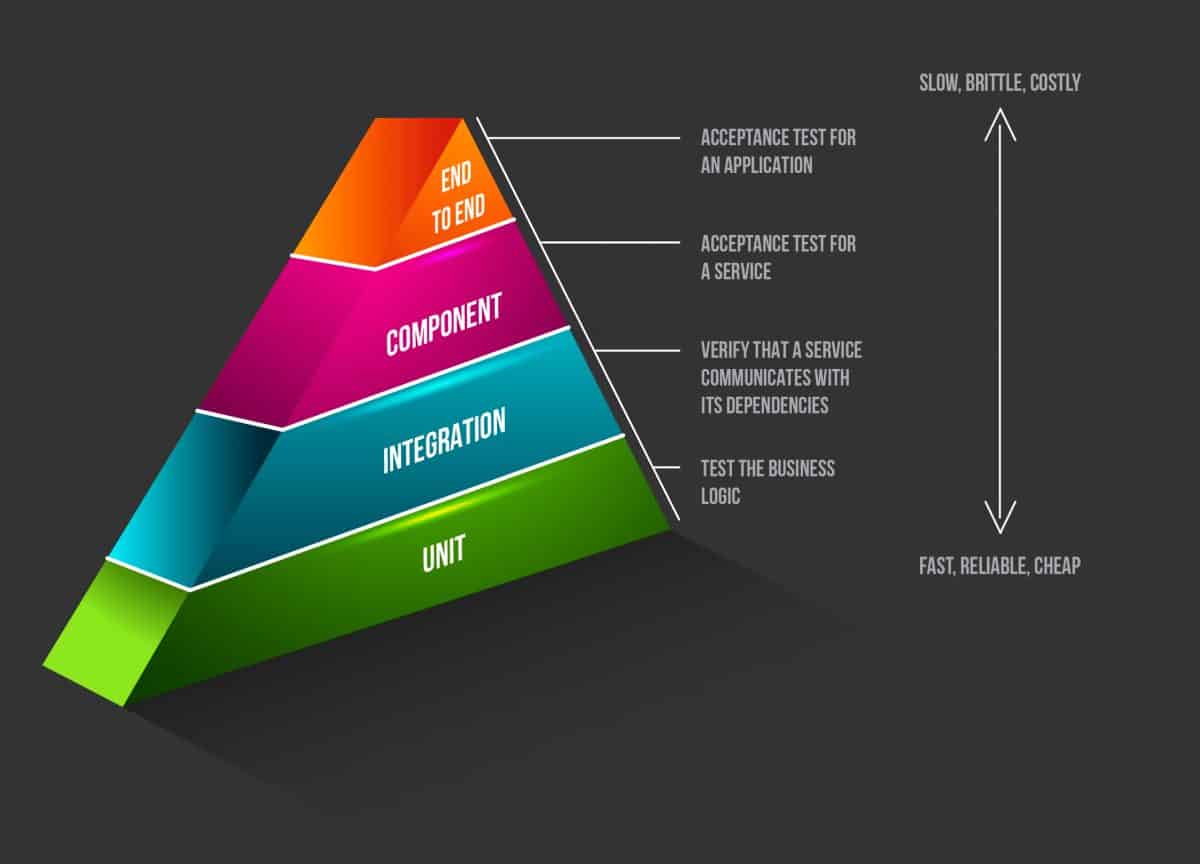

The diagram below does an excellent job of giving an easily digestible overview of the process. However, the beauty is in the details. Before delving into each individual stage, we must also discuss two high-level approaches towards TDD, namely bottom-up and top-down TDD.

Bottom-Up TDD

The idea behind Bottom-Up TDD, also known as Inside-Out TDD, is to build functionality iteratively, focusing on one entity at a time, solidifying its behavior before moving on to other entities and other layers.

We start by writing unit-level tests and proceed with their implementation. We then move on to writing higher-level tests that aggregate the functionalities of the lower-level tests, implement what each aggregate test requires, and so on. By building up layer by layer, we eventually reach a stage where the aggregate test is an acceptance-level test, one that hopefully falls in line with the requested functionality. This makes it a highly developer-centric approach, mainly intended to make the developer’s life easier.
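A compact sketch of this layering might look as follows. The pricing functions are invented for the example, and the tests are written as plain functions in the style that pytest collects; in practice each test would be written before the function it covers.

```python
def parse_amount(text: str) -> int:
    """Lowest-level entity: turn a price such as '12.50' into cents."""
    units, _, cents = text.partition(".")
    return int(units) * 100 + int((cents + "00")[:2])


def apply_discount(cents: int, percent: int) -> int:
    """Second entity: apply an integer percentage discount."""
    return cents - cents * percent // 100


def checkout_total(prices: list, percent: int) -> int:
    """Higher-level entity built from the two entities above."""
    return apply_discount(sum(parse_amount(p) for p in prices), percent)


# Unit-level tests, one entity at a time
def test_parse_amount():
    assert parse_amount("12.50") == 1250
    assert parse_amount("3") == 300


def test_apply_discount():
    assert apply_discount(1000, 10) == 900


# Aggregate test, added once the lower layers are solid
def test_checkout_total():
    assert checkout_total(["12.50", "7.50"], 10) == 1800
```

Only the last test comes close to something a customer would recognize, which shows how late the integration view arrives in a bottom-up flow.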

| Pros | Cons |
| --- | --- |
| Focus is on one functional entity at a time | Delays integration stage |
| Functional entities are easy to identify | Amount of behavior an entity needs to expose is unclear |
| High-level vision not required to start | High risk of entities not interacting correctly with each other thus requiring refactors |
| Helps parallelization | Business logic possibly spread across multiple entities making it unclear and difficult to test |

Top-Down TDD

Top-Down TDD is also known as Outside-In TDD or Acceptance-Test-Driven Development (ATDD). It takes the opposite approach: we start by building the system as a whole, iteratively adding more detail to the implementation and breaking it down into smaller entities as refactoring opportunities become evident.

We start by writing an acceptance-level test and proceed with a minimal implementation. This also needs to be done incrementally: the creation of any new entity or method must be preceded by a test at the appropriate level. We thus iteratively refine the solution until it solves the problem that kicked off the whole exercise, that is, until the acceptance test passes.

This setup makes Top-Down TDD a more Business/Customer-centric approach. This approach is more challenging to get right as it relies heavily on good communication between the customer and the team. It also requires good citizenship from the developer as the next iterative step needs to come under careful consideration. This process will speed up over time but does have a learning curve. However, the benefits far outweigh any negatives. This approach results in the collaboration between customer and team taking center stage, a system with very well-defined behavior, clearly defined flows, focus on integrating first, and a very predictable workflow and outcome.
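The outside-in flow can be sketched with an acceptance-level test and a test double for a collaborator that has not been built yet. All names here (`Checkout`, `StubPriceService`, the SKUs and prices) are hypothetical and chosen only to illustrate the approach.

```python
class StubPriceService:
    """Test double standing in for a collaborator that does not exist yet."""

    def price_of(self, sku: str) -> int:
        return {"book": 1200, "pen": 300}[sku]


class Checkout:
    """Minimal implementation, just enough to satisfy the acceptance test."""

    def __init__(self, price_service):
        self._prices = price_service

    def total(self, skus: list) -> int:
        return sum(self._prices.price_of(sku) for sku in skus)


def test_customer_is_charged_for_everything_in_the_basket():
    # Acceptance-level test: written first, phrased from the customer's side
    checkout = Checkout(StubPriceService())
    assert checkout.total(["book", "pen"]) == 1500
```

The real price service would later get its own, lower-level tests before it replaces the stub, which is how the flow moves from acceptance tests toward unit tests.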

| Pros | Cons |
| --- | --- |
| Focus is on one user requested scenario at a time | Critical to get the Acceptance-Test right thus requiring collaborative discussion between business/user/customer and team |
| Flow is easy to identify | Relies on Stubbing, Mocking and/or Test Doubles |
| Focus is on integration rather than implementation details | Slower start as the flow is identified through multiple iterations |
| Amount of behavior an entity needs to expose is clear | More limited parallelization opportunities until a skeleton system starts to emerge |
| User Requirements, System Design and Implementation details are all clearly reflected in the test suite | |
| Predictable | |

The Red-Green-Refactor Life Cycle

Armed with the above-discussed high-level vision of how we can approach TDD, we are free to delve deeper into the three core stages of the Red-Green-Refactor flow.

Red

We start by writing a single test, execute it (thus having it fail) and only then move to the implementation of that test. Writing the correct test is crucial here, as is agreeing on the layer of testing that we are trying to achieve. Will this be an acceptance level test or a unit level test? This choice is the chief delineation between bottom-up and top-down TDD.

Green

During the Green-stage, we must create an implementation to make the test defined in the Red stage pass. The implementation should be the most minimal implementation possible, making the test pass and nothing more. Run the test and watch it pass.

Creating the most minimal implementation possible is often the challenge here as a developer may be inclined, through force of habit, to embellish the implementation right off the bat. This result is undesirable as it will create technical baggage that, over time, will make refactoring more expensive and potentially skew the system based on refactoring cost. By keeping each implementation step as small as possible, we further highlight the iterative nature of the process we are trying to implement. This feature is what will grant us agility.

Another key aspect is that the Red-stage, i.e., the tests, is what drives the Green-stage. There should be no implementation that is not driven by a very specific test. If we are following a bottom-up approach, this pretty much comes naturally. However, if we’re adopting a top-down approach, then we must be a bit more conscientious and make sure to create further tests as the implementation takes shape, thus moving from acceptance level tests to unit-level tests.
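The Red and Green stages can be sketched in a few lines. This is a minimal illustration only, using Python's built-in `unittest`; the `discount` function and its business rule are hypothetical and not part of the original discussion. The tests are written first and fail while `discount` does not exist (Red), and then the smallest implementation that satisfies them is added (Green).

```python
import unittest


# Green stage: the most minimal implementation that makes the tests below pass.
# Before this function existed, running the tests failed (the Red stage).
def discount(total):
    """Return the discount for an order total (hypothetical business rule)."""
    if total >= 100:
        return 10
    return 0


class DiscountTest(unittest.TestCase):
    # Red stage: these tests were written before discount() was implemented.
    def test_orders_of_100_or_more_get_a_discount_of_10(self):
        self.assertEqual(discount(100), 10)

    def test_smaller_orders_get_no_discount(self):
        self.assertEqual(discount(99), 0)


def run_suite():
    """Run the tests and report whether the suite is green."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(DiscountTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

Note that nothing in `discount` goes beyond what the two tests demand; any further rule would first need its own failing test.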

Refactor

The Refactor-stage is the third pillar of TDD. Here the objective is to revisit and improve on the implementation. The implementation is optimized, code quality is improved, and redundancy eliminated.

Refactoring can have a negative connotation for many, being perceived as a pure cost, fixing something improperly done the first time around. This perception originates in more traditional workflows where refactoring is primarily done only when necessary, typically when the amount of technical baggage reaches untenable levels, thus resulting in a lengthy, expensive, refactoring effort.

Here, however, refactoring is an intrinsic part of the workflow and is performed iteratively. This flexibility dramatically reduces the cost of refactoring. The code is not entirely reworked. Instead, it is slowly evolving. Moreover, the refactored code is, by definition, covered by a test. A test that has already passed in a previous iteration of the code. Thus, refactoring can be done with confidence, resulting in further speed-up. Moreover, this iterative approach to improvement of the codebase allows for emergent design, which drastically reduces the risk of over-engineering the problem.

It is of critical importance that behavior should not change, and we do not add extra functionality during the Refactor-stage. This process allows refactoring to be done with extreme confidence and agility as the relevant code is, by definition, already covered by a test.
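As a rough sketch of the Refactor stage, the example below keeps an earlier Green-stage function next to its refactored version and runs the same, unchanged test against both. The function names, the basket data, and the tax rule are all hypothetical and chosen only for illustration; amounts are integers (for example, cents) to keep the arithmetic exact.

```python
def order_total_before(items):
    """Green-stage version: passes the test, but repeats the subtotal logic."""
    total = 0
    for item in items:
        if item["kind"] == "book":
            total += item["price"] * item["qty"]
        else:
            total += item["price"] * item["qty"]
            total += item["price"] * item["qty"] * 20 // 100
    return total


# Refactor stage: same behavior, duplication removed, tax rule made explicit.
TAX_PERCENT = {"book": 0}   # hypothetical rule: books are untaxed
DEFAULT_TAX_PERCENT = 20    # hypothetical rule: everything else is taxed at 20%


def order_total(items):
    """Refactored version; no new functionality is added."""
    total = 0
    for item in items:
        subtotal = item["price"] * item["qty"]
        percent = TAX_PERCENT.get(item["kind"], DEFAULT_TAX_PERCENT)
        total += subtotal + subtotal * percent // 100
    return total


def check_order_total(total_fn):
    """The test from the earlier iteration, reused unchanged after the refactor."""
    basket = [
        {"kind": "book", "price": 1000, "qty": 2},
        {"kind": "toy", "price": 500, "qty": 2},
    ]
    assert total_fn([]) == 0
    assert total_fn(basket) == 3200
    return True
```

Because `check_order_total` passes for both versions, the refactor can be accepted with confidence that behavior has not changed.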

Behavior-Driven Development (BDD)

As previously discussed, TDD (or bottom-up TDD) is a developer-centric approach aimed at producing a better code-base and a better test suite. In contrast, ATDD is more Customer-centric and aimed at producing a better solution overall. We can consider Behavior-Driven Development as the next logical progression from ATDD. Dan North’s experiences with TDD and ATDD led him to propose the BDD concept, which aims to bring together the best aspects of TDD and ATDD while eliminating the pain points he identified in the two approaches. What he identified was that it was helpful to have descriptive test names and that testing behavior was much more valuable than functional testing.

Dan North does a great job of succinctly describing BDD as “Using examples at multiple levels to create shared understanding and surface certainty to deliver software that matters.”

Some key points here:

- What we care about is the system’s behavior

- It is much more valuable to test behavior than to test the specific functional implementation details

- Use a common language/notation to develop a shared understanding of the expected and existing behavior across domain experts, developers, testers, stakeholders, etc.

- We achieve Surface Certainty when everyone can understand the behavior of the system, what has already been implemented, and what is being implemented, and the system is guaranteed to satisfy the described behaviors

BDD puts the onus even more on the fruitful collaboration between the customer and the team. It becomes even more critical to define the system’s behavior correctly, thus resulting in the correct behavioral tests. A common pitfall here is to make assumptions about how the system will go about implementing a behavior. This mistake results in a test that is tainted with implementation detail, making it a functional test rather than a true behavioral test. This error is something we want to avoid.

The value of a behavioral test is that it tests the system. It does not care about how the system achieves its results. This means that a behavioral test should not change over time unless the behavior itself needs to change as part of a feature request. This is a significant cost benefit over functional tests, which are often so tightly coupled with the implementation that a refactor of the code involves a refactor of the test as well.

However, the more substantial benefit is the retention of Surface Certainty. In a functional test, a code-refactor may also require a test-refactor, inevitably resulting in a loss of confidence. Should the test fail, we are not sure what the cause might be: the code, the test, or both. Even if the test passes, we cannot be confident that the previous behavior has been retained. All we know is that the test matches the implementation. This result is of low value because, ultimately, what the customer cares about is the behavior of the system. Thus, it is the behavior of the system that we need to test and guarantee.
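The difference between the two kinds of test can be illustrated with a small sketch. The `Cart` class and both tests below are hypothetical. The assumption is that `Cart` originally kept its contents in a private list called `_items` and was later refactored to use dictionaries, without any change in behavior.

```python
class Cart:
    """Hypothetical cart, shown after an internal refactor from a list to dicts."""

    def __init__(self):
        self._prices = {}
        self._quantities = {}

    def add(self, name, price, qty=1):
        self._prices[name] = price
        self._quantities[name] = self._quantities.get(name, 0) + qty

    def total(self):
        return sum(self._prices[name] * qty for name, qty in self._quantities.items())


def behavioral_test():
    """Checks only observable behavior, so it survives the internal refactor."""
    cart = Cart()
    cart.add("pen", 2, qty=3)
    cart.add("pad", 5)
    return cart.total() == 11


def implementation_coupled_test():
    """Written against the earlier list-based internals (assumed `_items`).

    It reaches into a private attribute, so it fails after the refactor
    even though the cart still behaves exactly as before.
    """
    cart = Cart()
    cart.add("pen", 2, qty=3)
    try:
        return cart._items == [("pen", 2, 3)]
    except AttributeError:
        return False
```

After the refactor, the behavioral test still passes while the implementation-coupled test fails and has to be rewritten, at which point it no longer tells us whether the original behavior was retained.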

A BDD-based approach should result in full test coverage where the behavioral tests fully describe the system’s behavior to all parties using a common language. Contrast this with functional testing, where even full coverage gives no guarantee that the system satisfies the customer’s needs, and where the risk and cost of refactoring the test suite itself only increase with more coverage. Of course, leveraging both by working top-down from behavioral tests to more functional tests gives the Surface Certainty benefits of behavioral testing plus the developer-focused benefits of functional testing, while curbing the cost and risk of functional tests since they are only used where appropriate.

In comparing TDD and BDD directly, the main changes are that:

- The decision of what to test is simplified; we need to test the behavior

- We leverage a common language which short-circuits another layer of communication and streamlines the effort; the user stories as defined by the stakeholders are the test cases

An ecosystem of frameworks and tools emerged to allow for common-language-based collaboration across teams, as well as the integration and execution of such behavior as tests by leveraging industry-standard tooling. Examples include Cucumber, JBehave, and FitNesse, to name a few.
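To give a feel for how a user story can double as a test case, here is a minimal sketch in plain Python. It does not use any of the frameworks named above; it only mimics the Given/When/Then phrasing that tools such as Cucumber use, written here as comments. The `Account` class, the exception, and the stories themselves are hypothetical.

```python
class InsufficientFunds(Exception):
    """Raised when a withdrawal exceeds the available balance."""


class Account:
    """Hypothetical account used only to illustrate the test style."""

    def __init__(self, balance):
        self.balance = balance

    def withdraw(self, amount):
        if amount > self.balance:
            raise InsufficientFunds("withdrawal exceeds balance")
        self.balance -= amount


def test_account_holder_withdraws_cash_within_balance():
    # Given an account with a balance of 100
    account = Account(balance=100)
    # When the account holder withdraws 30
    account.withdraw(30)
    # Then the remaining balance is 70
    assert account.balance == 70


def test_withdrawal_exceeding_balance_is_refused():
    # Given an account with a balance of 20
    account = Account(balance=20)
    # When the account holder tries to withdraw 50
    try:
        account.withdraw(50)
        refused = False
    except InsufficientFunds:
        refused = True
    # Then the withdrawal is refused and the balance is unchanged
    assert refused
    assert account.balance == 20
```

The test names and comments read as statements about behavior that a stakeholder can follow, while nothing in them depends on how `Account` is implemented.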

The Right Tool for the Job

As we have seen, TDD and BDD are not really in direct competition with each other. Consider BDD as a further evolution of TDD and ATDD, which brings more of a Customer-focus and further emphasizes communication between the customer and the Technical team at all stages of the process. The result of this is a system that behaves as expected by all parties involved, together with a test suite describing the entirety of the system’s many behaviors in a human-readable fashion that everyone has access to and can easily understand. This system, in turn, provides a very high level of confidence in not only the implemented system but in future changes, refactors, and maintenance of the system.

At the same time, BDD is based heavily on the TDD process, with a few key changes. While the customer or particular members of the team may primarily be involved with the top-most level of the system, other team members like developers and QA engineers would organically shift from a BDD to a TDD model as they work their way in a top-down fashion.

We expect the following key benefits:

- Bringing pain forward

- Onus on collaboration between customer and team

- A common language shared between customer and team, leading to shared understanding

- Imposes a lean, iterative process

- Guarantee the delivery of software that not only works but works as defined

- Avoid over-engineering through emergent design, thus achieving desired results via the most minimal solution possible

- Surface Certainty allows for fast and confident code refactors

- Tests have innate value, versus creating tests simply to meet an arbitrary code coverage threshold

- Tests are living documentation that fully describes the behavior of the system

There are also scenarios where BDD might not be a suitable option. There are situations where the system in question is very technical and perhaps is not customer-facing at all. This makes the requirements more tightly bound to the functionality than to behavior, making TDD a possibly better fit.

Adopting TDD or BDD?

Ultimately, the question should not be whether to adopt TDD or BDD, but which approach is best for the task at hand. Quite often, the answer to that question will be both. As more people are involved in more significant projects, it will become self-evident that both approaches are needed at different levels and at various times throughout the project’s lifecycle. TDD will give structure and confidence to the technical team, while BDD will facilitate and emphasize communication between all involved parties, ultimately delivering a product that meets the customer’s expectations and offering the Surface Certainty required to ensure confidence in further evolving the product in the future.

As is often the case, there is no magic bullet here. What we have instead is a couple of very valid approaches. Knowledge of both will allow teams to determine the best method based on the needs of the project. Further experience and fluidity of execution will enable the team to use all the tools in its toolbox as the need arises throughout the project’s lifecycle, thus achieving the best possible business outcome. To find out how this applies to your business, talk to one of our experts today.

Why Carrier-Neutral Data Centers are Key to Reduce WAN Costs

Every year, the telecom industry invests hundreds of billions in network expansion, a figure expected to rise by 2%-4% in 2020. The outcome is predictable: bandwidth prices keep falling.

As Telegeography reported, several factors accelerated this phenomenon in recent years. Major cloud providers like Google, Amazon, Microsoft, and Facebook have altered the industry by building their own massive global fiber capacity while scaling back their purchases from telecom carriers. These companies have simultaneously driven global fiber supply up and demand down. Technology advances, like 100 Gbps bit rates, have also contributed to the persistent erosion of costs.

The result is bandwidth prices that have never been lower. And the advent of Software-Defined Wide Area Networking (SD-WAN) makes it simpler than ever to prioritize traffic between costly private networks and cheaper Internet bandwidth.

This period should be the best of times for enterprise network architects, but not necessarily.

Many factors conspire against buyers who seek to lower costs for the corporate WAN, including:

- Telecom contracts that are typically long-term and inflexible

- Competition that is often limited to a handful of major carriers

- Few choices for local access and Internet at corporate locations

- The tremendous effort required to change providers, meaning incumbents have all the leverage

The largest telcos, companies like AT&T and Verizon, become trapped by their high prices. Protecting their revenue base makes these companies reluctant adopters of SD-WAN and Internet-based solutions.

So how can organizations drive down spending on the corporate WAN, while boosting performance?

As in most markets, the essential answer is: Competition.

The most competitive marketplaces for telecom services in the world are Carrier-Neutral Data Centers (CNDCs). Think about all the choices: long-haul networks, local access, Internet providers, storage, compute, SaaS, etc. CNDCs offer a wide array of networking options, and the carriers realize that competitors are just a cross-connect away.

How much savings are available? Enough to make it worthwhile for many large regional, national, and global companies. In one report, Forrester interviewed customers of Equinix, the largest retail colocation company, and found that they saved an average of 40% on bandwidth costs and reduced cloud connectivity and network traffic costs by 60%-70%.

The key is to leverage CNDCs as regional network hubs, rather than the traditional model of hubbing connectivity out of internal corporate data centers.

CNDCs like to remind the market that they offer much more than racks and power as these sites can offer performance benefits as well. Internet connectivity is often superior, and many CNDCs offer private cloud gateways that improve latency and security.

But the cost savings alone should be enough to justify most deployments. To see how you can benefit, contact one of our experts today.

Extend Your Development Workstation with Vagrant & Ansible

The mention of Vagrant in the title might have led you to believe that this is yet another article about the power of sharing application environments as one does with code, or about how Vagrant is a great facilitator for that approach. However, plenty of content exists on that topic, and by now its benefits are widely known. Instead, we will describe our experience in putting Vagrant to use in a somewhat unusual way.

A Novel Idea

The idea is to extend a developer workstation running Windows to support running a Linux kernel in a VM and to make the bridge between the two as seamless as possible. Our motivation was to eliminate certain pain points or restrictions in development that are brought about by the choice of OS for the developer’s local workstation, be it a requirement at an organizational level, regulatory enforcement, or anything else that might or might not be under the developer’s control.

This approach is not the only one we evaluated: we also considered shifting work entirely to a guest OS on a VM, using Docker containers, and leveraging Cygwin. And yes, the possibility of replacing the host OS was also raised. However, we found that the way technologies came together in this approach can be quite powerful.

We’ll take this opportunity to communicate some of the lessons learned and limitations of the approach and share some ideas of how certain problems can be solved.

Why Vagrant?

The problem that we were trying to solve and the concept of how we tried to do it does not necessarily depend on Vagrant. In fact, the idea was based on having a virtual machine (VM) deployed on a local hypervisor. Running the VM locally might seem dubious at first thought. However, as we found out, this gives us certain advantages that allow us to create a better experience for the developer by creating an extension to the workstation.

We opted to go for VirtualBox as a virtualization provider primarily because of our familiarity with the tool and this is where Vagrant comes into play. Vagrant is one of the tools that make up the open-source HashiCorp Suite, which is aimed at solving the different challenges in automating infrastructure provisioning.

In particular, Vagrant is concerned with managing VM environments in the development phase. (Note that for production environments, other tools in the same suite are more suitable for the job, specifically Terraform and Packer.) Vagrant is based on configuration as code. This implies that an environment can be easily shared between team members, and changes are version controlled and can be tracked easily, making the resultant product (the environment) consistently repeatable. Vagrant is opinionated, and therefore declaring an environment and its configuration becomes concise, which makes it easy to write and understand.

Why Ansible?

After settling on using Vagrant for our solution and enjoying the automated production of the VM, the next step was to find a way to provision that VM in a way that fits the principles advertised by Vagrant.

We do not recommend having Vagrant spinning up the VMs in an environment and then manually installing and configuring the dependencies for your system. In Vagrant, provisioners are core and there are plenty from which you can choose. In our case, as long as our provisioning remained simple we stuck with using Shell (Vagrant simply uploads scripts to the guest OS and executes them).

Soon after, it became obvious that this approach would not scale well, and the scripts were becoming too verbose. The biggest pain point was that developers needed to write the scripts in a way that favored idempotency, because steps are commonly added to the configuration and it would be overkill to re-provision everything from scratch each time.
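To make the idempotency concern concrete, here is a minimal sketch of what an idempotent provisioning step looks like. It is written in Python purely for illustration (our own scripts were shell scripts), and the `ensure_line` helper is hypothetical. The point is that running the step a second time detects that the work is already done and changes nothing.

```python
from pathlib import Path


def ensure_line(path, line):
    """Make sure `line` is present in the file at `path`.

    Returns True if the file had to be changed and False if the line was
    already there, so the step can be re-run safely any number of times.
    """
    file = Path(path)
    text = file.read_text() if file.exists() else ""
    if line in text.splitlines():
        return False  # already provisioned, nothing to do
    # Add a newline first if the existing content does not end with one.
    separator = "" if text == "" or text.endswith("\n") else "\n"
    file.write_text(text + separator + line + "\n")
    return True
```

A naive script that simply appends the line on every run would accumulate duplicates, which is exactly the kind of detail each hand-written provisioning step has to guard against.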

At this point, we decided to use Ansible. Ansible by Red Hat is another open-source automation tool, built around the idea of managing the execution of plays using a playbook, where a play can be thought of as a list of tasks mapped against a group of hosts in an environment.

These plays should ideally be idempotent, which is not always possible. Again, the entire configuration one would write is declared as code, in YAML. The biggest win achieved with this strategy is that the heavy lifting is done by the community, which provides Ansible modules (configurable Python scripts that perform specific tasks) for virtually anything one might want to do. Installing dependencies and configuring the guest according to industry standards becomes very easy and concise, without requiring the developer to go into the nitty-gritty details, since modules are in general highly opinionated. All of these concepts combine perfectly with the principles of Vagrant, and the integration between the two works like a charm.

There was one major challenge to overcome in setting up the two to work together. Our host machine runs Windows, and although Ansible is adding more support for managing Windows targets with time, it simply does not run from a Windows control machine. This leaves us with two options: having a further environment which can act as the Ansible controller or the simpler approach of having the guest VM running Ansible to provision itself.

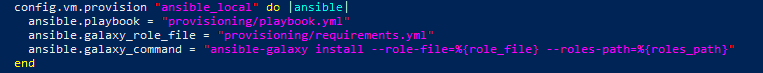

The drawback of this approach is that one would be polluting the target environment. We were willing to compromise on this as the alternative was cumbersome. Vagrant allows you to achieve this by simply changing the provisioner identifier from `ansible` to `ansible_local`; Vagrant then automatically installs the required Ansible binaries and dependencies on the guest for you to use.

File Sharing

One of the cornerstones we wanted to achieve was the possibility to make the local workspace available from within the guest OS, so that the tooling that makes up a working environment is readily available to run builds inside the guest. The options for solving this problem are plenty, and they vary depending on the use case. The simplest approach is to rely on VirtualBox’s file-sharing functionality, which gives near-instant, two-way syncing, and setting it up is a one-liner in the Vagrantfile.

The main objective here was to share code repositories with the guest. It can also come in handy to replicate configuration for some of the other tools. For instance, one might find it useful to configure file sharing for Maven’s user settings file, the entire local repository, local certificates for authentication, etc.

Port Forwarding

VirtualBox’s networking options were a powerful ally for us. There are a number of options for creating private networks (when you have more than one VM) or exposing the VM on the same network as the host. It was sufficient for us to rely on a host-only network (i.e., the VM is reachable only from the host) and then have a number of ports configured for forwarding through simple NAT.

The major benefit of this is that you do not need to keep changing configuration for software, whether it is executing locally or inside the guest. All of this can be achieved in Vagrant by writing one line of configuration code. This NATting can be configured in either direction (host to guest or guest to host).

Bringing it together

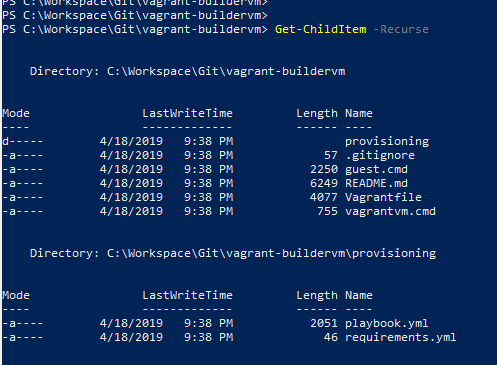

Having defined the foundation for our solution, let’s now briefly go through what we needed to implement all of this. You will see that for the most part, it requires minimal configuration to reach our target.

The first part of the puzzle is the Vagrantfile, in which we define the base image for the guest OS (we went with CentOS 7), the resources we want to allocate (memory, vCPUs, storage), file shares, networking details, and provisioning.

Note that the Vagrant plugin `vagrant-vbguest` was useful to automatically determine the appropriate version of VirtualBox’s Guest Additions binaries for the specified guest OS and install them. We also opted to configure Vagrant to prefer using the binaries that are bundled within itself for functionality such as SSH (`VAGRANT_PREFER_SYSTEM_BIN` set to 0) rather than rely on the software already installed on the host. We found that this allowed for a simpler and more repeatable setup process.

The second major part of the work was integrating Ansible to provision the VM. For this we opted to leverage Vagrant’s ansible_local that works by installing Ansible in the guest on the fly and running provisioning locally.

Now, all that is required is to provide an Ansible playbook.yml file and here one would define any particular configuration or software that needs to be set up on the guest OS.

We went a step further and leveraged third-party Ansible roles instead of reinventing the wheel and having to deal with the development and ongoing maintenance costs.

Ansible Galaxy is an online repository of such roles that are made available by the community, and you install them by means of the `ansible-galaxy` command.

Since Vagrant abstracts away the installation and invocation of Ansible, we need to rely on Vagrant to make sure that these roles are installed and made available when executing the playbook. This is achieved through the `galaxy_command` parameter. The most elegant way to do this is to provide a requirements.yml file with the list of roles needed and have it passed to the `ansible-galaxy` command. Finally, we need to make sure that the Ansible files are made available to the guest OS through a file share (by default the directory of the Vagrantfile is shared) and that the paths to them are relative to /vagrant.

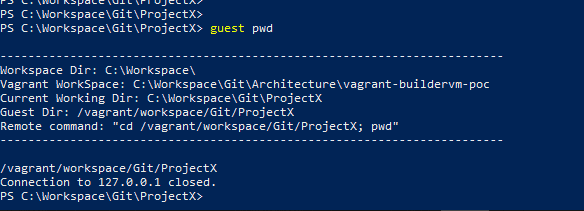

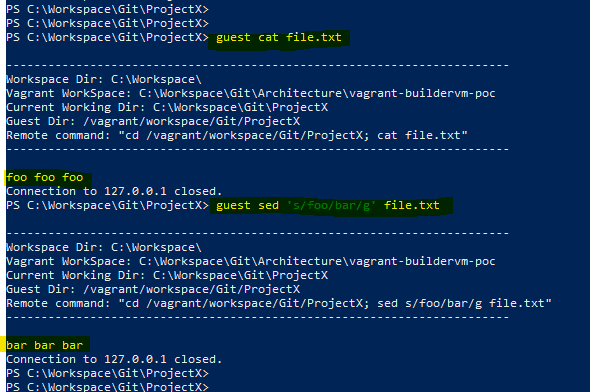

Building a seamless experience…BAT to the rescue

We were pursuing a solution that makes it as easy as possible to jump from working locally to working inside the VM. If possible, we also wanted to be able to make this switch without having to move through different windows.

For this reason, we wrote a couple of utility batch scripts that made the process much easier. We wanted to leverage the fact that our entire workspace directory was synced with the guest VM. This allowed us to infer the path in the workspace on the guest from the current location in the host. For example, if on our host we are at C:\Workspace\ProjectX and the workspace is mapped to /vagrant/workspace, then we wanted the ability to easily run a command in /vagrant/workspace/projectx without having to jump through hoops.

To do this we placed a script on our path that would take a command and execute it in the appropriate directory using Vagrant’s command flag. The great thing about this trick is that it allowed us to trigger builds on the guest with Maven through the IDE by specifying a custom build command.

We also added the ability to the same script to SSH into the VM directly in the path corresponding to the current location on the host. To do this, on VM provisioning we set up a file share that allows us to sync the bashrc directory in the vagrant user’s home folder. This allows us to cd into the desired path (which is derived on the fly) on the guest upon login.
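The path inference described above can be sketched as follows. Our actual helpers were Windows batch scripts; this is a Python rendering of the same idea, offered only as an illustration. The function names are hypothetical, and the example paths (a `C:\Workspace` host root shared as `/vagrant/workspace` on the guest) are assumptions based on the example given earlier. The only Vagrant feature relied upon is the `-c` (command) flag of `vagrant ssh`.

```python
import shlex
from pathlib import PurePosixPath, PureWindowsPath


def guest_path(host_dir, host_root, guest_root):
    """Translate a directory on the Windows host into its path on the guest.

    Raises ValueError if `host_dir` is not inside the shared `host_root`.
    """
    relative = PureWindowsPath(host_dir).relative_to(PureWindowsPath(host_root))
    return str(PurePosixPath(guest_root).joinpath(*relative.parts))


def guest_command(host_dir, host_root, guest_root, command):
    """Build the argument list that runs `command` in the matching guest directory."""
    target = guest_path(host_dir, host_root, guest_root)
    return ["vagrant", "ssh", "-c", f"cd {shlex.quote(target)} && {command}"]
```

For instance, `guest_command(r"C:\Workspace\ProjectX", r"C:\Workspace", "/vagrant/workspace", "mvn package")` yields an argument list that could be handed to `subprocess.run`. Note that this sketch preserves the case of the host directory name rather than lowercasing it.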

Finally, since a good developer is an efficient developer, we also wanted the ability to manage the VM from anywhere. So if, for instance, we have not yet launched the VM, we would not need to keep navigating to the directory hosting the Vagrantfile.

This is standard Vagrant functionality that is made possible by setting the %VAGRANT_CWD% variable. What we added on top is the ability to define it permanently in a dedicated user variable and to set it up only when we wanted to manage this particular environment.

File I/O performance

In the course of testing out the solution, we encountered a few limitations that we think are relevant to mention.

The problems revolved around the file-sharing mechanism. Although there are a number of options available, the approach might not be a fit for certain situations that require intensive file I/O. We first tried to set up a plain VirtualBox file share, and this was a good starting point since it works without requiring much configuration and syncs both ways instantaneously, which is great in most cases.

The first wall was hit as soon as we tried running a front-end build using NPM, which relies on creating soft-links for common dependency packages. Soft-linking requires a specific privilege to be granted on the Windows host, and even then it does not work very well. We tried working around the issue by using rsync, which by default only syncs changes in one direction and runs on demand. Again, there are ways to make it poll for changes, and bi-directionality could theoretically be set up by configuring each direction separately.

However, this creates a race-condition with the risk of having changes reversed or data loss. Another option, SMB shares, required a bit more work to set up and ultimately was not performant enough for our needs.

In the end, we found a solution to make the NPM build run without using soft-links and this allowed us to revert to using the native VirtualBox file share. The first caveat was that this required changes in our source-code repository, which is not ideal. Also, due to the huge number of dependencies involved in one of our typical NPM-based FrontEnd builds, the intense use of File I/O was causing locks on the file share, slowing down performance.

Conclusions

The aim was to extend a workstation running Windows by also running a Linux Kernel, to make it as easy as possible to manage and switch between working in either environment. The end result from our efforts turned out to be a very convenient solution in certain situations.

Our setup was particularly helpful when we needed to run applications in an environment similar to production, or when we wanted to run certain development tooling that is easier to install and configure on a Linux host. We have shown how, with the help of tools like Vagrant and Ansible, it is easy to create a setup that can be shared and recreated consistently while keeping the configuration concise.

From a performance point of view, the solution worked very well for tasks that were demanding from a computation perspective. However, the same cannot be said for situations that required intensive file I/O, due to the overhead in synchronization.

38 Cyber Security Conferences to Attend in 2020

Global cybersecurity protects the infrastructure of global enterprises and economies, safeguarding the prosperity and well-being of citizens worldwide. As IoT (Internet of Things) devices rapidly expand, and connectivity and usage of cloud services increase, cyber-related incidents such as hacking, data breaches, and infrastructure tampering become more common.

Global cybersecurity conferences are a chance for stakeholders to address these issues and formulate strategies to defend against attacks and disseminate knowledge on new cybersecurity policies and procedures.

Benefits of Attending a Cyber Security Conference in 2020:

- Networking with peers

- Education on new technologies

- Outreach

- New strategies

- Pricing information

- Giving back and knowledge-sharing

- Discovering new talent

- Case studies

Below is a list of the top 38 cybersecurity conferences to attend in 2020. As future conference details become confirmed for later in the year, bookmark the page and check back for the latest info.

1. National Privacy and Data Governance Congress

- Date: January 28, 2020

- Location: Toronto, Canada

- Cost: $150

- https://pacc-ccap.ca/events

The National Privacy and Data Governance Congress is an occasion to explore critical issues at the intersection of privacy, law, security, access, and data governance. This event brings together specialists from academia, industry, and government who are involved with compliance, data governance, privacy, security, and access within organizations.

The conference is extensive but provides an adequate amount of time for delegates to ask questions, receive immediate answers, and participate in meaningful discussions with hosts, peers, and decision-makers.

2. NextGen SCADA Europe 2020

- Date: January 30 - February 1, 2020

- Location: Berlin, Germany

- Cost: €995 - €5,230.05

- https://www.smartgrid-forums.com/forums/nextgen-scada-global/?ref=infosec-conferences.com

The 6th Annual NextGen SCADA Europe exhibition and networking conference is back thanks to high demand. It is a dedicated forum that provides the content depth and networking emphasis you need to help with making critical new decisions, such as upgrading your SCADA structure to better meet the needs of the digital grid.

Over three intensive days, you can take part in 20+ utility case studies covering the critical subjects of integration, system architecture, cybersecurity, and functionality. Enroll now to gain insight into why these case studies are generating so much interest in cybersecurity circles.

3. SANS Security East

- Date: February 1 - 8, 2020

- Location: New Orleans, Louisiana, United States

- Cost: Different prices for different courses. Most courses cost $7,020. An online course is available.

- https://www.sans.org/event/security-east-2020

Jump-start the New Year with one of the first training seminars of 2020. Attend the SANS Security East 2020 in New Orleans for an opportunity to learn new cybersecurity best practices for 2020 from the world’s top experts. This training experience is to assist you in progressing your career.

SANS’ development is unchallenged in the industry. The organization provides fervent instructors who are prominent industry specialists and practitioners. Their applied knowledge adds significance to the teaching syllabus. These skilled instructors guarantee you will be capable of utilizing what you learn immediately. From this conference, you can pick from over twenty information security courses that are prepared by first-rate mentors.

4. International Conference on Cyber Security and Connected Technologies (ICCSCT)

- Date: February 3 - 4, 2020

- Location: Tokyo, Japan

- Cost: $260 - $465

- https://iccsct.coreconferences.com

The 2020 ICCSCT is a leading research conference focused on presenting new developments in cybersecurity. It takes place every year to give individuals a platform to share opinions and experiences.

The International Conference on Cyber Security and Connected Technologies centers on the many newly emerging areas of cybersecurity and connected technologies.

5. Manusec Europe: Cyber Security for Critical Manufacturing

- Date: February 4 - 5, 2020

- Location: Munich, Germany

- Cost: $999 - $2,999

- https://europe.manusecevent.com

As the industrial sector continues to adopt advancements in technology, it becomes vulnerable to an assortment of cyber threats. To be best equipped to tackle cyber threats in the twenty-first century, organizations must involve employees at all levels in cooperating and instituting best-practice strategies to guard vital assets.

This event will bridge the gap between senior corporate IT and process control professionals, allowing teams to discuss critical issues and challenges, as well as to debate cybersecurity best-practice guidelines.

6. The European Information Security Summit

- Date: February 12 - 13, 2020

- Location: London, England, United Kingdom

- Cost: £718.80 - £2,400.00

- https://www.teiss.co.uk/london

This event, known as TEISS, is currently one of the leading and most wide-ranging cybersecurity conferences in Europe. It features parallel sessions in the following four streams:

- Threat Landscape stream

- Culture and Education stream

- Plenary stream

- CISOs & Strategic stream

Join over 500 specialists in the cybersecurity industry and take advantage of different seminars.

7. Gartner Data & Analytics Summit

- Date: February 17 - 18, 2020

- Location: Sydney, Australia

- Cost: $2,750 - $3,350

- https://www.gartner.com/en/conferences/apac/data-analytics-australia

Data and analytics are taking hold in every industry as they become the core of digital transformation. To survive and flourish in the digital age, a grasp of data and analytics is critical for industry players.

Gartner is currently the global leader among IT conference providers. You, too, can benefit from its research, exclusive insight, and unmatched peer networking. Reinvent your role and your business by joining the 50,000+ attendees who walk away from this seminar annually, armed with a better understanding and the right tools to make their organizations leaders in their industries.

8. Holyrood Connect’s Cyber Security

- Date: February 17 - 23, 2020

- Location: Edinburgh, Scotland, United Kingdom

- Cost: £99

- http://cybersecurity.holyrood.com/about-the-event

With cyber threats accelerating in frequency, organizations must protect themselves from the potentially catastrophic consequences of security breaches.

At a time when merely being aware of security threats is no longer enough, Holyrood’s annual cybersecurity conference will explore the latest developments, emerging threats, and current practice.

Join relevant stakeholders, experts, and peers as they explore the next steps to reinforce defenses, improve readiness, and maintain cyber resilience.

Critical issues to be addressed:

- Up-to-date briefing on the latest in cybersecurity practice and policy

- Expert analysis of the emerging threat landscape both at home and abroad

- Good practice and innovation in preventing, detecting and responding to cyber attacks

- Developing a whole-organization approach to cyber hygiene – improving awareness, culture, and behavior

- Horizon scanning: cybersecurity and emerging technology

9. 3rd Annual Internet of Things India Expo

- Date: February 19 - 21, 2020

- Location: Pragati Maidan, New Delhi, India

- Cost: Free for Visitors

- https://www.iotindiaexpo.com

The Internet of Things is a business transformation vital to governments, companies, and consumers, and it is reshaping all industries. The IoT India Expo will concentrate on the Internet of Things ecosystem, including central bodies, software, services, and hardware. The expo will focus in particular on:

- Industrial IoT

- Smart Appliances

- Robotics

- Cybersecurity

- Smart Solutions

- System Integrators

- Smart Wearable Devices

- AI

- Cloud Computing

- Sensors

- Data Analytics

- And much more…

10. BSides Tampa

- Category: General Cyber Security Conference

- Date: February 20, 2020

- Location: Tampa, Florida, United States

- Cost: General admission $50; discounts for specific groups such as teachers, military, and students

- https://bsidestampa.net

The BSides Tampa conference focuses on offering attendees the latest information on security research, development, and uses. The conference is held yearly in Tampa and features various demonstrations and presentations from the best available in industry and academia.

Attendees have the opportunity to attend numerous training classes throughout the conference. These classes provide individual technical courses on subjects ranging from penetration testing and security abuse to cybersecurity certifications.

11. RSA Conference USA

- Date: February 24 - 28, 2020

- Location: San Francisco, California, United States

- Cost: $750 - $1,995

- https://www.rsaconference.com/usa

The ease of joining the digital space brings with it the risk of real-world cyber dangers. The 2020 RSA Conference focuses on managing the cyber threats that prominent organizations, agencies, and businesses are facing.

This event is well known in the US as well as in Abu Dhabi, Europe, and Singapore. The RSA Conference is renowned as one of the leading information security conferences held each year. The event’s objective is to draw on the effort that industry leaders put into study and research.

12. RSA Conference, Cryptographers’ Track

- Date: February 24 - 28, 2020

- Location: San Francisco, California, United States

- Cost: $750 - $1,995

- https://www.rsaconference.com/usa

More than 40,000 industry-leading specialists attend the event, as it is the premier industry showcase for the security business. As part of one of the industry’s leading cybersecurity events, the 2020 Cryptographers’ Track is a venue for scientific papers on cryptography. It is a fantastic place for the broader cryptologic community to get in touch with attendees from government, industry, and academia.

13. Hack New York City 2020

- Date: March 6 - 8, 2020

- Location: Manhattan, New York, United States

- Cost: Free

- https://hacknyu.org

Hack NYC is about sharing ideas on how we can improve our daily cybersecurity practices and overall economic strength. The threat of attacks targeting Critical National Infrastructure is real, as the services supporting businesses and communities face common weaknesses and an implicit dynamic threat.

Hack NYC emphasizes our planning for, and resilience to, the real potential for kinetic cyberattack. Be part of crucial solutions and help mitigate risks aimed at Critical National Infrastructure.

14. Healthcare Information and Management Systems Society (HIMSS)

- Date: March 9 - 13, 2020

- Location: Orlando, Florida, United States

- Cost: $195 - $1,485

- https://www.himssconference.org

Over 40,000 health IT specialists, executives, vendors, and clinicians come together from all over the globe for the yearly HIMSS exhibition and conference. Outstanding education, first-class speakers, cutting-edge health IT products, and influential networking are hallmarks of this event. Over three hundred education programs feature discussions and workshops, leadership meetings, keynotes, and an entire day of pre-conference seminars.

15. 14th International Conference on Cyber Warfare and Security

- Date: March 12 - 13, 2020

- Location: Norfolk, Virginia, United States

- Cost: £365 - £690

- https://www.academic-conferences.org/conferences/iccws

The 14th Annual ICCWS is an occasion for academics, consultants, military personnel, and practitioners from around the world to explore new methods of fighting data threats. Cybersecurity conferences like this one offer the opportunity to improve information systems security and share concepts.

New risks arising from cloud migration and social networking are a growing focus for the research community, and the sessions are designed to cover these matters in particular. Join key players as ICCWS addresses cyber warfare, security, and information warfare.

16. PROTECT International Exhibition and Conference on Security and Safety

- Date: March 16 - 17, 2020

- Location: Manila, Philippines

- Cost: Free for visitors; $625 for delegates

- http://protect.leverageinternational.com

Leverage International (Consultants) Inc. organizes this annual international conference and exhibition on safety and security. PROTECT was first started in 2005 by the Anti-Terrorism Council. It is the only government-private sector partnership series in the Philippines dedicated to protection and security. It includes an international exhibition, a high-level conference, free-to-attend practical workshops, and networking opportunities.

17. TROOPERS20

- Date: March 16 - 20, 2020

- Location: Heidelberg, Germany

- Cost: 2,190€ - 3,190€

- https://www.troopers.de

The TROOPERS20 conference is a two-day event offering hands-on experience, discussions of current IT security matters, and networking with attendees as well as speakers.

During the two-day seminar, you can expect discussions on numerous issues. There are also practical demonstrations on the latest research and attack methods to bring the topics closer to the participants.

The conference also includes discussions about cyber defense and risk management, as well as relevant demonstrations of InfoSec management matters.

18. 27th International Workshop on Fast Software Encryption

- Date: March 22 - 26, 2020

- Location: Athens, Greece

- Cost: $290 - $630

- https://fse.iacr.org/2020

The 2020 Fast Software Encryption conference, organized by the International Association for Cryptologic Research (IACR), will take place in Athens, Greece. FSE is widely recognized as the leading international event in the field of symmetric cryptology.

This event will cover many topics, both practical and theoretical, including the design and analysis of block ciphers, stream ciphers, encryption schemes, hash functions, message authentication codes, authenticated encryption schemes, cryptanalysis, and evaluation tools, and secure implementations.

The IACR has been organizing FSE since 2002 and is an international organization with over 1,600 members that brings researchers in cryptology together.

The conference concentrates on fast and secure primitives for symmetric cryptography, including the design and analysis of:

- Block ciphers

- Encryption schemes

- Stream ciphers

- Authenticated encryption schemes

- Hash functions

- Message authentication codes

- Cryptanalysis and evaluation tools

Sessions will also address problems and solutions concerning secure implementations.

19. Vienna Cyber Security Week 2020

- Date: March 23 - 27, 2020

- Location: Vienna, Austria

- Cost: $295

- https://www.energypact.org

Austrian non-governmental affiliates, international governmental entities, and the Energypact Foundation present this year’s conference. Its aim is to bring significant global stakeholders together for discussion and collaboration on cybersecurity. The primary focus is critical infrastructure, with an emphasis on the energy sector.

20. Cyber Security for Critical Assets (CS4CA) USA

- Date: March 24 - 25, 2020

- Location: Houston, Texas, United States

- Cost: $1,699 - $2,999

- https://usa.cs4ca.com

The annual CS4CA features two dedicated streams for OT and IT, allowing delegates to focus on their professional areas of interest. The discussions are intended to tackle some of the most common problems that connect both sets of specialists.

Each stream is curated by a group of prominent industry professionals to be as relevant, up-to-date, and detailed as possible over the two days. Throughout this conference, you can expect opportunities to take relevant tests, get inspired by some of the world’s prominent cybersecurity visionaries, and network with colleagues.

21. World Cyber Security Congress 2020

- Date: March 26 - 27, 2020

- Location: London, England, United Kingdom

- Cost: £195 - £295

- https://www.neventum.com/tradeshows/world-cyber-security-congress

The World Cyber Security Congress is an advanced international conference that attracts CISOs as well as other cybersecurity specialists from various sectors. Its panel of 150+ outstanding speakers represents a wide range of verticals, such as:

- Finance

- Retail

- Government

- Critical Infrastructure

- Transport

- Healthcare

- Telecoms

- Educational Services

The World Cyber Security Congress is a senior-level meeting created with CISOs and Heads of Information Security in mind, as well as data analytics leaders, Heads of Risk and Compliance, CIOs, and CTOs.

22. InfoSec World 2020

- Date: March 30 - April 1, 2020

- Location: Lake Buena Vista, Florida, United States

- Cost: $50 - $3,695

- https://www.infosecworldusa.com

The 2020 InfoSec World conference will feature over one hundred industry specialists providing applied, practical instruction on a wide array of security matters. It also offers an opportunity for security specialists to explore and discuss concepts with colleagues.

Throughout the conference, attendees will have plenty of opportunities to increase their knowledge on this world-class platform headed by the industry’s prominent specialists. They will also have a chance to earn 47 CPE credits over the one-week course or attend New Tech Lab sessions presented in real-life scenarios. Attendees can also participate in a virtual career fair, joining via a tablet or at the fair section of the Expo.