RAML Tutorial: Using RAML to Document REST API Contracts

In an ideal world, our programs, interfaces, and APIs would not need documentation. Our clients would all be magical beings, able to read our minds and understand precisely what it is our system does and how to use it.

Unfortunately, this is not the status quo today. Many enterprises rely on hand-written documentation (usually in PDF or Microsoft Word format) in a bid to make life easier for integrating developers. Hypermedia evangelists, in particular, are not pleased with this situation. On the web, it would be like being handed a manual for every site we visit. Like websites, APIs should be self-documenting and give users enough information to use them and navigate across API states. Many enterprises, however, are reluctant to do without a “document”, particularly in areas like FinTech, where clients tend to be more conservative in the ways they work and the technologies they adopt.

To add insult to injury, these manual documents are written in a separate sphere from the implementation, sometimes by entirely different departments or teams within the enterprise. As a result, synchronization issues are relatively common and hard to fix, since manual review is pretty much the only way to identify and correct them.

At this point, many old-school SOAP aficionados rear their heads and make fun of this newfangled REST stuff: “… in our day we had the WSDL! You could generate everything from the WSDL! You don’t have a WSDL in REST, ha!” And even though SOAP is inferior to REST in many ways, the WSDL was the one thing that was almost universally loved (except, perhaps, by the person who had to author it), and it made it easier for clients to understand and integrate with APIs.

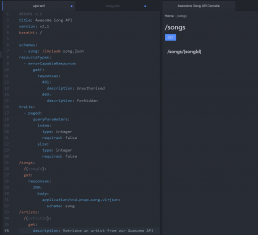

Luckily, REST now has its “WSDL.” Three emerging technologies, RAML, Swagger, and API Blueprint, aim to document the contract that is our API in a way that is simple and easy to use. For the rest of the article, we will use RAML for our discussion and examples, although the same points apply to Swagger and API Blueprint. We chose RAML because, at the time of our evaluation, it was straightforward to write and understand while providing constructs that strongly encourage reuse.

What is RAML?

RESTful API Modeling Language, aka RAML, is an open, YAML-based language designed to document REST APIs. Because it is based on YAML, indentation is used to create structure, leaving the document free of braces and other text that is not directly relevant to the content being documented.

My favorite aspect of RAML as a language is that it has a very short learning curve, along with good documentation, editors, and tutorials to get developers going. The open nature of the language means that plenty of tooling is being developed in this space. Another great feature is that the language encourages reuse through traits, resource types, and other bits and bobs that allow us to define concepts once and reuse them across our API stack. This not only saves time but also encourages consistency.
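
To make the indentation-based structure and trait reuse concrete, here is a minimal Python sketch that renders a small RAML 1.0 document from an in-memory model. The `render_raml` helper and the shape of its model are hypothetical; a real project would write RAML by hand or use dedicated RAML tooling. The point is that the `paged` trait is declared once at the root and then reused by two resources through `is:`.

```python
"""Render a minimal RAML 1.0 document from a small in-memory model.

Hypothetical helper for illustration only; it does no YAML escaping,
so names and types must be simple identifiers.
"""


def render_raml(title, traits, resources):
    """Return RAML text in which each trait is defined once and reused via `is:`."""
    lines = ["#%RAML 1.0", f"title: {title}"]
    if traits:
        lines.append("traits:")
        # Each trait is declared once at the root (here: only query parameters).
        for name, params in traits.items():
            lines.append(f"  {name}:")
            lines.append("    queryParameters:")
            for param, param_type in params.items():
                lines.append(f"      {param}: {param_type}")
    # Resources and their methods are nested purely by indentation, as in YAML.
    for path, methods in resources.items():
        lines.append(f"{path}:")
        for method, used_traits in methods.items():
            lines.append(f"  {method}:")
            if used_traits:
                lines.append(f"    is: [{', '.join(used_traits)}]")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_raml(
        "Books API",
        {"paged": {"page": "integer", "size": "integer"}},
        {"/books": {"get": ["paged"]}, "/authors": {"get": ["paged"]}},
    ))
```

Running it prints a document in which `/books` and `/authors` share the same `page` and `size` query parameters without repeating their definitions.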

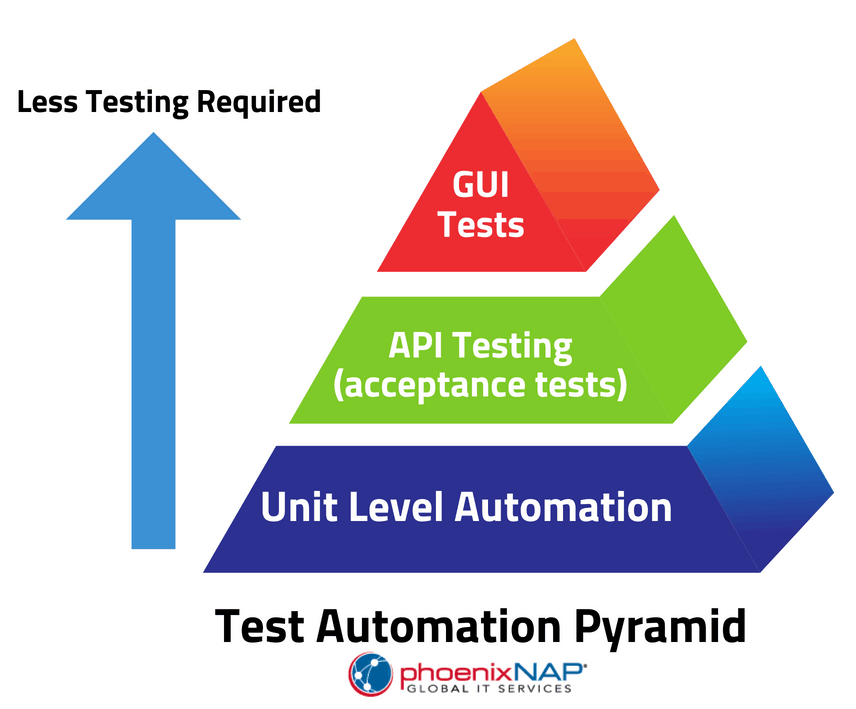

The main benefit of using RAML (or any other API documentation language) is that we produce a machine-readable document. Turning it into a polished document we can send to prospective clients may take an extra step, but the same RAML file can also be used to generate many kinds of documentation, each consumed by a different audience:

- A simplified version for management/evaluation

- Interactive documentation including Sandbox for integrating developers

- Code generation for internal developers

- Test Generation and Verification of endpoints for QA teams and internal developers

- GUI generation for rapid prototyping

And the list goes on.
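
As a rough illustration of "one machine-readable source, many outputs", the Python sketch below derives a management summary and a QA checklist from the same API description. `API_MODEL` is a hypothetical stand-in for a parsed RAML file; its shape is a simplifying assumption, not the model produced by any real RAML parser.

```python
"""Derive two different outputs from one machine-readable API description.

API_MODEL stands in for a parsed RAML file; its shape is a simplifying
assumption, not the model produced by any real RAML parser.
"""

API_MODEL = {
    "title": "Example Store API",
    "resources": {
        "/orders": {
            "get": {"description": "List orders", "responses": [200]},
            "post": {"description": "Create an order", "responses": [201, 400]},
        },
        "/orders/{id}": {
            "get": {"description": "Fetch one order", "responses": [200, 404]},
        },
    },
}


def management_summary(model):
    """One line per operation: a simplified view for management/evaluation."""
    return [
        f"{method.upper()} {path}: {op['description']}"
        for path, methods in model["resources"].items()
        for method, op in methods.items()
    ]


def qa_checklist(model):
    """(METHOD, path, status) triples a QA team could turn into endpoint checks."""
    return [
        (method.upper(), path, status)
        for path, methods in model["resources"].items()
        for method, op in methods.items()
        for status in op["responses"]
    ]


if __name__ == "__main__":
    print("\n".join(management_summary(API_MODEL)))
    for check in qa_checklist(API_MODEL):
        print(check)
```

Both functions read the same model, so a change to the contract flows into every generated artifact at once.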

Contract-first vs Code-first

We can apply RAML to our projects using two approaches: “Contract-First,” i.e., when the RAML file is written before implementation starts, or “Code-First,” where the implementation precedes or is done in sync with RAML development. The two have very different use cases.

Let’s discuss contract-first first. In this approach, effort goes into the API documentation before coding begins. The main benefit is that teams focus on how the APIs will be consumed rather than on what is available under the hood, and make design decisions that ultimately result in a better experience for integrating clients.

Another benefit is that during the development of the API, tools such as the API Console or other WYSIWYG editors provide a live view of the API as it is built. This view can be mocked and exercised by various team members (UI developers, product stakeholders, etc.) and offers a very visual way of spotting issues and improving the design. Once the document is complete, it can serve as an integration point between teams: JavaScript/UI developers can code against a mocked variant of the RAML file, while backend developers use it to generate code or tests.
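
The mocking idea can be sketched in Python as a function that answers requests with the example bodies stored in a contract. This is a minimal sketch under stated assumptions: `MOCK_CONTRACT` is a hypothetical stand-in for a parsed RAML file with one example per method, and the helpers are illustrative names, not the API of any RAML mocking service.

```python
"""Serve canned responses straight from the examples in a contract.

A minimal sketch: MOCK_CONTRACT stands in for a parsed RAML file with one
example body per method. Every matching request gets the same example.
"""
import json

MOCK_CONTRACT = {
    "/users/{id}": {
        "get": {"status": 200, "example": {"id": 1, "name": "Ada"}},
    },
}


def matches(template, path):
    """True if a concrete path such as /users/42 fits a template like /users/{id}."""
    t_parts = template.strip("/").split("/")
    p_parts = path.strip("/").split("/")
    if len(t_parts) != len(p_parts):
        return False
    return all((t.startswith("{") and t.endswith("}")) or t == p
               for t, p in zip(t_parts, p_parts))


def mock_response(contract, method, path):
    """Return (status, JSON body) for a request, taken from the contract's example."""
    for template, methods in contract.items():
        if matches(template, path):
            op = methods.get(method.lower())
            if op is None:
                return 405, json.dumps({"error": "method not allowed"})
            return op["status"], json.dumps(op["example"])
    return 404, json.dumps({"error": "not found"})


if __name__ == "__main__":
    print(mock_response(MOCK_CONTRACT, "GET", "/users/42"))
```

A UI developer can code against responses like these long before the backend exists; the trade-off is that the mock ignores path parameters and always returns the documented example.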

We are trying to move all our internal development to a contract-first approach, since its only drawback is a small additional step in the development process. This approach is not always possible, however, especially in existing projects with a large API footprint. In these cases, a big-bang documentation shift can be hard to justify, and a code-first approach can be adopted instead.

A code-first approach does not help improve the overall design, but it brings different benefits. For a start, it can be applied to an existing code base to generate documentation, with all the associated benefits, for minimal effort. This documentation gives visibility to teams who operate outside the backend. At phoenixNAP, we have applied this technique successfully using an in-house plugin that generated a RAML file from custom annotations in the backend of our phoenixNAP Client Portal web application. Our front-end teams used this internal RAML to improve communication with the backend developers, which allowed us to build the UI and the backend for a task simultaneously.

The plugin was later extended to generate RAML from standard Spring MVC annotations. It was open-sourced under the Apache 2.0 License and is available on GitHub.
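
The code-first idea can be sketched in Python as a rough analogue of generating RAML from Spring MVC annotations: decorators record each handler's method, path, and description, and a small renderer groups them into RAML. The `route` decorator, `REGISTRY`, and `to_raml` are hypothetical names; this is not how the open-sourced plugin is implemented.

```python
"""Code-first: collect route metadata from decorators, then emit RAML 1.0.

Hypothetical analogue of generating RAML from annotations; route(),
REGISTRY and to_raml() are illustrative names only.
"""

REGISTRY = []  # (method, path, description) tuples recorded at import time


def route(method, path, description=""):
    """Record an endpoint's metadata and return the handler unchanged."""
    def decorator(handler):
        # Fall back to the handler's docstring when no description is given.
        REGISTRY.append((method.lower(), path, description or handler.__doc__ or ""))
        return handler
    return decorator


@route("GET", "/tickets", "List support tickets")
def list_tickets():
    return []


@route("POST", "/tickets")
def create_ticket():
    """Open a new support ticket"""
    return {}


def to_raml(title, registry):
    """Group recorded endpoints by path and render them (no YAML escaping)."""
    by_path = {}
    for method, path, description in registry:
        by_path.setdefault(path, []).append((method, description))
    lines = ["#%RAML 1.0", f"title: {title}"]
    for path, operations in by_path.items():
        lines.append(f"{path}:")
        for method, description in operations:
            lines.append(f"  {method}:")
            if description:
                lines.append(f"    description: {description}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_raml("Support API", REGISTRY))
```

Because the metadata lives next to the handlers, the generated RAML is refreshed simply by re-running the renderer after the code changes.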

In the architecture department, the RAML plugin is used within our Spring Boot reference container to allow us to create API-driven proofs-of-concept rapidly. For these internal research projects, development speed is critical since their purpose is to validate or illustrate an approach we plan to take. Rather than having to develop an interface, writing simple APIs which are parsed by the SpringMVC RAML plugin provides us with an interface which we can then use to evaluate the solution. This tooling allows for very rapid prototyping and can also allow us to ship the POC to other departments without having to spend time developing an interface or documentation.

Keeping Things in Sync

One of the drawbacks (and, in some ways, benefits) of API documentation languages is that they are separate from the implementation. This can leave us with multiple sources of “truth”, much as a PDF and its source Word document can drift apart when changes are applied to one but not the other.

The most significant difference is that unlike PDF/Word, where a manual review is the only solution, in the RAML world we have tooling that can help us keep these documents in check.

Abao (https://github.com/cybertk/abao/) is a tool that parses the RAML file and executes tests against our endpoints to check whether the implementation respects the contract. The SpringMVC RAML parser works in the opposite direction: it generates a RAML model from the implementation and compares it to the contract being published. It can be used as a Maven plugin that triggers a build failure when incompatibilities are detected.
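
The comparison step can be sketched in Python under heavy simplifying assumptions: both the published contract and the model generated from the implementation are reduced to sets of (METHOD, path) pairs. The function names are hypothetical, and this is not the SpringMVC RAML plugin's actual logic, which compares far more than endpoint lists.

```python
"""Flag drift between a published contract and the running implementation.

A minimal sketch: both sides are reduced to sets of (METHOD, path) pairs,
which ignores parameters, bodies, and response codes that a real contract
check would also compare.
"""


def incompatibilities(contract, implementation):
    """Return human-readable problems; an empty list means the two agree."""
    problems = [f"missing in implementation: {m} {p}"
                for m, p in sorted(contract - implementation)]
    problems += [f"undocumented endpoint: {m} {p}"
                 for m, p in sorted(implementation - contract)]
    return problems


def exit_code(problems):
    """Non-zero tells a CI step to fail the build, as a build plugin would."""
    return 1 if problems else 0


if __name__ == "__main__":
    published = {("GET", "/orders"), ("POST", "/orders")}
    generated = {("GET", "/orders"), ("DELETE", "/orders/{id}")}
    issues = incompatibilities(published, generated)
    print("\n".join(issues))
    print("exit code:", exit_code(issues))
```

In a CI script, passing the result of `exit_code` to `sys.exit` would turn any detected drift into a failed build.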

Conclusion

API documentation languages are at an exciting stage.

The open and straightforward nature of these languages makes adoption and extension reasonably simple. When we introduced RAML, there were minimal issues and many benefits out of the box, and we were able to build our own tools on top of these technologies. With so much on the table, is there really any point in using static documents to describe our APIs anymore?

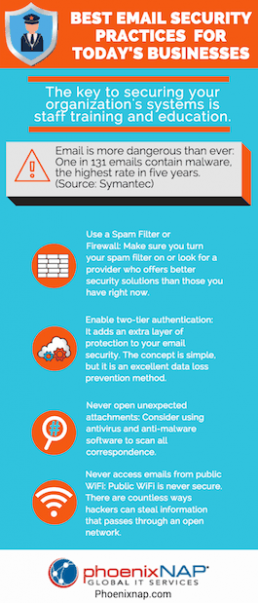

SECaaS: Why Security as a Service is a Trend To Watch

Your company is facing new cybersecurity threats daily. Learn how Security as a Service (SECaaS) efficiently protects your business.

The cybersecurity threat landscape is rapidly expanding. Technology professionals are fending off attacks from all directions.

The lack of security expertise in many organizations is a challenge that is not going away anytime soon.

CIOs and CSOs have quickly realized that creating custom solutions is often too slow and expensive.

They now see managed security service providers (MSSPs) as the best way to maintain protection, and software-as-a-service (SaaS) is becoming a more comfortable concept for many technology professionals.

What is Security as a Service?

SECaaS is a way to outsource complex security needs to experts in the field while allowing internal IT and security teams to focus on core business competencies.

Not long ago, security was considered a specialization that needed to be in-house. Most technology professionals spent only a small portion of their time ensuring that backups always ran, the perimeter was secure, and firewalls were in place. Security was viewed in relatively black-and-white terms, with a more inward focus. Antivirus software offers only basic protection; it is not enough to secure against today’s threats.

Fast forward to today, where risks are mounting from all directions. Data assets spend a significant portion of their life in transit, both within and outside the organization. Many organizations introduce new software platforms weekly, if not daily. It is more difficult than ever to maintain a secure perimeter and accessible data while staying competitive and agile.

Threat Protection from All Sides

Today’s business users are savvier about accessing secure information. Yet many are less aware of the ways they could be opening their networks to external attacks.

This causes a nightmare for system administrators and security professionals alike as they attempt to batten down the hatches of their information and keep it truly secure. Advanced threats from external actors who are launching malware and direct attacks at a rate of thousands per day are a challenge.

The drive towards accessibility of data and platforms at all times causes a constant tension between business users and technology teams. Security technologists seek to lock down internal networks at the same time users are clamoring for the ability to bring their own device to work.

There is a significant shift in today’s workforce towards the ability to work whenever and wherever the individual happens to be.

This makes it crucial that technology teams can provide a great user experience without placing too many hurdles in the way of productivity.

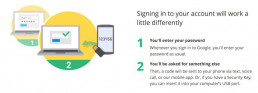

When business users find an obstacle, they are likely to come up with an unacceptable workaround that is less secure than the CSO would like. Account requirements too prohibitive?

No problem. Users will just share their usernames and passwords with internal and external parties, providing easy access to confidential information. And these are only the internal threats. External forces are constantly banging on your digital doors, looking for a point of weakness to exploit.

Cybercriminals are active throughout the world, and no business is immune to this threat. Annual damage from cybercrime is set to exceed $6 trillion by 2021, double the 2015 figure.

The amount of wealth changing hands due to cybercrime is astronomical. This can be a heavy incentive both for businesses to become more secure and for criminals to continue their activity. Spending on cybersecurity is also rising at a rapid rate and expected to continue that trend for quite some time. However, businesses are struggling to find or train individuals in the wide spectrum of skills required to combat cyberterrorism.

Benefits of Security as a Service

SECaaS has a variety of benefits for today’s businesses including providing a full suite of managed cloud computing services.

Staffing shortages in information security fields are beginning to hit critical levels.

Mid-size and smaller businesses are unlikely to have the budget to hire these professionals. IT leaders anticipate that this issue will get worse before it improves. Technology budgets are feeling the strain. Businesses need to innovate to stay abreast of the competition.

The costs involved with maintaining, updating, patching, and installing software are very high. There are additional requirements to scale platforms and secure data storage on demand. These are all areas where cloud-based security provides a measure of relief for strained IT departments.

Managed cloud SECaaS businesses have the luxury of investing in the best in the business from a security perspective — from platforms to professionals. Subscribers gain access to a squad of highly trained security experts using the best tools that are available on the market today and tomorrow. These security as a service providers are often able to deploy new tech more rapidly and securely than a single organization.

Automating Manual Tasks

Having someone continually review your business logs to ensure software and data are still secure is probably not a good use of time. However, SECaaS platforms can monitor your entire employee base while also balancing endpoint management.

Results are delivered in real time, with automated alerts triggered when unusual activity is logged. Completing these tasks automatically allows trained technology professionals to focus on efforts that move the business forward while much of the protection happens behind the scenes. Benchmarking, contextual analytics, and cognitive insights give workers quick access to items that may be questionable, keeping work moving without manual drudgery.
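
As a toy illustration of rule-based alerting, the Python sketch below flags an account when failed logins reach a threshold inside a sliding time window. The event format, the threshold of 5, and the 60-second window are assumptions chosen for the example; commercial SECaaS platforms combine many such signals with far richer analytics.

```python
"""Raise an alert when failed logins for one account spike in a short window.

A toy rule-based alert; the event shape, the 5-failure threshold, and the
60-second window are arbitrary assumptions, not any vendor's detection logic.
"""
from collections import defaultdict, deque


def failed_login_alerts(events, threshold=5, window_seconds=60):
    """events: (timestamp_seconds, user, succeeded) tuples in time order."""
    recent = defaultdict(deque)  # user -> timestamps of failures in the window
    alerts = []
    for ts, user, succeeded in events:
        if succeeded:
            continue
        window = recent[user]
        window.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while ts - window[0] > window_seconds:
            window.popleft()
        # Alert at the moment the count reaches the threshold.
        if len(window) == threshold:
            alerts.append((ts, user))
    return alerts


if __name__ == "__main__":
    burst = [(i * 10, "alice", False) for i in range(5)]
    print(failed_login_alerts(burst))
```

Slow, scattered failures never fill the window, so only bursts that look like guessing attacks produce an alert.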

Reducing Complexity Levels

Does your information technology team have at least a day each week to study updates and apply patches to your systems? If not, your business may be a prime candidate for security as a service.

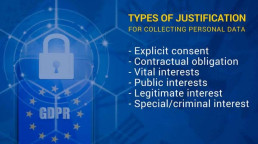

It is becoming nearly impossible for any IT team to stay updated on all platforms, see how their security needs interact with the other platforms you are using, and then apply the appropriate patches. Many organizations require multiple layers of protection because they store personally identifiable information (PII), which adds to the complexity.

Protecting Against New Threats

Cybercriminals are always looking for new ways to attack a large number of systems at once. Global ransomware damage costs are in the billions of dollars, and by 2020 an attack is predicted to occur approximately every 14 seconds.

Prominent investors such as Warren Buffett have said that cyber attacks are the worst problem faced by humankind, even worse than nuclear weapons. The upfront cost of paying a ransom is only the tip of the iceberg when it comes to damages. Businesses are facing hundreds of thousands of dollars in direct and indirect costs associated with regaining access to their information and software.

Examples of Security as a Service Provider Offerings

Traditional managed providers are enhancing their security offerings to cover incident management, mobile and endpoint management, web and network security threats, and more.

SECaaS is a sub-category of SaaS and continues to be of interest to businesses of all sizes as complexity levels rise.

Today’s security as a service vendors go beyond the traditional central management console and include:

- Security analysis: Review current industry standards and audit whether your organization is in compliance.

- Performance balancing with cloud monitoring tools: Guard against a situation where a particular application or data pathway is unbalancing the infrastructure.

- Email monitoring: Security tools to detect and block malicious emails, including spam and malware.

- Data encryption: Your data in transit is much more secure with the addition of cryptographic ciphers.

- Web security: Web application firewall management that monitors and blocks threats from the web in real time.

- Business continuity: Effective management of short-term outages with minimal impact to customers and users.

- Disaster recovery: Multiple redundancies and regional backups offer a quick path to resuming operations in the event of a disaster.

- Data loss prevention: DLP best practices include tracking and review of data that is in transit or in storage, with additional tools to verify data security.

- Access and identity management: Everything from password to user management and verification tools.

- Intrusion Management: Fast notifications of unauthorized access, using machine learning and pattern recognition for detection.

- Compliance: Knowledge of your specific industry and how to manage compliance issues.

- Security Information and Event Management (SIEM): Log and event information is aggregated and shown in an actionable format.

While offerings from security as a service companies may differ, these are some of the critical needs for external security management platforms.

Once you have a firm grasp of what can be offered, here’s how you can evaluate vendor partners based on the unique needs of your business.

Evaluating SECaaS Providers

Security has come to the forefront as businesses continue to rely on partners to perform activities from infrastructure support to data networks. This shift in how organizations view information risk makes it challenging to evaluate a potential cloud computing solution as a fit.

The total cost of ownership (TCO) for working with a SECaaS partner should represent significant savings for your organization, especially when weighed against performing these activities internally. Evaluate total costs by looking at the expense of hiring information security professionals and building adequate infrastructure and reporting dashboards for monitoring. When requesting estimates, be sure to fully disclose items such as total web traffic, the number of domains and data sources, and other key metrics.

The level of support provided, guaranteed uptime, and SLAs are also essential statistics. Your vendor should be able to give you detailed information on the speed of disaster recovery, as well as on how quickly infiltrations are identified and issues resolved. Since a disaster is the least likely scenario, you should also review the time needed to address simple problems, such as a user who is locked out of their account or adding a new individual to your network. A full security program will allow your network managed service provider to pinpoint problems quickly.

It is critical that the solution you select works with the business systems already in use. Secure cloud solutions are often easier to transition between than on-premise options. It is often better to work with a single vendor that provides as many cloud services as possible: this allows for bundled pricing and can improve how well software packages work together.

Your team can monitor system health and data protection with real-time dashboards and reporting. This is valuable whether or not a vendor is also overseeing the threat detection process. You will improve the internal comfort level of your team while providing ready access to individuals who are most familiar with the systems. This availability of data will keep everything working smoothly. Be sure that your vendor understands how to provide actionable insight. They should also make recommendations for improving your web security. Access is always a concern.

Evaluating core IT security strategy factors helps keep your organization’s goals aligned. A proactive SECaaS vendor-partner adds value to the business by providing DDoS protection, risk management, and more.

Security challenges for today’s CIOs & CSOs are Real

Hackers target businesses of all sizes for ransomware and phishing attacks. Staying vigilant is no longer enough.

Today’s sophisticated environment requires proactive action taken regularly with the addition of advanced activity monitoring. Keeping all of this expertise in-house can be overly expensive. The costs involved with creating quality audits and control processes can also be quite high.

Security in the cloud offers the best of both worlds.

Learn more about our security as a service. Request a free initial consultation with the experts at phoenixNAP.

19 Best Automated Testing Tools For 2020

This article was updated in December 2019.

Before you begin introducing test automation into your software development process, you need a solution. A successful test automation strategy depends on identifying the proper tool.

This post compiles the best test automation tools.

The Benefits of Automated Testing Over Manual

Automated software testing solutions do a significant portion of the work otherwise done by manual testing, reducing labor overhead costs and improving accuracy. Automated testing is, well, not manual. Rather than having to program everything from the ground up, developers and testers use sets of pre-established tools.

This improves the speed of software testing and also increases reliability and consistency. Testers do not need to worry about the robustness of the testing tool itself, nor about maintaining and managing it. They only need to test their own application.

When it comes to automating these tests, being both thorough and accurate is a necessity. The tools’ developers have already tested these automated solutions for thoroughness and accuracy, and the solutions often come with detailed reporting and analysis that can be used to further improve applications.

Even when custom scripted, an automated testing platform is going to provide stability and reliability. Essentially, it creates a foundation on which the testing environment can be built. Depending on how sophisticated the program is, the automated solution may already provide all of the tools that the testers need.

Types of Automated Software Testing Tools

There are a few things to consider when choosing an automated testing platform:

Open-source or commercial?

Though commercial products may have better customer service, open-source products are often more easily customized and (of course) more affordable. Many of the most popular automated platforms are either open source or built on open-source software.

Which platform?

Developers create frameworks for testing mobile applications, web-based applications, desktop applications, or some mix of environments. The frameworks may also run on different platforms: some run through a browser, while others run as standalone products.

What language?

Many programming environments favor one language over another, such as Java or C#. Some frameworks will accept scripting in multiple languages. Others have a single, proprietary language that scripters will need to learn.

For testers or developers?

Testers approach automated testing substantially differently from developers. While developers are more likely to program their automated tests, testers need tools that let them create scenarios without writing custom scripts. Some of the best test automation frameworks are designed specifically for one audience or the other, while others have features for both.

Keyword-driven or data-driven?

Testing frameworks may take a data-driven approach or a keyword-driven approach, with the former being better suited to developers and the latter to testers. Which one fits depends on how your current software testing processes run.
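
To illustrate the difference, here is a hypothetical Python sketch: the same discount rule is checked once as a data-driven table of rows and once as a sequence of readable keyword steps. `apply_discount` and the keyword names are invented for this example; keyword-driven tools such as Robot Framework ship far richer keyword libraries.

```python
"""Data-driven vs keyword-driven checks against one function under test.

apply_discount and the keyword names are hypothetical examples.
"""


def apply_discount(price_cents, percent):
    """Function under test: price after a whole-percent discount (discount rounded down)."""
    return price_cents - price_cents * percent // 100


# Data-driven: one test body, many rows of (price, percent, expected).
ROWS = [(1000, 0, 1000), (1000, 10, 900), (999, 50, 500)]


def run_data_driven(rows):
    """Return the rows whose actual result differs from the expected one."""
    return [row for row in rows if apply_discount(row[0], row[1]) != row[2]]


# Keyword-driven: human-readable steps mapped to implementation functions.
def set_price(state, value):
    state["price"] = int(value)


def discount_by(state, value):
    state["price"] = apply_discount(state["price"], int(value))


def price_should_be(state, value):
    assert state["price"] == int(value), f"expected {value}, got {state['price']}"


KEYWORDS = {"Set Price": set_price, "Apply Discount": discount_by,
            "Price Should Be": price_should_be}


def run_keywords(steps):
    """Execute (keyword, argument) steps in order against a shared state."""
    state = {}
    for keyword, argument in steps:
        KEYWORDS[keyword](state, argument)
    return state


if __name__ == "__main__":
    print("failing rows:", run_data_driven(ROWS))
    run_keywords([("Set Price", "1000"), ("Apply Discount", "10"),
                  ("Price Should Be", "900")])
    print("keyword scenario passed")
```

The developer extends coverage by adding rows; the tester extends it by composing existing keywords into new scenarios without touching the implementation functions.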

A test automation framework may have more or fewer features, and more or less robust scripting options.

Open Source DevOps Automation Testing Tools

Citrus

Citrus is an automated testing tool for messaging protocols and data formats. HTTP, REST, JMS, and SOAP can all be tested within Citrus, which covers ground that broader-scope functional testing tools such as Selenium do not. Citrus identifies whether the program is dispatching communications appropriately and whether the results are as expected. It can also be integrated with Selenium when front-end functionality needs to be tested as well. In short, it is a specialized tool designed to automate and repeat tests that validate exchanged messages.

Citrus appeals to those who prefer tried-and-true tools.

When applications need to communicate across platforms or protocols, there isn’t a more robust choice. It integrates well with other staple frameworks (like Selenium) and streamlines tests that compare user interfaces with back-end processes (such as verifying that the send button works when clicked). This enables an increased number of checks in a single test and an increase in test confidence.
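
Message validation of this kind can be sketched in a few lines of Python: a received JSON payload is compared field by field with an expected template, and an ignore placeholder skips values that change on every run, such as generated IDs. The `validate` function and the `@ignore@` token are assumptions for this sketch, loosely modeled on the idea rather than on Citrus's Java API.

```python
"""Validate an exchanged JSON message against an expected template.

A minimal sketch: an ignored field must still be present, and extra
fields in the actual message are tolerated.
"""
import json

IGNORE = "@ignore@"


def validate(expected, actual, path="$"):
    """Return a list of mismatches between expected and actual values."""
    if expected == IGNORE:
        return []
    if isinstance(expected, dict) and isinstance(actual, dict):
        errors = [f"{path}.{k}: missing" for k in expected if k not in actual]
        for key, value in expected.items():
            if key in actual:
                errors += validate(value, actual[key], f"{path}.{key}")
        return errors
    if isinstance(expected, list) and isinstance(actual, list):
        if len(expected) != len(actual):
            return [f"{path}: expected {len(expected)} items, got {len(actual)}"]
        errors = []
        for i, (e, a) in enumerate(zip(expected, actual)):
            errors += validate(e, a, f"{path}[{i}]")
        return errors
    return [] if expected == actual else [f"{path}: expected {expected!r}, got {actual!r}"]


if __name__ == "__main__":
    template = {"status": "CREATED", "orderId": IGNORE, "items": [{"sku": "A1"}]}
    received = json.loads('{"status": "CREATED", "orderId": "8f2c", "items": [{"sku": "A1"}]}')
    print(validate(template, received) or "message OK")
```

Reporting every mismatch with its JSON path, rather than stopping at the first, makes a failed message exchange much easier to diagnose.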

Galen

Unique on this list, Galen is designed for those who want to automate their user experience testing. Galen is a niche tool that can verify that your product will appear as it should on most platforms. Once testing has been completed, Galen can create detailed reports, including screenshots, and help developers and designers analyze the appearance of their application across a multitude of environments. Galen can perform additional automated tasks using JavaScript, Java, or the Galen Syntax.

Karate-DSL

Built on Cucumber-JVM, Karate-DSL is an API testing tool with REST API support. Karate includes many of the features and functionality of Cucumber-JVM, including the ability to automate tests and view reports. This solution is best left to developers, as it requires advanced knowledge to set up and use.

Robot Framework

Robot is a keyword-driven framework available for use with Python, Java, or .NET. It is not just for web-based applications; it can also test products ranging from Android to MongoDB. With numerous APIs available, the Robot Framework can easily be extended and customized depending on your development environment. A keyword-based approach makes the Robot framework more tester-focused than developer-focused, as compared to some of the other products on this list. Robot Framework relies heavily upon the Selenium WebDriver library but has some significant functionality in addition to this.

Robot Framework is particularly useful for developers who require Android and iOS test automation. It’s a secure platform with a low barrier to entry, suited to environments where testers may not have substantial development or programming skills.

Robot also excels at generating easy-to-read, useful, and manageable test reports and logs. Its extensive pre-existing libraries streamline most test design.

This lets test designers with general rather than specialist knowledge be productive, which drives down costs for the entire process, especially when it comes to presenting test results to non-experts.

It functions best when the range of test applications is broad. It can handle website testing, FTP, Android, and many other ecosystems. For diverse testing and absolute freedom in development, it’s one of the best.

Selenium

You may have noticed that many of these solutions are either built on top of or compatible with Selenium. Selenium is undoubtedly the most popular automated testing option for web applications, and it has been extended quite often to add functionality to its core. Selenium is used in everything from Katalon Studio to Robot, but on its own, it is primarily a browser automation product.

Those who believe they will be actively customizing their automated test environments may want to start with Selenium and customize it from there. In contrast, those who wish to begin in a more structured test environment may be better off with one of the systems that are built on top of Selenium. Selenium can be scripted in a multitude of languages, including Java, Python, PHP, C#, and Perl.

Selenium is not as user-friendly as many of the other tools on this list; it is designed for advanced programmers and developers. The other tools that are built on top of it tend to be easier to use.

Selenium can be described as a framework for a framework.

Many of the most modern and specialized frameworks draw design elements from Selenium. They are also often made to work in concert with Selenium.

Its original purpose was testing web applications, but over the years it has grown considerably. Selenium supports C#, Python, Java, PHP, Ruby, and virtually any other language and protocol needed for web applications.

Selenium has one of the largest communities and support networks in automation testing. Even tests that aren’t initially designed on Selenium will often draw upon this framework for at least some elements.

Watir

A light and straightforward automated software testing tool, Watir can be used for cross-browser testing and data-driven testing. Watir can be integrated with Cucumber, Test/Unit, and RSpec, and is free and open source. This is a solid product for companies that want to automate their web testing as well as for a business that works in a Ruby environment.

Gauge

Gauge is produced by the same company that developed Selenium. With Gauge, developers can use C#, Ruby, or Java to create automated tests. Gauge itself is an extensible program with plugin support, but it is still in beta; use it only if you want to adopt cutting-edge technology now. Gauge is a promising product, and once complete it will likely become a standard for both developers and testers, as it has a lot of technology behind it.

Gauge aims to be a universal testing framework built around being lightweight. It uses a plugin architecture that can work with every major language, ecosystem, and IDE in existence today.

It is primarily a data-driven architecture, but the emphasis on simplicity is its real strength. Gauge tests can be written in a business language and still function. This makes it an ideal automated testing tool for projects that span workgroups. It is also a favorite for business experts who might be less advanced in scripting and coding. It is genuinely difficult to find a system that cannot be tested with Gauge.

Commercial Automation Tools

IBM Rational Functional Tester

IBM Rational Functional Tester (RFT) is a commercial, data-driven functional testing tool that works with Java, .NET, AJAX, and more. RFT provides unique functionality in the form of its “Storyboard” feature, whereby user actions can be captured and then visualized through application screenshots. RFT gives an organization information about how users are using its product, as well as how they are potentially breaking it. RFT is integrated with lifecycle management systems, including Rational Quality Manager and Rational Team Concert. Consequently, it is best used in a robust IBM environment.

Katalon Studio

Katalon Studio is a unique tool designed to be used by both automation testers and developers. It accommodates different levels of testing skill, and its testing processes include the ability to automate tests across mobile applications, web services, and web applications. Katalon Studio is built on top of Appium and Selenium and consequently offers much of the functionality of these solutions.

Katalon Studio is an excellent choice for larger development teams that may require multiple levels of testing. It can be integrated with other QA tools such as Jira, Jenkins, qTest, and Git, and its internal analytics system tracks DevOps metrics, graphs, and charts.

Ranorex

Ranorex is a commercial automation tool designed for desktop and mobile testing. It also works well for web-based software testing. Ranorex has the advantages of comparatively low pricing and Selenium integration. Its features include reusable test scripts, recording and playback, and GUI recognition. It’s a capable all-around tool, especially for developers who need to test both web and mobile apps. It bills itself as an “all in one” solution, and a free trial is available for teams that want to evaluate it.

Sahi Pro

Available in both open source and commercial versions, Sahi is centered around web-based application testing. Sahi is used inside of a browser and can record testing processes that are done against web-based applications. The browser creates an easy-to-use environment in which elements of the application can be selected and tested, and tests can be recorded and repeated for automation. Playback functionality further makes it easy to narrow down errors.

Sahi is a well-constructed testing tool for smaller parts of an application. Still, it may not be feasible to use for more wide-scale automated test production, as it relies primarily on recording and playback. Recording and playback is generally an inconsistent method of product testing.

TestComplete

Both keyword-driven and data-driven, TestComplete is a well-designed and highly functional commercial automated testing tool. TestComplete can be used for mobile, desktop, and web software testing, and offers some advanced features such as the ability to recognize objects, detect and update UI objects, and record and playback tasks. TestComplete can be integrated with Jenkins.

TestPlant eggPlant

TestPlant eggPlant is a niche tool that is designed to model the user’s POV and activity rather than simply scripting their actions. Testers can interact with the testing product as the end users would, making it easier for testers who may not have a development or programming background. TestPlant eggPlant can be used to create test cases and scenarios without any programming and can be integrated into lab management and CI solutions.

Tricentis Tosca

A model-based test automation solution, Tricentis Tosca offers analytics, dashboards, and multiple integrations that are intended to support agile test automation. Tricentis Tosca can be used for distributed execution, risk analysis, integrated project management, and can support applications, including mobile, web, and API.

Unified Functional Testing

Though it is expensive, Unified Functional Testing (UFT) is one of the most popular tools for large enterprises. UFT offers everything that developers need for functional test automation, including API, web services, and GUI testing for mobile, web, and desktop applications. A multi-platform test suite, UFT can perform advanced tasks such as producing documentation and providing image-based object recognition. UFT can also be integrated with tools such as Jenkins.

Cypress

Designed for developers, Cypress is an end-to-end solution “for anything that runs inside the browser.” By running inside the browser itself, Cypress can provide more consistent results than other products such as Selenium. As Cypress runs, it can show developers the actions being taken within the browser, giving them more information about the behavior of their applications.

Debuggers can be attached directly to applications to streamline the development process. Overall, Cypress is a reliable tool designed for end-to-end testing throughout development.

Serenity

Serenity BDD (also known as Thucydides) is a Java-based framework that is designed to take advantage of behavior-driven development tools. Compatible with JBehave and Cucumber, Serenity makes it easier to create acceptance and regression tests. Serenity works on top of behavior-driven development tools and the Selenium WebDriver, essentially creating a secure access framework that can be used to create robust and complex products. Functionality in Serenity includes state management, WebDriver management, Jira integration, screenshot access, and parallel testing.

Through this built-in functionality, Serenity can make the testing process much faster. It comes with a selection of detailed reporting options out of the box, and a unique annotation called @Step. @Step is designed to make it easier to both maintain and reuse your tests, therefore streamlining and improving your test processes. Recent additions to Serenity have brought in RESTful API testing, which works through integration with REST Assured. As an all-around testing platform, Serenity is one of the most feature-complete.

RedwoodHQ

RedwoodHQ is an Open Source test automation framework that works with any tool.

It uses a web-based interface that is designed to run tests on an application with multiple testers. Tests can be scripted in C#, Python, or Java/Groovy, and web-based applications can be tested through APIs, Selenium, and their web IDE. Creating test scripts can be completed on a drag-and-drop basis, and keyword-friendly searches make it easier for testers to develop their test cases and actions.

Though it may not be suitable for more in-depth testing, RedwoodHQ is a superb starting place and an excellent choice for those who operate in a primarily tester-driven environment. For developers, this tool may prove too shallow. That being said, it is a complete automation tool suite with many necessary features built in.

Appium

Appium has one purpose: testing mobile apps.

That does not mean it has a limited range of testing options. It works natively with iOS, Android, and other mobile operating systems. It supports simulators and emulators, and it is a darling for test designers who are also app developers. Perhaps the most notable perk of Appium is that it enables testing without requiring any changes to the original app code. That means apps are tested in their ready-to-ship state, producing test results that are as reliable as possible.

Apache JMeter

JMeter is made for load testing. It works with static and dynamic resources, and these tests are critical to all web applications.

It can simulate loads on servers, server groups, objects, and networks to ensure integrity at every level of the network. Like Citrus, it works across communication protocols and platforms for a universal look at communication. Unlike Citrus, its emphasis is not on basic functionality but on assessing high-stress activity.

A popular function among testers is JMeter’s ability to perform offline tests and replay test results. It enables far more scrutiny without keeping servers and networks busy during heavy traffic hours.
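JMeter can write sample results to a CSV file, which is what makes this kind of after-the-fact scrutiny possible. As a minimal sketch of offline analysis, assuming a results file with `label`, `elapsed` (milliseconds), and `success` columns, the hypothetical `summarize_results` helper below groups samples by label and reports the average, the 90th percentile (nearest-rank method), and the error rate. It is an illustration, not part of JMeter itself.

```python
import csv
import io
import math
from collections import defaultdict


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def summarize_results(csv_text):
    """Summarize saved samples per label (assumed columns: label, elapsed, success)."""
    samples = defaultdict(list)   # label -> elapsed times in ms
    failures = defaultdict(int)   # label -> number of failed samples
    for row in csv.DictReader(io.StringIO(csv_text)):
        label = row["label"]
        samples[label].append(int(row["elapsed"]))
        if row["success"].strip().lower() != "true":
            failures[label] += 1

    summary = {}
    for label, elapsed in samples.items():
        elapsed.sort()
        summary[label] = {
            "count": len(elapsed),
            "avg_ms": sum(elapsed) / len(elapsed),
            "p90_ms": percentile(elapsed, 90),
            "error_rate": failures[label] / len(elapsed),
        }
    return summary


# Hypothetical results captured during an earlier test run.
SAMPLE_RESULTS = """timeStamp,elapsed,label,success
1700000000000,100,home,true
1700000000100,200,home,true
1700000000200,300,home,true
1700000000300,400,home,true
1700000000400,1000,home,false
1700000000500,50,login,true
1700000000600,70,login,true
"""

for label, stats in summarize_results(SAMPLE_RESULTS).items():
    print(label, stats)
```

Because the analysis runs against a saved file, it can be repeated as often as needed without sending any more traffic to the servers under test.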

Find the Right Automated Testing Software

Ultimately, choosing the right test solution is going to mean paring down to the test results, test cases, and test scripts that you need. Automated tools make it easier to complete specific tasks. It is up to your organization to first model the data it has and identify the results that it needs before it can determine which automated testing tool will yield the best results.

Many companies may need to use multiple automated products: some for user experience, others for data validation, and others as all-purpose repetitive testing tools. There are free trials available for many of the products listed above. Test each solution and see how it fits into your existing workflow and development pipeline.

Choose the Best Cloud Service Provider: 12 Things to Know!

Choosing a cloud service provider is without question more involved than choosing the first result from a Google search. Each business has different requirements, customizations, and financial responsibilities.

It is crucial for the right service to meet and exceed business expectations.

That said, what does a business need to look for when searching for a cloud service?

The use of cloud and cloud services differs from one client to the next. The right vendor will always vary, though there are common categories that can help narrow down what your business requires.

Below is a handy guide to help you navigate the plethora of options available to you and your business within the cloud server hosting industry.

Is the Cloud Server User Interface Actually Usable?

The user interface does not often receive the attention it should. An efficient and user-friendly interface goes a long way to allow more people to work on server-based tasks.

In the past, these seemingly trivial actions could require a full IT department to manage.

AWS Direct Connect, for example, uses a somewhat cumbersome user interface. This could make it difficult for a business to perform menial tasks without a dedicated IT team. The point of a simple and effective UI is that you, as a company, need to access your data at all times.

A business needs to be able to access its internal and client data from anywhere; that is the beauty of the cloud. Having a simple UI allows for access from virtually anywhere, at all times, from varying devices just by logging in through the service provider’s client portal. Since it is web-based, using a smartphone, a laptop, or a tablet should not pose a problem.

How Does a Service Level Agreement (SLA) Work?

Cloud service agreements can often appear complicated and aren’t helped by a lack of industry standards for how they are constructed or defined. For SLAs in particular, many providers turn what could be a simple, straightforward agreement into unnecessarily complicated or, worse, deliberately misleading language.

Having the technical proficiency and knowledge of terms can help decipher much of the complicated information, though it is often more reasonable to partner with a provider that offers transparency.

Most SLAs are split into two parts. The first is a conventional set of terms and conditions. This is a standard document provided to every customer of the service provider. These types of forms are usually available online and agreed upon digitally.

The next part of the agreement is a customized contract between the client and vendor. Willingness to offer specific customization depends on each provider and should be part of the decision-making process of choosing the ideal solution.

The majority of these customizable SLAs are for large enterprise contracts. There are times when a smaller business may attempt to negotiate exclusive agreements and built-in provisions within its contract.

Regularly challenge service providers that appear prepared to offer flexible terms. Ask them to provide details on how they plan to support any requested customization, who is responsible for the modification, and what steps are in place to administer the variation. Always remember the main components to cover in an SLA: service level objectives, remediation policies and penalties/incentives related to these objectives, and any exclusions or caveats.

Documentation, Provisioning and Account Set-Up

Service level agreement best practices and other contracts are often broken down into four different points of interest (with additional sub-sections as needed for customization). These four points of interest are legal protections, service delivery, business terms, and data assurance.

The legal protections portion of the SLA should cover limitation of liability, warranties, indemnification, and intellectual property rights. Customers are often wary of offering up too much information, to avoid any potential exposure if there were ever a breach, while the vendor wants to limit its liability if there were ever a claim.

Service delivery often varies depending on the size of the cloud computing service provider. The rule of thumb is to always look for a precise definition of all services and deliverables. Make sure you are crystal clear on all of the service company’s responsibilities relating to their offered services (service management, provisioning, delivery, monitoring, support, escalations, etc.).

The business terms will include points around publicity, insurance policies, specific business policies, operational reviews, fees, and commercial terms.

Within the business terms, specifics with regards to the contract need to include how, or to what extent, the service provider can unilaterally change the terms of the service or contract.

To prevent abrupt increases in billing, it is crucial that the SLA be adhered to without changes for the duration of the agreed-upon term.

The last point of emphasis is data policies and protection. The data assurance portion of an SLA will include detailed information covering data management, data conversion, data security, and ownership and use rights. It is essential to think long-term with any cloud storage provider and review data conversion policies to understand how transferable data may be if you decide to leave.

Reliability and Performance Metrics To Look For

There are numerous techniques for measuring the reliability of cloud server solutions.

First, validate the cloud infrastructure provider’s performance against its SLAs over the last 6-12 months. Some service providers publish this information publicly; others should supply it if asked.

Here’s the thing though: don’t expect complete perfection. Server downtime is to be expected, and no solution will have a perfect record.

For more information on acceptable levels of downtime, research the differences between Data Center Tiers 1, 2, 3 & 4. What is most valuable in a provider’s reliability reports is how the company responded to downtime. Also, verify that all of the monitoring and reporting tools work with your existing management and reporting systems.
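Because no provider has a perfect record, it helps to translate an availability percentage into the downtime it actually permits. The sketch below does that arithmetic for a 365-day year; the percentages are illustrative inputs, not figures from any particular SLA or tier definition.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes (ignores leap years)


def allowed_downtime_minutes(availability_pct, period_minutes=MINUTES_PER_YEAR):
    """Maximum downtime, in minutes, permitted by an availability percentage over a period."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability must be between 0 and 100 percent")
    return period_minutes * (100 - availability_pct) / 100


for pct in (99.0, 99.9, 99.99):
    minutes = allowed_downtime_minutes(pct)
    print(f"{pct}% availability allows {minutes:.1f} minutes "
          f"({minutes / 60:.2f} hours) of downtime per year")
```

At 99.9% availability, for example, that works out to roughly 525.6 minutes, or about 8.8 hours, of downtime per year, which is a useful yardstick when reading a provider’s published track record.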

Accurate and detailed reporting on the reliability of the network provides a valuable window into what to expect when working with the service providers.

Confirm with the provider that they have an established, documented, and proven process for handling any planned and unplanned downtime. They should have documentation and procedures in place on their communication practices with customers during an outage. It is best that these processes include timelines, threat level assessments, and overall prioritization.

Make sure all remedies and liability limitations offered when issues arise are covered in the documentation.

Is Disaster Recovery Important?

Beyond network reliability, a client needs to consider the cloud disaster recovery services protocols with individual vendors.

These days, data centers work to build their facilities in locations that are as disaster-free as possible, mitigating the risk of natural catastrophes wherever they can. However, problems can still arise, and expectations have to be set in case something goes wrong. These expectations can include backup and redundancy protocols, restoration, data source scheduling, and integrity checks (to name a few).

The disaster recovery protocol also needs to include what roles both client and service provider are responsible for. All roles and responsibilities for any escalation process must be documented as your company may be the ones to implement some of these processes.

Additional risk insurance is always a smart idea to help cover the potential costs associated with data recovery (when aspects of recovery fall under the jurisdiction of the client).

What Should I Know About Data Security?

Protecting data preserves a business and its clients from data theft. Securing data in the cloud affects both the direct client and those the client conducts business with.

Validate the cloud vendor’s different levels of systems and data security measures. Also, look into the capabilities of the security operations and security governance processes. The provider’s security controls should support your policies and procedures for security.

It is always a smart option to ask for the provider’s internal security audit reports, as well as incident reports and evidence of remedial actions for any issues that may have occurred.

Network Infrastructure and Data Center Location

The location of a data center for a service provider is crucial as it will dramatically affect many factors.

As mentioned previously, having a location where natural disasters are rare is always desirable. These areas are often remote enough that the cost of services can be lower than in a major urban area.

Location of the data center also affects network latency. The closer a business is to the data center, the lower the latency and the faster data reaches it. Therefore, a company based in Los Angeles will receive its data faster from a Phoenix-based data center than from one located in Amsterdam.
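The distance effect can be estimated with simple arithmetic. Assuming light travels through optical fiber at roughly 200 km per millisecond (about two-thirds of its speed in a vacuum), distance alone sets a floor on round-trip time before routing, queuing, and server processing add more. The distances below are approximate straight-line figures and are assumptions for illustration; real cable routes are longer.

```python
FIBER_KM_PER_MS = 200.0  # assumption: light in optical fiber covers ~200 km per millisecond


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time from propagation delay alone (there and back)."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Approximate straight-line distances in km (real cable routes are longer).
routes = {
    "Los Angeles -> Phoenix": 575,
    "Los Angeles -> Amsterdam": 8950,
}

for route, km in routes.items():
    print(f"{route}: at least {min_round_trip_ms(km):.1f} ms round trip")
```

Even this best case puts Amsterdam roughly fifteen times further away in time than Phoenix for a Los Angeles user, and every extra round trip a page needs multiplies the gap.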

For businesses that require more of a global presence, utilizing data centers around the world for distribution and redundancy is always an option. When looking for your ideal provider, it is worth inquiring how many locations globally they offer.

What If I Need Tech Support?

Tech support can be the bane of your existence and a source of insurmountable frustration if the provider’s support does not work well with your team. Making sure that the provider you are considering has reliable, actionable, and efficient support is essential.

If an issue arises, the longer a problem festers, the higher the risk of security threats or worse: a damaged reputation. Clients may become frustrated with a business if they are unable to access their accounts or contact the company. This could wreak havoc on many levels if issues are not resolved quickly.

Some data centers and service providers offer tailored resources for technical problems, going as far as 24/7 on-call service.

Tech support is a vital part of the selection process for a CSP. You want to feel at ease with your data and services, and a reliable support system is critical.

Business Health of Service Provider

Technical, logistical, and security considerations aside, it is essential to take a look at the operational capabilities of cloud service providers. It is crucial to research your final CSP options’ financial health, reputation, and overall profile.

It is necessary to perform due diligence to validate that the service provider is in a healthy financial position and responsible enough to maintain business through the agreed-upon service term. If the provider were to run into financial troubles during your term, it could cause unrecoverable damage to your company.

Find out whether the provider has any past or current legal problems by researching and requesting information from the company. Asking about potential changes within the corporate structure (such as acquisitions or mergers) is another source of helpful information. Remember, this does not have to be a doom-and-gloom conversation. An acquisition could benefit the services and support you are offered down the line.

The background of major decision-makers within the cloud computing providers can be a useful roadmap for identifying trends and future potential issues.

Certifications and Standards

When searching for a cloud service provider, it’s always wise to validate the current best practices and technological understanding that they represent.

One way to do this is to see what certifications a provider has earned and how often they renew them. This shows not only how up to date and detail-oriented a provider is, but also how in tune with industry standards it is. While these criteria may not determine which service provider you choose, they can be beneficial in shortlisting potential suppliers.

There are many different standards and certifications that a service provider can acquire. It depends entirely on the organization, the levels of security, the other clientele that a vendor works with, and numerous other conditions. Some standards bodies to become familiar with in your search are DMTF, ETSI, ISO, Open Grid Forum, GICTF, SNIA, Open Cloud Consortium, Cloud Standards Customer Council, NIST, OASIS, IEEE, and IETF.

More than just a lengthy repertoire of certifications, you want to keep an eye out for structured processes, strong knowledge management, effective data control, and service status visibility. It is also worth researching how the service intends on staying current with new certifications and technology expansion.

Cloud security standards are a separate category, and their certifications are awarded by different organizations. The primary security criteria include CSA, SSAE, PCI, IEC, ICO, COBIT, HIPAA, Cyber Essentials, ISAE 3402, and GDPR.

Operational standards are a third category of certification to look for. These certifications include ISO, ITIL, IFPUG, CIF, DMTF, COBIT, TOGAF 9, MOF, TM Forum, and FitSM.

Cloud and secure data storage solutions should be proud of their earned certifications and display them on their website. If certification badges are not present, inquiring about current certifications is easy enough.

Service Dependencies and Partnerships

Service providers often rely on different vendors for hardware and services. It is necessary to consider the various vendors and how each impacts a company’s cloud and data server experience.

Validating the provider’s relationships with vendors is essential. Keeping an eye on the vendors’ accreditation levels and technical capabilities is also a useful practice.

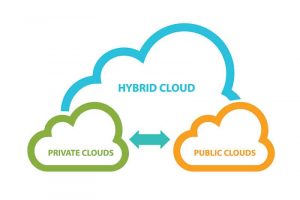

Think about whether the services of these vendors fit into a broader ecosystem of other services that might complement or support them. Some of the vendors may connect more easily with IaaS, SaaS, or PaaS cloud services. There could be some overlap or pre-configured integrations between services that your business could benefit from.

Knowing the partnerships a provider has, and whether it uses one or several of the three cloud service models, is helpful. It illustrates whether the service partner is the best fit for the ultimate goals of the business.

IT Cloud Services Migration Support and Exit Planning

When searching for your ideal partner, take care to look at the long-term strategy.

Consider what happens if you ever decide to move your services or outgrow a provider’s service capabilities. The last thing you want to run into is a scenario called vendor lock-in. This is a situation in which a customer using a product or service cannot easily transition to a competitor. It often arises when a provider uses proprietary technologies that turn out to be incompatible with other providers.

There are specific terms to keep an eye out for when comparing providers and data centers. Some examples of CSP offerings that can lead to vendor lock-in include:

- CSP compatible application architecture

- Proprietary secure cloud management tools

- Customized geographic diversity

- Proprietary cloud APIs

- Personalized cloud Web services (e.g., Database)

- Premium configurations

- Custom configurations

- Data controls and applications access

- Secure data formats (not standardized)

- Service density with one provider

Choosing a provider with standard services rather than custom-built systems will reduce long-term pain points and help you avoid vendor lock-in.

Always remember to have a clear cloud migration strategy planned out even before the beginning of your relationship. Moving to a new provider is not always smooth or seamless, so it is worth finding out about their processes before signing a contract.

Furthermore, consider how you will access your data, what state it will be in, and for how long the provider will keep it after you have moved on.

Takeaways On Cloud-Based Computing Vendors

Deciding between business cloud server providers seems like a daunting task.

With the right strategy and talking points, a business can find the right solution for a service provider in no time.

Remember to think long-term to avoid any potential for vendor lock-in. Avoid the use of proprietary technologies and build a defined exit strategy to prevent any possible headaches down the line.

Spend the time necessary to build workable and executable SLAs with contractual terms. A detailed SLA is the primary form of assurance you have that the services will be delivered and supported as agreed.

With the right research and remaining vigilant for what your business requires, finding the perfect solution is possible for everyone.

Speed Up WordPress: 25 Performance and Optimization Tips

Nearly 30% of the entire internet runs on WordPress.

WordPress performance issues are well known.

The fact is, you have seconds, if not fractions of a second, to convince users to stay on a web page.

When a page’s load time increases from 1 to 3 seconds, the probability of the user leaving the site (bouncing) rises by 32%. If the load time stretches from 1 to 5 seconds, that probability rises by 90%.

In addition to the effect on user experience and visitor retention, site speed impacts site placement in search engines.

Google has made it clear that it gives preferential treatment to sites that load faster. All other factors being equal, if your site is faster than your competitor’s, Google will favor you in the rankings.

The primary culprits behind a slow WordPress site include:

- Executing numerous scripts

- Downloading graphics and other embedded elements

- Repeated HTTP requests to the server

- Pulling information from the WordPress database

Here are 25 tips that answer the question of how to speed up WordPress and stop losing potential customers.

1. Choose a High-Quality WordPress Hosting Provider

If your website is on shared web hosting, with potentially hundreds of other sites competing for the same resources, you may notice frustratingly slow load times.

For smaller sites, shared web hosting can be completely acceptable, provided it is hosted by a reputable provider that includes sufficient memory.

Once you start hitting roughly 30k monthly visitors and above, consider moving to a dedicated server or at the very least, a virtual private server (VPS). Both of these prevent a “bad neighbor” from hogging all of the shared resources. Experts also recommend looking for a server that is physically located close to your target audience. The less distance your data has to travel, the quicker it will arrive.

Many hosting companies offer WordPress-specific hosting packages. These often provide less storage but run on faster, more dedicated hardware. Even better, a managed server hosting solution is often cheaper and more inclusive than other available options.

2. Apply WordPress Updates Promptly

To any security professional, applying updates quickly seems like a no-brainer. However, in practice, only about 40 percent of WordPress sites are running the latest version. Software updates often include speed tweaks in addition to security improvements, so be sure to update as soon as possible.

Updates also apply to WordPress security plugins. Some would say these updates are even more critical, as plugins are often the driving force behind speed bottlenecks and security vulnerabilities. It is a good idea to check for available updates daily.

3. Avoid Bloated Themes

Choose a WordPress theme with speed in mind. That does not mean you have to opt for a bare-bones site, but don’t go for an “everything but the kitchen sink” theme either. Many commercial WordPress themes come packed with features that don’t get used. Those features, stored on the server in an idle state, can create a drag on performance.

The default Twenty Fifteen theme, for example, offers plenty of functionality, including a mobile-first design, while remaining streamlined and trim. Look for a WordPress theme that provides what you need and only what you need.

4. Use a Caching Plugin

Caching your site can dramatically speed up your website and is among the most critical fixes on this list.

With a caching plugin, copies of previously generated pages are stored (in memory or on disk) where they can be quickly retrieved the next time they are needed. Serving a cached page is much faster than querying the WordPress database multiple times and rebuilding the page from source. It is also more resource-friendly.

Caching plugins are smart enough to refresh the cached copy if the content of the page is updated. Recommended caching plugins to speed up WordPress include WP Super Cache (free), W3 Total Cache (free), and WP Rocket (paid, but the fastest in tests).
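To illustrate the idea, here is a minimal in-memory page cache sketch: rendered HTML is stored by URL, served directly on later requests, and discarded when the content is updated. The `PageCache` class, its one-hour time-to-live, and the `render` function are hypothetical; real caching plugins differ in detail (WP Super Cache, for example, can serve pre-generated static HTML files).

```python
import time


class PageCache:
    """Minimal full-page cache: rendered HTML keyed by URL, with expiry and manual invalidation."""

    def __init__(self, render_page, ttl_seconds=3600):
        self._render_page = render_page  # expensive function, e.g. one that queries the database
        self._ttl = ttl_seconds
        self._entries = {}               # url -> (html, time stored)

    def get(self, url):
        entry = self._entries.get(url)
        if entry is not None:
            html, stored_at = entry
            if time.monotonic() - stored_at < self._ttl:
                return html              # cache hit: skip rendering entirely
        html = self._render_page(url)    # miss or expired: render once and store the copy
        self._entries[url] = (html, time.monotonic())
        return html

    def invalidate(self, url):
        """Call when a page's content changes so the next request renders a fresh copy."""
        self._entries.pop(url, None)


render_calls = []


def render(url):
    """Hypothetical stand-in for WordPress building a page from the database."""
    render_calls.append(url)
    return f"<html>{url} (render #{len(render_calls)})</html>"


cache = PageCache(render)
cache.get("/about")         # first request: rendered
cache.get("/about")         # second request: served from the cache
cache.invalidate("/about")  # e.g. the page was edited
print(cache.get("/about"), len(render_calls))
```

The `invalidate` call is the piece that mirrors the refresh-on-update behavior described above: without it, visitors would keep seeing the stale copy until the time-to-live ran out.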

5. Optimize Images

Ensure that images are in an appropriate format (PNG or GIF for graphics, JPEG for photos) and no larger than they need to be. This is one of the easiest ways to speed up WordPress.

Compress images to make them smaller so they download more quickly for the end user. You can do this manually before you upload images or automate the process with a plugin such as WP Smush. With WP Smush, any images you upload to your WordPress site will automatically be compressed.

Be aware of higher-resolution screens when optimizing your images. Use @2x (and similar) image variants if you intend to serve the highest-resolution images only to the devices that can display them.
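In HTML, @2x variants are typically offered through the `srcset` attribute with density descriptors, which lets the browser pick the file that suits the screen. The hypothetical helper below builds such a tag, assuming the high-resolution files already exist alongside the originals and follow a `name@2x.ext` naming pattern.

```python
from html import escape
from pathlib import PurePosixPath


def retina_img_tag(src, alt, densities=(2,)):
    """Build an <img> tag whose srcset lists @2x-style variants for high-density screens.

    Assumes each variant sits next to the original, e.g. photo@2x.jpg for photo.jpg.
    """
    path = PurePosixPath(src)
    candidates = [f"{src} 1x"]  # the original file serves standard-density screens
    for d in densities:
        variant = path.with_name(f"{path.stem}@{d}x{path.suffix}")
        candidates.append(f"{variant} {d}x")
    srcset = ", ".join(candidates)
    return f'<img src="{escape(src)}" srcset="{escape(srcset)}" alt="{escape(alt)}">'


print(retina_img_tag("/images/photo.jpg", "Team photo"))
```

Browsers generally download only the candidate that matches the display density, so visitors on standard screens are not charged for the larger file.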

6. Consider a CDN

A content delivery network, or CDN, is a geographically distributed group of servers that work together to deliver content quickly. Copies of your static website content are cached in the CDN. Static content includes things like images, stylesheets, and JavaScript files.

When a user’s browser calls for a particular piece of content, it is loaded from the closest node of the CDN. For example, if the user is in the UK and your website is hosted in the U.S., the data does not need to cross the Atlantic to reach the user. Instead, it is served by a nearby CDN node.
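The “closest node” idea can be sketched as picking the edge location with the shortest great-circle (haversine) distance to the user. This is a simplification under stated assumptions: real CDNs commonly route requests through DNS or anycast and also weigh network conditions and load, and the node names and coordinates below are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_node(user_lat, user_lon, nodes):
    """Return the name of the node geographically closest to the user."""
    return min(nodes, key=lambda name: haversine_km(user_lat, user_lon, *nodes[name]))


# Hypothetical edge locations (approximate city coordinates).
NODES = {
    "edge-new-york": (40.71, -74.01),
    "edge-london": (51.51, -0.13),
    "edge-frankfurt": (50.11, 8.68),
}

print(nearest_node(53.48, -2.24, NODES))  # a visitor in Manchester, UK
```

Here the Manchester visitor is matched to the London node, so the request never has to reach the U.S. origin.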

For high-traffic sites, a CDN offers additional benefits. Without a CDN, all of your pages are served from a single location, placing the full load on a single server. With a CDN, server load is distributed across multiple sites other than your own. A CDN can also help protect from security threats such as distributed denial of service (DDoS) attacks.

A CDN is not a WordPress hosting service. It is a separate service that can be used to improve performance. Cloudflare is a popular choice for small websites because it offers a free version. StackPath (formerly MaxCDN) isn’t free (it starts at $9/month) but features a beginner-friendly control panel and integrates with most popular WordPress caching plugins.

7. Configure Lazy Loading

Why spend valuable bandwidth (and time) loading images that your visitor cannot see?

Lazy loading loads only the images that are “above the fold” (visible before a user needs to scroll) immediately and delays the rest until the user scrolls down to them.

Lazy loading is especially valuable if a page contains multiple images or videos that can slow down your site. As with many WordPress speed boosters, there are plugins for this.

The most popular include BJ Lazy Load and Lazy Load by WP Rocket.
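Beyond plugins, modern browsers support a native `loading="lazy"` attribute on `<img>` tags. As a rough sketch of the rule described above, the hypothetical function below adds that attribute to every image except the first few, which are assumed to sit above the fold. It uses a simple regular expression rather than a full HTML parser, so it is only suitable for simple, well-formed markup.

```python
import re

# Matches a whole <img ...> start tag (case-insensitive).
IMG_TAG = re.compile(r"<img\b[^>]*>", re.IGNORECASE)
HAS_LOADING = re.compile(r"\sloading\s*=", re.IGNORECASE)


def add_lazy_loading(html, eager_count=1):
    """Add loading="lazy" to every <img> after the first `eager_count` images.

    The first images are assumed to be above the fold, so they keep loading immediately.
    Tags that already declare a loading attribute are left untouched.
    """
    seen = 0

    def rewrite(match):
        nonlocal seen
        tag = match.group(0)
        seen += 1
        if seen <= eager_count or HAS_LOADING.search(tag):
            return tag
        # Insert the attribute directly after "<img", keeping the rest of the tag as-is.
        return tag[:4] + ' loading="lazy"' + tag[4:]

    return IMG_TAG.sub(rewrite, html)


page = '<img src="hero.jpg"><p>Intro</p><img src="gallery-1.jpg"><img src="gallery-2.jpg">'
print(add_lazy_loading(page))
```

Keeping the first image eager matters: lazy-loading the hero image would delay the very content the visitor sees first.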

8. Optimize Your WordPress Database

Your WordPress database stores all of your website content. That includes blog posts, revisions, pages, comments, and custom posts such as form content.

It is also where themes and plugins track their data and settings. As your site grows, so does the database, and so does the amount of overhead required by each table in the database. As the size and overhead increase, the database becomes less efficient. Optimizing it from time to time can alleviate this problem. Think of it like defragmenting a hard drive.

You can optimize your database through the database tool in your hosting control panel. For many hosts, this is phpMyAdmin.

If using phpMyAdmin, click the box to select all tables in the database. Then at the bottom of the screen choose “optimize table” from the drop-down menu.
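Behind the scenes, phpMyAdmin's “optimize table” action essentially runs MySQL's `OPTIMIZE TABLE` statement, which accepts a comma-separated list of tables. If you prefer to run it yourself (for example from phpMyAdmin's SQL tab), the sketch below builds that statement from a list of table names. It assumes the default `wp_` table prefix; adjust it if your installation uses a different one, and back up the database first.

```python
def build_optimize_statement(table_names, prefix="wp_"):
    """Build one MySQL OPTIMIZE TABLE statement covering the WordPress tables.

    Assumes the default "wp_" prefix; tables without the prefix are skipped.
    """
    tables = sorted(name for name in table_names if name.startswith(prefix))
    if not tables:
        raise ValueError(f"no tables match the prefix {prefix!r}")
    quoted = ", ".join(f"`{name}`" for name in tables)  # backticks guard odd names
    return f"OPTIMIZE TABLE {quoted};"

print(build_optimize_statement(["wp_posts", "wp_options", "other_table"]))
# OPTIMIZE TABLE `wp_options`, `wp_posts`;
```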

Alternatively, you can install a plugin such as WP-Optimize or WP-DB Manager. These plugins have the advantage of removing unneeded items as well as optimizing the remaining data.

Why waste time and space storing things like trashed or unapproved comments and stale data?

9. Give Your Plugins a Checkup

Every plugin you add to your site adds extra code. Many do more than that, loading resources from other domains. A lousy plugin might load 12 external files, while an optimized one settles for one or two.

When selecting plugins, only choose those offered by established developers and that are recommended by trusted sources. If you are searching for plugins within the WordPress repository, a quick check is to see how many other people are also using the same plugin.

If you find a plugin is slowing your site, then search for another one that does the same job more efficiently.

Tools like Pingdom (look in file requests section) and GTmetrix (look at the Waterfall tab) can help you find the worst offenders.
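If you want a quick, scriptable version of the same check, the Python sketch below parses a page's HTML and counts the scripts, stylesheets, and images it pulls from each external domain. It uses only the standard library. The host names in the example are placeholders, and it only sees resources referenced directly in the HTML, not files that a script loads later.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

class ResourceCollector(HTMLParser):
    """Collect the URLs of scripts, stylesheets, and images referenced in HTML."""

    def __init__(self):
        super().__init__()
        self.urls = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("script", "img") and attrs.get("src"):
            self.urls.append(attrs["src"])
        elif tag == "link" and (attrs.get("rel") or "").lower() == "stylesheet" and attrs.get("href"):
            self.urls.append(attrs["href"])

def external_requests(html, own_host):
    """Count referenced resources per external host (relative URLs count as yours)."""
    collector = ResourceCollector()
    collector.feed(html)
    counts = {}
    for url in collector.urls:
        host = urlparse(url).netloc
        if host and host != own_host:
            counts[host] = counts.get(host, 0) + 1
    return counts

sample = """
<link rel="stylesheet" href="https://fonts.example.net/font.css">
<script src="/wp-includes/js/jquery/jquery.js"></script>
<script src="https://widgets.example.org/share.js"></script>
<img src="https://widgets.example.org/pixel.gif">
"""
print(external_requests(sample, "mysite.example.com"))
# {'fonts.example.net': 1, 'widgets.example.org': 2}
```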

10. Remove Unnecessary Plugins

Every plugin consumes resources and reduces site speed. Don’t leave old, unused plugins lying around on your site. Simply delete them to improve WordPress performance.

11. Set an Expires Header for Static Resources

An expires header tells browsers not to bother re-fetching content that is unlikely to have changed since the last time it was loaded. Instead of obtaining a fresh copy of the resource from your web server, the user’s browser uses the local copy stored on their computer, which is much quicker to retrieve.

You can do this by adding a few lines of code to your root .htaccess file as described in this handy article by GTmetrix.

It is worth noting that if you are still developing your site and potentially changing your CSS, you should not set a far-off expiration date for your CSS files. Otherwise, returning visitors may not see your latest CSS tweaks.
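To show what such rules ultimately produce, here is a Python sketch that computes the `Expires` and `Cache-Control` headers a server might send for a static file. The per-extension lifetimes are hypothetical examples, with a shorter lifetime for CSS in line with the advice above. In HTTP/1.1, `Cache-Control: max-age` takes precedence over `Expires` when both are present, so servers commonly send both for compatibility.

```python
import os
import time
from email.utils import formatdate

DAY = 24 * 3600
# Hypothetical lifetimes per file type; CSS is kept short while the design is changing.
CACHE_LIFETIMES = {".jpg": 365 * DAY, ".png": 365 * DAY, ".js": 30 * DAY, ".css": 7 * DAY}

def caching_headers(path, now=None):
    """Return Expires/Cache-Control headers for a static file, or {} for other files."""
    lifetime = CACHE_LIFETIMES.get(os.path.splitext(path)[1].lower())
    if lifetime is None:
        return {}  # e.g. dynamic pages: let them be fetched fresh
    now = time.time() if now is None else now
    return {
        "Expires": formatdate(now + lifetime, usegmt=True),  # HTTP-date in GMT
        "Cache-Control": f"public, max-age={lifetime}",
    }

print(caching_headers("/wp-content/themes/mytheme/style.css", now=0))
# {'Expires': 'Thu, 08 Jan 1970 00:00:00 GMT', 'Cache-Control': 'public, max-age=604800'}
```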

12. Disable Hotlinking

Hotlinking (a.k.a. leeching) is a form of bandwidth theft. It works like this: another webmaster directly links to content on your site (usually an image or video) from within their content. The image will be displayed on the thief’s website but loaded from yours.

Hotlinking can increase your server load and decrease your site’s performance, not to mention that it is abysmal web etiquette. You can protect your site from hotlinking by blocking outside links to certain types of content, such as images, on your site.

If you have cPanel or WHM provided by your hosting service, you can always use the built-in hotlink protection tools they contain. Otherwise, if your site uses Apache web server (Linux hosting), all you need to do is add a few lines of code to your root .htaccess file.

You can start with basic rules that allow internal links and links from search engines such as Google. You could also add more complex rules, for example to allow links from sources such as a feed service or to show a default image in place of a hotlinked image.
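Hotlink protection rules typically work by inspecting the `Referer` request header. The Python function below is a hypothetical model of that logic, not the actual rules that cPanel or Apache generate: media files are served only when the referer is empty, comes from your own domain (including subdomains), or comes from an explicitly allowed domain such as a search engine. The domain names are placeholders.

```python
from urllib.parse import urlparse

PROTECTED_EXTENSIONS = (".jpg", ".jpeg", ".png", ".gif", ".webp", ".mp4")

def _matches(host, domain):
    """True if host is the domain itself or one of its subdomains."""
    return host == domain or host.endswith("." + domain)

def allow_request(path, referer, own_domain, allowed_domains=()):
    """Decide whether to serve `path`, given the request's Referer header."""
    if not path.lower().endswith(PROTECTED_EXTENSIONS):
        return True  # only media files are protected
    if not referer:
        return True  # direct requests and some privacy tools send no Referer
    host = (urlparse(referer).hostname or "").lower()
    if _matches(host, own_domain):
        return True  # internal links from your own pages
    return any(_matches(host, d) for d in allowed_domains)

print(allow_request("/wp-content/uploads/photo.jpg", "https://thief.example/post", "mysite.example"))  # False
```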

13. Turn on GZIP

Decreasing the size of your pages is crucial to fast delivery.

GZIP is an algorithm that compresses files on the sending end and restores them on the receiving end. Enabling GZIP compression on your server can dramatically reduce page load times by shrinking page sizes by up to 70%.

When a browser requests a page, it sends an “Accept-Encoding” request header listing the compression formats it supports, such as gzip. If GZIP is enabled on the server, it compresses content such as HTML, stylesheets, and JavaScript and labels the response with a “Content-Encoding: gzip” header so the browser knows to decompress it. These days GZIP is enabled by default on many servers, but it is best to be sure. This free GZIP compression checker will tell you.
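The exchange described above can be sketched with Python's standard `gzip` module. This is a simplified, hypothetical server-side handler: it compresses the body only when the request's `Accept-Encoding` header lists gzip, and for brevity it ignores quality values such as `gzip;q=0`.

```python
import gzip

def negotiate(body: bytes, accept_encoding: str):
    """Return (response_headers, payload), gzip-compressing only if the client allows it."""
    # "gzip, deflate, br" -> ["gzip", "deflate", "br"]; quality values are ignored here.
    offered = [token.split(";")[0].strip().lower() for token in accept_encoding.split(",")]
    if "gzip" in offered:
        return {"Content-Encoding": "gzip"}, gzip.compress(body)
    return {}, body

page = b"<p>Hello from WordPress!</p>\n" * 200
headers, payload = negotiate(page, "gzip, deflate, br")
print(headers, f"{len(page)} -> {len(payload)} bytes")
```

The exact savings depend on the content: repetitive markup like this compresses far better than already-compressed files such as JPEG images.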

If you need to turn GZIP on, the easiest way is to use a plugin such as WP Rocket. W3 Total Cache also lets you turn it on under its performance options. If you are on Linux hosting and cannot use a plugin (because of permission problems, for example) or would rather not, you can do it yourself by modifying your site’s root .htaccess file. This article explains how to turn on gzip using .htaccess.

14. Optimize Your Home Page for WordPress Site Speed

Your home page will create the first impression of your brand and your business. It is crucial to optimize for mobile responsiveness, a pleasing UI/UX, and most of all for speed.

Steps to take include:

- Remove unnecessary widgets. You do not need to display every widget everywhere.

- Don’t include sharing widgets and plugins on the homepage. Reserve them for posts.

- Restrict the number of posts on the homepage. Fewer posts mean a smaller, faster-loading page.

- Use excerpts instead of full posts. Again, smaller means faster.

- Go easy on the graphics. Images and videos take longer to load than text.

15. Host Videos Elsewhere

Rather than uploading videos as media and serving them on your site, take advantage of video services to offload the bandwidth and processing.

Make use of the second most trafficked search engine on the web and upload your videos to YouTube, or a similar service like Vimeo. Then you can copy a small bit of code and paste it into a post on your site where you want the video to appear. This is known as embedding a video. When a user views the page, the video will stream from the third-party server rather than your own. Unlike hotlinking, this is the recommended practice for media-rich content such as video.
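The embed code YouTube gives you is essentially an `<iframe>` pointing at `https://www.youtube.com/embed/` followed by the video's ID. The helper below builds a similar snippet. The video ID is a placeholder, and the exact attributes YouTube's own embed dialog produces may differ. (In WordPress you can often just paste the video URL on its own line, and the editor embeds it for you.)

```python
from html import escape
from urllib.parse import quote

def youtube_embed(video_id: str, width: int = 560, height: int = 315,
                  title: str = "YouTube video player") -> str:
    """Return an <iframe> snippet that streams the video from YouTube's servers."""
    src = "https://www.youtube.com/embed/" + quote(video_id, safe="")
    return (
        f'<iframe width="{width}" height="{height}" src="{src}" '
        f'title="{escape(title)}" loading="lazy" allowfullscreen></iframe>'
    )

# "VIDEO_ID" is a placeholder; use the ID from your own video's URL.
print(youtube_embed("VIDEO_ID"))
```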

16. Limit Post Revisions

The ability to revert to a previously saved version of a post can come in handy, but do you need to keep every copy of every post you have ever made? Probably not.

These extra copies clutter your database and add overhead, so it is best to put a cap on the number stored. You can do so by using a plugin, such as Revision Control, or you can set a limit by adding the following line to your wp-config.php file:

define( 'WP_POST_REVISIONS', 3 );

Set a number you feel comfortable with. Any number will be lower than the default, which stores an unlimited number of revisions.
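Conceptually, the cap means that once a post has more saved revisions than the limit, the oldest ones are discarded. The Python sketch below models that rule with hypothetical revision records. It is not WordPress's own implementation, which runs in PHP when a post is saved; the negative-means-unlimited convention loosely mirrors WordPress treating -1 as “no limit.”

```python
from datetime import datetime

def revisions_to_delete(revisions, keep=3):
    """Return the revisions beyond the newest `keep`, oldest first.

    A negative `keep` is treated as "no limit", so nothing is deleted.
    """
    if keep < 0:
        return []
    newest_first = sorted(revisions, key=lambda r: r["modified"], reverse=True)
    return sorted(newest_first[keep:], key=lambda r: r["modified"])

# Five hypothetical revisions saved on consecutive days.
revisions = [{"id": n, "modified": datetime(2024, 1, n)} for n in range(1, 6)]
print([r["id"] for r in revisions_to_delete(revisions, keep=3)])  # [1, 2]
```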

17. Set the Default Gravatar Image to Blank

Gravatar is a web service that allows anyone to create a profile with an associated avatar image that links automatically to his or her email address. When the user leaves a comment on a WordPress blog, their avatar is displayed alongside. If the user does not have a Gravatar image defined, WordPress displays the default Gravatar image. This means you can have dozens of comments showing the same, uninformative “mystery man” image. Why waste page load time on something that’s not very useful when you can get rid of it?

Changing the default Gravatar image is easy. In your WordPress Admin dashboard, go to Settings > Discussion. Scroll to the Avatars section. Select Blank as the default Avatar. Mission accomplished.

18. Disable Pingbacks and Trackbacks

When Pingbacks and Trackbacks were implemented, they were intended to be a vehicle for sharing credit and referring to related content. In practice, they are mainly a vehicle for spam. Every ping/track you receive adds entries (i.e., more data) to your database.

Since they’re rarely used for anything beyond obtaining a link from your site to the spammer’s, consider disabling them entirely. To do so, go to Settings > Discussion. Under default article settings, uncheck the line that says “Allow link notifications from other blogs (pingbacks and trackbacks).” Note that this will disable ping/tracks going forward, but won’t apply retroactively to existing posts.

19. Break Comments into Pages

Getting loads of comments on your blog is a great thing, but it can slow down overall page load. To eliminate this potential problem, go to Settings > Discussion and check the box next to the “Break comments into pages” option. You can then specify how many comments you would like per page and whether to display the newest or oldest first.
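The arithmetic behind that setting is simple slicing. The sketch below is a simplified Python model that assumes comments are stored oldest first; WordPress's own paging also deals with threaded replies and with which page is shown by default, which this ignores.

```python
import math

def page_count(total_comments, per_page):
    """Number of comment pages (at least one, even with no comments)."""
    return max(1, math.ceil(total_comments / per_page))

def comments_on_page(comments, per_page, page, newest_first=False):
    """Return the comments on a 1-based page; `comments` is assumed oldest first."""
    if per_page < 1 or page < 1:
        raise ValueError("per_page and page must be at least 1")
    ordered = comments[::-1] if newest_first else comments
    start = (page - 1) * per_page
    return ordered[start:start + per_page]

comments = [f"comment {n}" for n in range(1, 121)]  # 120 hypothetical comments
print(page_count(len(comments), 50))               # 3
print(comments_on_page(comments, 50, 3)[0])        # comment 101
```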

20. Get Rid of Sliders

Whether or not sliders look great is a matter of opinion. But the fact that they will slow down your WordPress website is not.

Sliders add extra JavaScript that takes time to load, and they can reduce conversion rates.

They also push your main content down the page, perhaps out of sight. Why take a performance hit for something that only serves as ineffective eye candy?

21. Move Scripts to the Footer

JavaScript is a nifty scripting language that lets you do all kinds of exciting things. It also takes time to load the scripts that make the magic happen. When scripts are in the footer, they will still have to load, but they will not hold up the rest of the page in the process. Be aware that sometimes scripts have to load in a particular order, so keep the same order if you move them.

22. Combine Buttons and Icons into CSS Sprites

A sprite is a large image that’s made up of a bunch of smaller images. With CSS sprites, you load the sprite image and then position it to show the portion you wish to display.

That way, the browser makes only one HTTP request for the sprite instead of a separate request for each component image.
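For example, if equally sized icons are stacked vertically in one sprite image, each icon's CSS simply shifts the background up by a multiple of the icon height. The Python helper below generates those rules. The file name `sprite.png`, the class names, and the vertical layout are assumptions for illustration; an icon would then be used as `<span class="icon icon-twitter"></span>`.

```python
def sprite_css(icon_names, icon_size_px, sprite_url="sprite.png"):
    """Generate CSS for square icons stacked top to bottom in a single sprite image."""
    rules = [
        f".icon {{ width: {icon_size_px}px; height: {icon_size_px}px; "
        f"background: url('{sprite_url}') no-repeat; }}"
    ]
    for index, name in enumerate(icon_names):
        # Shift the sprite up so that only this icon's slice shows through.
        rules.append(f".icon-{name} {{ background-position: 0 {-index * icon_size_px}px; }}")
    return "\n".join(rules)

print(sprite_css(["facebook", "twitter", "rss"], 32))
```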

23. Minify JavaScript and CSS

Minification is the process of making certain files smaller so they transmit more quickly. It is accomplished by stripping whitespace, line breaks, comments, and other unnecessary characters from source code.

Better WordPress Minify is a popular minify plugin. It offers many customization options. Start by checking the first two general options.

Those two specify that JavaScript and CSS files should be minified. The plugin works by creating new, minified copies of the original files. The originals are left in place, so you can quickly revert to them if desired.
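To illustrate what minification removes, here is a deliberately naive CSS minifier in Python. It is a sketch, not a replacement for a plugin: it strips comments and redundant whitespace, but it would mangle edge cases such as quoted strings or `content:` values containing these characters, which real minifiers handle properly.

```python
import re

def minify_css(css: str) -> str:
    """Naively shrink CSS by removing comments and redundant whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.S)  # drop /* comments */
    css = re.sub(r"\s+", " ", css)                   # collapse whitespace and line breaks
    css = re.sub(r"\s*([{};,>])\s*", r"\1", css)     # no spaces needed around these characters
    css = re.sub(r":\s+", ":", css)                  # "color: red" -> "color:red"
    css = css.replace(";}", "}")                     # the last semicolon in a block is optional
    return css.strip()

source = """
/* Header styles */
h1 {
    color: red;
    margin: 0 auto;
}
"""
print(minify_css(source))  # h1{color:red;margin:0 auto}
```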

24. Avoid Landing Page Redirects

WordPress is pretty smart about many things, and redirects are one of them. If a visitor types http://yourdomain.com/greatarticle.html into their browser, and you have your site set up to use the www prefix, the visitor will automatically be redirected to the correct page (with the www prefix added), http://www.yourdomain.com/greatarticle.html.

What if the user types in https:// instead of http://? They will likely still arrive at the desired page, but there may be an extra redirect as HTTPS converts to HTTP, followed by another redirect to the www URL. That means more time spent waiting for the target page to load.

Redirects are handy for landing your visitor on the right page, but they take time, delaying loading. Therefore, you should avoid them when possible. When linking to a page on your site, be sure to use the correct, non-redirected version of the URL. Also, your server should be configured so that users can reach any URL with no more than one redirection, no matter which combination of HTTP/HTTPS or www is used.

To check out the status of redirections currently employed by your site, try a few URLs in this Redirect mapper. If you see more than one redirect, then you will need to modify your server to ensure visitors get to the right place more quickly.

If your site is hosted on Linux, you can accomplish this by adding URL rewrite rules to your .htaccess file. For other hosting platforms, check your dashboard to see if there’s an option to configure redirection. If not, contact technical support and ask them to fix it for you.
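The “no more than one redirection” goal can be checked and modeled in a few lines of Python. The sketch below counts the hops in a redirect table and builds a one-hop table that sends every scheme and host variant straight to the canonical URL. The domain and path are the article's placeholders, and the canonical scheme and host are parameters you would set to match your own site.

```python
from urllib.parse import urlsplit, urlunsplit

def canonical_url(url, scheme, host):
    """Rewrite any scheme/host variant of `url` to the canonical scheme and host."""
    parts = urlsplit(url)
    return urlunsplit((scheme, host, parts.path or "/", parts.query, parts.fragment))

def count_redirects(url, redirects, limit=10):
    """Follow a {source: target} redirect table and return the number of hops."""
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if hops > limit:
            raise RuntimeError("too many redirects; possible loop")
    return hops

# A chain like the one described above: https -> http -> www (two hops).
chained = {
    "https://yourdomain.com/greatarticle.html": "http://yourdomain.com/greatarticle.html",
    "http://yourdomain.com/greatarticle.html": "http://www.yourdomain.com/greatarticle.html",
}
print(count_redirects("https://yourdomain.com/greatarticle.html", chained))  # 2

# A one-hop table: every variant points directly at the canonical URL.
variants = [f"{s}://{h}/greatarticle.html"
            for s in ("http", "https") for h in ("yourdomain.com", "www.yourdomain.com")]
target = canonical_url(variants[0], "http", "www.yourdomain.com")
direct = {v: target for v in variants if v != target}
print(max(count_redirects(v, direct) for v in variants))  # 1
```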

25. Replace PHP with static HTML

PHP is a fast-executing language, but it is not as speedy as static HTML. Chances are your theme is executing a half-dozen PHP statements in the header that can be swapped out for static HTML. If you view your header file (Presentation > Theme Editor) you will see many lines that look similar to this:

The part in bold is PHP that gets executed every time your page loads. While this takes up minimal time, it is slower than straight HTML.

If you’re determined to make your website as speedy as possible, you can swap out the PHP for text. To see what to replace the PHP with, use the View Source option while looking at your web page (right-click > View Source).

You will see something like this:

The bold area is the text that resulted from the processed PHP code. Replace the original PHP call with this code. If you do so for the main PHP calls in the header alone, you can save at least six calls to PHP. If you use different titles or article titles on separate pages for SEO purposes, don’t change out the PHP in the first line. Check your footer and sidebar for additional opportunities to switch out PHP for text.

Putting It All Together: WordPress Site Speed Optimization

By now, you should have a firm grasp on how to speed up your WordPress site.

You should also understand the importance of a responsive site that focuses on performance and speed to capture your users’ attention quickly.

Chances are, as you navigated this guide, you discovered that your website had plenty of room for improvement.

Once you apply the tips above, rerun a WordPress website speed test through Google’s Test My Site and PageSpeed Insights.