Chaos Engineering: How it Works, Principles, Benefits, & Tools

Finding faults in a distributed system goes beyond the capability of standard application testing. Companies need smarter ways to test microservices continuously. One strategy that is gaining popularity is chaos engineering.

Using this proactive testing practice, an organization can look for and fix failures before they cause a costly outage. Read on to learn how chaos engineering improves the reliability of large-scale distributed systems.

What Is Chaos Engineering? Defined

Chaos engineering is a strategy for discovering vulnerabilities in a distributed system. This practice requires injecting failures and errors into software during production. Once you intentionally cause a bug, monitor the effects to see how the system responds to stress.

By “breaking things” on purpose, you discover new issues that could impact components and end-users. Address the identified weaknesses before they cause data loss or service impact.

Chaos engineering allows an admin to:

- Identify weak points in a system.

- See in real-time how a system responds to pressure.

- Prepare the team for real failures.

- Identify bugs that are yet to cause system-wide issues.

Netflix was the first organization to introduce chaos engineering. In 2010, the company released a tool called Chaos Monkey. With this tool, admins were able to cause failures in random places at random intervals. Such a testing approach made Netflix’s distributed cloud-based system much more resilient to faults.

Who Uses Chaos Engineering?

Many tech companies practice chaos engineering to improve the resilience of distributed systems. Netflix continues to pioneer the practice, but companies like Facebook, Google, Microsoft, and Amazon have similar testing models.

More traditional organizations have caught on to chaos testing too. For example, the National Australia Bank applied chaos to randomly shut down servers and build system resiliency.

The Need for Chaos Engineering

Peter Deutsch and his colleagues from Sun Microsystem listed eight false assumptions programmers commonly make about distributed systems:

- The network is reliable.

- There is zero latency.

- Bandwidth is infinite.

- The network is secure.

- Topology never changes.

- There is one admin.

- Transport cost is zero.

- The network is homogeneous.

These fallacies show the dynamics of a distributed application designed in a microservices architecture. This kind of system has many moving parts, and admins have little control over the cloud infrastructure.

Constant changes to the setup cause unexpected system behavior. It is impossible to predict these behaviors, but we can reproduce and test them with chaos engineering.

Difference Between Chaos Engineering and Failure Testing

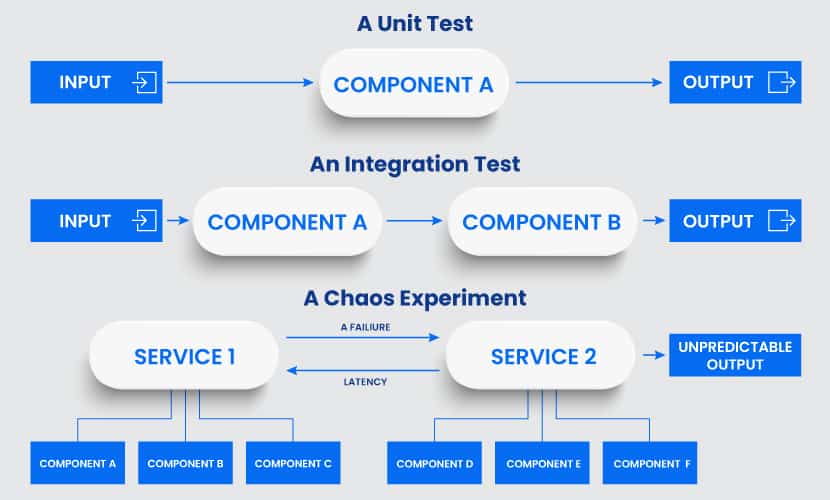

A failure test examines a single condition and determines whether a property is true or false. Such a test breaks a system in a preconceived way. The results are usually binary, and they do not uncover new information about the application.

The goal of a chaos test is to generate new knowledge about the system. Broader scope and unpredictable outcomes enable you to learn about the system’s behaviors, properties, and performance. You open new avenues for exploration and see how you can improve the system.

While different, chaos and failure testing do have some overlap in concerns and tools used. You get the best results when you use both disciplines to test an application.

How Chaos Engineering Works

All testing in chaos engineering happens through so-called chaos experiments. Each experiment starts by injecting a specific fault into a system, such as latency, CPU failure, or a network black hole. Admins then observe and compare what they think will occur to what actually happens.

An experiment typically involves two groups of engineers. The first group controls the failure injection, and the second group deals with the effects.

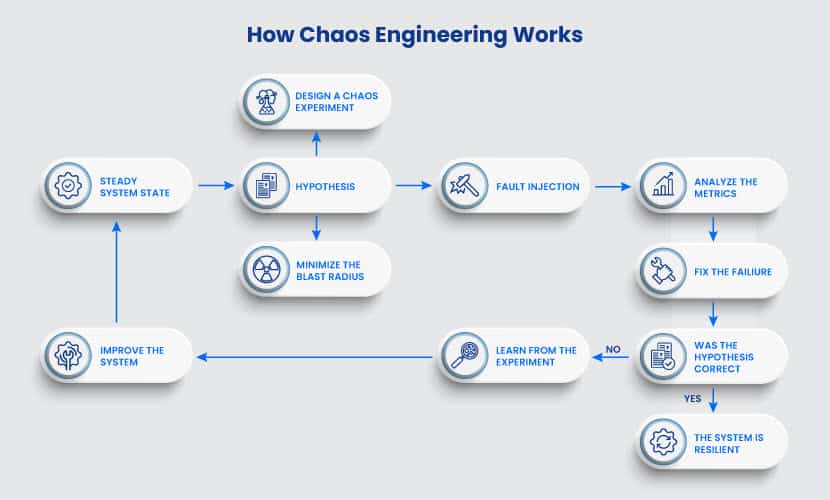

Here is a step-by-step flow of a chaos experiment:

Step 1: Creating a Hypothesis

Engineers analyze the system and choose what failure to cause. The core step of chaos engineering is to predict how the system will behave once it encounters a particular bug.

Engineers also need to determine critical metric thresholds before starting a test. Metrics typically come in two sets:

- Key metrics: These are the primary metrics of the experiment. For example, you can measure the impact on latency, requests per second, or system resources.

- Customer metrics: These are precautionary metrics that tell you if the test went too far. Examples of customer metrics are orders per minute, or stream starts per second. If a test begins impacting customer metrics, that is a sign for admins to stop experimenting.

In some tests, the two metrics can overlap.

Step 2: Fault Injection

Engineers add a specific failure to the system. Since there is no way to be sure how the application will behave, there is always a backup plan.

Most chaos engineering tools have a reverse option. That way, if something goes wrong, you can safely abort the test and return to a steady-state of the application.

Step 3: Measuring the Impact

Engineers monitor the system while the bug causes significant issues. Key metrics are the primary concern but always monitor the entire system.

If the test starts a simulated outage, the team looks for the best way to fix it.

Step 4: Verify (or Disprove) Your Hypothesis

A successful chaos test has one of two outcomes. You either verify the resilience of the system, or you find a problem you need to fix. Both are good outcomes.

Principles of Chaos Engineering

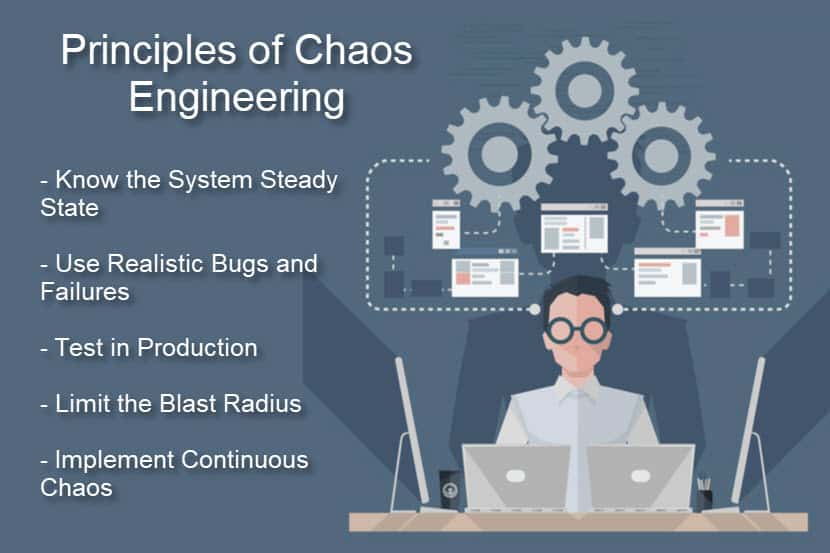

While the name may suggest otherwise, there is nothing random in chaos engineering.

This testing method follows strict principles, which include the following principles:

Know the Normal State of Your System

Define the steady-state of your system. The usual behavior of a system is a reference point for any chaos experiment. By understanding the system when it is healthy, you will better understand the impact of bugs and failures.

Inject Realistic Bugs and Failures

All experiments should reflect realistic and likely scenarios. When you inject a real-life failure, you get a good sense of what processes and technologies need an upgrade.

Test in Production

You can only see how outages affect the system if you apply the test to a production environment.

If your team has little to no experience with chaos testing, let them start experimenting in a development environment. Test the production environment once ready.

Control the Blast Radius

Always minimize the blast radius of a chaos test. As these tests happen in a production environment, there is a chance that the test could affect end-users.

Another standard precaution is to have a team ready for actual incident response, just in case.

Continuous Chaos

You can automate chaos experiments to the same level as your CI/CD pipeline. Constant chaos allows your team to improve both current and future systems continuously.

Benefits of Chaos Engineering

The benefits of chaos engineering span across several business fronts:

Business Benefits

Chaos engineering helps stop large losses in revenue by preventing lengthy outages. The practice also allows companies to scale quickly without losing the reliability of their services.

Technical Benefits

Insights from chaos experiments reduce incidents, but that is not where technical benefits end. The team gets an increased understanding of system modes and dependencies, allowing them to build a more robust system design.

A chaos test is also excellent on-call training for the engineering team.

Customer Benefits

Fewer outages mean less disruption for end-users. Improved service availability and durability are the two chief customer benefits of chaos engineering.

Chaos Engineering Tools

These are the most common chaos engineering tools:

- Chaos Monkey: This is the original tool created at Netflix. While it came out in 2010, Chaos Monkey still gets regular updates and is the go-to chaos testing tool.

- Gremlin: Gremlin helps clients set up and control chaos testing. The free version of the tool offers basic tests, such as turning off machines and simulating high CPU load.

- Chaos Toolkit: This open-source initiative makes tests easier with an open API and a standard JSON format.

- Pumba: Pumba is a chaos testing and network emulation tool for Docker.

- Litmus: A chaos engineering tool for stateful workloads on Kubernetes.

To keep up with new tools, bookmark the diagram created by the Chaos Engineering Slack Community. Besides the tools, the chart also keeps track of known engineers working with chaos tests.

Chaos Engineering Examples

There are no limits to chaos experiments. The type of tests you run depends on the architecture of your distributed system and business goals.

Here is a list of the most common chaos tests:

- Simulating the failure of a micro-component.

- Turning a virtual machine off to see how a dependency reacts.

- Simulating a high CPU load.

- Disconnecting the system from the data center.

- Injecting latency between services.

- Randomly causing functions to throw exceptions (also known as function-based chaos).

- Adding instructions to a program and allowing fault injection (also known as code insertion).

- Disrupting syncs between system clocks.

- Emulating I/O errors.

- Causing sudden spikes in traffic.

- Injecting byzantine failures.

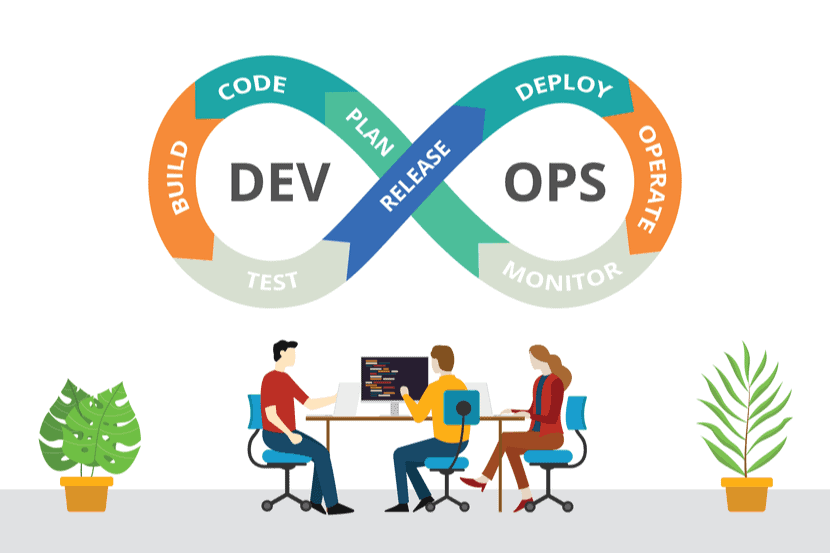

Chaos Engineering and DevOps

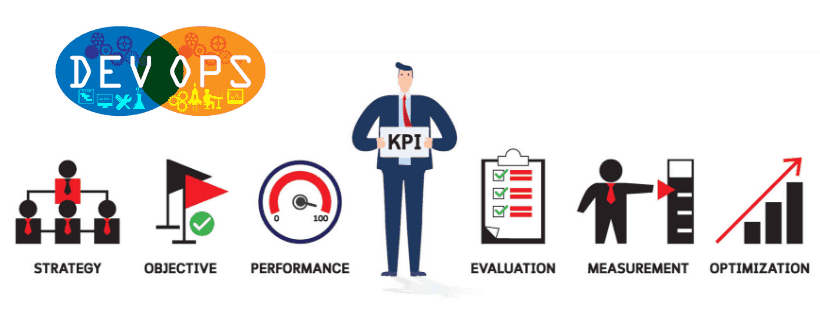

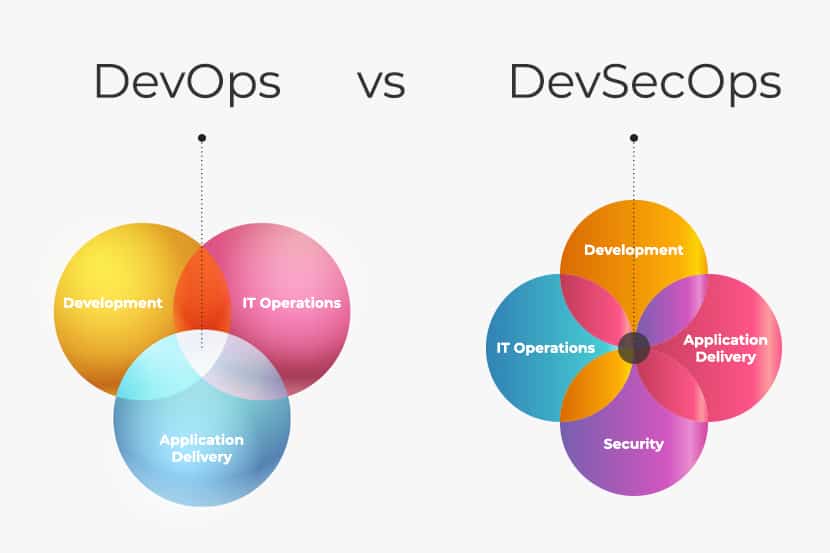

Chaos engineering is a common practice within the DevOps culture. Such tests allow DevOps to thoroughly analyze applications while keeping up with the tempo of agile development.

DevOps teams commonly use chaos testing to define a functional baseline and tolerances for infrastructure. Tests also help create better policies and processes by clarifying both steady-state and chaotic outputs.

Some companies prefer to integrate chaos engineering into their software development life cycle. Integrated chaos allows companies to ensure the reliability of every new feature.

A Must for any Large-Scale Distributed System

Continuous examination of software is vital both for application security and functionality. By proactively examining a system, you can reduce the operational burden, increase system availability, and resilience.

Recent Posts

What is YAML? How it Works With Examples

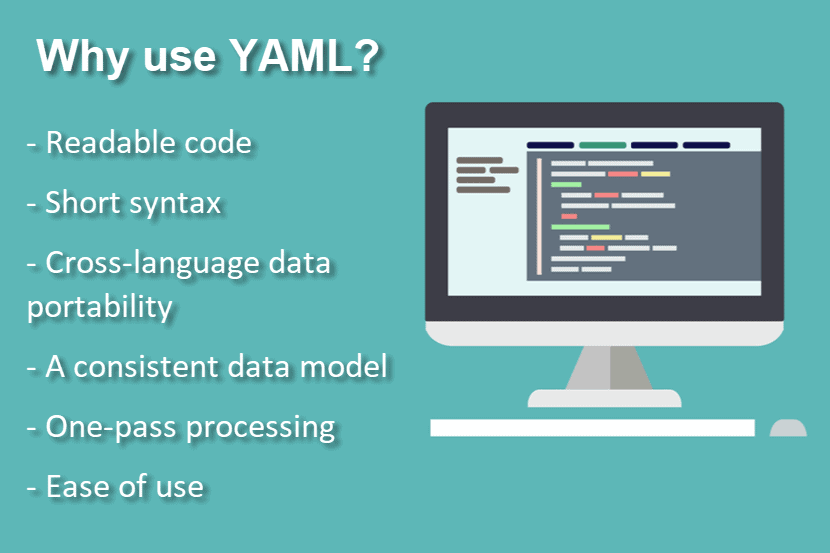

Most formatting languages display data in a non-human readable format. Even JSON, the most popular data format in use, has poor code readability.

YAML is an alternative to JSON that formats data in a natural, easy-to-read, and concise manner.

This article will introduce you to the YAML markup language. We cover the basic concepts behind this markup language, explain its key features, and show what YAML offers to DevOps teams.

What is YAML?

YAML is a data serialization language. Back when it came out in 2001, YAML stood for “Yet Another Markup Language.” The acronym was later changed to “YAML Ain’t Markup Language” to emphasize that the language is intended for data and not documents.

It is not a programming language in the true sense of the word. YAML files store information, so they do not include actions and decisions.

Unlike XML or JSON, YAML presents data in a way that makes it easy for a human to read. The simple syntax does not impact the language’s capabilities. Any data or structure added to an XML or JSON file can also be stored in YAML.

Besides human-readable code, YAML also features:

- Cross-language data portability

- A consistent data model

- One-pass processing

- Ease of implementation and use

Users can write code for reading and generating YAML in any programming language. The extensions in YAML are .yaml and .yml. Both extensions stand for the same file type.

Yaml Features

YAML has several features that make it an excellent option for data formatting.

Multi-Document Support

Users can add multiple documents to a single YAML file. Separate different documents with three dashes (---), like this:

--- time: 19:04:12 player: playerOne action: strike (miss) --- time: 20:03:47 player: playerTwo action: strike (hit) ...

Three dots (“…“) mark the end of a document without starting a new one.

Built-In Comments

YAML allows users to add comments to their code. YAML comments start with the # symbol and do not have to be on a separate line:

key: #This is a single line comment - value line 10 #This is a #multi-line comment - value line 20

Clean Syntax

Like Python, YAML relies on indentations to show the levels and structure in the data. There are no usual format symbols, such as braces, square brackets, closing tags, or quote marks. The syntax is clean and easy to scan through.

The clean syntax is why several popular tools rely on YAML, such as Ansible, Kubernetes, and OpenStack.

No Tabs

YAML does not allow tabs. Spaces are the only way to achieve indentation.

It is good practice to display whitespace characters in your text editor to prevent accidental uses of tabs.

Precise Feedback

YAML feedback refers to specific lines in the file. You can quickly find and fix errors when you know where to look.

Support for Complex Structures

YAML provides the ability to reference other data objects. With referencing, you can write recursive data in the YAML file and build advanced data structures.

Explicit Data Types with Tags

YAML auto-detects the type of data, but users are free to specify the type they need. To specify the type of data, you include a “!!” symbol:

# The value should be an int: is-an-int: !!int 5.6 # Turn any value to a string: is-a-str: !!str 90.88 # The next value should be a boolean: is-a-bool: !!bool yes

No Executable Commands

YAML is a data-representation format. There are no executable commands, which makes the language highly secure when exchanging files with third parties.

If a user wishes to add an executable command, YAML must be integrated with other languages. Add Perl parsers, for example, to enable Perl code execution.

How YAML Works

YAML matches native data structures of agile methodology and its languages, such as Perl, Python, PHP, Ruby, and JavaScript. It also derives features from other languages:

- Scalars, lists, and arrays come from Perl.

- The three-dash separator comes from MIME.

- Whitespace wrapping comes from HTML.

- Escape sequences come from C.

YAML supports all essential data types, including nulls, numbers, strings, arrays, and maps. It recognizes some language-specific data types, such as dates, timestamps, and special numerical values.

Colon and a single space define a scalar (or a variable):

string: "17" integer: 17 float: 17.0 boolean: No

A | character denotes a string that preserves newlines and a > character denotes a string that folds newlines:

data: | Every one Of these Newlines Will be Broken up. data: > This text is wrapped and will be formed into a single paragraph.

Basics aside, there are two vital types of structures you need to know about in YAML:

- YAML lists

- YAML maps

Use these two structures for formatting in YAML.

YAML Maps (With Examples)

Maps associate name-value pairs, a vital aspect of setting up data. A YAML configuration file can start like this:

--- apiVersion: v3 kind: Pod

Here is the JSON equivalent of the same file opening:

{

"apiVersion": "v3",

"kind": "Pod"

}

Both codes have two values, v3Pod, mapped to two keys, apiVersion and kind. In YAML, the quotation marks are optional, and there are no brackets.

This markup language allows you to specify more complex structures by creating a key that maps to another map rather than a string. See the YAML example below:

--- apiVersion: v3 kind: Pod metadata: name: rss-site labels: app: web

We have a key (metadata) with two other keys as its value name and labels. The labels key has another map as its value. YAML allows you to nest maps as far as you need to.

The number of spaces does not matter, but it must be consistent throughout the file. In our example, we used two spaces for readability. Name and labels have the same indentation level, so the processor knows both are part of the same map.

The same mapping would look like this in JSON:

{

"apiVersion": "v3",

"kind": "Pod",

"metadata": {

"name": "rss-site",

"labels": {

"app": "web"

}

}

}

YAML Lists (With Examples)

A YAML list is a sequence of items. For example:

args: - shutdown - "1000" - msg - "Restart the system"

A list may contain any number of items. An item starts with a dash, while indentation separates it from the parent. You can also store maps within a list:

--- apiVersion: v3 kind: Pod metadata: name: rss-site labels: app: web spec: containers: - name: front-end image: nginx ports: - containerPort: 80 - name: rss-reader image: nickchase/rss-php-nginx:v1 ports: - containerPort: 88

We have a list of containers (objects). Each consists of a name, an image, and a list of ports. Each item under ports is a map that lists the containerPort and its value.

Our example would look like this in JSON:

{ “apiVersion”: “v3”, “kind”: “Pod”, “metadata”: { “name”: “rss-site”, “labels”: { “app”: “web” } }, “spec”: { “containers”: [{ “name”: “front-end”, “image”: “nginx”, “ports”: [{ “containerPort”: “80” }] }, { “name”: “rss-reader”, “image”: “nickchase/rss-php-nginx:v1”, “ports”: [{ “containerPort”: “88” }] }] } }

What is the difference between YAML and JSON?

JSON and YAML are used interchangeably, and they serve the same purpose. However, there are significant differences between the two:

| YAML | JSON |

|---|---|

| Easy for a human to read | Hard for a human to read |

| Allows comments | No comments |

| Space characters determine hierarchy | Brackets and braces denote arrays and objects |

| String quotes support single and double quotes | Strings must be in double quotes |

| The root node can be any of the valid data types | The root node is either an object or an array |

The main difference between YAML and JSON is code readability. The best example of this is the official YAML homepage. That website is itself valid YAML, yet it is easy for a human to read.

YAML is a superset of JSON. If you paste JSON directly into a YAML file, it resolves the same through YAML parsers. Users can also convert most documents between the two formats. It is possible to convert JSON files into YAML either online or use a tool like Syck or XS.

YAML in IaC

YAML is a common option when writing configuration files for Infrastructure as Code. These files store parameters and settings for the desired cloud environment.

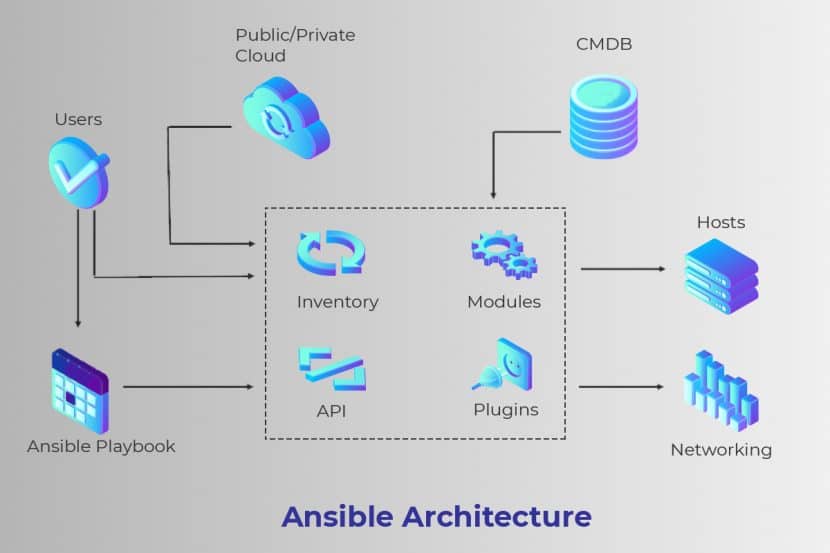

Red Hat’s Ansible, one of the most popular IaC tools, uses YAML for file management. Ansible users create so-called playbooks written in YAML code that automate manual tasks of provisioning and deploying a cloud environment.

In the example below, we define an Ansible playbook verify-apache.yml:

--- - hosts: webservers vars: http_port: 90 max_clients: 250 remote_user: root tasks: - name: ensure apache is at the latest version yum: name: httpd state: latest - name: write the apache config file template: src: /srv/httpd.j2 dest: /etc/httpd.conf notify: - restart apache - name: ensure apache is running service: name: httpd state: started handlers: - name: restart apache service: name: httpd state: restarted

There are three tasks in this YAML playbook:

- We update Apache to the latest version using the

yumcommand. - We use a template to copy the Apache configuration file. The playbook then restarts the Apache service.

- We start the Apache service.

Once set, a playbook is run from the command line. While the path varies based on the setup, the following command runs the playbook:

ansible-playbook -i hosts/groups verify_apache.yml

The Use of YAML in DevOps

Many DevOps teams define their development pipelines using YAML. YAML allows users to approach pipeline features like a markup file and manage them as any source file. Pipelines are versioned with the code, so teams can identify issues and roll back changes quickly.

To add a YAML build definition, a developer adds a source file to the root of the repository.

Thanks to YAML, DevOps separate logic from the configuration. That way, the configuration code follows best practices, such as:

- No hard-coded strings.

- Methods that perform one function and one function only.

- Testable code.

Several tools with a prominent role in DevOps rely on YAML:

- Azure DevOps provides a YAML designer to simplify defining build and release tasks.

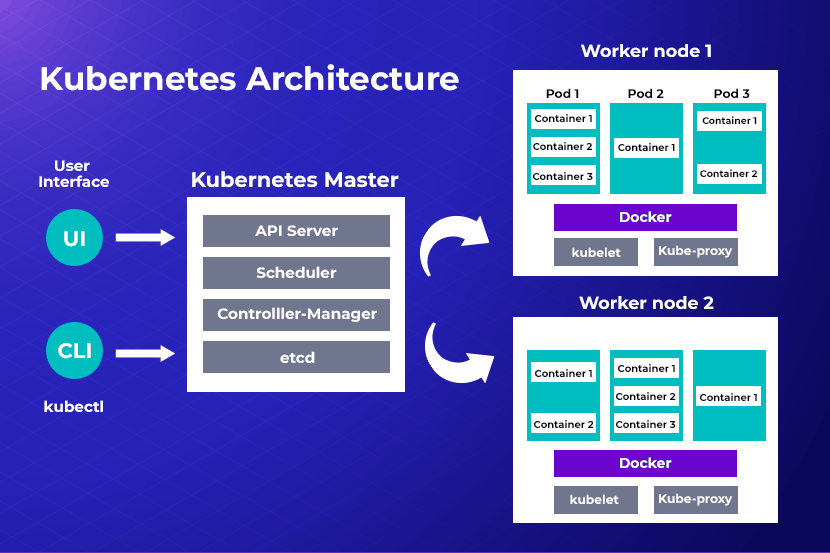

- Kubernetes uses YAML to create storage and lightweight Linux virtual machines.

- Docker features YAML files called Dockerfiles. Dockerfiles are blueprints for everything you need to run software, including codes, runtime, tools, settings, and libraries.

An Efficient, User-Friendly Data Formatting Language

YAML offers levels of code readability other data-formatting languages cannot deliver. YAML also allows users to perform more operations with less code, making it an ideal option for DevOps teams that wish to speed up their delivery cycles.

Recent Posts

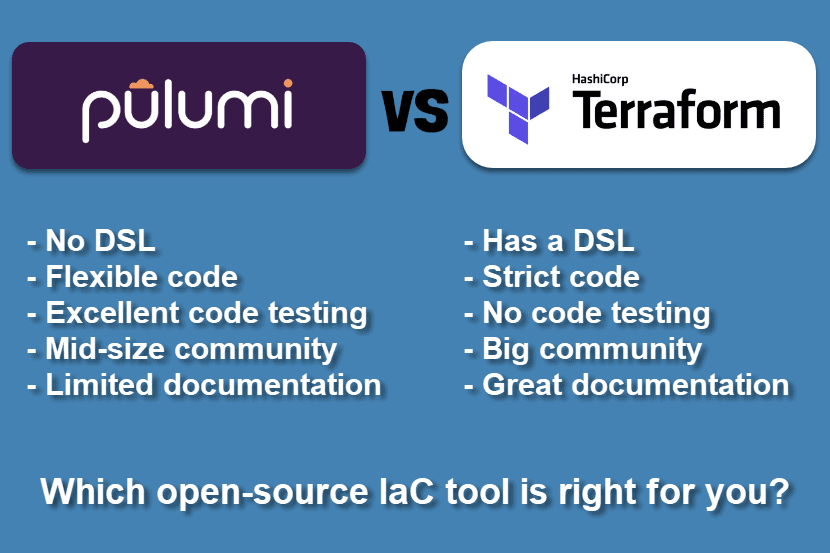

Pulumi vs Terraform: Comparing Key Differences

Terraform, and Pulumi are two popular Infrastructure as Code (IaC) tools used to provision and manage virtual environments. Both tools are open source, widely used, and provide similar features. However, it isn’t easy to choose between Pulumi and Terraform without a detailed comparison.

Below is an examination of the main differences between Pulumi and Terraform. The article analyzes which tool performs better in real-life use cases and offers more value to an efficient software development life cycle.

Key Differences Between Pulumi and Terraform

- Pulumi does not have a domain-specific software language. Developers can build infrastructure in Pulumi by using general-purpose languages such as Go, .NET, JavaScript, etc. Terraform, on the other hand, uses its Hashicorp Configuration Language.

- Terraform follows a strict code guideline. Pulumi is more flexible in that regard.

- Terraform is well documented and has a vibrant community. Pulumi has a smaller community and is not as documented.

- Terraform is easier for state file troubleshooting.

- Pulumi provides superior built-in testing support due to not using a domain-specific language.

What is Pulumi?

Pulumi is an open-source IaC tool for designing, deploying and managing resources on cloud infrastructure. The tool supports numerous public, private, and hybrid cloud providers, such as AWS, Azure, Google Cloud, Kubernetes, phoenixNAP Bare Metal Cloud, and OpenStack.

Pulumi is used to create traditional infrastructure elements such as virtual machines, networks, and databases. The tool is also used for designing modern cloud components, including containers, clusters, and serverless functions.

While Pulumi features imperative programming languages, use the tool for declarative IaC. The user defines the desired state of the infrastructure, and Pulumi builds up the requested resources.

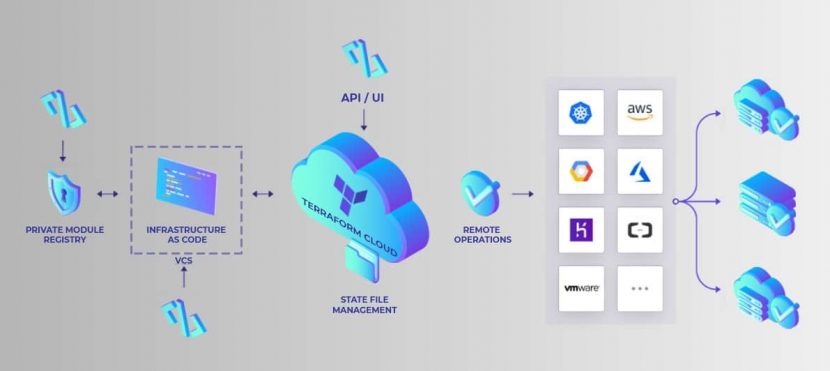

What is Terraform?

Terraform is a popular open-source IaC tool for building, modifying, and versioning virtual infrastructure.

The tool is used with all major cloud providers. Terraform is used to provision everything from low-level components, such as storage and networking, to high-end resources such as DNS entries. Building environments with Terraform is user-friendly and efficient. Users can also manage multi-cloud or multi offering environments with this tool.

How to Install Terraform?

Learn how to get started with Terraform in our guide How to Install Terraform on CentOS/Ubuntu.

Terraform is a declarative IaC tool. Users write configuration files to describe the needed components to Terraform. The tool then generates a plan describing the required steps to reach the desired state. If the user agrees with the outline, Terraform executes the configuration and builds the desired infrastructure.

Pulumi vs Terraform Comparison

While both tools serve the same purpose, Pulumi and Terraform differ in several ways. Here are the most prominent differences between the two infrastructure as code tools:

1. Unlike Terraform, Pulumi Does Not Have a DSL

To use Terraform, a developer must learn a domain-specific language (DSL) called Hashicorp Configuration Language (HCL). HCL has the reputation of being easy to start with but hard to master.

In contrast, Pulumi allows developers to use general-purpose languages such as JavaScript, TypeScript, .Net, Python, and Go. Familiar languages allow familiar constructs, such as for loops, functions, and classes. All these functionalities are available with HCL too, but their use requires workarounds that complicate the syntax.

The lack of a domain-specific language is the main selling point of Pulumi. By allowing users to stick with what they know, Pulumi cuts down on boilerplate code and encourages the best programming practices.

2. Different Types of State Management

With Terraform, state files are by default stored on the local hard drive in the terraform.tfstate file. With Pulumi, users sign up for a free account on the official website, and state files are stored online.

By enabling users to store state files via a free account, Pulumi offers many functionalities. There is a detailed overview of all resources, and users have insight into their deployment history. Each deployment provides an analysis of configuration details. These features enable efficient managing, viewing, and monitoring activities.

What's a State File?

State files help IaC tools map out the configuration requirements to real-world resources.

To enjoy similar benefits with Terraform, you must move away from the default local hard drive setup. To do that, use a Terraform Cloud account or rely on a third-party cloud storing provider. Small teams of up to five users can get a free version of Terraform Cloud.

Pulumi requires a paid account for any setup with more than a single developer. Pulumi’s paid version offers additional benefits. These include team sharing capabilities, Git and Slack integrations, and support for features that integrate the IaC tool into CI/CD deployments. The team account also enables state locking mechanisms.

3. Pulumi Offers More Code Versatility

Once the infrastructure is defined, Terraform guides users to the desired declarative configuration. The code is always clean and short. Problems arise when you try to implement certain conditional situations as HCL is limited in that regard.

Pulumi allows users to write code with a standard programming language, so numerous methods are available for reaching the desired parameters.

4. Terraform is Better at Structuring Large Projects

Terraform allows users to split projects into multiple files and modules to create reusable components. Terraform also enables developers to reuse code files for different environments and purposes.

Pulumi structures the infrastructure as either a monolithic project or micro-projects. Different stacks act as different environments. When using higher-level Pulumi extensions that map to multiple resources, there is no way to deserialize the stack references back into resources.

5. Terraform Provides Better State File Troubleshooting

When using an IaC tool, running into a corrupt or inconsistent state is inevitable. A crash usually causes an inconsistent state during an update, a bug, or a drift caused by a bad manual change.

Terraform provides several commands for dealing with a corrupt or inconsistent state:

refreshhandles drift by adjusting the known state with the real infrastructure state.state {rm,mv}is used to modify the state file manually.importfinds an existing cloud resource and imports it into your state.taint/untaintmarks individual resources as requiring recreation.

Pulumi also offers several CLI commands in the case of a corrupt or inconsistent state:

refreshworks in the same way as Terraform’s refresh.state deleteremoves the resource from the state file.

Pulumi has no equivalent of taint/untaint. For any failed update, a user needs to edit the state file manually.

6. Pulumi Offers Better Built-In Testing

As Pulumi uses common programming languages, the tool supports unit tests with any framework supported by the user’s software language of choice. For integrations, Pulumi only supports writing tests in Go.

Terraform does not offer official testing support. To test an IaC environment, users must rely on third-party libraries like Terratest and Kitchen-Terraform.

7. Terraform Has Better Documentation and a Bigger Community

When compared to Terraform, the official Pulumi documentation is still limited. The best resources for the tool are the examples found on GitHub and the Pulumi Slack.

The size of the community also plays a significant role in terms of helpful resources. Terraform has been a widely used IaC tool for years, so its community grew with its popularity. Pulumi‘s community is still nowhere close to that size.

8. Deploying to the Cloud

Pulumi allows users to deploy resources to the cloud from a local device. By default, Terraform requires the use of its SaaS platform to deploy components to the cloud.

If a user wishes to deploy from a local device with Terraform, AWS_ACCESS_KEY and AWS_SECRET_ACCESS_KEY variables need to be added to the Terraform Cloud environment. This process is not a natural fit with federated SSO accounts for Amazon Web Services (AWS). Security concerns over a third-party system having access to your cloud are also worth noting.

The common workaround is to use Terraform Cloud solely for storing state information. This option, however, comes at the expense of other Terraform Cloud features.

Note: The table is scrollable horizontally!

| Pulumi | Terraform | |

|---|---|---|

| Publisher | Pulumi | HashiCorp |

| Method | Push | Push |

| IaC approach | Declarative | Declarative |

| Price | Free for one user, three paid packages for teams | Free for up to five users, two paid packages for larger teams |

| Written in | Typescript, Python, Go | Go |

| Source | Open | Open |

| Domain-Specific Language (DSL) | No | Yes (Hashicorp Configuration Language) |

| Main advantage | Code in a familiar programming language, great out-of-the-box GUI | Pure declarative IaC tool, works with all major cloud providers, lets you create infrastructure building blocks |

| Main disadvantage | Still unpolished, documentation lacking in places | HCL limits coding freedom and needs to be mastered to use advanced features |

| State files management | State files are stored via a free account | State files are by default stored on a local hard drive |

| Community | Mid-size | Large |

| Ease of use | The use of JavaScript, TypeScript, .Net, Python, and Go keeps IaC familiar | HCL is a complex language, albeit with a clean syntax |

| Modularity | Problematic with higher-level Pulumi extensions | Ideal due to reusable components |

| Documentation | Limited, with best resources found on Pulumi Slack and GitHub | Excellent official documentation |

| Code versatility | As users write code in different languages, there are multiple ways to reach the desired state | HCL leaves little room for versatility |

| Deploying to the cloud | Can be done from a local device | Must be done through the SaaS platform |

| Testing | Test with any framework that supports the used programming language | Must be performed via third-party tools |

Using Pulumi and Terraform Together

It is possible to run IaC by using both Pulumi and Terraform at the same time. Using both tools requires some workarounds, though.

Pulumi supports consuming local or remote Terraform state from Pulumi programs. This support helps with the gradual adoption of Pulumi if you decide to continue managing a subset of your virtual infrastructure with Terraform.

For example, you might decide to keep your VPC and low-level network definitions written in Terraform to avoid disrupting the infrastructure. Using the state reference support, you can design high-level infrastructure with Pulumi and still consume the Terraform-powered VPC information. In that case, the co-existence of Pulumi and Terraform is easy to manage and automate.

Conclusion: Both are Great Infrastructure as Code Tools

Both Terraform and Pulumi offer similar functionalities. Pulumi is a less rigid tool focused on functionality. Terraform is more mature, better documented, and has strong community support.

However, what sets Pulumi apart is its fit with the DevOps culture.

By expressing infrastructure with popular programming languages, Pulumi bridges the gap between Dev and Ops. It provides a common language between development and operations teams. In contrast, Terraform reinforces silos across departments, pushing development and operations teams further apart with its domain-specific language.

From that point of view, Pulumi is a better fit for standardizing the DevOps pipeline across the development life cycle. The tool reinforces uniformity and leads to quicker software development with less room for error.

Recent Posts

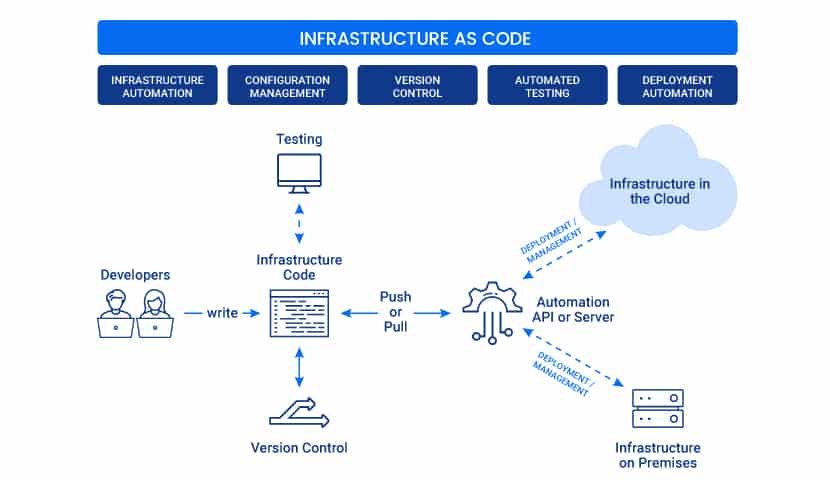

What Is Infrastructure as Code? Benefits, Best Practices, & Tools

Infrastructure as Code (IaC) enables developers to provision IT environments with several lines of code. Unlike manual infrastructure setups that require hours or even days to configure, it takes minutes to deploy an IaC system.

This article explains the concepts behind Infrastructure as Code. You will learn how IaC works and how automatic configurations enable teams to develop software with higher speed and reduced cost.

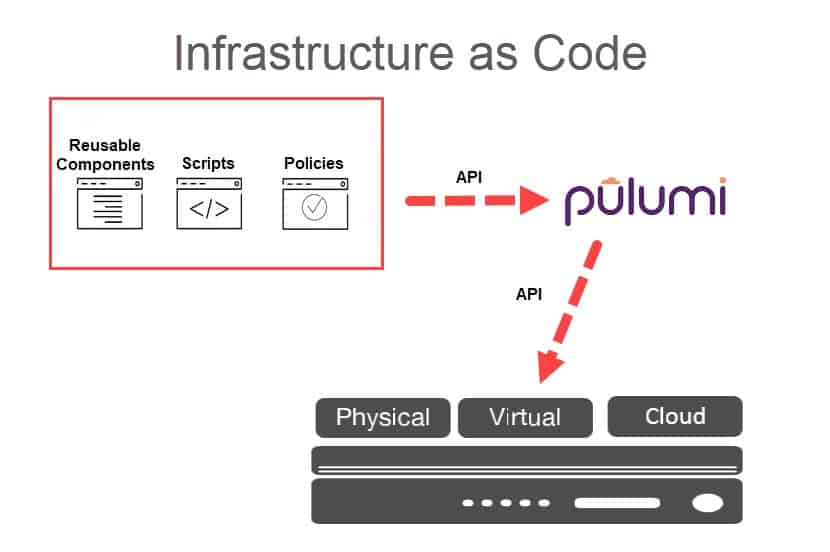

What is Infrastructure as Code (IaC)?

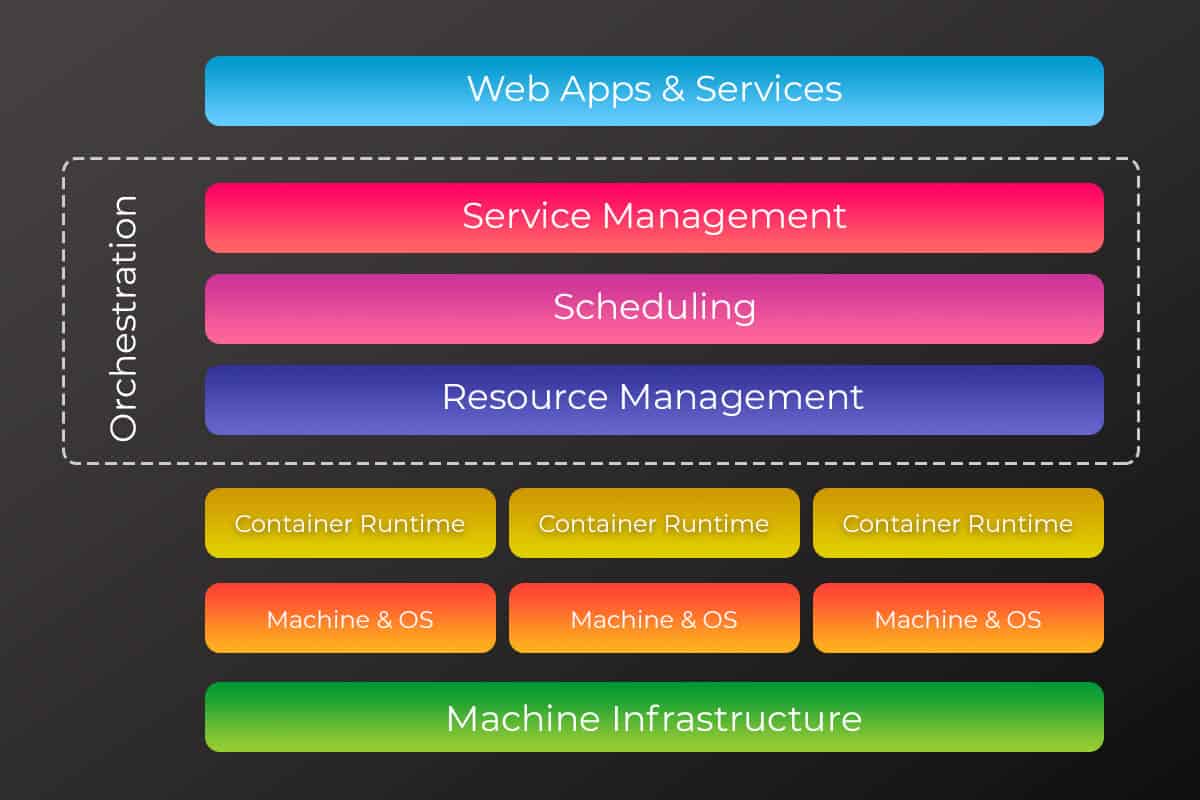

Infrastructure as Code is the process of provisioning and configuring an environment through code instead of manually setting up the required devices and systems. Once code parameters are defined, developers run scripts, and the IaC platform builds the cloud infrastructure automatically.

Such automatic IT setups enable teams to quickly create the desired cloud setting to test and run their software. Infrastructure as Code allows developers to generate any infrastructure component they need, including networks, load balancers, databases, virtual machines, and connection types.

How Infrastructure as Code WorkS

Here is a step-by-step explanation of how creating an IaC environment works:

- A developer defines the configuration parameters in a domain-specific language (DCL).

- The instruction files are sent to a master server, a management API, or a code repository.

- The IaC platform follows the developer’s instructions to create and configure the infrastructure.

With IaC, users don’t need to configure an environment every time they want to develop, test, or deploy software. All infrastructure parameters are saved in the form of files called manifests.

As all code files, manifests are easy to reuse, edit, copy, and share. Manifests make building, testing, staging, and deploying infrastructure quicker and consistent.

Developers codify the configuration files store them in version control. If someone edits a file, pull requests and code review workflows can check the correctness of the changes.

What Issues Does Infrastructure as Code Solve?

Infrastructure as Code solves the three main issues of manual setups:

- High price

- Slow installs

- Environment inconsistencies

High Price

Manually setting up each IT environment is expensive. You need dedicated engineers for setting up the hardware and software. Network and hardware technicians require supervisors, so there is more management overhead.

With Infrastructure as Code, a centrally managed tool sets up an environment. You pay only for the resources you consume, and you can quickly scale up and down your resources.

Slow Installs

To manually set up an infrastructure, engineers first need to rack the servers. They then manually configure the hardware and network to the desired settings. Only then can engineers start to meet the requirements of the operating system and the hosted application.

This process is time-consuming and prone to mistakes. IaC reduces the setup time to minutes and automates the process.

Environment Inconsistencies

Whenever several people are manually deploying configurations, inconsistencies are bound to occur. Over time, it gets difficult to track and reproduce the same environments. These inconsistencies lead to critical differences between development, QA, and production environments. Ultimately, the differences in settings inevitably cause deployment issues.

Infrastructure as Code ensures continuity as environments are provisioned and configured automatically with no room for human error.

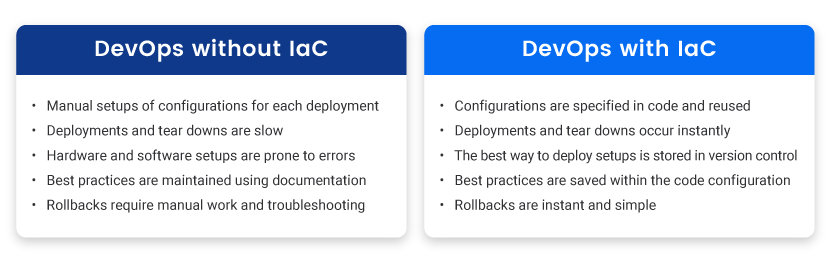

The Role of Infrastructure as Code in DevOps

Infrastructure as Code is essential to DevOps. Agile processes and automation are possible only if there is a readily available IT infrastructure to run and test the code.

With IaC, DevOps teams enjoy better testing, shorter recovery times, and more predictable deployments. These factors are vital for quick-paced software delivery. Uniform IT environments lower the chances of bugs arising in the DevOps pipeline.

The IaC approach has no limitations as DevOps teams provision all aspects of the needed infrastructure. Engineers create servers, deploy operating systems, containers, application configurations, set up data storage, networks, and component integrations.

IaC can also be integrated with CI/CD tools. With the right setup, the code can automatically move app versions from one environment to another for testing purposes.

Benefits of Infrastructure as Code

Here are the benefits an organization gets from Infrastructure as Code:

Speed

With IaC, teams quickly provision and configure infrastructure for development, testing, and production. Quick setups speed up the entire software development lifecycle.

The response rate to customer feedback is also faster. Developers add new features quickly without needing to wait for more resources. Quick turnarounds to user requests improve customer satisfaction.

Standardization

Developers get to rely on system uniformity during the delivery process. There are no configuration drifts, a situation in which different servers develop unique settings due to frequent manual updates. Drifts lead to issues at deployment and security concerns.

IaC prevents configuration drifts by provisioning the same environment every time you run the same manifest.

Reusability

DevOps teams can reuse existing IaC scripts in various environments. There is no need to start from scratch every time you need new infrastructure.

Collaboration

Version control allows multiple people to collaborate on the same environment. Thanks to version control, developers work on different infrastructure sections and roll out changes in a controlled manner.

Efficiency

Infrastructure as Code improves efficiency and productivity across the development lifecycle.

Programmers create sandbox environments to develop in isolation. Operations can quickly provision infrastructure for security tests. QA engineers have perfect copies of the production environments during testing. When it is deployment time, developers push both infrastructure and code to production in one step.

IaC also keeps track of all environment build-up commands in a repository. You can quickly go back to a previous instance or redeploy an environment if you run into a problem.

Lower Cost

IaC reduces the costs of developing software. There is no need to spend resources on setting up environments manually.

Most IaC platforms offer a consumption-based cost structure. You only pay for the resources you are actively using, so there is no unnecessary overhead.

Scalability

IaC makes it easy to add resources to existing infrastructure. Upgrades are provisioned quickly, and with ease, so you can quickly expand during burst periods.

For example, organizations running online services can easily scale up to keep up with user demands.

Disaster Recovery

In the event of a disaster, it is easy to recover large systems quickly with IaC. You just re-run the same manifest, and the system will be back online at a different location if need be.

Infrastructure as Code Best Practices

Use Little to No Documentation

Define specifications and parameters in configuration files. There is no need for additional documentation that gets out of sync with the configurations in use.

Version Control All Configuration Files

Place all your configuration files under source control. Versioning gives flexibility and transparency when managing infrastructure. It also allows you to track, manage, and restore previous manifests.

Constantly Test the Configurations

Test and monitor environments before pushing any changes to production. To save time, consider setting up automated tests to run whenever the configuration code gets modified.

Go Modular

Divide your infrastructure into multiple components and then combine them through automation. IaC segmentation offers many advantages. You control who has access to certain parts of your code. You also limit the number of changes that can be made to manifests.

Infrastructure as Code Tools

IaC tools speed up and automate the provisioning of cloud environments. Most tools also monitor previously created systems and roll back changes to the code.

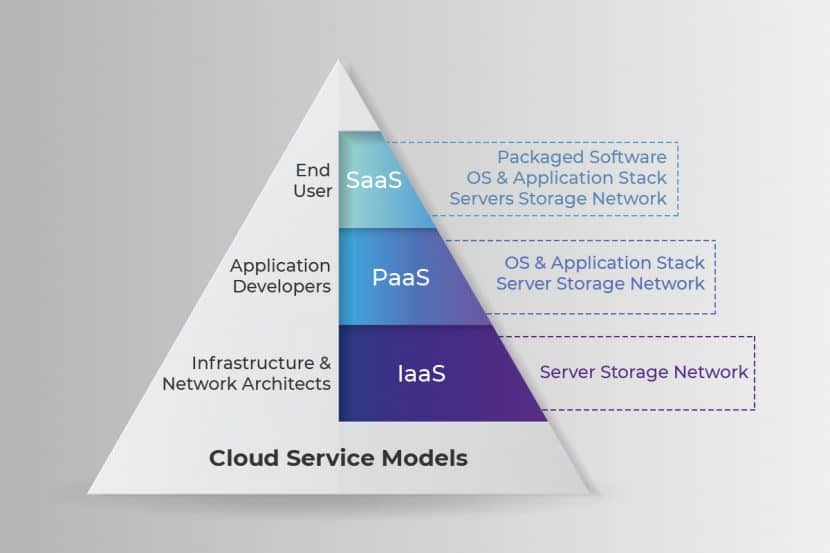

While they vary in terms of features, there are two main types of Infrastructure as Code tools:

- Imperative tools

- Declarative tools

Imperative Approach Tools

Tools with an imperative approach define commands to enable the infrastructure to reach the desired state. Engineers create scripts that provision the infrastructure one step at a time. It is up to the user to determine the optimal deployment process.

The imperative approach is also known as the procedural approach.

When compared to declarative approach tools, imperative IaC requires more manual work. More tasks are required to keep scripts up to date.

Imperative tools are a better fit with system admins who have a background in scripting.

const aws = require("@pulumi/aws");

let size = "t2.micro";

let ami = "ami-0ff8a91507f77f867"

let group = new aws.ec2.SecurityGroup("webserver-secgrp", {

ingress: [

{protocol: "tcp", fromPort: 22, toPort: 22, cidrBlocks: ["0.0.0.0/0"] },

],

});

let server = new aws.ec2.Instance("webserver-www", {

instanceType: size,

securityGroups: [ group.name ],

ami: ami,

});

exports.publicIp = server.publicIp;

exports.publicHostName= server.publicDns;Imperative IaC example (using Pulumi)

Declarative Approach Tools

A declarative approach describes the desired state of the infrastructure without listing the steps to reach that state. The IaC tool processes the requirements and then automatically configures the necessary software.

While no step-by-step instruction is needed, the declarative approach requires a skilled administrator to set up and manage the environment.

Declarative tools are catered towards users with strong programming experience.

resource "aws_instance" "myEC2" {

ami = "ami-0ff8a91507f77f867"

instance_type = "t2.micro"

security_groups = ["sg-1234567"]

}Declarative Infrastructure as Code example (using Terraform)

Popular IaC Tools

The most widely used Infrastructure as Code tools on the market include:

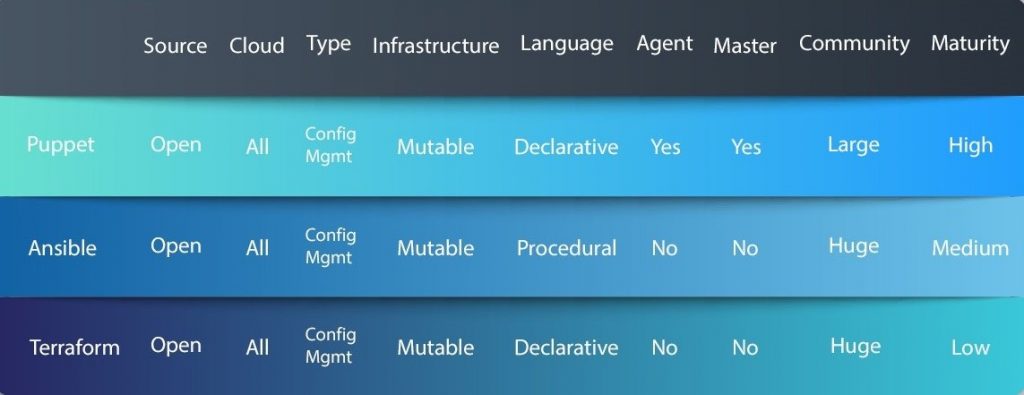

- Terraform: This open-source declarative tool offers pre-written modules that you populate with parameters to build and manage an infrastructure.

- Pulumi: The main advantage of Pulumi is that users can rely on their favorite language to describe the desired infrastructure.

- Puppet: Using Puppet’s Ruby-based DSL, you define the desired state of the infrastructure, and the tool automatically creates the environment.

- Ansible: Ansible enables you to model the infrastructure by describing how the components and systems relate to one another.

- Chef: Chef is the most popular imperative tool on the market. Chef allows users to make “recipes” and “cookbooks” using its Ruby-based DSL. These files specify the exact steps needed to achieve the desired environment.

- SaltStack: What sets SaltStack apart is the simplicity of provisioning and configuring infrastructure components.

Learn more about Pulumi in our article What is Pulumi?.

To see how different options tools stack up, read Ansible vs. Terraform vs. Puppet.

Want to Stay Competitive, IaC is Not Optional

Infrastructure as Code is an effective way to keep up with the rapid pace of current software development. In a time when IT environments must be built, changed, and torn down daily, IaC is a requirement for any team wishing to stay competitive.

PhoenixNAP’s Bare Metal Cloud platform supports API driven provisioning of servers. It’s also fully integrated with Ansible and Terraform, two of the leading Infrastructure as Code tools.

Learn more about Bare Metal Cloud and how it can help propel an organization’s Infrastructure as Code efforts.

Recent Posts

DevOps vs Agile: Differences + Head to Head Comparison

The evolution of software development has three significant milestones. First was introducing the waterfall method that focused on the time required to release a product. Then came the agile methodology which optimized the development life-cycle.

Now, DevOps seeks to unite development and operations to work together as a single team. It increases productivity, improves collaboration, and delivers superior products.

Adopting agile and DevOps practices in software development presents a challenge to many. The first step to overcoming this obstacle is understanding the difference between agile and DevOps and the role these development methodologies play.

Key Differences Between Agile and DevOps

- Agile focuses on cross-functional team communication and deploying constant iterative releases. DevOps focuses on uniting the Development and Operations teams in a continuous development process.

- Agile focuses on embracing mid-project changes. DevOps focuses on the end-to-end software engineering process that embraces constant development and testing.

- Agile advocates making incremental deployments after each sprint. DevOps aims to introduce continuous delivery daily.

- In Agile, teams have similar skill-sets. In DevOps, team members have diverse skill-sets.

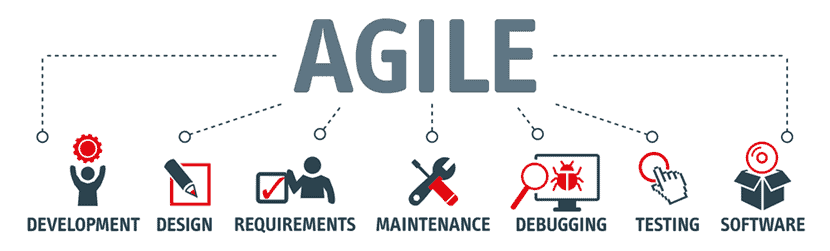

What is Agile?

Agile is a methodology that focuses on continuously delivering small manageable increments of a project through iterative development and testing. It was introduced as an alternative to the traditional waterfall methodology, known for its structured, linear, sequential life-cycle.

Dynamic processes such as project management and software development require the ability to adapt to changes and new conditions. The inflexible waterfall approach couldn’t meet the expectations of the fast-paced world of continuous technological innovation. Thus, agile was born.

Agile provides effective, day-to-day management of complex projects, improving communication and collaboration among team members and customers.

Agile Values

The methodology is defined by the Agile Manifesto, 12 principles that lay the foundation and values of “working agile.”

There are four core values at the heart of agile software development:

Individuals and interactions over processes and tools. The manifesto emphasizes the importance of valuing each team member and fostering a healthy and stimulating work environment. To maximize efficiency, it encourages constant communication between teammates, so everyone is involved in the development process.

Working software over comprehensive documentation. Documentation cannot stand in the way of delivering software. Previously, every project had to start with detailed documentation of the requirements and expectations of the developing software. Agile is focused on embarrassing changes and avoids spending too much time on documentation that will probably get altered later.

Customer collaboration over contract negotiation. Continuous development involves collaborating with the customer regularly. Immediate feedback guides the project in the direction which will eventually give the best results. Negotiating a contract with the customer before development and referring back to it after production leads to potential miscommunication. It should be avoided.

Responding to change over following a plan. Changes made mid-project need to be readily accepted as they can help with the product’s overall success. Adapting to new circumstances and embracing new features is one of the prominent differences between agile and waterfall.

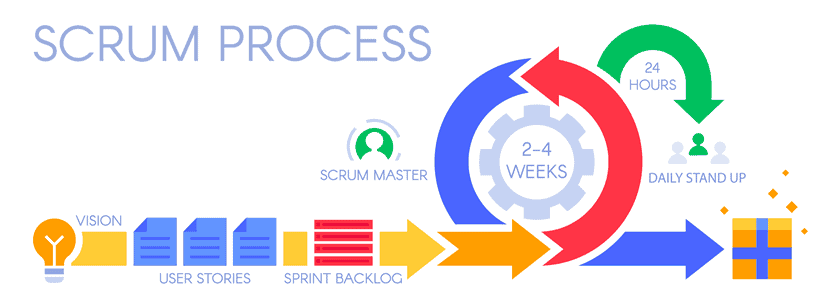

Agile Software Development

Agile software development involves implementing agile frameworks, such as Scrum and Kanban. Each software development life-cycle starts with breaking up the project into manageable stories and requirements. The tasks are organized into sprints. A sprint takes place over two weeks, during which the team works on getting a specific feature up and running.

During the sprint, the team focuses on building, testing, and deploying software, making adjustments along the way. Once they complete a sprint, they move on to the next, until the project is complete. Such a practice allows continuous delivery of software. At the same time, customers, stakeholders, and project managers can follow and give feedback to ensure satisfactory results.

Some development stages can also include automated processes to speed up integration (such as automation testing and code management) and ensure everything is working correctly.

During development, the team collaborates, gives each other feedback, and reviews their work after each sprint, during regular retrospective sessions.

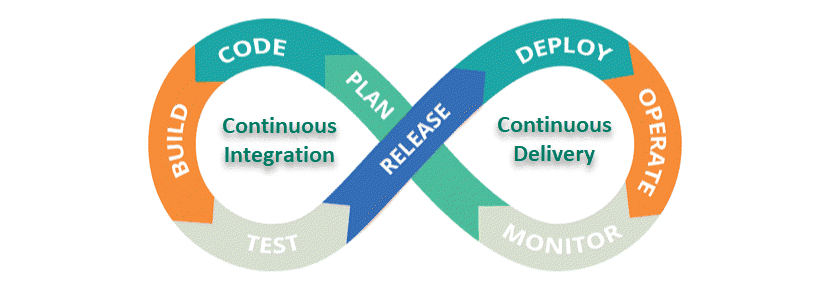

What is DevOps?

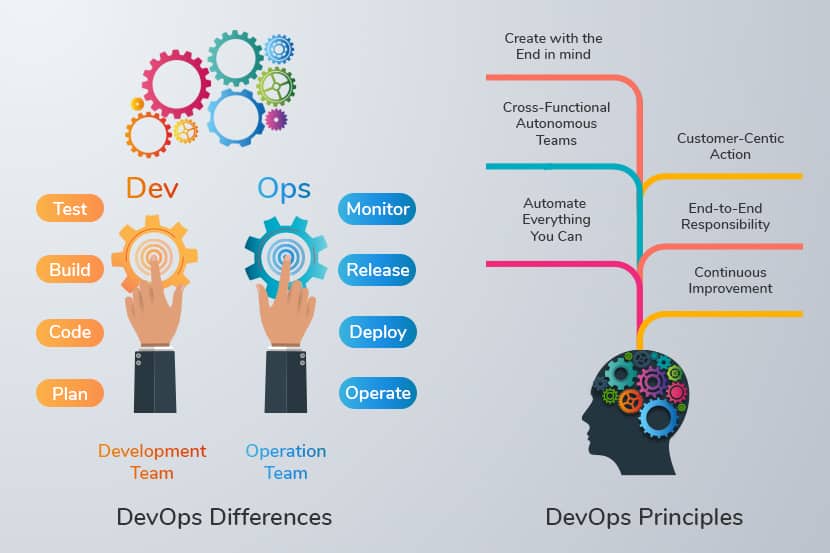

DevOps is a software development culture in which the development team and operations team work together to improve collaboration and productivity. The practice also involves implementing DevOps principles and practices and using a set of DevOps tools for testing.

DevOps principles foster communication, end-to-end responsibility, and information sharing. They define DevOps and set their goals.

Unlike traditional software development, DevOps consists of a continuous cycle of building, testing, deploying, and monitoring software. DevOps’ main objective is to deliver quality software efficiently.

DevOps Principles

More and more companies are transitioning to DevOps. Implementing DevOps has many advantages, such as fast and easily integrated software deployments.

The transition to this new culture is impossible without understanding the fundamental values that drive it. It requires a change of mindset within the development and the operations team, which inspires them to work as a united front.

The following principles are the foundation that steers the engineering process in a DevOps environment:

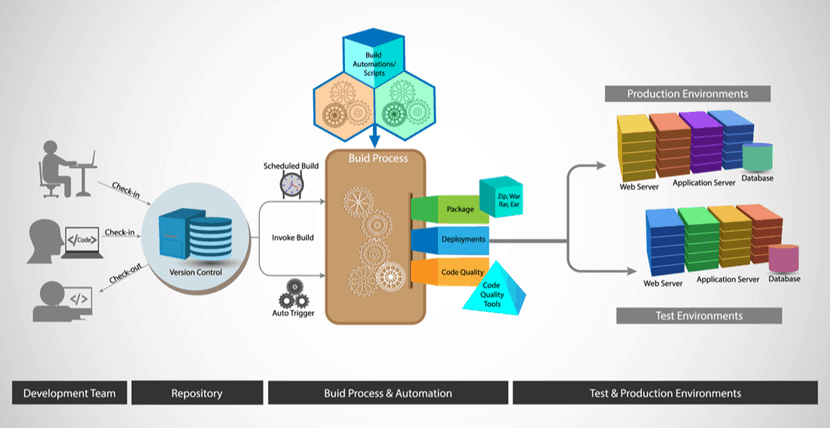

Version Control. Developers submit code changes to a central repository several times a day. Prior to submitting code to the master repository (master branch), all code must be verified. To facilitate collaboration, other developers can track changes.

Continuous Integration. Members of the development team integrate their code in a shared repository, several times a day. Each developer segments the work into small, manageable chunks of code and detects potential merge conflicts and bugs quicker.

Continuous Delivery. As the code is continuously integrated, it is also consistently delivered to the end-user. Smaller contributions allow faster update releases, which is a crucial factor for customer satisfaction.

Continuous Deployment. A big part of DevOps is automating processes to speed up production. Continuous deployment involves automating releases of minor updates that do not pose a substantial threat to the existing architecture.

Continuous Testing. Such a strategy involves testing as much as possible in every step of development. Automated tests give valuable feedback and a risk assessment of the process at hand.

Continuous Operations. The DevOps team is always working on upgrading software with small but frequent releases. That is why DevOps requires constant monitoring of performance. Its main goal is to prevent downtime and availability issues during code release.

Collaboration. One of the main goals of DevOps is to foster collaboration and feedback sharing. Development and Operations need to proactively communicate and share feedback to maintain an efficient DevOps pipeline.

For a detailed overview of DevOps principles and practices, read 9 Key DevOps Principles.

DevOps Software Development

DevOps software development focuses on an established pipeline the project has to pass through. The number of stages depends on the complexity and type of software the team is developing. The key stages include developing, building, testing, and deploying.

A planning stage often precedes all the previously mentioned, and a monitoring stage is also added after deployment.

For more information about DevOps software development stages and how to plan a DevOps pipeline, check out What is DevOps Pipeline & How to Build One.

Agile vs. DevOps

| Agile | DevOps | |

| Basic Philosophy | A culture that focuses on continuously delivering small manageable increments of a project through iterative development and testing. | A practice in which the development and operations team work together is integrated to improve collaboration and productivity. |

| Use | It can be utilized in any department to help manage a complex project. | Focuses on the end-to-end engineering process. |

| Focus | Creating an environment that welcomes mid-project changes to improve quality. | Merging development and operations teams to ensure they practice continual testing and development. |

| Team | Smaller in number, team members work closely together and have similar skill sets. | A wide variety of skill sets inside a larger team which consists of multiple departments. |

| Delivery | Incremental deployments after each sprint (usually a weekly or biweekly period). | The goal is to provide continuous delivery daily (or even every few hours). |

| Documentation | Extremely light documentation to enhance flexibility in the development process. | Sufficient documentation to ensure the teams collaborate well. Emphasizing communication over official documentation. |

| Quality and Risk | The quality of the product increases, while the risk decreases after every sprint. | Production of high-quality products with low risk due to effective collaboration and automated testing. |

| Feedback | Focuses on customer feedback and adjusts the product accordingly. | Encourages internal feedback among teammates to improve and speed up delivery. |

| Tools | Kanboard, JIRA, Active Collab, Bugzilla, Slack, Trello. | TeamCity, AWS, Puppet, OpenStack, Docker, Jenkins, Kubernetes, GitLab. |

Merging Agile and DevOps

There are many advantages to merging agile and DevOps. Including, speeding up delivery, higher user satisfaction, and effective collaboration within a team.

Combining the practices of DevOps with the culture of agile requires changing existing strategies and attitudes.

Understanding. The agile methodology requires team members to understand each other’s tasks. Mutual understanding is especially important for Scrum Masters, Project Managers, and Product Owners. To successfully manage a project, they need to know every step required to deliver the product.

Collaboration. DevOps involves the development team working together with the operations team. The previously mentioned roles now need to understand all aspects of the development process and operations.

DevOps practices in sprints. Next, the team needs to adopt integrating DevOps while handling sprints. That involves including the entire DevOps team (along with QA) in planning, daily standups, and retrospections.

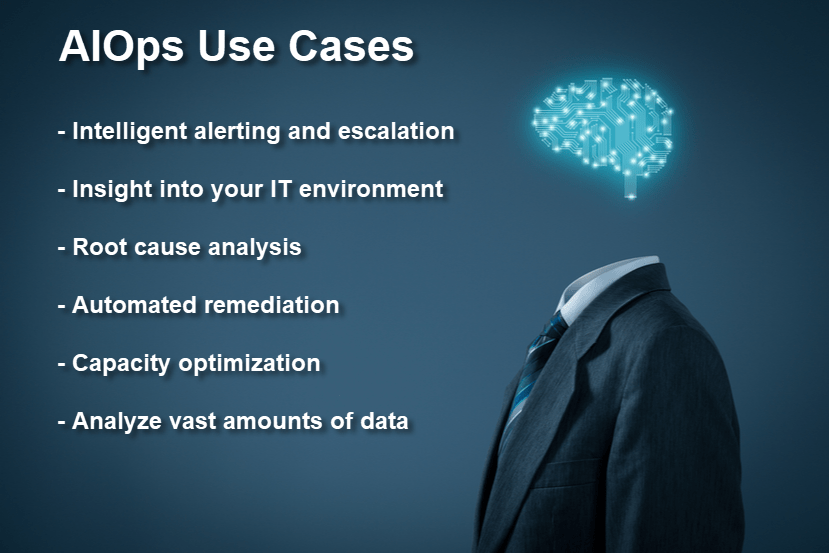

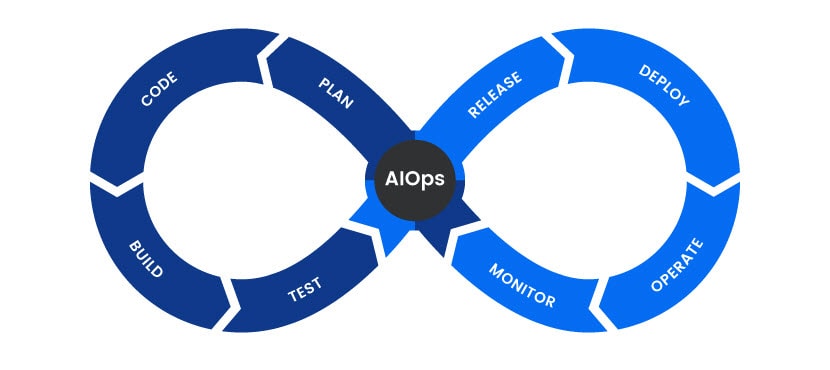

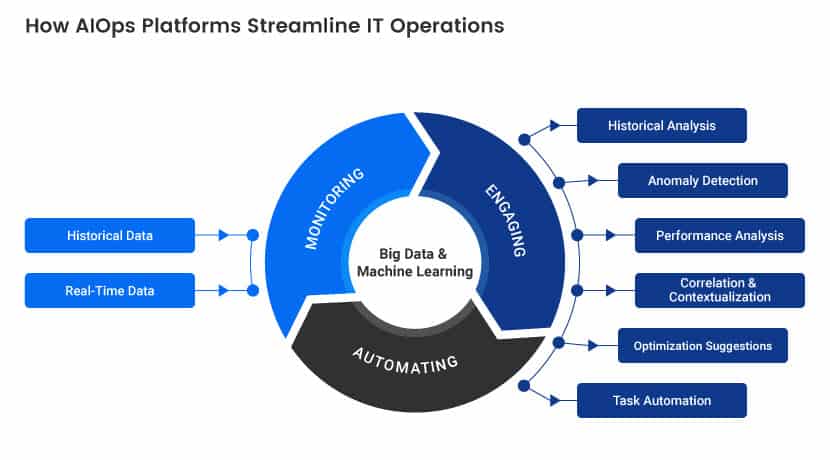

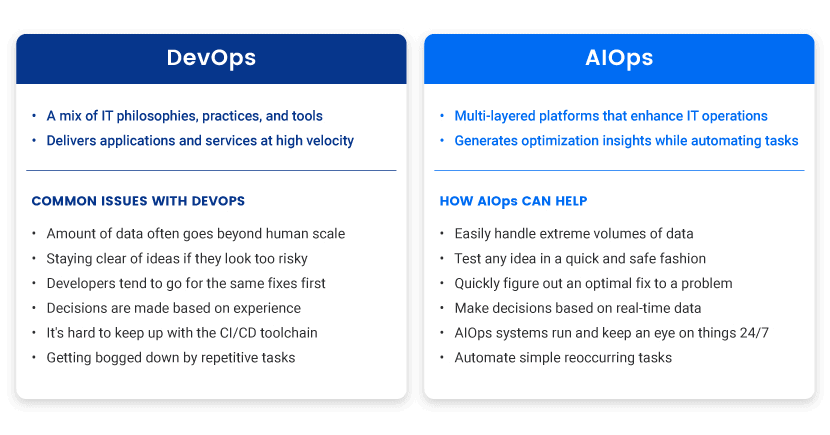

Automate workflows. As automation is an essential part of DevOps development, it should be included in the agile workflow and project planning. Emerging fields, such as AIOps, use artificial intelligence to automate manual workflows in the IT environment.

Measure success. Merging agile and DevOps also involves assessing and measuring key DevOps metrics and KPIs within end-to-end development.

DevOps and Agile Can Work Together

Agile and DevOps both aim towards delivering quality software in a timely manner. The difference between agile and DevOps is that agile focuses on optimizing the development life-cycle, while DevOps unites development and operations in a CI/CD environment.

DevOps and agile are not mutually exclusive. Any organization transitioning to DevOps should not abandon existing agile workflows. DevOps is an extension of agile built around the practices that are not in agile’s focus. When used together, both practices improve software development and lead to better products.

Recent Posts

9 Key DevOps Principles: Practices Your Team Needs to Adopt

DevOps is a set of principles that Development and Operations teams implement to deliver high-quality software in record time.

In Agile, Dev and Ops teams worked separately in silos, which negatively impacted the organization’s overall productivity. DevOps solves that problem by uniting Development and Operations into one group tasked with working together to achieve common objectives.

In this article, learn nine crucial Devops principles and practices and how implementing them can help your organization get the most out of DevOps.

What is DevOps?

DevOps is a software development culture that unites development, operations, and quality assurance processes into a continuous set of actions. It facilitates cross-functional communication, end-to-end responsibility, and collaboration, and is a natural extension of the Agile methodology.

Transitioning to DevOps does not require any technical innovation. It depends on adopting the right DevOps principles, values, and adapting them to your organization’s needs.

DevOps Principles

DevOps is a mindset or a philosophy that encompasses collaboration, communication, sharing, openness, and a holistic approach to software development.

DevOps relies on a comprehensive set of strategies and methodologies. They ensure the timely delivery of quality software. DevOps principles guide how to organize a DevOps environment.

1. Incremental Releases

Before DevOps, teams had to wait their turn to deploy code. A delay in code release often caused bottlenecks or what is known as “merge hell.” When developers have to wait for a prolonged period of time to contribute code, errors, and incompatibility issues are inevitable.

DevOps encourages developers to contribute new code as often as possible, usually many times during the day. In a DevOps environment, a single project is divided into small, manageable chunks, and teams submit their code in increments. That makes it easier to troubleshoot problematic code before it gets released to production. Depending on the workflow, DevOps teams release code updates and bug fixes on a daily, weekly, or monthly basis.

Incremental releases make the development and deployment cycle more flexible. As a result, teams can quickly respond to sudden changes and fix errors and bugs immediately. The primary goal is to prevent bad code from being deployed to the end-user.

2. Automation

One of the critical practices of DevOps is automating as much of the software development process as possible. Automating workflows allows developers to focus solely on writing code and developing new features.

Anything that can be automated should be automated in a DevOps environment. For example, instead of wasting time on manually checking code for errors, DevOps teams use different software solutions to build and test applications automatically. It is as simple as running a single command to compile the source code to determine if it will work in production.

If the application is written in a language that doesn’t need to be compiled, DevOps teams can run automated tests to check if the new code is production-ready. If there are any errors or bugs in the code, automation will trigger an alert letting developers know which lines of code are causing issues.

Read how Artificial Intelligence is automating IT Operations workflows in our article What is AIOps.

Automation also plays a vital role in dealing with infrastructure management procedures.

In a DevOps environment, teams utilize Infrastructure-as-Code (IaC). Infrastructure-automation software, such as Pulumi, helps manage the provisioning and decommissioning of resources. It involves utilizing scripts, APIs, and CLIs to manage infrastructure as code rather than doing everything manually.

The goal is to enable developers to quickly provision anything from containers and virtual machines to bare metal cloud servers, storage, databases, and other infrastructure.

Infrastructure-as-Code (IaC). IaC made it possible for enterprises to deploy hundreds of servers or applications every day and destroy them when they are not needed. By letting automation take care of infrastructure management procedures, teams can focus their efforts on building and deploying software.

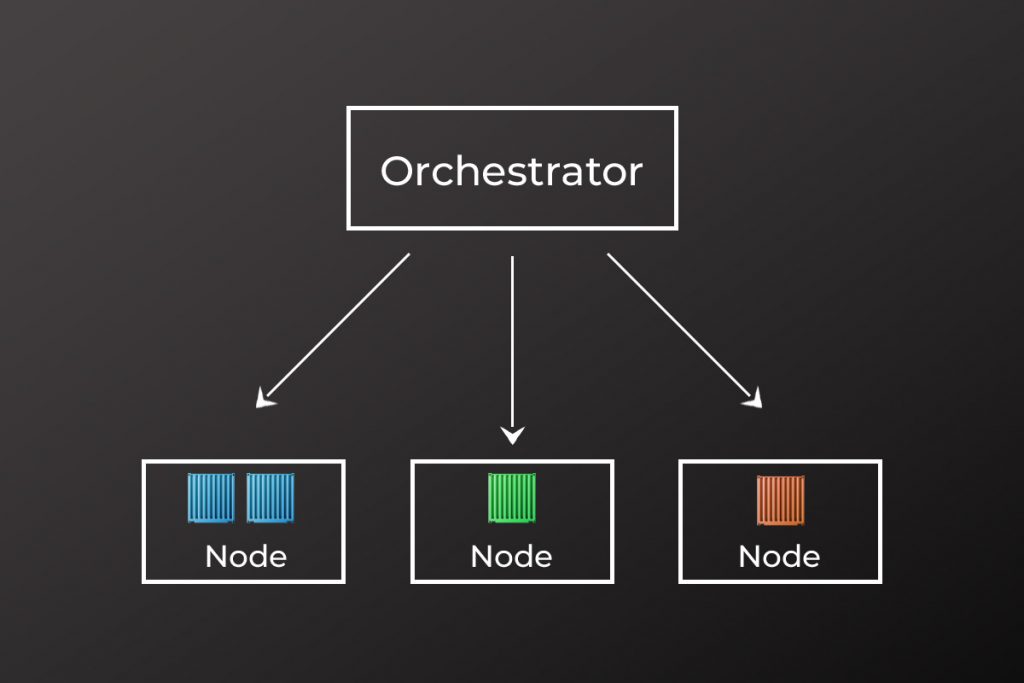

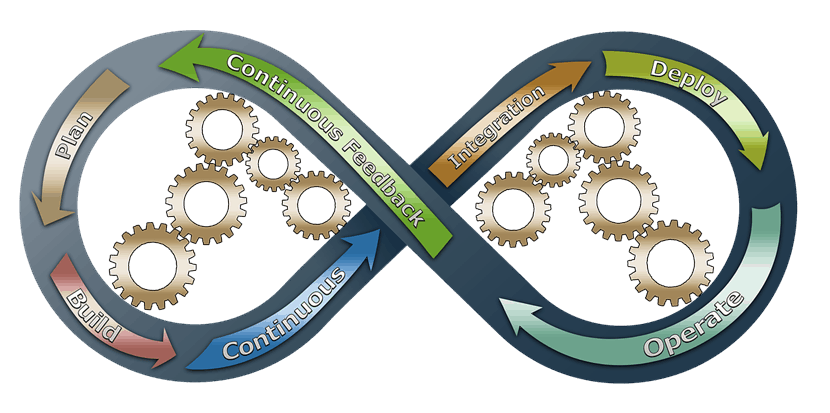

3. DevOps Pipeline

DevOps aims to establish a repeatable system, a loop that facilitates continuity in development. To achieve that, DevOps teams create pipelines.

A pipeline denotes a repeatable system made up of stages through which code has to pass before being deployed to production. A typical DevOps pipeline consists of four primary phases:

- Develop. First, the developers have to write the code.

- Build. Then, the team compiles the code into a build to check for errors.

- Test. After the build phase, operations teams run tests to ensure that the new code will behave as intended in the production environment.

- Deploy. Once the new code has passed the testing phase, it gets deployed to the end-user.

Learn what a DevOps pipeline is and how to create one.

DevOps teams implement critical strategies to achieve a steady flow of code through the pipeline. The most important among them is Continuous Integration (CI) and Continuous Delivery (CD), also known as CI/CD.

Continuous Integration has to do with enabling multiple developers to submit and merge their code regularly, while Continuous Delivery is all about releasing code updates to production as often as possible.

In that regard, “continuous everything” is a DevOps principle that has to do with creating a never-ending development and deployment pipeline.

Along with CI/CD, in professional DevOps environments, teams strive to ensure continuity in every aspect of the pipeline – monitoring, feedback gathering, and deployments.

4. Continuous Integration

Continuous Integration (CI) plays a pivotal role in a DevOps pipeline. It encourages developers to submit their code to a central code repository multiple times a day.

By integrating smaller chunks of code regularly, the likelihood of bad code moving down the pipeline and causing service interruptions is significantly reduced. Some of the largest organizations that implement DevOps practices commit new code hundreds of times a day.

Another critical aspect of Continuous Integration is automated testing. Before developers commit their code to the master branch, they create builds of the project to determine if the new code is compatible with the existing code. If the build is successful, developers will submit their code to the shared repository. CI relies on a version control system, a central code repository that helps teams track code changes and manage merge requests.

5. Continuous Delivery

Continuous Delivery (CD) is all about releasing code updates frequently and fast. CD relies on developers to manually deploy code to production as opposed to Continuous Deployment, which takes an automated approach to code release. For CD to yield positive results, it’s paramount to create a repeatable system that will push the code through the DevOps pipeline.

Developers working in a CD environment need to keep in mind that their code contributions may get deployed to production at any moment. Having passed all tests and reviews, code updates can be released to production with a click of a button.

Numerous benefits come with continuous delivery. First, it eliminates the risk of downtime and other performance issues because code changes are usually minor. Second, CD enables organizations to release high-quality features much easier, ensuring faster time-to-market, and ultimately eliminating fixed costs associated with the deployment process.

Quality Assurance teams set a committed code test using automation testing tools such as UFT, Ranorex, or Selenium. If QA finds vulnerabilities or bugs, they go back to the engineers. This stage features version control to look for any integration problem in advance. The Version Control System allows developers to record the changes in the files for sharing with other team members, regardless of location.

Codes that pass automated tests get integrated into one shared repository. Several code submissions serve to prevent future drastic differences in code branch and mainline code, lengthening integration. Popular continuous integration tools include GitLab CI, Jenkins, TeamCity, and Bamboo.

DevOps tools help engineers deploy a product in increments, such as Puppet, Chef, Google Cloud Deployment Manager, and Azure Resource Manager.

6. Continuous Monitoring

Continuous Monitoring builds on the concepts of CI/CD, and it ensures the application performs without issues. DevOps teams implement monitoring technologies and techniques to keep track of how the application is behaving.

DevOps teams monitor logs, apps, systems, and infrastructure. Once a problem is detected, DevOps teams can quickly revert the app to a previous state. During that time, the team works on resolving known issues without making the user aware that the code is updated continuously.

Continuous monitoring also helps DevOps teams detect issues that are hindering productivity in the pipeline. After each release cycle, teams should optimize the CI/CD pipeline to eliminate any bottlenecks and ensure a smooth transition of code from one stage to the next.

Peter Borg, SCRUM Master at phoenixNAP, shared his experience on transitioning to DevOps.

7. Feedback Sharing

DevOps thrives on feedback. Developers need actionable information from different sources to help them improve the overall quality of the application. Without feedback, DevOps teams can fall victim to spending time on building products that don’t bring value to stakeholders or customers.

DevOps teams usually gather feedback from stakeholders, end-users, and software-based monitoring technologies before, during, and after the release cycle. They collect feedback through various channels, such as social media and surveys, or by discussing with colleagues.

Teams need to have procedures that will help them sift through the feedback they’ve gathered to extract the most valuable information. Misleading or inaccurate feedback could prove to be detrimental to the entire development process.

8. Version Control

Version control, also known as source control, lies at the heart of every successful DevOps workflow. It helps DevOps teams stay organized, focused, and up to date with what members of the team are doing. Version control is also crucial for ensuring teams collaborate faster and easier to support frequent software releases.

In a nutshell, version control is a central code repository — a place where developers contribute their code and track changes during the development process. Most version control systems allow team members to create branches of the main project. Branches are copies of the project’s source code that individual developers can work on without modifying the original code.

Get started with Git, a popular version control tool, in no time by downloading our free Git Commands Cheat Sheet.

In a typical scenario, each developer works on a separate branch of the same project, submitting code updates and running automated tests. Before the newly written code gets merged with the master branch, automation will create a build of the application that will ensure the new code is compatible with the existing code.

If the build is successful, developers merge the new code with the master branch and deploy it to production or run other tests, depending on the workflow.

With a robust version control system in place, DevOps teams can be confident that only error-free code is moving down the pipeline and getting deployed to production.

9. Collaboration

The main idea behind DevOps is to establish trust among developers and operations. Dev and Ops teams need to communicate, share feedback, and collaborate throughout the entire development and deployment cycle.

In such a setting, both groups are responsible for ensuring the application delivers on its promises. That requires continuously optimizing and improving the performance, costs, and delivery of services while keeping users satisfied.

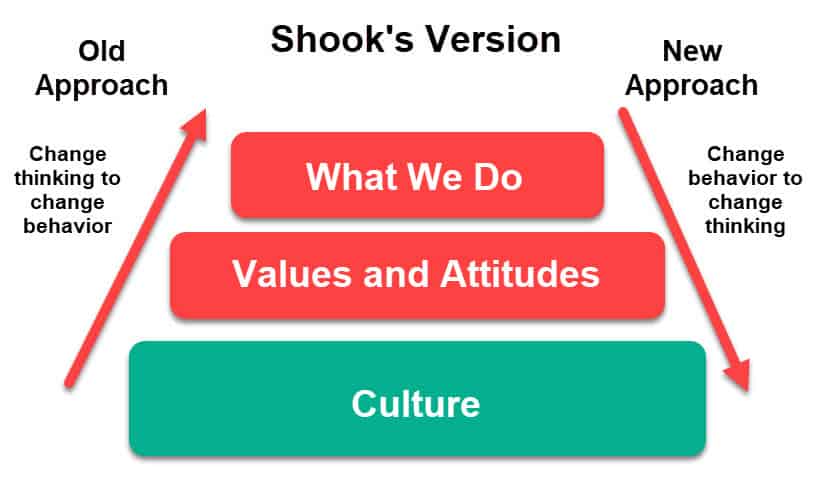

Creating this type of inclusive, collaborative environment also involves accepting a cultural shift within the company. To execute DevOps successfully, it’s crucial to have the stakeholders and DevOps teams be on the same page, working together to deliver software solutions that bring real value to the company and its customers. DevOps requires the entire company to behave like a startup, continuously adjusting to market changes, and investing time and resources in features that attract more customers.

Remember, a Devops Culture Change Requires a Unified Team

DevOps is just another buzzword without the proper implementation of certain principles that sit at the heart of DevOps.

DevOps revolves around specific technologies that help teams get the job done. However, DevOps is, first and foremost, a culture. Building a devops culture requires an organization to work as a unified team, from Development and Operations to stakeholders and management. That is what sets DevOps apart from other development models.

When transitioning to DevOps, remember that these principles are not set in stone. Organizations should implement DevOps methodologies based on their goals, workflows, resources, and the team’s skill set.

Recent Posts

What is Hadoop? Hadoop Big Data Processing

The evolution of big data has produced new challenges that needed new solutions. As never before in history, servers need to process, sort and store vast amounts of data in real-time.

This challenge has led to the emergence of new platforms, such as Apache Hadoop, which can handle large datasets with ease.

In this article, you will learn what Hadoop is, what are its main components, and how Apache Hadoop helps in processing big data.

What is Hadoop?

The Apache Hadoop software library is an open-source framework that allows you to efficiently manage and process big data in a distributed computing environment.

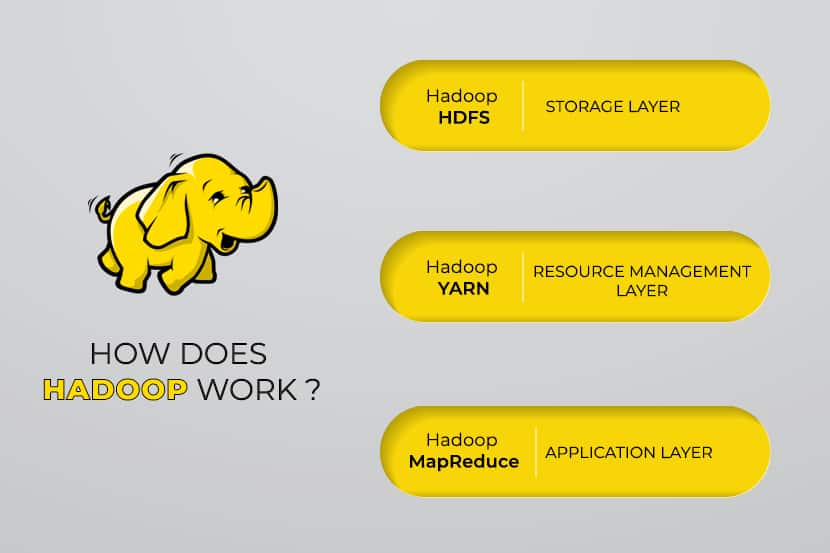

Apache Hadoop consists of four main modules:

Hadoop Distributed File System (HDFS)

Data resides in Hadoop’s Distributed File System, which is similar to that of a local file system on a typical computer. HDFS provides better data throughput when compared to traditional file systems.

Furthermore, HDFS provides excellent scalability. You can scale from a single machine to thousands with ease and on commodity hardware.

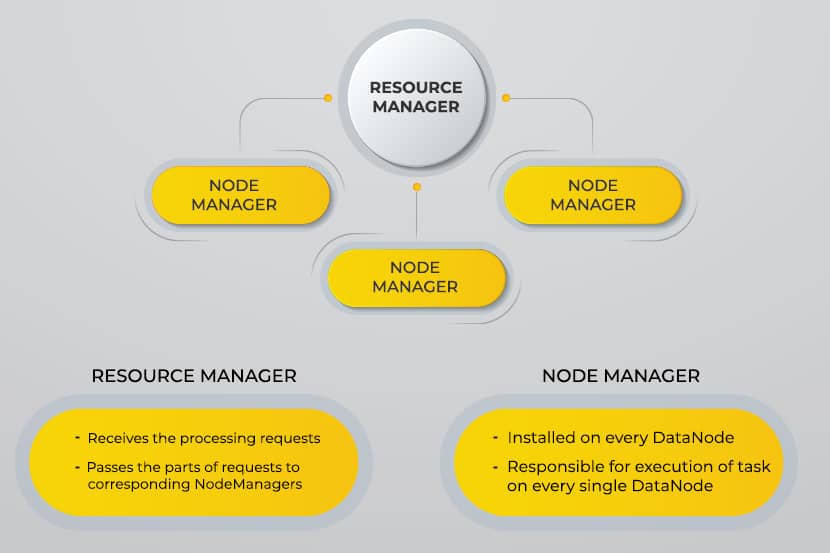

Yet Another Resource Negotiator (YARN)

YARN facilitates scheduled tasks, whole managing, and monitoring cluster nodes and other resources.

MapReduce

The Hadoop MapReduce module helps programs to perform parallel data computation. The Map task of MapReduce converts the input data into key-value pairs. Reduce tasks consume the input, aggregate it, and produce the result.

Hadoop Common

Hadoop Common uses standard Java libraries across every module.

To learn how Hadoop components interact with one another, read our article that explains Apache Hadoop Architecture.

Why Was Hadoop Developed?

The World Wide Web grew exponentially during the last decade, and it now consists of billions of pages. Searching for information online became difficult due to its significant quantity. This data became big data, and it consists of two main problems:

- Difficulty in storing all this data in an efficient and easy-to-retrieve manner

- Difficulty in processing the stored data

Developers worked on many open-source projects to return web search results faster and more efficiently by addressing the above problems. Their solution was to distribute data and calculations across a cluster of servers to achieve simultaneous processing.

Eventually, Hadoop came to be a solution to these problems and brought along many other benefits, including the reduction of server deployment cost.

How Does Hadoop Big Data Processing Work?

Using Hadoop, we utilize the storage and processing capacity of clusters and implement distributed processing for big data. Essentially, Hadoop provides a foundation on which you build other applications to process big data.

Applications that collect data in different formats store them in the Hadoop cluster via Hadoop’s API, which connects to the NameNode. The NameNode captures the structure of the file directory and the placement of “chunks” for each file created. Hadoop replicates these chunks across DataNodes for parallel processing.

MapReduce performs data querying. It maps out all DataNodes and reduces the tasks related to the data in HDFS. The name, “MapReduce” itself describes what it does. Map tasks run on every node for the supplied input files, while reducers run to link the data and organize the final output.

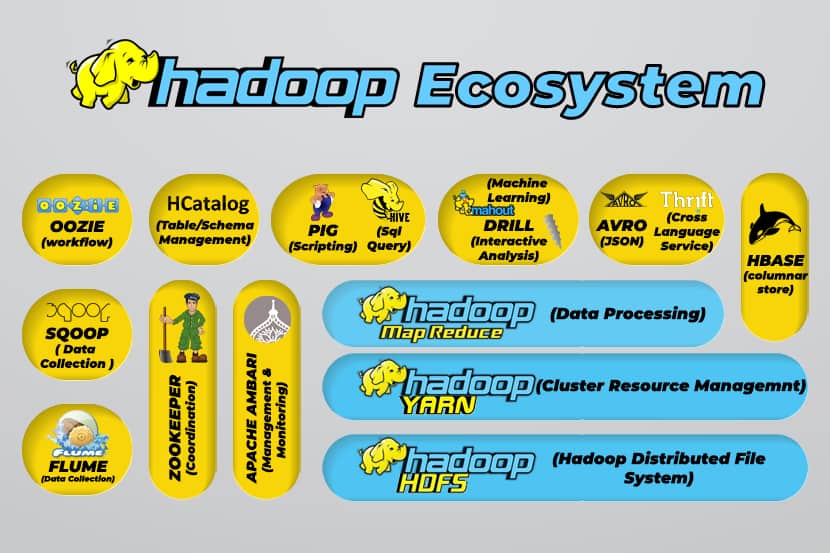

Hadoop Big Data Tools

Hadoop’s ecosystem supports a variety of open-source big data tools. These tools complement Hadoop’s core components and enhance its ability to process big data.

The most useful big data processing tools include:

- Apache Hive

Apache Hive is a data warehouse for processing large sets of data stored in Hadoop’s file system.

- Apache Zookeeper

Apache Zookeeper automates failovers and reduces the impact of a failed NameNode.

- Apache HBase

Apache HBase is an open-source non-relation database for Hadoop.

- Apache Flume

Apache Flume is a distributed service for data streaming large amounts of log data.

- Apache Sqoop

Apache Sqoop is a command-line tool for migrating data between Hadoop and relational databases.

- Apache Pig

Apache Pig is Apache’s development platform for developing jobs that run on Hadoop. The software language in use is Pig Latin.

- Apache Oozie

Apache Oozie is a scheduling system that facilitates the management of Hadoop jobs.

- Apache HCatalog

Apache HCatalog is a storage and table management tool for sorting data from different data processing tools.

If you are interested in Hadoop, you may also be interested in Apache Spark. Learn the differences between Hadoop and Spark and their individual use cases.

Advantages of Hadoop

Hadoop is a robust solution for big data processing and is an essential tool for businesses that deal with big data.

The major features and advantages of Hadoop are detailed below:

- Faster storage and processing of vast amounts of data

The amount of data to be stored increased dramatically with the arrival of social media and the Internet of Things (IoT). Storage and processing of these datasets are critical to the businesses that own them.

- Flexibility

Hadoop’s flexibility allows you to save unstructured data types such as text, symbols, images, and videos. In traditional relational databases like RDBMS, you will need to process the data before storing it. However, with Hadoop, preprocessing data is not necessary as you can store data as it is and decide how to process it later. In other words, it behaves as a NoSQL database.

- Processing power

Hadoop processes big data through a distributed computing model. Its efficient use of processing power makes it both fast and efficient.

- Reduced cost

Many teams abandoned their projects before the arrival of frameworks like Hadoop, due to the high costs they incurred. Hadoop is an open-source framework, it is free to use, and it uses cheap commodity hardware to store data.

- Scalability

Hadoop allows you to quickly scale your system without much administration, just by merely changing the number of nodes in a cluster.

- Fault tolerance

One of the many advantages of using a distributed data model is its ability to tolerate failures. Hadoop does not depend on hardware to maintain availability. If a device fails, the system automatically redirects the task to another device. Fault tolerance is possible because redundant data is maintained by saving multiple copies of data across the cluster. In other words, high availability is maintained at the software layer.

The Three Main Use Cases

Processing big data