Orchestration vs Automation: What You Need to Know

Netflix, Amazon, and Facebook are all pioneers and innovators of Cloud computing and technology.

Orchestration

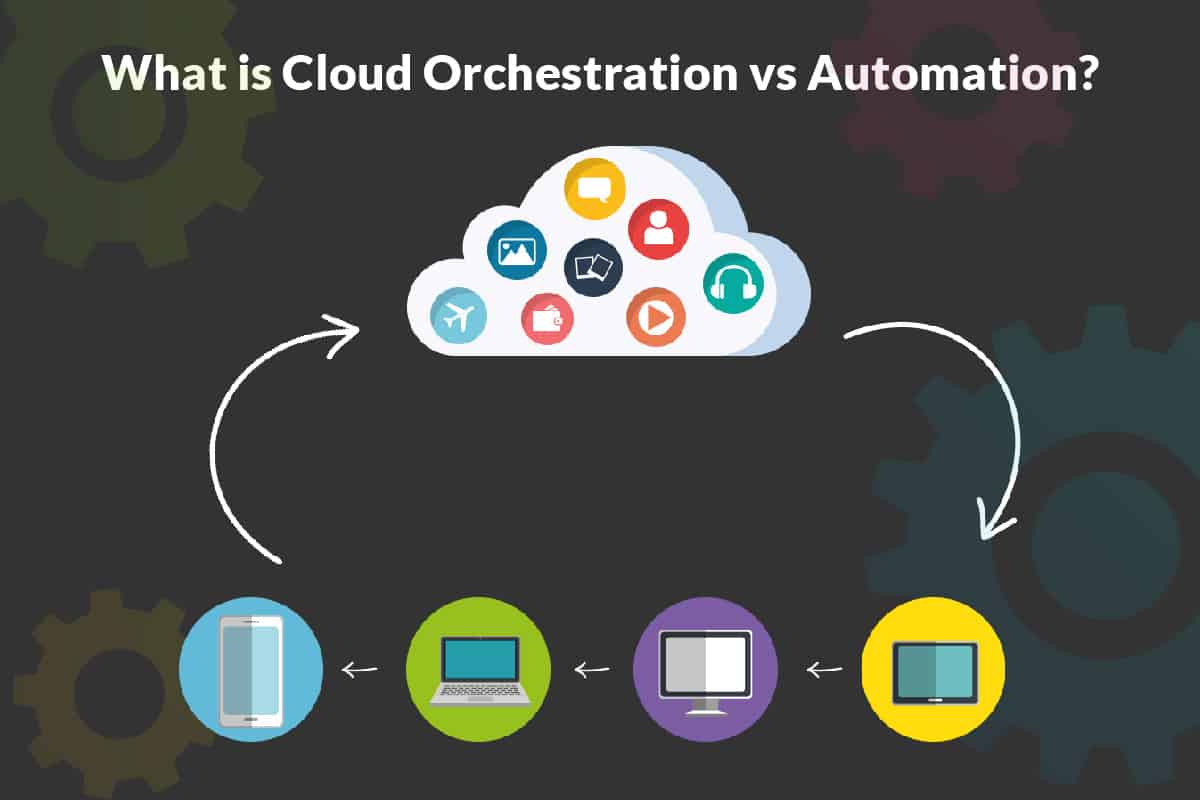

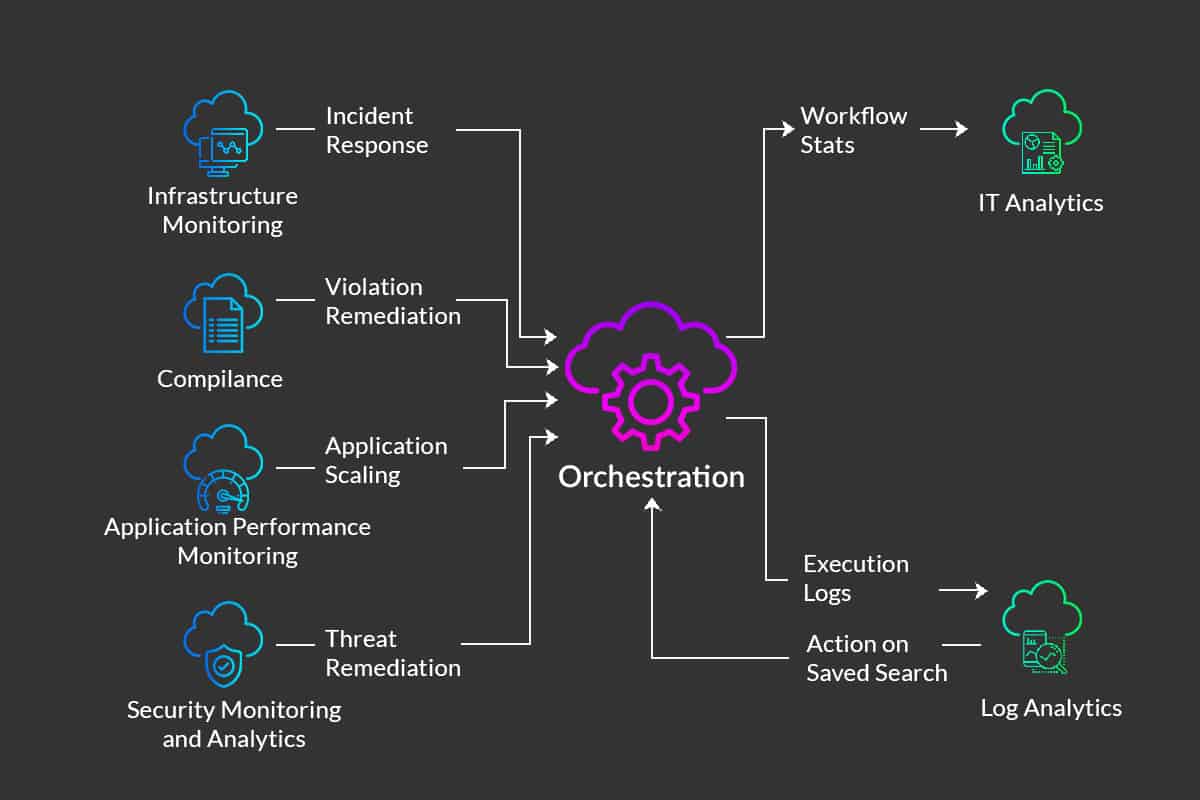

Orchestration is an integral part of their services and complex business processes. Orchestration is the mechanism that organizes components and multiple tasks to run applications seamlessly.

The greater the workload, the higher the need to efficiently manage these processes and avoid downtime. None of these big industry players can afford to be offline. It’s why orchestration has become central to their IT strategies and applications, which require automatic execution of massive workflows.

Orchestration minimizes redundancies and streamlines repetitive processes. It ensures quicker and more precise deployment of software and updates. By offering a secure solution that is both flexible and scalable, it gives enterprises the freedom to adapt and evolve rapidly.

Shorter turnaround times from app development to market mean more profit and success, and they allow businesses to keep pace with evolving technological demands.

Hand in hand with orchestration, enterprises use automation to reduce the degree of manual work required to run an application. Automation refers to single repeatable tasks or processes that can be automated.

Automation

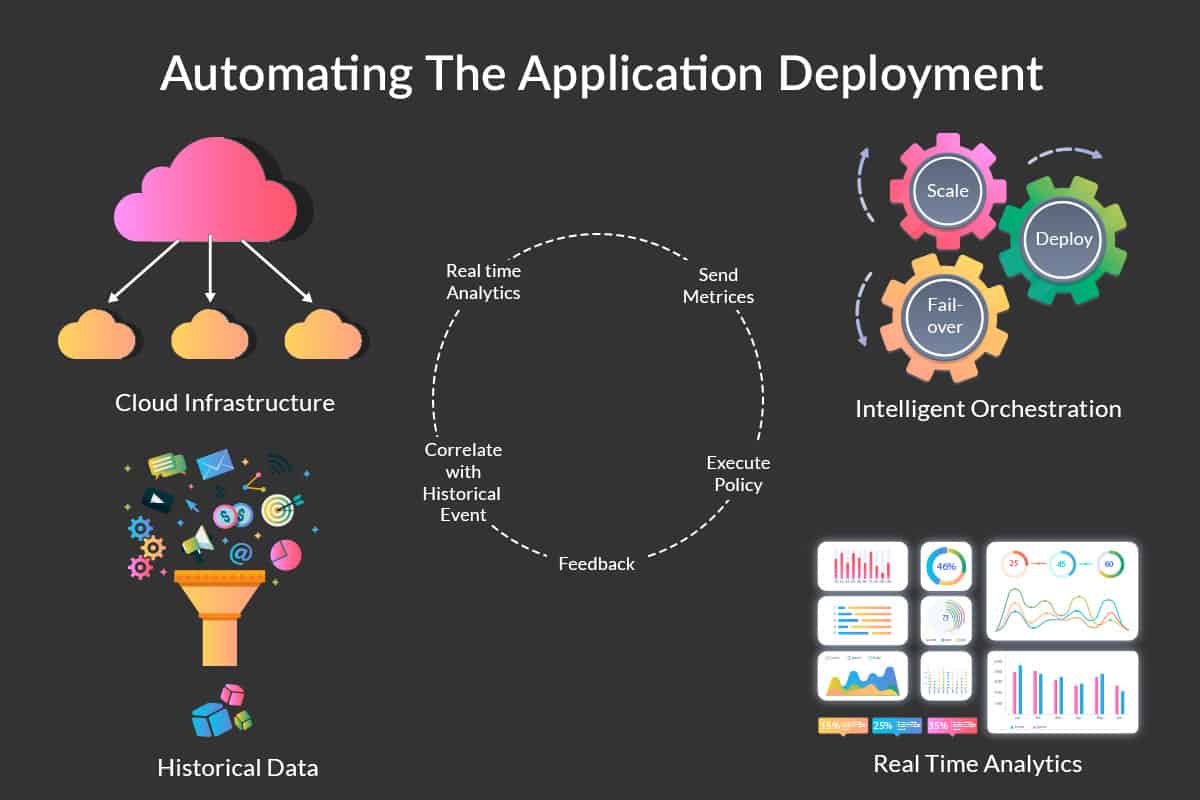

Automation allows enterprises to gain and maintain speed and efficiency via software automation tools that regulate functionalities and workloads in the cloud. Orchestration takes advantage of automation and executes large workflows systematically. It does so by managing multiple sophisticated automated processes and coordinating them across separate teams and functions.

In the cloud, orchestration not only deploys an application but also connects it to the network to enable communication between users and other apps. It ensures that auto-scaling is initiated in the right order and that the correct permissions and security rules are applied. Automation makes orchestration easier to execute.

To put it simply, automation specifies a single task. Orchestration arranges multiple tasks to optimize a workflow. To understand orchestration vs. automation in more detail, we will take a look at each operation.
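
To make the distinction concrete, here is a minimal Python sketch rather than a production tool. Each function stands in for one automated task, and `orchestrate` arranges those tasks into a workflow by running them in dependency order. The task names and the dependency map are hypothetical, chosen only to mirror the deployment, networking, permissions, and auto-scaling steps mentioned above.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Automation: each function is one single, repeatable task (hypothetical names).
def provision_server(log): log.append("provision_server")
def configure_network(log): log.append("configure_network")
def apply_permissions(log): log.append("apply_permissions")
def deploy_app(log): log.append("deploy_app")
def enable_autoscaling(log): log.append("enable_autoscaling")

TASKS = {
    "provision_server": provision_server,
    "configure_network": configure_network,
    "apply_permissions": apply_permissions,
    "deploy_app": deploy_app,
    "enable_autoscaling": enable_autoscaling,
}

# Orchestration: which tasks must finish before each task may start.
DEPENDS_ON = {
    "provision_server": set(),
    "configure_network": {"provision_server"},
    "apply_permissions": {"provision_server"},
    "deploy_app": {"configure_network", "apply_permissions"},
    "enable_autoscaling": {"deploy_app"},
}

def orchestrate(tasks, depends_on):
    """Run every task once, in an order that respects the dependencies."""
    log = []
    for name in TopologicalSorter(depends_on).static_order():
        tasks[name](log)
    return log

if __name__ == "__main__":
    print(orchestrate(TASKS, DEPENDS_ON))
```

The individual functions know nothing about each other; only the dependency map encodes the workflow, which is the part that orchestration adds on top of automation.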

What is Cloud Orchestration?

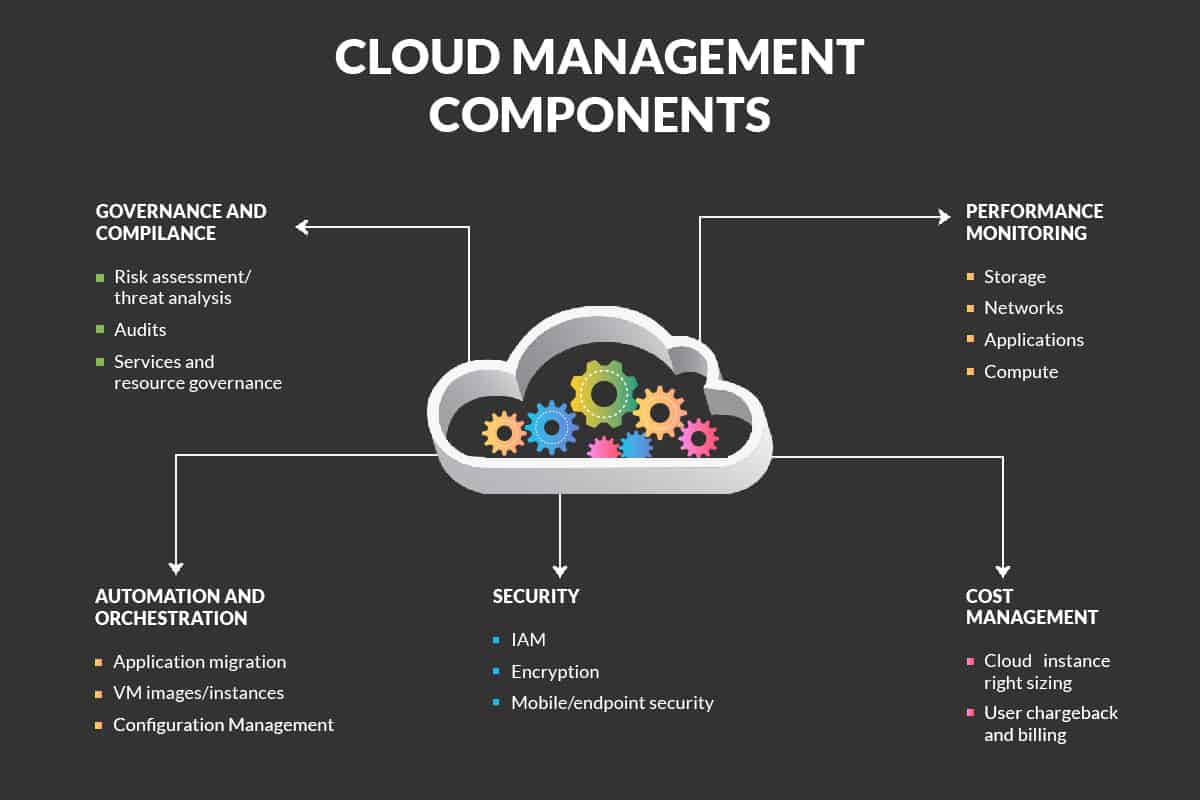

Cloud orchestration refers to the process of creating an automation environment across a particular enterprise. It facilitates the coordination of teams, cloud services, functions, compliance, and security activities. It eliminates the chances of making costly mistakes by creating an entirely automated, repeatable, and end-to-end environment for all processes.

It’s the underlying framework across services and clouds that joins all automation routines. Orchestration, when combined with automation, makes cloud services run more efficiently as all automated routines become perfectly timed and coordinated.

Through software abstractions over the underlying infrastructure, cloud orchestration can coordinate multiple systems located at various sites. Modern IT teams that are responsible for managing hundreds of applications and servers require orchestration. It facilitates the delivery of dynamically scaling applications and cloud systems. In doing so, it alleviates the need for staff to run processes manually. Automation is tactical, and orchestration is the strategy.

What is Cloud Automation?

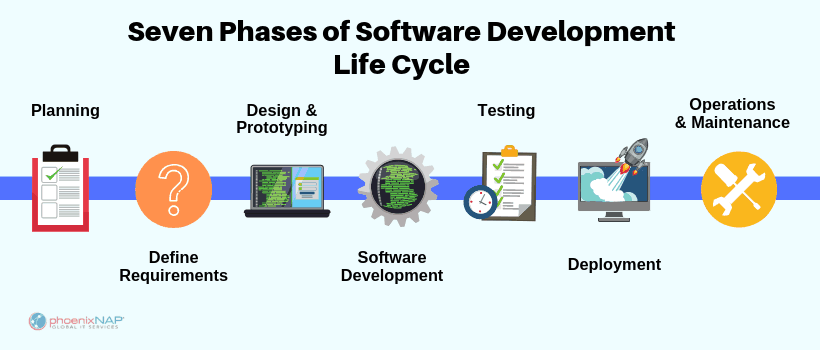

In terms of software development life cycle, the process of cloud automation is best described as a connector or several connectors which provide a data flow when triggered. Automation is typically performed to achieve or complete a task between separate functions or systems, without any manual intervention.

Cloud automation leverages the potential of cloud technology to automate processes across the entire cloud infrastructure. The result is an automated, frictionless software delivery pipeline that reduces the chance of human error and removes manual roadblocks. It automates complex tasks that developers or operations teams would otherwise have to perform by hand. Automation makes maintaining a system efficient by handling multiple repetitive manual processes simultaneously. It’s like having your workflow run on railway tracks.

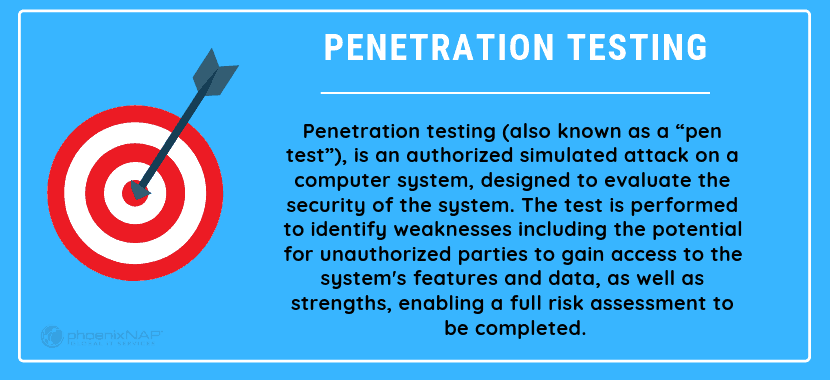

By limiting errors in the system, automation prevents setbacks that would reduce the system’s availability and degrade its performance. This lessens the possibility of breaches of sensitive or critical information. In turn, the system is more reliable and transparent. Cloud automation supports the twelve principles of agile development and the DevOps methodologies that enable scalability, rapid resource deployment, and continuous integration and delivery.

The most common use cases are:

- Workload management to allocate resources.

- Workflow version control to monitor changes.

- Establishing an Infrastructure as Code (IaC) environment, which streamlines system resources.

- Regulating data backups and acting as a data loss prevention tool.

- Acting as an integral part of a hybrid cloud system, cohesively tying together disparate elements such as applications on public and private clouds.

Automation is implemented via orchestration tools, which record deployment processes and their management routines. Automation tools then execute those procedures.

Importance of Cloud Orchestration Tools

The microservices architecture is prevalent in the modern IT landscape, with automation playing an essential part.

Repetitive jobs, which include deploying applications and managing application lifecycles, require tools that can handle complex job dependencies. Cloud orchestration currently facilitates enterprise-level security policies, flexible monitoring, and visualization, among other tasks.

Installing a cloud orchestrator at an enterprise level means that certain conditions have to be met beforehand to ensure success, such as:

- Minimizing human errors by handling the setup and execution of automation tasks automatically.

- Reducing human intervention when it comes to managing automation tasks by using orchestration tools.

- Ensuring proper permissions are provided to users to prevent unauthorized access to the automation system.

- Simplifying the process of setting up new data integration by managing governing policies around it.

- Providing generalizable infrastructure to remove the need for building any ad hoc tools.

- Providing comprehensive diagnostic support, which results in fast debugging and auditing.

Comparing Cloud Orchestration with Cloud Automation

Both cloud automation and cloud orchestration are used widely in the modern IT industry. There are some fundamental differences between the two, which are explained briefly below.

| Points of Difference | Cloud Automation | Cloud Orchestration |
| --- | --- | --- |
| Concept | Cloud automation refers to tasks or functions which are accomplished without any human intervention in a cloud environment. | Cloud orchestration relates to the arranging and coordination of tasks that are automated. The main aim is to create a consolidated workflow or process. |
| Nature of Tools | Cloud automation tools and the activities related to them occur in a particular order using certain groups or tools. They are also required to be granted permissions and given roles. | Cloud orchestration tools can enumerate the various resources, IAM roles, instance types, etc., configure them, and ensure that there is a degree of interoperability between those resources. This is true regardless of whether the tools are native to the IaaS platform or belong to a third party. |
| Role of Personnel | Engineers are required to complete a myriad of manual tasks to deliver a new environment. | It requires less intervention from personnel. |
| Policy Decisions | Cloud automation does not typically implement any policy decisions which fall outside of OS-level ACLs. | Cloud orchestration handles all permissions and security of automation tasks. |
| Resources Used | It uses minimal resources outside of the assigned specific task. | Ensures that cloud resources are efficiently utilized. |
| Monitoring and Alerting | Cloud automation can send data to third-party reporting services. | Cloud orchestration only involves monitoring and alerting for its workflows. |

Benefits of Orchestration and Automation

Many organizations have shifted towards cloud orchestration tools to simplify the process of deployment and management. The benefits provided by cloud orchestration are many, with the major ones outlined below.

Simplified optimization

Under cloud orchestration, individual tasks are bundled together into a larger, more optimized workflow. This process is different from standard cloud automation, which handles these individual tasks one at a time. For instance, an application that utilizes cloud orchestration might have its storage, servers, databases, and networking all provisioned automatically as one workflow.

Automation is unified

Cloud administrators usually have a portion of their processes automated. That is different from the fully unified automation platform that cloud orchestration provides. Cloud orchestration centralizes the entire automation process under one roof. This, in turn, makes the whole process faster and more cost-effective, and makes it easier to change and expand the automated services later if required.

Forces best practices

Cloud orchestration processes cannot be fully implemented without cleaning up any existing processes that do not adhere to best practices. As automation is easier to achieve with properly organized cloud resources, any existing cloud procedures need to be evaluated and cleaned up if necessary. There are several good practices associated with cloud orchestration that are adopted by organizations. These include pre-built templates for deployment, structured IP addressing, and baked-in security.

Self-service portal

Cloud orchestration provides self-service portals that are favored by infrastructure administrators and developers alike. It gives developers the freedom to select the cloud services they desire via an easy-to-use web portal. This setup removes the need for infrastructure admins to be involved at every step of the deployment process.

Visibility and control are improved

VM sprawl, which refers to the point where a system administrator can no longer effectively manage a high number of virtual machines, is a common occurrence for many organizations. If left unattended, it can waste financial resources and complicate the management of a cloud platform. Cloud orchestration tools can be used to automatically monitor VM instances as they are created, which reduces the number of staff hours required for managing the cloud.
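
As a rough illustration of the kind of check an orchestration tool might automate, the sketch below flags virtual machines that have not been used for a while. The VM names, the last-used timestamps, and the 30-day threshold are all assumptions made for this example; a real tool would draw on the cloud provider's own metrics.

```python
from datetime import datetime, timedelta

# Hypothetical inventory: VM name -> time the VM was last actively used.
INVENTORY = {
    "build-01": datetime(2024, 1, 30),
    "test-07": datetime(2023, 11, 1),
    "demo-02": datetime(2023, 12, 31),
}

def find_idle_vms(vms, now, max_idle=timedelta(days=30)):
    """Return the names of VMs idle for longer than max_idle, sorted by name."""
    return sorted(name for name, last_used in vms.items()
                  if now - last_used > max_idle)

if __name__ == "__main__":
    # Candidates for review or shutdown as of 31 January 2024.
    print(find_idle_vms(INVENTORY, now=datetime(2024, 1, 31)))
```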

Long term cost savings

By properly implementing cloud orchestration, an organization can reduce and improve its cloud service footprint, reducing the need for infrastructure staffing significantly.

Automated chargeback calculation

For companies that offer self-service portals to their different departments, cloud orchestration features such as metering and chargeback tools can keep close track of the cloud resources each department uses.
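
The arithmetic behind a chargeback report is simple: multiply each metered usage record by a rate and total the result per department. The sketch below shows one way to do that; the resource types, hourly rates, and usage records are invented for illustration and do not come from any real price list.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical hourly rates per resource type (currency units per hour).
RATES = {
    "vm.small": Decimal("0.05"),
    "vm.large": Decimal("0.20"),
}

# Hypothetical metering records: (department, resource type, count, hours).
USAGE = [
    ("analytics", "vm.large", 2, 100),
    ("analytics", "vm.small", 1, 50),
    ("web", "vm.small", 4, 720),
]

def chargeback(records, rates):
    """Total the cost of all metered usage for each department."""
    totals = defaultdict(Decimal)  # a missing department starts at Decimal(0)
    for department, resource, count, hours in records:
        totals[department] += rates[resource] * count * hours
    return dict(totals)

if __name__ == "__main__":
    for department, cost in sorted(chargeback(USAGE, RATES).items()):
        print(f"{department}: {cost:.2f}")
```

`Decimal` is used instead of floating point so that the monetary totals stay exact.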

Helps facilitate business agility

The shift into a purely digital environment is happening at a much faster pace, and businesses are keen on hopping on board. IT shops are thus required to design and manage their compute resources in a way that allows them to pivot towards any new or emerging opportunity at short notice. This rapid flexibility is facilitated by implementing a robust cloud orchestration system.

A Collaborative Cloud Solution

Both automation and orchestration can take place on an individual level as well as on a companywide level. Employees can take advantage of automation suites that support apps such as email, Microsoft products, and Google products, because these suites do not require any prior or advanced knowledge of coding. It’s recommended to choose projects that can create significant and measurable business value, rather than using orchestration merely to speed up a process.

It’s not a debate of orchestration vs automation, but instead a matter of collaboration and of implementing them in the right degree and combination. Doing so allows any company to lower IT costs, increase productivity, and reduce staffing needs. As more organizations start relying on cloud automation, the role of orchestration technology will only increase. Automation at this scale is too complex to manage through manual intervention alone, and so cloud orchestration is considered to be the key to growth, long-term stability, and prosperity.

By streamlining routines, automation and orchestration free up resources that can be reinvested into further improvement and innovation. Cloud automation and orchestration support more cost-effective business and DevOps/CloudOps pipelines. Whether it’s offsite cloud services, onsite, or a hybrid model, better use of system resources produces better results and can give an organization major advantages over its competition.

Now that you’ve understood the basic differences between orchestration and automation, you can start looking into the variety of orchestration tools available. IT orchestration tools vary in degree, from basic script-based app deployment tools to specialized tools such as Kubernetes’ container orchestration solution.

Kubernetes vs OpenStack: How Do They Stack Up?

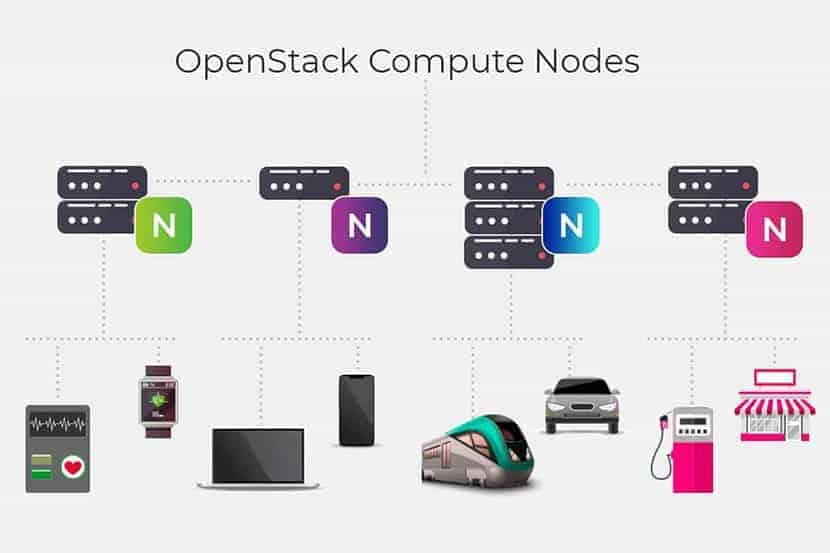

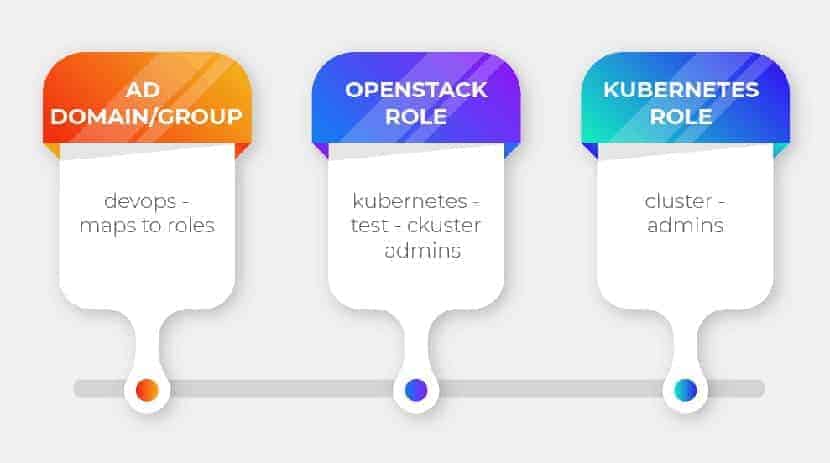

Cloud interoperability keeps evolving alongside its platforms. Kubernetes and OpenStack are not merely direct competitors but can now also be combined to create cloud-native applications. Kubernetes is the most widely used container orchestration tool to manage/orchestrate Linux containers. It deploys, maintains, and schedules applications efficiently. OpenStack lets businesses run their Infrastructure-as-a-Service (IaaS) and is a powerful software application.

Kubernetes and OpenStack have been regarded as competitors, but in actuality, these two open-source technologies can be combined and are complementary to one another. They both offer solutions to problems that are relatively similar but do so on different layers of the stack. Combining Kubernetes and OpenStack can give you noticeably enhanced scalability and automation.

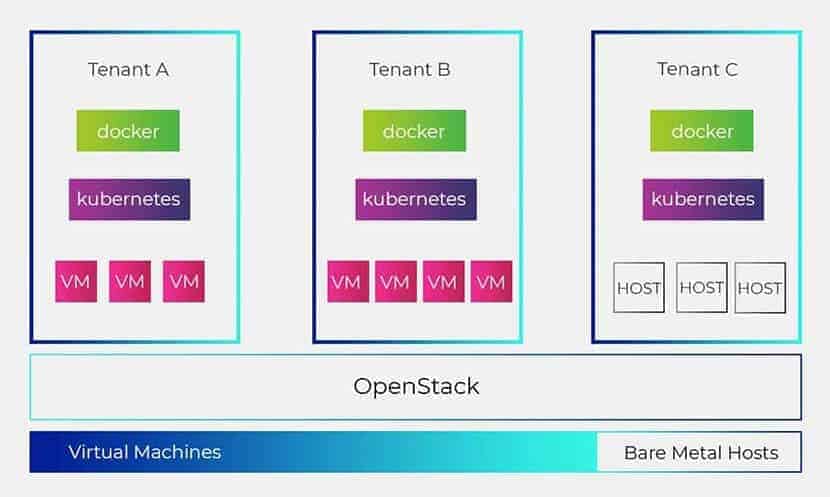

It’s now possible for Kubernetes to deploy and manage applications on an OpenStack cloud infrastructure. OpenStack as a cloud orchestration tool allows you to run Kubernetes clusters on top of white-label hardware more efficiently. Containers can be aligned with this open infrastructure, which enables them to share resources such as compute, networking, and storage.

Difference between OpenStack and Kubernetes

Kubernetes and OpenStack still do compete for users, despite their overlapping features. Both have their own sets of merits and use cases. It’s why it’s necessary to take a closer look at both options to determine their differences and find out which technology or combination is best for your business.

To present a more precise comparison between the two technologies, let’s start with the basics.

What is Kubernetes?

Kubernetes is an open-source platform for managing clusters of containerized workloads and services. In computing, this process is often referred to as orchestration.

The analogy with a music orchestra is, in many ways, fitting. Much as a conductor would, Kubernetes coordinates multiple microservices that together form a useful application. It automatically and perpetually monitors the cluster and makes adjustments to its components. Kubernetes architecture provides a mix of portability, extensibility, and functionality, facilitating both declarative configuration and automation. It handles scheduling by using nodes set up in a compute cluster. Kubernetes also actively manages workloads, ensuring that their state matches with the intentions and desired state set by the user.

Kubernetes is designed to make all its components swappable and thus have modular designs. It is built for use with multiple clouds, whether it is public, private, or a combination of the two. Developers tend to prefer Kubernetes for its lightweight, simple, and accessible nature. It operates using a straightforward model. We input how we would like our system to function – Kubernetes compares the desired state to the current state within a cluster. Its service then works to align the two states and achieve and maintain the desired state.
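
The desired-state model described above can be sketched in a few lines of Python. This is a simplified illustration of the idea, not Kubernetes code: the `reconcile` and `apply_actions` functions and the application names are hypothetical, and the "cluster" is just a dictionary of replica counts.

```python
def reconcile(desired, current):
    """Compare desired and current replica counts and list the actions
    needed to make the current state match the desired state."""
    actions = []
    for app, want in sorted(desired.items()):
        have = current.get(app, 0)
        if have < want:
            actions.append(("start", app, want - have))
        elif have > want:
            actions.append(("stop", app, have - want))
    # Anything running that is no longer declared should be removed.
    for app in sorted(current.keys() - desired.keys()):
        actions.append(("stop", app, current[app]))
    return actions

def apply_actions(actions, current):
    """Return the new state after carrying out the actions."""
    state = dict(current)
    for verb, app, count in actions:
        state[app] = state.get(app, 0) + (count if verb == "start" else -count)
        if state[app] == 0:
            del state[app]
    return state

if __name__ == "__main__":
    desired = {"web": 3, "api": 2}
    current = {"web": 1, "api": 4, "old-job": 1}
    actions = reconcile(desired, current)
    print(actions)
    print(apply_actions(actions, current))
```

Running the comparison repeatedly is what keeps the system aligned: once the two states match, `reconcile` returns an empty list and nothing further happens until something drifts.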

How is Kubernetes Employed?

Kubernetes is arguably one of the most popular tools for getting the most value out of containers. Its features make it well suited to automating the scaling, deployment, and operation of containerized applications.

Kubernetes is not only an orchestration system. It is a set of independent, interconnected control processes. Its role is to continuously work on the current state and move the processes in the desired direction. Kubernetes is ideal for service consumers, such as developers working in enterprise environments as it provides support for programmable, agile, and rapidly deployable environments.

Kubernetes is used for several different reasons:

- High Availability: Kubernetes includes several high-availability features such as multi-master and cluster federation. The cluster federation feature allows clusters to be linked together. This setup exists so that containers can automatically move to another cluster if one fails or goes down.

- Heterogeneous Clusters: Kubernetes can run on heterogeneous clusters, allowing users to build clusters from a mix of virtual machines (VMs) running in the cloud, according to user requirements.

- Persistent Storage: Kubernetes has extended support for persistent storage, which is connected to stateless application containers.

- Built-in Service Discovery and Auto-Scaling: Kubernetes supports service discovery out of the box by using environment variables and DNS. For increased resource utilization, users can also configure CPU-based auto-scaling for containers.

- Resource Bin Packing: Users can declare the maximum and minimum compute resources for both CPU and memory when dealing with containers. It slots the containers into wherever they fit, which increases compute efficiency, which results in lower costs.

- Container Deployments and Rollout Controls: The Deployment feature allows users to describe their containers and specify the required quantity. It keeps those containers running and also handles deploying changes. This enables users to pause, resume, and rollback changes as per requirements.
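
The CPU-based auto-scaling mentioned in the list above can be illustrated with the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below applies that formula and clamps the result to a configured range. The function name and the default limits are illustrative assumptions, and the real autoscaler adds refinements (such as a tolerance band and stabilization windows) that are left out here.

```python
import math

def desired_replicas(current_replicas, current_cpu, target_cpu,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to observed vs. target CPU
    utilization, rounded up and clamped to [min_replicas, max_replicas]."""
    raw = math.ceil(current_replicas * current_cpu / target_cpu)
    return max(min_replicas, min(max_replicas, raw))

if __name__ == "__main__":
    # 4 replicas at 90% average CPU against a 60% target: scale out.
    print(desired_replicas(4, current_cpu=90, target_cpu=60))
    # 4 replicas at 30% average CPU against a 60% target: scale in.
    print(desired_replicas(4, current_cpu=30, target_cpu=60))
```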

What is OpenStack?

OpenStack is an open-source cloud operating system that is employed to develop public and private cloud environments. Made up of multiple interdependent microservices, it offers an IaaS layer that is production-ready for virtual machines and applications. OpenStack, first released as a cloud infrastructure project in July 2010, was a product of the joint effort of many companies, including NASA and Rackspace.

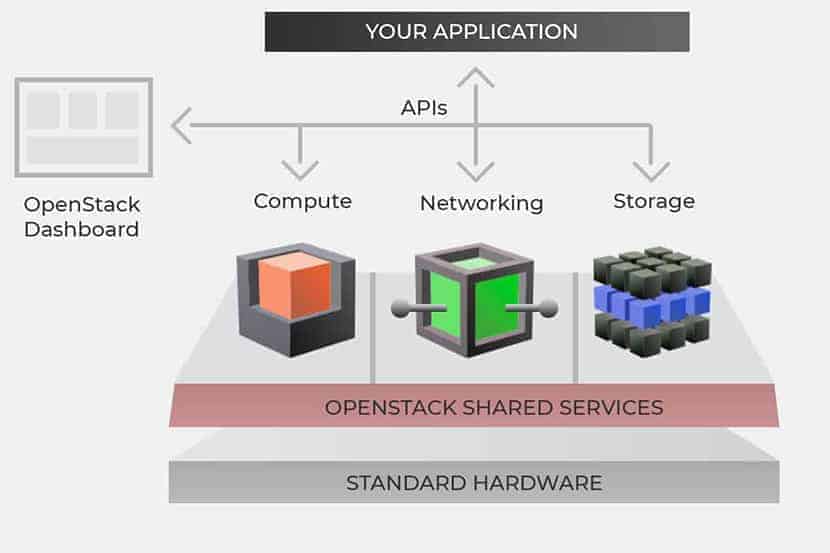

Their goal since then has been to provide an open alternative to the top cloud providers. It’s also considered a cloud operating system that can control large pools of compute, storage, and networking resources throughout a datacenter. All of this is managed through a user-friendly dashboard, which gives users increased control by allowing them to provision resources through a simple graphical web interface. OpenStack is growing in popularity because it offers open-source software to businesses wanting to deploy their own private cloud infrastructure instead of using a public cloud platform.

How is OpenStack Used?

It’s known for its complexity, consisting of around sixty components, also called ‘services’. Six of them are core components, and they control the most critical aspects of the cloud. These services handle the compute, identity, storage management, and networking of the cloud, including access management.

OpenStack comprises a series of commands known as scripts, which are bundled together into packages called projects. The projects are responsible for relaying tasks that create cloud environments. OpenStack does not virtualize resources itself; instead, it uses them to build clouds.

When it comes to cloud infrastructure management, OpenStack can be employed for the following.

Containers

OpenStack provides a stable foundation for public and private clouds. Containers are used to speed up the application delivery time while also simplifying application management and deployment. Containers running on OpenStack can thus scale container benefits ranging from single teams to even enterprise-wide interdepartmental operations.

Network Functions Virtualization

OpenStack can be used for network functions virtualization, and many global communications service providers include it on their agenda. OpenStack separates a network’s key functions so that they can be distributed among different environments.

Private Clouds

Private cloud distributions tend to run on OpenStack better than other DIY approaches due to the easy installation and management facilities provided by OpenStack. The most advantageous feature is its vendor-neutral API. Its open API erases the worries of single-vendor lock-in for businesses and offers maximum flexibility in the cloud.

Public Clouds

OpenStack is considered one of the leading open-source options when it comes to creating public cloud environments. OpenStack can be used to set up public clouds with services that are on the same level as those of most other major public cloud providers. This makes it useful for small-scale startups as well as multibillion-dollar enterprises.

What are the Differences between Kubernetes and OpenStack?

Both OpenStack and Kubernetes provide solutions for cloud computing and networking in very different ways. Some of the notable differences between the two are explained in the table below.

| Points of Difference | Kubernetes | OpenStack |
| --- | --- | --- |
| Classification | Classified as a container tool | Classified as an open-source cloud tool |
| User Base | It has a large GitHub community of over 55k users, as well as 19.1k GitHub forks. | Not much of an organized community behind it |
| Companies that Use Them | Google, Slack, Shopify, Digital Ocean, 9GAG, Asana, etc. | PayPal, Hubspot, Wikipedia, Hazeorid, Survey Monkey, etc. |
| Main Functions | Efficient Docker container management solution | A flexible and versatile tool for managing public and private clouds |
| Tools that can be Integrated | Docker, Ansible, Microsoft Azure, Google Compute Engine, Kong, etc. | Fastly, Stack Storm, Spinnaker, Distelli, Morpheus, etc. |

How Can Kubernetes and OpenStack Work Together?

Can Kubernetes and OpenStack work together? This is a common question among potential users.

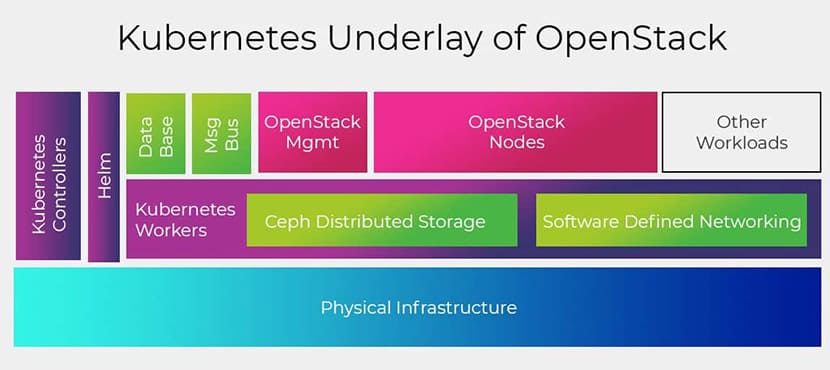

One of the most significant obstacles in the path of OpenStack’s widespread adoption is its ongoing life cycle management. For enterprises, using OpenStack and Kubernetes together can radically simplify the management of OpenStack’s many components. In this way, users benefit from a consistent platform for managing workloads.

Kubernetes and OpenStack can be used together to reap the combined benefits of both tools. By integrating Kubernetes into OpenStack, Kubernetes users can access a much more robust framework for application deployment and management. Kubernetes’s features, scalability, and flexibility make ‘Stackanetes’ an efficient solution for managing OpenStack and make operating OpenStack as easy as running any application on Kubernetes.

Benefits of Leveraging Both OpenStack and Kubernetes

Faster Development of Apps

Running Kubernetes and OpenStack together can offer on-demand and access-anytime services. It also helps to increase application portability and reduces development time.

Improving OpenStack’s lifecycle management

Kubernetes, along with cloud-native patterns, improves OpenStack lifecycle management through rolling updates and versioning.
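
To show what a rolling update means in practice, here is a small Python sketch. It replaces the instances of a service a few at a time, so that most of the fleet keeps serving while the rest is being upgraded. The function, the version labels, and the `max_unavailable` parameter name are illustrative assumptions; this is a simulation over a list, not a call into Kubernetes or OpenStack.

```python
def rolling_update(instances, new_version, max_unavailable=1):
    """Yield the fleet after each batch, upgrading at most
    max_unavailable instances per batch (must be at least 1)."""
    fleet = list(instances)
    pending = [i for i, version in enumerate(fleet) if version != new_version]
    for start in range(0, len(pending), max_unavailable):
        for index in pending[start:start + max_unavailable]:
            fleet[index] = new_version
        yield list(fleet)

if __name__ == "__main__":
    for step in rolling_update(["v1", "v1", "v1"], "v2"):
        print(step)
```

Because each intermediate state is yielded, a caller could stop after any batch, for example when a health check fails, and leave the remaining instances on the old version.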

Increased Security

Security has always been a critical concern in container technology. OpenStack solves this by providing a high level of security. It supports the verification of trusted container content by integrating tools for image signing, certification, and scanning.

Standardization

By combining Kubernetes and OpenStack, container technology can become more universally applicable. This makes it easier for organizations to set up as well as deploy container technology, using the existing OpenStack infrastructure.

Easier to Manage

OpenStack can be complex to use and has a steep learning curve, which creates a hindrance for many users. The Stackanetes initiative circumvents the complexity by using Kubernetes cluster orchestration to deploy and manage OpenStack.

Speedy Evolution

Both are widely employed by tech industry giants, including Amazon, Google, and eBay. This popularity drives both projects to develop and innovate faster, and it increases the pace at which solutions are offered to issues as they crop up. Evolving and integrating at the same time creates rapidly upgraded enterprise-grade infrastructure and application platforms.

Stability

OpenStack on its own lacks the stability to run smoothly. Kubernetes, on the other hand, uses a large-scale distributed system, allowing it to run smoothly. By combining the two, OpenStack can use a more modernized architecture, which also increases its stability.

Kubernetes and OpenStack are Better Together

There has always been competition between OpenStack and Kubernetes, both of which are giants in the open-source technology landscape. That’s why it can be surprising to some users when we talk about the advantages of using these two complementary tools together. As both of them solve similar problems but on different layers, combining the two is the most practical solution for scalability and automation. With the two combined, DevOps teams have more freedom than ever to create cloud-native applications. Both Kubernetes and OpenStack have their advantages and use cases, and because they are used in different contexts, a direct comparison between the two is difficult.

OpenStack, together with Kubernetes, can increase the resilience and scale of its control plane, allowing faster delivery of infrastructure innovation. These different yet complementary technologies, widely used by industry leaders, will keep innovating at an unprecedented pace.

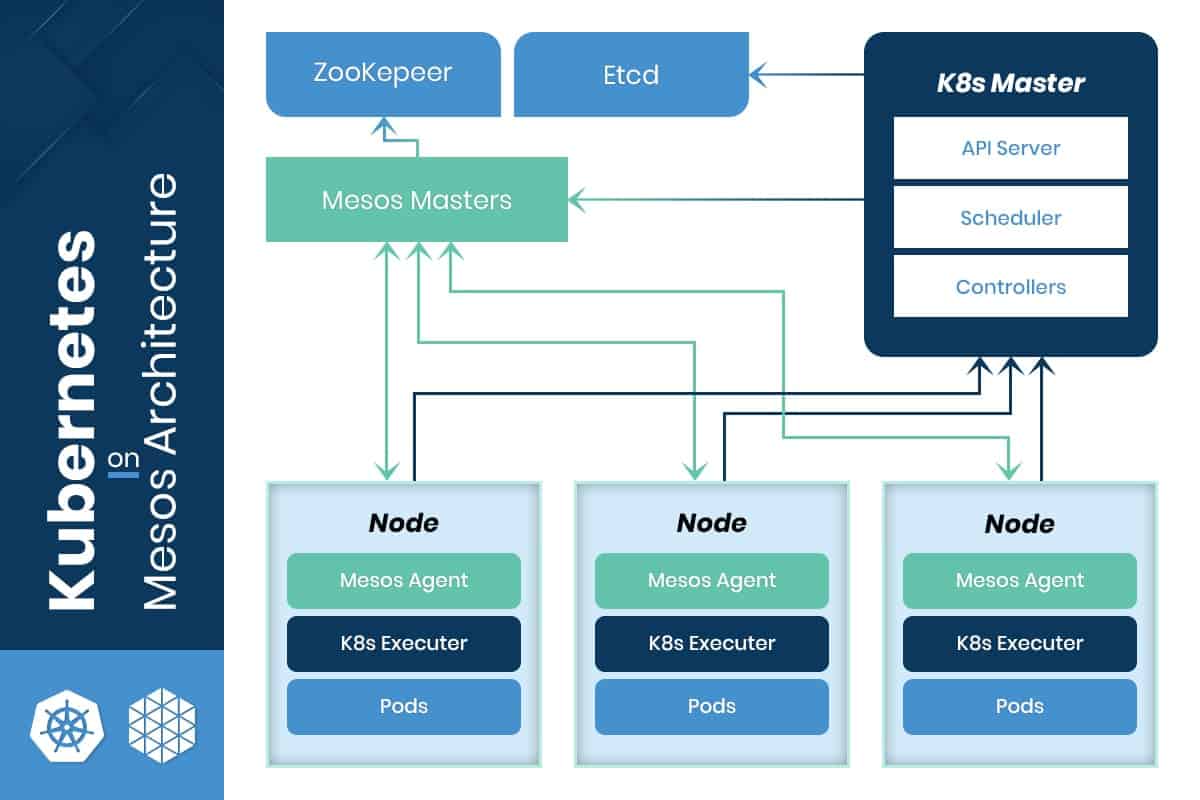

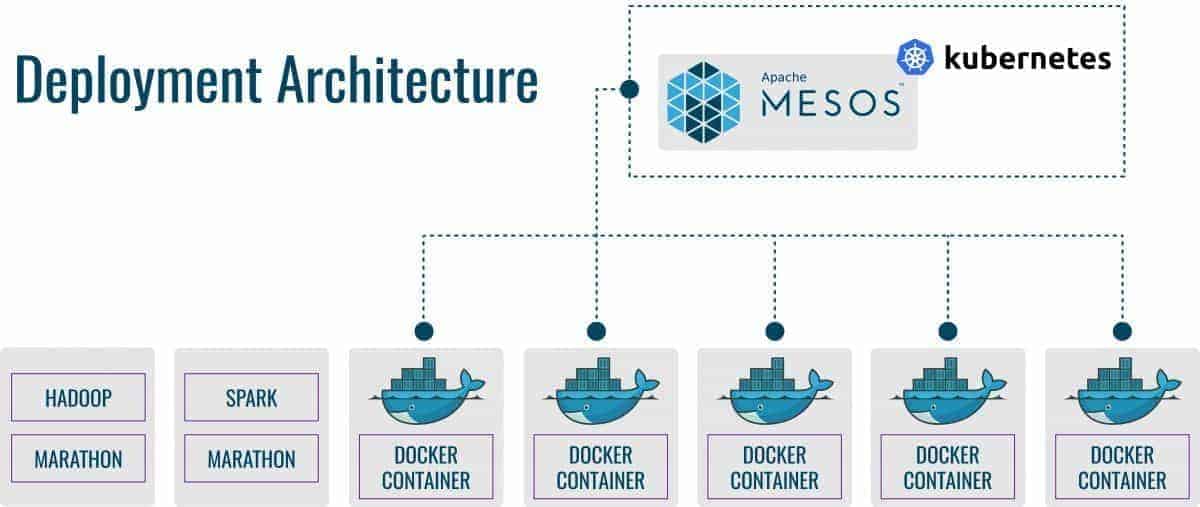

Kubernetes vs Mesos: Detailed Comparison

Container orchestration is a fast-evolving technology. There are three current industry giants: Kubernetes, Docker Swarm, and Apache Mesos. They fall into the category of DevOps infrastructure management tools known as ‘Container Orchestration Engines’. Docker Swarm has won considerable customer favor, becoming a leading choice in containerization. Kubernetes and Mesos are the main competition, and they have something more to offer in this regard. They provide differing degrees of usability, with many evolving features.

Despite the popularity of Docker Swarm, it has some drawbacks and limited functionalities:

- Docker Swarm is platform-dependent.

- Docker Swarm does not provide efficient storage options.

- Docker Swarm has limited fault tolerance.

- Docker Swarm has inadequate monitoring.

These drawbacks prompt businesses to ask: ‘How do we choose the right container management and orchestration tool?’ Many companies are now choosing an alternative to Docker Swarm. This is where Kubernetes and Mesos come in. To examine this choice systematically, it’s essential to look at the core competencies of both options, so that one can come to an independently informed conclusion.

Characteristics of Docker Swarm, Kubernetes, and Mesos

| Characteristics | Docker Swarm | Kubernetes | Mesos/Marathon |
| --- | --- | --- | --- |
| Initial Release Date | Mar 2013, Stable release July 2019 | July 2015, v1.16 in Sept 2019 | July 2016, Stable release August 2019 |
| Deployment | YAML based | YAML based | Unique format |
| Stability | Comparatively new and constantly evolving | Quite mature and stable with continuous updates | Mature |
| Design Philosophy | Docker-based | Pod-based resource groupings | Based on Linux cgroups (control groups) |
| Images Supported | Docker image format | Supports Docker and, to a limited extent, rkt | Supports mostly Docker |
| Learning Curve | Easy | Steep | Steep |

What is Kubernetes?

First released in June of 2014, Kubernetes is also known as K8s. It is a container orchestration platform by Google for cloud-native computing. In terms of features, Kubernetes is one of the most natively integrated options available. It also has a large community behind it. Google makes use of Kubernetes for its Container as a Service offering, originally called Google Container Engine and since renamed Google Kubernetes Engine. Other platforms that have extended support to Kubernetes include Microsoft Azure and Red Hat OpenShift. It also supports Docker and uses a YAML-based deployment model.

Constructed on a modular API core, the architecture of Kubernetes allows vendors to integrate systems around their own proprietary technology. It does a great job of giving application developers a powerful tool for Docker container orchestration and open-source projects.

What is Apache Mesos?

Apache Mesos’ roots go back to 2009, when Ph.D. students first developed it at UC Berkeley. Compared to Kubernetes and Docker Swarm, it takes more of a distributed approach to managing datacenter and cloud resources.

It takes a modular approach when dealing with container management. It allows users to have flexibility in the types and scalability of applications that they can run. Mesos allows other container management frameworks to run on top of it. This includes Kubernetes, Apache Aurora, Mesosphere Marathon, and Chronos.

Mesos was created to solve several different challenges: to abstract data center resources into a single pool, to colocate diverse workloads and automate day-two operations, and to provide evergreen extensibility for running tasks and new applications. It has a unique ability to manage a diverse set of workloads individually, including application groups such as Java, stateless microservices, etc.

Container Management: Explained

Before we decide on how to choose a container management tool, the concept of Container Management must be explained further.

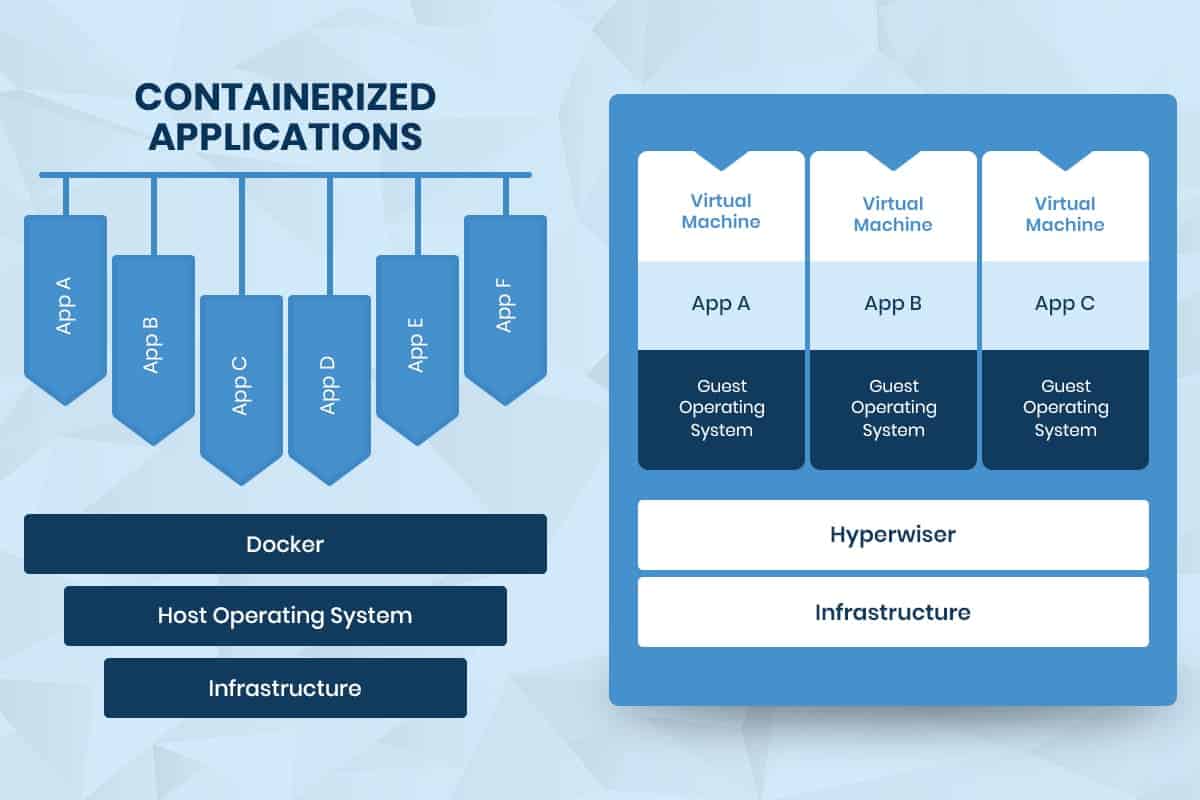

Container Management is the process of adding, organizing, and replacing large numbers of software containers. It utilizes software for automatically creating, deploying, and scaling containers. Container management requires a platform to organize software containers, known as operated-system-level virtualizations. This platform optimizes the efficiency and streamlines container delivery without the use of complex interdependent system architectures.

Containers have become quite popular, as more enterprises are using DevOps for quicker development and for its applications. Container management gives rise to the need for container orchestration, which is a more specialized tool. It automates deployment, management, networking scaling, and availability of container-based applications.

Container Orchestration: Explained

Container Orchestration refers to the automatic managing and scheduling of individual containers used for microservices-based applications within multiple clusters. Both Kubernetes and Mesos support it. It also schedules the deployment of containers into the clusters, determining the best host for each container.

Some of the reasons why a container orchestration framework is required include:
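To make "determining the best host" more concrete, here is a minimal sketch of one possible placement rule: pick the host that can fit the container and has the most free memory. The `HostNode` and `BestHostScheduler` names and the rule itself are our own assumptions for illustration. Real schedulers in Kubernetes or Mesos weigh many more factors.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical types for illustration only; not an API of Kubernetes or Mesos.
class HostNode {
    final String name;
    final int freeCpuMillis;
    final int freeMemoryMb;

    HostNode(String name, int freeCpuMillis, int freeMemoryMb) {
        this.name = name;
        this.freeCpuMillis = freeCpuMillis;
        this.freeMemoryMb = freeMemoryMb;
    }
}

class BestHostScheduler {
    // Returns the name of the host that fits the request and has the most
    // free memory, or null when no host can fit the container.
    static String pickHost(List<HostNode> hosts, int cpuMillis, int memoryMb) {
        HostNode best = null;
        for (HostNode h : hosts) {
            boolean fits = h.freeCpuMillis >= cpuMillis && h.freeMemoryMb >= memoryMb;
            if (fits && (best == null || h.freeMemoryMb > best.freeMemoryMb)) {
                best = h;
            }
        }
        return best == null ? null : best.name;
    }

    public static void main(String[] args) {
        List<HostNode> hosts = Arrays.asList(
                new HostNode("node-a", 500, 1024),
                new HostNode("node-b", 2000, 4096),
                new HostNode("node-c", 4000, 2048));
        System.out.println(pickHost(hosts, 1000, 2000)); // prints node-b
    }
}
```

Returning `null` when nothing fits keeps the sketch short. A real scheduler would more likely queue the container until capacity becomes available.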

- Configuring and scheduling containers

- Container Availability

- Container Provisioning and Deployment

- Container Configuration

- Scaling applications of containers for load balancing

- Health monitoring of containers

- Securing interactions between containers

How to Select a Container Management and Orchestration Tool?

There are many variables to consider when deciding on how to implement container management and orchestration efficiently. The final selection will depend on the specific requirements of the user. Some of these are briefly explained below.

- CNI Networking: A good tool should make network connectivity between services trivial, so that developers do not have to spend time on special-purpose code for finding dependencies.

- Simplicity: The tool in use should be as simple to implement as possible.

- Active Development: The tool chosen should have a development team that provides users with regular updates. This is due to the ever-evolving nature of container orchestration.

- Cloud Vendor: The tool chosen should not be tied to any single cloud provider.

Note: Container orchestration is just one example of a workload that the Mesos modular architecture can run. This specialized orchestration framework is called Marathon. It was originally developed to orchestrate app archives in Linux cgroup containers and was later extended to support Docker containers in 2014.

What are the differences between Kubernetes and Mesos?

Kubernetes and Mesos have different approaches to the same problem. Kubernetes acts as a container orchestrator, and Apache Mesos works like a cloud operating system. Therefore, there are several fundamental differences between the two, which are highlighted in the table below.

| Points of Difference | Kubernetes | Apache Mesos |
| --- | --- | --- |
| Application Definition | Kubernetes is a combination of Replica Sets, Replication Controllers, Pods, along with certain Services and Deployments. Here, “Pod” refers to a group of co-located containers, which is considered the atomic unit of deployment. | The Mesos Application Group is modeled as an n-ary tree, with groups as branches and applications as leaves. It’s used to partition multiple applications into manageable sets, where components are deployed in order of dependency. |
| Availability | Pods are distributed among Worker Nodes. | Applications are distributed among Slave nodes. |
| Load Balancing | Pods are exposed via a service that acts as a load balancer. | Applications can be reached through an acting load balancer, which is the Mesos-DNS. |
| Storage | There are two Storage APIs. The first one provides abstractions for individual storage back-ends such as NFS and AWS EBS. The second one provides an abstraction for a storage resource request, which is fulfilled by different storage back-ends. | A Marathon container can use persistent volumes, which are local to the node where they are created. Hence, the container is always required to run on that node. The experimental Flocker integration supports persistent volumes that are not local to a single node. |
| Networking Model | Kubernetes’ networking model allows any pod to communicate with any service or with other pods. It requires two separate networks to operate, with neither network requiring connectivity from outside the cluster. This is accomplished by deploying an overlay network on the cluster nodes. | Marathon’s Docker integration allows mapping container ports to host ports, which are a limited resource. Here, the container will not automatically acquire an IP; that is only possible by integrating with Calico. It should be noted that multiple containers cannot share the same network namespace. |
| Purpose of Use | It is ideal for newcomers to the clustering world, providing a quick, easy, and light way to begin their journey in cluster-oriented development. It offers a great degree of versatility and portability, and is supported by a few big-name providers such as Microsoft and IBM. | It is ideal for large systems as it is designed for maximum redundancy. For existing workloads such as Hadoop or Kafka, Mesos provides a framework allowing the user to interleave those workloads with each other. It is a much more stable platform while being comparatively complex to use. |
| Vendors and Developers | Kubernetes is used by several companies and developers and is supported by a few other platforms such as Red Hat OpenShift and Microsoft Azure. | Mesos is supported by large organizations such as Twitter, Apple, and Yelp. Its learning curve is steep and quite complex as its core focus is on Big Data and analytics. |
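The Application Definition row in the table mentions that Mesos application groups deploy components in order of dependency. The sketch below shows only that ordering idea, using a depth-first traversal over a map of dependencies. The class name, the map shape, and the sample app names are hypothetical. It does not reproduce Marathon's actual group model or API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical model: app name -> names of the apps it depends on.
class DependencyOrderDeployer {
    // Returns the apps in an order where every dependency comes before its dependents.
    static List<String> deployOrder(Map<String, List<String>> dependsOn) {
        List<String> order = new ArrayList<>();
        Set<String> done = new LinkedHashSet<>();
        Set<String> inProgress = new LinkedHashSet<>();
        for (String app : dependsOn.keySet()) {
            visit(app, dependsOn, done, inProgress, order);
        }
        return order;
    }

    private static void visit(String app, Map<String, List<String>> dependsOn,
                              Set<String> done, Set<String> inProgress, List<String> order) {
        if (done.contains(app)) {
            return;
        }
        if (!inProgress.add(app)) {
            throw new IllegalStateException("Dependency cycle at " + app);
        }
        List<String> deps = dependsOn.get(app);
        if (deps != null) {
            for (String dep : deps) {
                visit(dep, dependsOn, done, inProgress, order);
            }
        }
        inProgress.remove(app);
        done.add(app);
        order.add(app); // all dependencies are already in the list at this point
    }

    public static void main(String[] args) {
        Map<String, List<String>> deps = new LinkedHashMap<>();
        deps.put("web", Arrays.asList("api"));
        deps.put("api", Arrays.asList("db"));
        deps.put("db", Collections.<String>emptyList());
        System.out.println(deployOrder(deps)); // prints [db, api, web]
    }
}
```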

Conclusion

Kubernetes and Mesos employ different tactics to tackle the same problem. In comparing them based on several features, we have found that both solutions are equivalent in terms of features and other advantages when compared to Docker Swarm.

The conclusion we can come to is that they are both viable options for container management and orchestration. Each tool is effective in managing Docker containers. They both provide access to container orchestration for the portability and scalability of applications.

The intuitive architectural design of Mesos provides good options when it comes to handling legacy systems and large-scale clustered environments via its DC/OS. It’s also adept at handling more specific technologies such as distributed processing with Hadoop. Kubernetes is preferred by development teams who want to build a system dedicated exclusively to Docker container orchestration.

Our straightforward comparison should provide users with a clear picture of Kubernetes vs Mesos and their core competencies. The goal has been to provide the reader with relevant data and facts to inform their decision.

How to choose between them will depend on finding the right cluster management solution that fits your company’s technical requirements. If you’d like to find out more about which solution would suit you best, contact us today for a free consultation.

Kubernetes vs Docker Swarm: What are the Differences?

As an increasing number of applications move to the cloud, their architectures and distributions continue to evolve. This evolution requires the right set of tools and skills to manage a distributed topology across the cloud effectively. The management of microservices across virtual machines, each with multiple containers in varied groupings, can quickly become complicated. To reduce this complexity, container orchestration is utilized.

Container orchestration is the automatic management of individual containers for microservices-based applications running across multiple clusters. It provides a single tool for deploying and managing microservices in virtual machines. Distributing microservices across many machines is complex, and orchestration provides the following solutions:

- A centralized tool for the distribution of applications across many machines.

- Deploys new nodes when one goes down.

- Relays information through a centralized API, communicating to every distributed node.

- Efficient management of resources.

- Distribution based on personalized configuration.
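The "deploys new nodes when one goes down" point can be pictured as a reconciliation pass: compare what is running with what is desired and fill the gap. The following is a minimal sketch under our own assumptions. The `ReplicaReconciler` class and the `replacement-N` naming are hypothetical and not taken from any orchestrator.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch; node names and the "replacement-N" naming are made up.
class ReplicaReconciler {
    private int nextId = 1;

    // Returns the nodes that should be running after one reconciliation pass.
    List<String> reconcile(List<String> running, List<String> failed, int desired) {
        List<String> result = new ArrayList<>(running);
        result.removeAll(failed);              // drop nodes reported as down
        while (result.size() < desired) {      // start replacements to fill the gap
            result.add("replacement-" + nextId++);
        }
        return result;
    }

    public static void main(String[] args) {
        ReplicaReconciler reconciler = new ReplicaReconciler();
        List<String> after = reconciler.reconcile(
                Arrays.asList("node-1", "node-2", "node-3"),
                Arrays.asList("node-2"),
                3);
        System.out.println(after); // prints [node-1, node-3, replacement-1]
    }
}
```

Running the same pass again with no failures leaves the list unchanged, which is the property that makes this kind of loop safe to repeat.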

With regard to how modern development and operations teams test and deploy software, microservices have proven to be the best solution. Containers can help companies modernize themselves by allowing them to deploy and scale applications quickly. Containerization is an entirely new infrastructure system. Before discussing the main differences between Kubernetes and Docker Swarm, the concept of containerization will be expanded on.

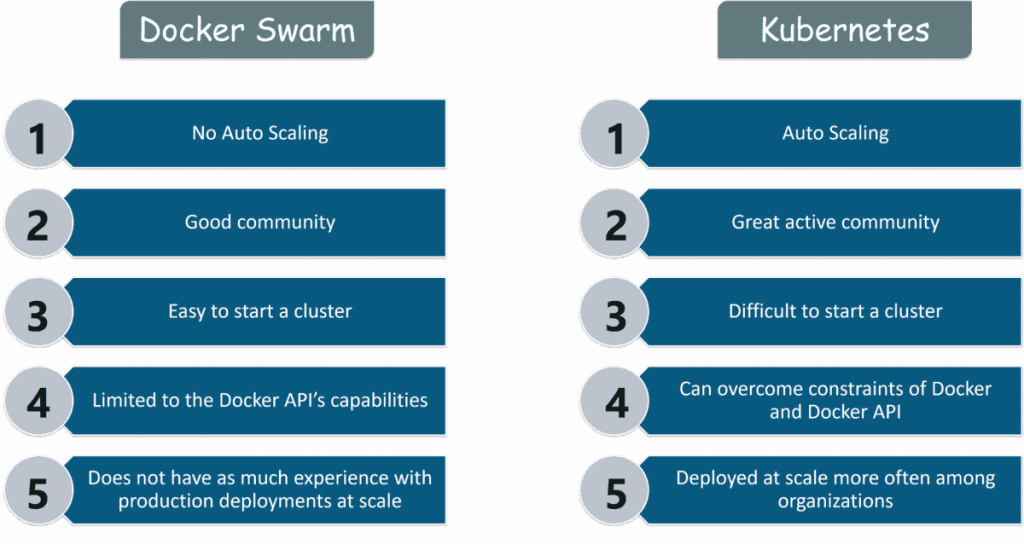

The orchestration tools we will stack up against each other are Kubernetes vs. Docker Swarm. There are several differences between the two. This article will discuss what each tool does, followed by a comparison between them.

What is Kubernetes?

Created by Google in 2014, Kubernetes (also called K8s) is an open-source project for effectively managing application deployment. In 2015, Google also partnered with the Linux Foundation to create the Cloud Native Computing Foundation (CNCF). CNCF now manages the Kubernetes project.

Kubernetes has been gaining in popularity since its creation. A Google Trends search over the last five years shows Kubernetes has surpassed the popularity of Docker Swarm, ending August 2019 with a score of 91 vs. 3 for Docker Swarm.

Kubernetes architecture was designed from the ground up with orchestration in mind. It is based on the primary/replica model, in which a master node distributes instructions to worker nodes. Worker nodes are the machines that microservices run on. This configuration allows each node to host all of the containers that are running within a container runtime (e.g., Docker). Nodes also contain Kubelets.

Think of Kubelets as the brains for each node. Kubelets take instructions from the API, which is in the master node, and then process them. Kubelets also manage pods, including creating new ones if a pod goes down.

A pod is an abstraction that groups containers. By grouping containers into a pod, those containers can share resources. These resources include processing power, memory, and storage. At a high level, here are some of Kubernetes’ main features:

- Automation

- Deployment

- Scaling

Before discussing Kubernetes any further, let’s take a closer look at Docker Swarm.

Explaining Docker Swarm

Docker Swarm is a tool used for clustering and scheduling Docker containers. With the help of Swarm, IT developers and administrators can easily establish and manage a cluster of Docker nodes under a single virtual system. Clustering is an important component of container technology, allowing administrators to create a cooperative group of systems that provide redundancy.

Docker Swarm failover can be enabled in case of a node outage. With a Docker Swarm cluster, administrators and developers can add or remove container instances as computing demand changes.
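As a rough illustration of adjusting the number of containers to demand, the sketch below derives a target replica count from the current load. The formula, the `ReplicaCalculator` name, and the per-replica capacity figure are assumptions made for this example. They do not describe a Docker Swarm feature.

```java
// Hypothetical helper: derives how many container replicas a load level calls for.
class ReplicaCalculator {
    static int desiredReplicas(int requestsPerSecond, int capacityPerReplica, int min, int max) {
        if (capacityPerReplica <= 0) {
            throw new IllegalArgumentException("capacityPerReplica must be positive");
        }
        // Ceiling division: a partially used replica still has to exist.
        int needed = (requestsPerSecond + capacityPerReplica - 1) / capacityPerReplica;
        // Clamp to the configured bounds so the service never scales to zero or runs away.
        return Math.max(min, Math.min(max, needed));
    }

    public static void main(String[] args) {
        System.out.println(desiredReplicas(950, 200, 1, 10));  // prints 5
        System.out.println(desiredReplicas(0, 200, 1, 10));    // prints 1
        System.out.println(desiredReplicas(5000, 200, 1, 10)); // prints 10
    }
}
```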

For companies that want even more support, there is Docker Enterprise-as-a-Service (EaaS). EaaS performs all the necessary upgrades and configurations, removing this burden from the customer. Companies who use AWS or Microsoft Azure can “consume Docker Enterprise 3.0.” In Docker terminology, a managed and orchestrated cluster is known as a swarm. Docker SwarmKit is a tool for creating and managing swarms.

Similar to Kubernetes, Docker Swarm can both deploy across nodes and manage the availability of those nodes. Docker Swarm calls its main node the manager node. Within the Swarm, the manager nodes communicate with the worker nodes. Docker Swarm also offers load balancing.

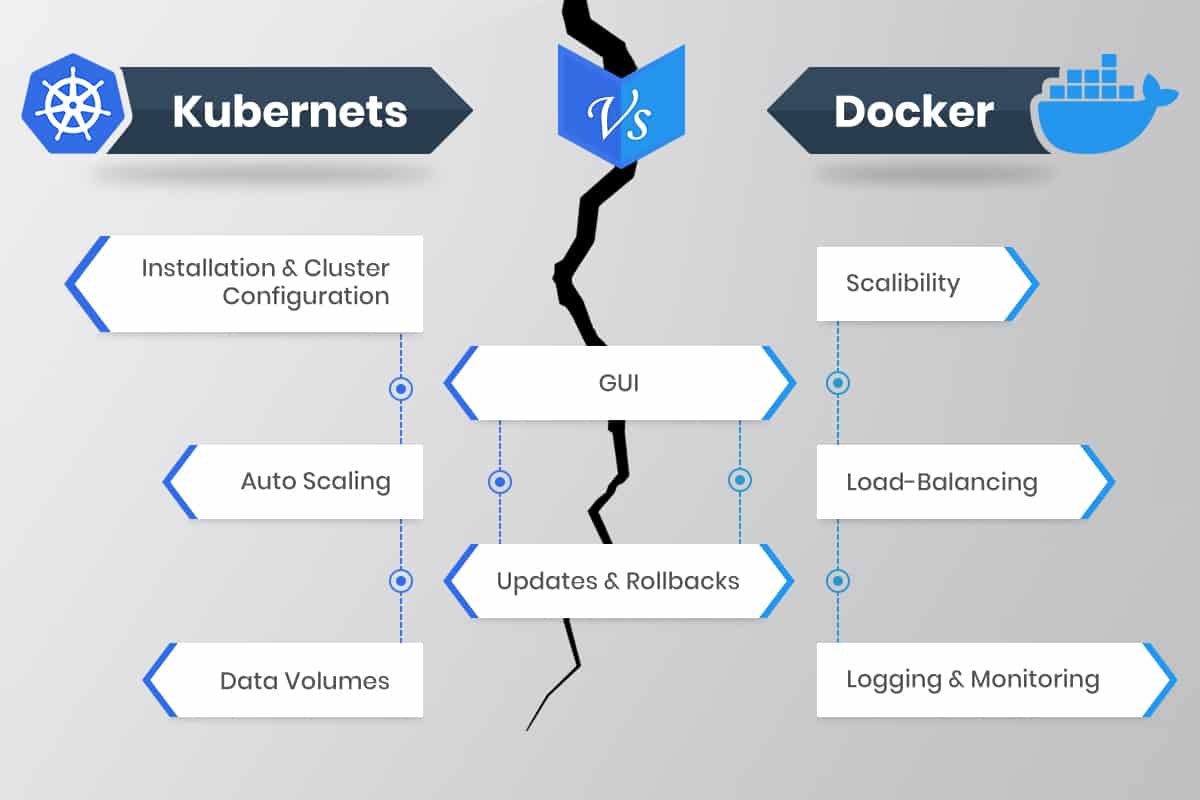

What is the Difference Between Kubernetes and Docker Swarm?

Kubernetes and Docker Swarm are two of the most used open-source platforms, and they provide mostly similar features. However, on closer inspection, several fundamental differences can be seen in how the two function. The table below illustrates the main points of difference between the two:

| Point of Difference | Kubernetes | Docker Swarm |
| --- | --- | --- |
| Application Deployment | Applications can be deployed in Kubernetes using a myriad of microservices, deployments, and pods. | Applications can be used only as microservices in a swarm cluster. Multi containers are identified by utilizing YAML files. With the help of Docker Compose, the application can also be installed. |
| Scalability | Kubernetes acts like more of an all-in-one framework when working with distributed systems. It is thus significantly more complicated as it provides strong guarantees in terms of the cluster state and a unified set of APIs. Container scaling and deployment are therefore slowed down. | Docker Swarm can deploy containers much faster than Kubernetes, which allows faster reaction times for scaling on demand. |
| Container Setup | By utilizing its own YAML, API, and client definitions, Kubernetes differs from other standard Docker equivalents. Thus, Docker Compose or Docker CLI cannot be used to define containers. Also, YAML definitions and commands must be rewritten when switching platforms. | The Docker Swarm API offers much of the familiar functionality from Docker, supporting most of the tools that run with Docker. However, Swarm cannot be utilized if the Docker API is deficient in a particular operation. |
| Networking | Kubernetes has a flat network model, allowing all the pods to communicate with each other. Network policies are in place to define how the pods interact with one another. The network is typically implemented as an overlay, requiring two CIDRs for the services and the pods. | When a node joins a swarm cluster, it creates an overlay network for services for each host in the Docker swarm. It also creates a host-only Docker bridge network for containers. This gives users a choice while encrypting the container data traffic to create its own overlay network. |
| Availability | Kubernetes offers significantly high availability as it distributes all the pods among the nodes. This is achieved by absorbing the failure of an application. Unhealthy pods are detected by load balancing services, which subsequently deactivate them. | Docker also offers a high availability architecture since all the services can be cloned in Swarm nodes. The Swarm manager nodes manage the worker nodes’ resources and the whole cluster. |
| Load Balancing | In Kubernetes, pods are exposed via a service, allowing them to be implemented as a load balancer inside a cluster. An ingress is generally used for load balancing. | Swarm Mode comes with a DNS element which can be used for distributing incoming requests to a service name. Thus services can be assigned automatically or function on ports that are pre-specified by the user. |
| Logging and Monitoring | It includes built-in tools for managing both processes. | It does not require using any tools for logging and monitoring. |
| GUI | Kubernetes has detailed dashboards to allow even non-technical users to control the clusters effectively. | Docker Swarm, alternatively, requires a third-party tool such as Portainer.io to manage the UI conveniently. |

Our comparison reveals that both Kubernetes and Docker Swarm are comprehensive “de-facto” solutions for intelligently managing containerized applications. Even though they offer similar capabilities, the two are not directly comparable, as they have distinct roots and solve different problems.

Thus, Kubernetes works well as a container orchestration system for Docker containers, utilizing the concept of pods and nodes. Docker is a platform and a tool for building, distributing, and running docker containers, using its native clustering tool to orchestrate and schedule containers on machine clusters.

Which one should you use: Kubernetes or Docker Swarm?

The choice of tool depends on the needs of your organization.

Docker Swarm is a good choice if you need a quick setup and do not have exhaustive configuration requirements. It delivers software and applications with microservice-based architecture effectively. Its main positives are the simplicity of installation and a gradual learning curve. As a standalone application, it’s perfect for software development and testing. With easy deployment, automated configuration, and lower hardware resource usage, it could be the first solution to consider. The downside is that native monitoring tools are lacking, and the Docker API limits functionality. But it still offers overlay networking, load balancing, high availability, and several scalability features. Final verdict: Docker Swarm is ideal for users who want to set up a containerized application and get it up and running without much delay.

Kubernetes would be the best containerization platform to use if the app you’re developing is complex and utilizes hundreds of thousands of containers in the process. It has high availability policies and auto-scaling capabilities. Unfortunately, the learning curve is steep and might hinder some users. The configuration and setup process is also lengthy. Final verdict: Kubernetes is for users who are comfortable with customizing their options and need extensive functionality.

Find out which solution would suit your business best, and contact us today for a free consultation.

When is Microservice Architecture the Way to Go?

Choosing and designing the correct architecture for a system is critical. One must ensure the quality of service requirements and the handling of non-functional requirements, such as maintainability, extensibility, and testability.

Microservice architecture has become a recurrent choice in recent ecosystems since companies adopted Agile and DevOps. While it is not a de facto choice, it is one of the preferred options when dealing with systems that are growing extensively and where a monolithic architecture is no longer feasible to maintain. Keeping components service-oriented and loosely coupled keeps continuous development and release cycles going. This drives businesses to constantly test and upgrade their software.

The main prerequisites that call for such an architecture are:

- Domain-Driven Design

- Continuous Delivery and DevOps Culture

- Failure Isolation

- Decentralization

It has the following benefits:

- Team ownership

- Frequent releases

- Easier maintenance

- Easier upgrades to newer versions

- Technology agnostic

It has the following cons:

- microservice-to-microservice communication mechanisms

- Increasing the number of services increases the overall system complexity

The more distributed and complex the architecture is, the more challenging it is to ensure that the system can be expanded and maintained while controlling cost and risk. One business transaction might involve multiple combinations of protocols and technologies. It is not just about the use cases but also about its operations. When adopting Agile and DevOps approaches, one should find a balance between flexibility and functionality, aiming to achieve continuous revision and testing.

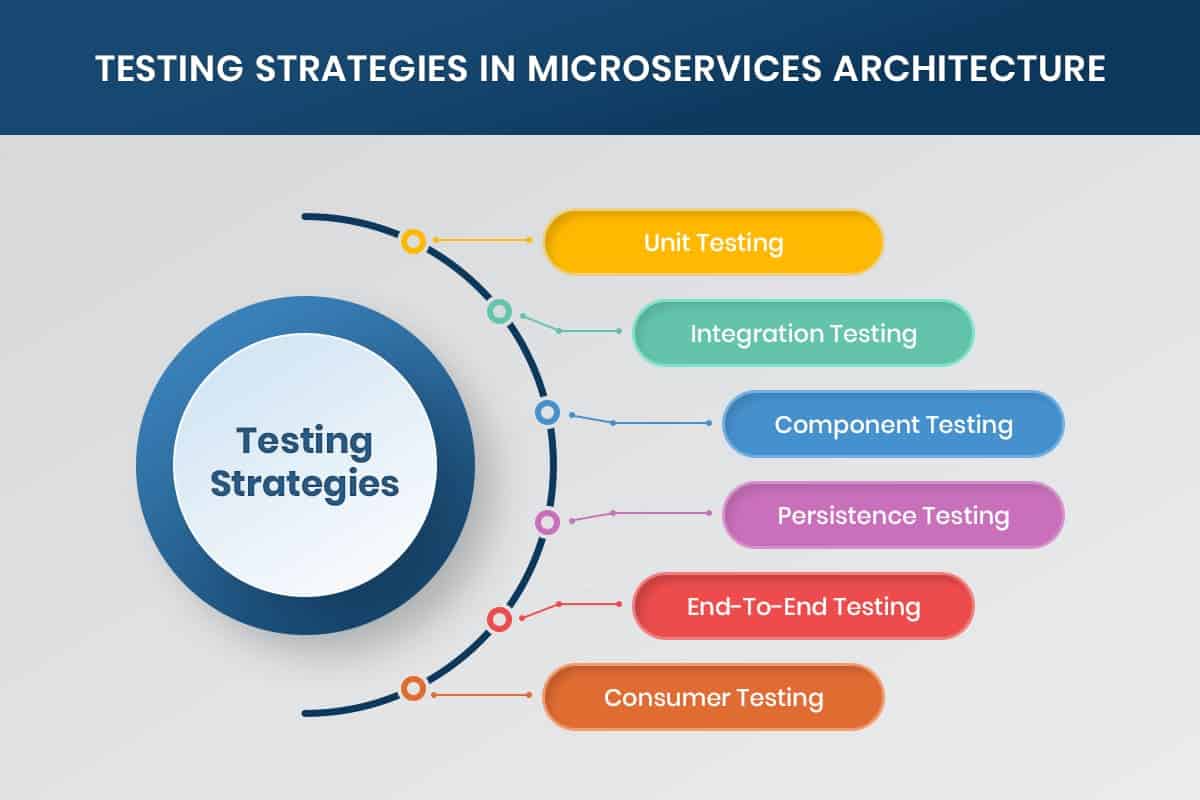

The Importance of Testing Strategies in Relation to Microservices

Adopting DevOps in an organization aims to eliminate the various isolated departments and move towards one overall team. This move seeks to specifically improve the relationships and processes between the software team and the operations team. Delivering at a faster rate also means ensuring that there is continuous testing as part of the software delivery pipeline. Deploying daily (and in some cases even every couple of hours) is one of the main targets for fast end-to-end business solution delivery. Reliability and security must be kept in mind here, and this is where testing comes in.

The inclusion of test-driven development is the only way to achieve genuine confidence that code is production-ready. Valid test cases add value to the system since they validate and document the system itself. Apart from that, good code coverage encourages improvements and assists during refactoring.

Microservices architecture decentralizes communication channels, which makes testing more complicated, but it’s not an insurmountable problem. A team owning a microservice should not be afraid to introduce changes because they might break existing client applications. Manual testing is very inefficient, considering that continuous integration and continuous deployment are the current best practice. DevOps engineers should make sure to include automated tests in their development workflow: write tests, add/refactor code, and run tests.

Common Microservice Testing Methods

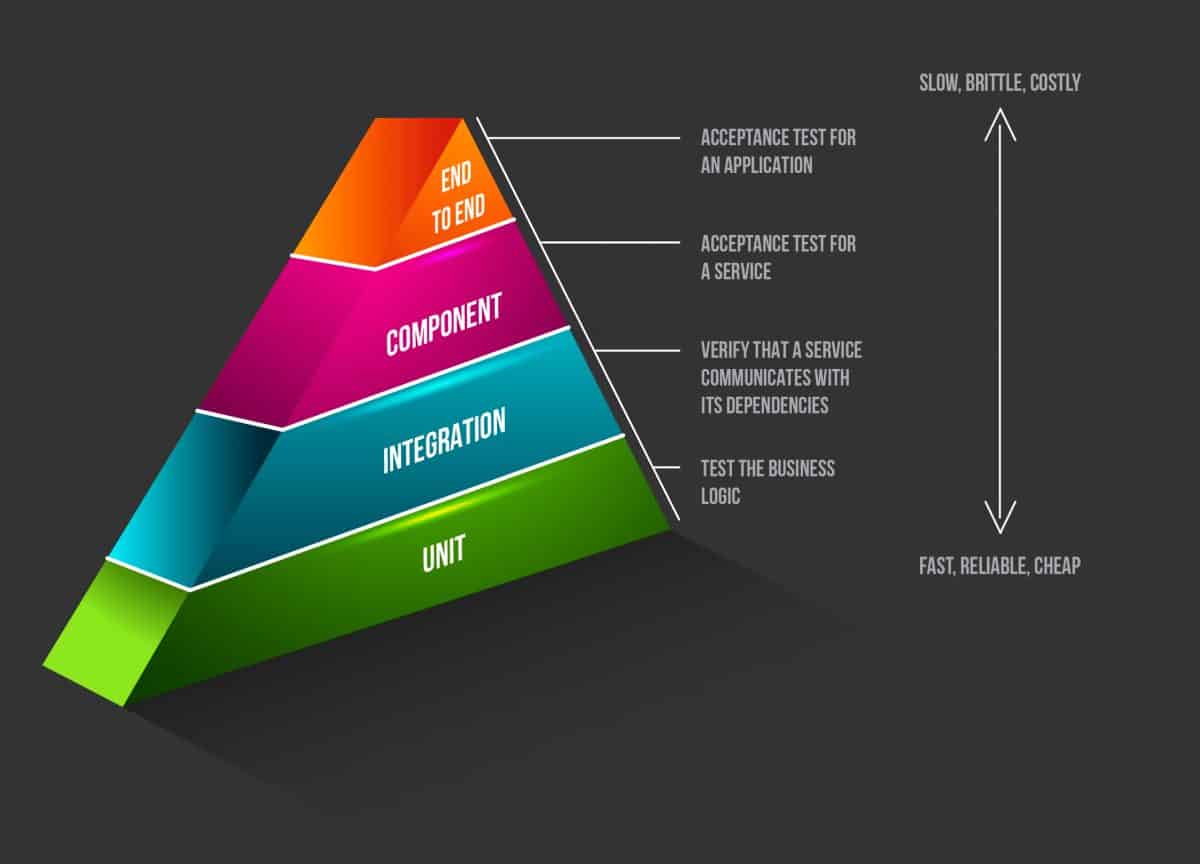

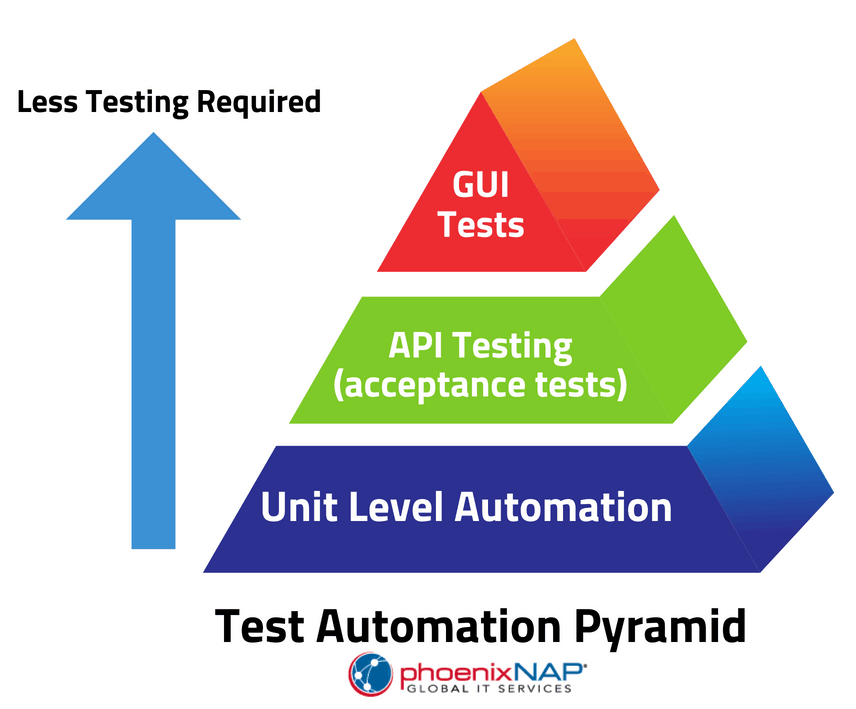

The test pyramid is an easy concept that helps us identify the effort required when writing tests, and where the number of tests should decrease if granularity decreases. It also applies when considering continuous testing for microservices.

To make the topic more concrete, we will tackle the testing of a sample microservice using Spring Boot and Java. Microservice architectures are, by construction, more complicated than monolithic architectures. Nonetheless, we will keep the focus on the type of tests and not on the architecture. Our snippets are based on a minimal project composed of one API-driven microservice owning a data store using MongoDB.

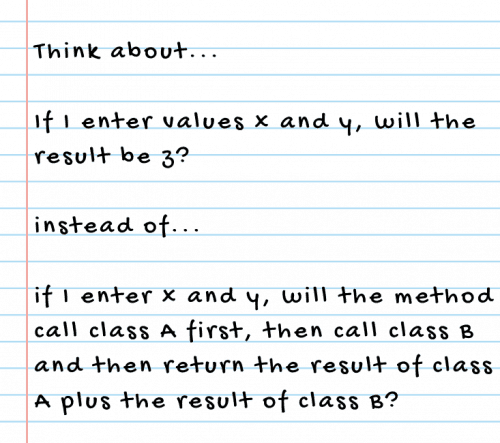

Unit tests

Unit tests should be the majority of tests since they are fast, reliable, and easy to maintain. These tests are also called white-box tests. The engineer implementing them is familiar with the logic and is writing the test to validate the module specifications and check the quality of code.

The focus of these tests is a small part of the system in isolation, i.e., the Class Under Test (CUT). The Single Responsibility Principle is a good guideline on how to manage code relating to functionality.

The most common form of a unit test is a “solitary unit test.” It does not cross any boundaries and does not need any collaborators apart from the CUT.

As outlined by Bill Caputo, databases, messaging channels, or other systems are the boundaries; any additional class used or required by the CUT is a collaborator. A unit test should never cross a boundary. When making use of collaborators, one is writing a “sociable unit test.” Using mocks for dependencies used by the CUT is a way to test sociable code with a “solitary unit test.”

In traditional software development models, developer testing was not yet widely adopted, and testing happened completely out of sync with development. Achieving a high code coverage rating was considered a key indicator of test suite confidence.
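The idea of replacing a collaborator so that the test stays solitary can be sketched with plain Java and a hand-written stub. All names here (`TaskLookup`, `TaskPrioritizer`, the labels) are hypothetical and are not part of the sample project. In a Spring Boot project one would more likely use a mocking library, which is left out so the sketch runs on the JDK alone.

```java
// Hypothetical names throughout; the article's sample project is not reproduced here.
interface TaskLookup {                 // collaborator that would normally reach a boundary
    boolean isUrgent(String taskId);
}

class TaskPrioritizer {                // the Class Under Test (CUT)
    private final TaskLookup lookup;

    TaskPrioritizer(TaskLookup lookup) {
        this.lookup = lookup;
    }

    String labelFor(String taskId) {
        return lookup.isUrgent(taskId) ? "DO-NOW" : "LATER";
    }
}

class SolitaryUnitTestSketch {
    static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }

    public static void main(String[] args) {
        // The stub replaces the real collaborator, so no boundary is crossed.
        TaskLookup stub = new TaskLookup() {
            @Override
            public boolean isUrgent(String taskId) {
                return "42".equals(taskId);
            }
        };
        TaskPrioritizer cut = new TaskPrioritizer(stub);

        check("DO-NOW".equals(cut.labelFor("42")), "urgent task should be labeled DO-NOW");
        check("LATER".equals(cut.labelFor("7")), "non-urgent task should be labeled LATER");
        System.out.println("solitary unit test passed");
    }
}
```

The assertions target the observable label rather than how `TaskPrioritizer` arrives at it, so the test should survive a refactoring of the class internals.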

With the introduction of Agile and short iterative cycles, it’s evident now that previous test models no longer work. Frequent changes are expected continuously. It is much more critical to test observable behavior rather than having all code paths covered. Unit tests should be more about assertions than code coverage because the aim is to verify that the logic is working as expected.

It is useless to have a component with loads of tests and a high percentage of code coverage when tests do not have proper assertions. Applying a more Behavior-Driven Development (BDD) approach ensures that tests are verifying the end state and that the behavior matches the requirements set by the business. The advantage of having focused tests with a well-defined scope is that it becomes easier to identify the cause of failure. BDD tests give us higher confidence that a failure was a consequence of a change in feature behavior. Tests that focus mainly on code coverage offer less confidence, since there is a higher risk that a failure stems from changes to the tests themselves, which are tied to the implementation details of code paths.

Tests should follow Martin Fowler’s suggestion when he stated the following (in Refactoring: Improving the Design of Existing Code, Second Edition. Kent Beck, and Martin Fowler. Addison-Wesley. 2018):

Another reason to focus less on minor implementation details is refactoring. During refactoring, unit tests should be there to give us confidence and not slow down work. A change in the implementation of the collaborator might result in a test failure, which might make tests harder to maintain. It is highly recommended to keep a minimum of sociable unit tests. This is especially true when such tests might slow down the development life cycle with the possibility that tests end up ignored. An excellent situation to include a sociable unit test is negative testing, especially when dealing with behavior verification.

Integration tests

One of the most significant challenges with microservices is testing their interaction with the rest of the infrastructure services, i.e., the boundaries that the particular CUT depends on, such as databases or other services. The test pyramid clearly shows that there should be fewer integration tests than unit tests but more than component and end-to-end tests. These other types of tests might be slower, harder to write and maintain, and quite fragile when compared to unit tests. Crossing boundaries might have an impact on performance and execution time due to network and database access; still, they are indispensable, especially in the DevOps culture.

In a Continuous Deployment scope, narrow integration tests are favored over broad integration tests. The latter are very close to end-to-end tests in that they require the actual services to be running, rather than test doubles of those services, to test the code interactions. The main goal is to build manageable, operative tests in a fast, easy, and resilient fashion. Integration tests focus on the interaction of the CUT with one service at a time. Our focus is on narrow integration tests, which verify that the interaction between a pair of services is as expected, where a service can be either an infrastructure service or any other service.
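A narrow integration test in this sense could look like the sketch below: the CUT talks to exactly one service, and that service is replaced by a test double that keeps state in memory. `TaskStore`, `InMemoryTaskStore`, and `TaskService` are hypothetical names chosen for this example and are not the classes of the sample project.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

interface TaskStore {                           // the one boundary the CUT talks to
    void save(String id, boolean urgent);
    List<String> findUrgentIds();
}

class InMemoryTaskStore implements TaskStore {  // test double standing in for the real store
    private final Map<String, Boolean> rows = new LinkedHashMap<>();

    @Override
    public void save(String id, boolean urgent) {
        rows.put(id, urgent);
    }

    @Override
    public List<String> findUrgentIds() {
        List<String> ids = new ArrayList<>();
        for (Map.Entry<String, Boolean> row : rows.entrySet()) {
            if (row.getValue()) {
                ids.add(row.getKey());
            }
        }
        return ids;
    }
}

class TaskService {                             // the CUT
    private final TaskStore store;

    TaskService(TaskStore store) {
        this.store = store;
    }

    void addTask(String id, boolean urgent) {
        if (id == null || id.isEmpty()) {
            throw new IllegalArgumentException("id is required");
        }
        store.save(id, urgent);
    }

    List<String> urgentTasks() {
        return store.findUrgentIds();
    }
}

class NarrowIntegrationSketch {
    public static void main(String[] args) {
        // A fresh double per run keeps the test repeatable.
        TaskService service = new TaskService(new InMemoryTaskStore());
        service.addTask("1", true);
        service.addTask("2", false);
        if (!service.urgentTasks().equals(Collections.singletonList("1"))) {
            throw new AssertionError("only task 1 should be reported as urgent");
        }
        System.out.println("narrow integration test passed");
    }
}
```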

Persistence tests

A controversial type of test is the persistence-layer test, whose primary aim is to test the queries and their effect on test data. One option is the use of in-memory databases. Some might consider a test against an in-memory database a sociable unit test, since it is self-contained, idempotent, and fast. The test runs against a database created with the desired configuration. After the test runs and assertions are verified, the data store is automatically scrubbed once the JVM exits, due to its ephemeral nature. Keep in mind that a connection to a different service still takes place, so such a test is considered a narrow integration test. In a Test-Driven Development (TDD) approach, such tests are essential since test suites should run within seconds. In-memory databases are a valid trade-off to ensure that tests are kept as fast as possible and not ignored in the long run.

```java
@Before
public void setup() throws Exception {
    try {
        // this will download the version of mongo marked as production. One should
        // always mention the version that is currently being used by the SUT
        String ip = "localhost";
        int port = 27017;

        IMongodConfig mongodConfig = new MongodConfigBuilder().version(Version.Main.PRODUCTION)
                .net(new Net(ip, port, Network.localhostIsIPv6())).build();

        MongodStarter starter = MongodStarter.getDefaultInstance();
        mongodExecutable = starter.prepare(mongodConfig);
        mongodExecutable.start();
    } catch (IOException e) {
        e.printStackTrace();
    }
}
```

Snippet 1: Installation and startup of the In-memory MongoDB

The above is not a full integration test since an in-memory database does not behave exactly like the production database server. Therefore, it is not a replica of the “real” MongoDB server, which would be the case if one opts for broad integration tests.

Another option for persistence integration tests is to have broad tests running connected to an actual database server or with the use of containers. Containers ease the pain since, on request, one provisions the database, compared to having a fixed server. Keep in mind such tests are time-consuming, and categorizing tests is a possible solution. Since these tests depend on another service running apart from the CUT, they are considered system tests. These tests are still essential, and by using categories, one can better determine when specific tests should run to get the best balance between cost and value. For example, during the development cycle, one might run only the narrow integration tests using the in-memory database. Nightly builds could also run tests falling under a category such as broad integration tests.

```java
@Category(FastIntegration.class)
@RunWith(SpringRunner.class)
@DataMongoTest
public class DailyTaskRepositoryInMemoryIntegrationTest {
    // ...
}

@Category(SlowIntegration.class)
@RunWith(SpringRunner.class)
@DataMongoTest(excludeAutoConfiguration = EmbeddedMongoAutoConfiguration.class)
public class DailyTaskRepositoryIntegrationTest {
    // ...
}
```

Snippet 2: Using categories to differentiate the types of integration tests
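How a build decides which category to run is not shown in Snippet 2. The sketch below illustrates the selection idea with a home-made annotation and reflection, using the JDK only so that it runs as-is. `TestCategory`, the marker interfaces, and the two placeholder classes are hypothetical stand-ins. In a JUnit 4 project, the `Categories` runner with `@IncludeCategory` serves this purpose.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Stand-in for JUnit 4's @Category, written with the JDK only.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface TestCategory {
    Class<?> value();
}

interface FastIntegrationMarker {}
interface SlowIntegrationMarker {}

@TestCategory(FastIntegrationMarker.class)
class InMemoryRepositoryCheck {}      // placeholder for a fast, in-memory test class

@TestCategory(SlowIntegrationMarker.class)
class RealServerRepositoryCheck {}    // placeholder for a slow, real-server test class

class CategorySelector {
    // Returns the simple names of the candidate classes tagged with the given category.
    static List<String> select(Class<?> category, List<Class<?>> candidates) {
        List<String> selected = new ArrayList<>();
        for (Class<?> candidate : candidates) {
            TestCategory tag = candidate.getAnnotation(TestCategory.class);
            if (tag != null && tag.value() == category) {
                selected.add(candidate.getSimpleName());
            }
        }
        return selected;
    }

    public static void main(String[] args) {
        List<Class<?>> all = Arrays.<Class<?>>asList(
                InMemoryRepositoryCheck.class, RealServerRepositoryCheck.class);
        // Development cycle: run only the fast category.
        System.out.println(select(FastIntegrationMarker.class, all)); // prints [InMemoryRepositoryCheck]
        // Nightly build: run the slow category as well.
        System.out.println(select(SlowIntegrationMarker.class, all)); // prints [RealServerRepositoryCheck]
    }
}
```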

Consumer-driven tests

Inter-Process Communication (IPC) mechanisms are one central aspect of distributed systems based on a microservices architecture. This setup raises various complications during the creation of test suites. In addition to that, in an Agile team, changes are continuously in progress, including changes in APIs or events. No matter which IPC mechanism the system is using, there is the presence of a contract between any two services. There are various types of contracts, depending on which mechanism one chooses to use in the system. When using APIs, the contract is the HTTP request and response, while in the case of an event-based system, the contract is the domain event itself.

A primary goal when testing microservices is to ensure those contracts are well defined and stable at any point in time. In a TDD top-down approach, these are the first tests to be covered. A fundamental integration test ensures that the consumer has quick feedback as soon as a client does not match the real state of the producer to whom it is talking.

These tests should be part of the regular deployment pipeline. Their failure would allow the consumers to become aware that a change on the producer side has occurred, and that changes are required to achieve consistency again. Without the need to write intricate end-to-end tests, ‘consumer-driven contract testing’ would target this use case.
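Stripped of tooling, the core of such a check is small: the consumer states which fields it relies on, and the producer's response is verified against that list. The sketch below is a simplified illustration with hypothetical names (`ContractCheckSketch`, `violations`). It is not how the spring-cloud-contract plugin works internally.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class ContractCheckSketch {
    // Returns the list of violations; an empty list means the response
    // satisfies the consumer's contract.
    static List<String> violations(Map<String, Class<?>> contract, Map<String, Object> response) {
        List<String> problems = new ArrayList<>();
        for (Map.Entry<String, Class<?>> field : contract.entrySet()) {
            Object value = response.get(field.getKey());
            if (value == null) {
                problems.add("missing field: " + field.getKey());
            } else if (!field.getValue().isInstance(value)) {
                problems.add("wrong type for field: " + field.getKey());
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        // The consumer relies on these two fields of the task response.
        Map<String, Class<?>> contract = new LinkedHashMap<>();
        contract.put("taskName", String.class);
        contract.put("isUrgent", Boolean.class);

        Map<String, Object> current = new LinkedHashMap<>();
        current.put("taskName", "newTask");
        current.put("isUrgent", true);
        System.out.println(violations(contract, current)); // prints []

        // The producer renames a field: the consumer finds out immediately.
        Map<String, Object> changed = new LinkedHashMap<>();
        changed.put("taskName", "newTask");
        changed.put("urgent", true);
        System.out.println(violations(contract, changed)); // prints [missing field: isUrgent]
    }
}
```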

The following is a sample of a contract verifier generated by the spring-cloud-contract plugin.

```java
@Test
public void validate_add_New_Task() throws Exception {
    // given:
    MockMvcRequestSpecification request = given()
            .header("Content-Type", "application/json;charset=UTF-8")
            .body("{\"taskName\":\"newTask\",\"taskDescription\":\"newDescription\",\"isComplete\":false,\"isUrgent\":true}");

    // when:
    ResponseOptions response = given().spec(request).post("/tasks");

    // then:
    assertThat(response.statusCode()).isEqualTo(200);
    assertThat(response.header("Content-Type")).isEqualTo("application/json;charset=UTF-8");

    // and:
    DocumentContext parsedJson = JsonPath.parse(response.getBody().asString());
    assertThatJson(parsedJson).field("['taskName']").isEqualTo("newTask");
    assertThatJson(parsedJson).field("['isUrgent']").isEqualTo(true);
    assertThatJson(parsedJson).field("['isComplete']").isEqualTo(false);
    assertThatJson(parsedJson).field("['id']").isEqualTo("3");
    assertThatJson(parsedJson).field("['taskDescription']").isEqualTo("newDescription");
}
```

Snippet 3: Contract Verifier auto-generated by the spring-cloud-contract plugin

A BaseClass written in the producer defines what kind of response to expect for the various types of requests by using the standalone setup. The packaged collection of stubs is available to all consumers so that they can pull them into their implementation. Complexity arises when multiple consumers make use of the same contract; therefore, the producer needs to have a global view of the service contracts required.

```java
@RunWith(SpringRunner.class)
@SpringBootTest
public class ContractBaseClass {

    @Autowired
    private DailyTaskController taskController;

    @MockBean
    private DailyTaskRepository dailyTaskRepository;

    @Before
    public void before() {
        RestAssuredMockMvc.standaloneSetup(this.taskController);

        // Stub the repository so each request gets a known response.
        Mockito.when(this.dailyTaskRepository.findById("1")).thenReturn(
                Optional.of(new DailyTask("1", "Test", "Description", false, null)));

        // ...

        Mockito.when(this.dailyTaskRepository.save(
                new DailyTask(null, "newTask", "newDescription", false, true))).thenReturn(
                new DailyTask("3", "newTask", "newDescription", false, true));
    }
}
```

Snippet 4: The producer’s BaseClass defining the response expected for each request

On the consumer side, we included the spring-cloud-starter-contract-stub-runner dependency and configured the test to use the stubs binary. The test runs against the stubs generated by the producer, either at a pinned version or always at the latest one, depending on configuration. The stub artifact links the client with the producer and ensures that both work against the same contract. Any change on the producer side surfaces in these tests, so the consumer can tell whether the producer has changed.

@SpringBootTest(classes = TodayAskApplication.class)
@RunWith(SpringRunner.class)
@AutoConfigureStubRunner(ids = "com.cwie.arch:today:+:stubs:8080", stubsMode = StubRunnerProperties.StubsMode.LOCAL)
public class TodayClientStubTest {
    . . .
    @Test
    public void addTask_expectNewTaskResponse () {
        Task newTask = todayClient.createTask(
                new Task(null, "newTask", "newDescription", false, true));
        BDDAssertions.then(newTask).isNotNull();
        BDDAssertions.then(newTask.getId()).isEqualTo("3");
        . . .
    }
}

Snippet 5: Consumer injecting the stub version defined by the producer
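The stub id in the annotation packs several settings into one string. As a rough illustration, the sketch below splits such an id into its parts, assuming the groupId:artifactId:version:classifier:port layout that the example id appears to follow, with "+" as the version standing for "always the latest". StubCoordinates is a hypothetical helper written for this explanation and is not part of Spring Cloud Contract; it only handles the full five-part form.

```java
// Hypothetical helper, not a Spring Cloud Contract class: splits a stub id
// into coordinates, assuming groupId:artifactId:version:classifier:port.
final class StubCoordinates {
    final String groupId;
    final String artifactId;
    final String version;
    final String classifier;
    final int port;

    private StubCoordinates(String groupId, String artifactId, String version,
                            String classifier, int port) {
        this.groupId = groupId;
        this.artifactId = artifactId;
        this.version = version;
        this.classifier = classifier;
        this.port = port;
    }

    static StubCoordinates parse(String id) {
        String[] parts = id.split(":");
        if (parts.length != 5) {
            throw new IllegalArgumentException("expected 5 colon-separated parts: " + id);
        }
        return new StubCoordinates(parts[0], parts[1], parts[2], parts[3],
                Integer.parseInt(parts[4]));
    }

    // A "+" version asks for the latest available stubs instead of a pinned release.
    boolean usesLatestVersion() {
        return "+".equals(version);
    }

    public static void main(String[] args) {
        StubCoordinates stub = parse("com.cwie.arch:today:+:stubs:8080");
        System.out.println(stub.artifactId + " latest=" + stub.usesLatestVersion()
                + " port=" + stub.port);
        // prints today latest=true port=8080
    }
}
```

Pinning a concrete version instead of "+" trades automatic detection of producer changes for reproducible consumer builds.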

Such integration tests verify that a provider’s API is still in line with the consumers’ expectations. With mocked unit tests for APIs, by contrast, we stub the API and mock its behavior ourselves. From a consumer’s point of view, these tests ensure that the client behaves as we expect. It is essential to note, however, that they will not fail if the producer changes its API, so it is imperative to define what each test covers.

// the response we expect is represented in the task1.json file
private Resource taskOne = new ClassPathResource("task1.json");
@Autowired
private TodayClient todayClient;
@Test
public void createNewTask_expectTaskIsCreated() {
    WireMock.stubFor(WireMock.post(WireMock.urlMatching("/tasks"))
            .willReturn(WireMock.aResponse()
                    .withHeader(HttpHeaders.CONTENT_TYPE, MediaType.APPLICATION_JSON_UTF8_VALUE)
                    .withStatus(HttpStatus.OK.value())
                    .withBody(transformResourceJsonToString(taskOne))));
    Task tasks = todayClient.createTask(new Task(null, "runUTest", "Run Test", false, true));
    BDDAssertions.then(tasks.getId()).isEqualTo("1");
}

Snippet 6: A consumer test doing assertions on its own defined response

Component tests

Microservice architecture can grow fast, and so the component under test might be integrating with multiple other components and multiple infrastructure services. Until now, we have covered white-box testing with unit tests and narrow integration tests to test the CUT crossing the boundary to integrate with another service.

The fastest type of component testing is the in-process approach, where test doubles and in-memory data stores keep the test within the process boundaries. The main disadvantage of this approach is that the deployable production service is not fully tested; the component has to be wired differently for the test. The preferred method is out-of-process component testing. These tests resemble end-to-end tests, but all external collaborators are replaced with test doubles. As a result, they exercise the fully deployed artifact over real network calls. The test is responsible for properly configuring any external services as stubs.
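For contrast, the in-process approach can be sketched in plain Java. Every type below is a hypothetical stand-in rather than a class from this project: an in-memory store replaces the database, and a recording test double replaces an external collaborator. The wiring inside main is exactly the part that differs from the production deployment.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical names throughout; none of these types come from the article's code base.
final class InProcessComponentSketch {

    // Persistence port; production would back it with a real database.
    interface TaskStore {
        void save(String id, String name);
        Optional<String> findName(String id);
    }

    // In-memory data store that replaces the database during the test.
    static final class InMemoryTaskStore implements TaskStore {
        private final Map<String, String> tasks = new LinkedHashMap<>();

        @Override
        public void save(String id, String name) {
            tasks.put(id, name);
        }

        @Override
        public Optional<String> findName(String id) {
            return Optional.ofNullable(tasks.get(id));
        }
    }

    // External collaborator that production would reach over the network.
    interface Notifier {
        void taskCreated(String id);
    }

    // Test double that records calls instead of sending anything.
    static final class RecordingNotifier implements Notifier {
        final List<String> notified = new ArrayList<>();

        @Override
        public void taskCreated(String id) {
            notified.add(id);
        }
    }

    // The component under test, wired with whatever collaborators it is given.
    static final class TaskComponent {
        private final TaskStore store;
        private final Notifier notifier;

        TaskComponent(TaskStore store, Notifier notifier) {
            this.store = store;
            this.notifier = notifier;
        }

        void createTask(String id, String name) {
            store.save(id, name);
            notifier.taskCreated(id);
        }

        Optional<String> taskName(String id) {
            return store.findName(id);
        }
    }

    public static void main(String[] args) {
        RecordingNotifier notifier = new RecordingNotifier();
        TaskComponent component = new TaskComponent(new InMemoryTaskStore(), notifier);
        component.createTask("1", "Test");
        System.out.println(component.taskName("1")); // prints Optional[Test]
        System.out.println(notifier.notified);       // prints [1]
    }
}
```

Nothing here crosses a process or network boundary, which is why such tests are fast and also why they say little about the deployed artifact.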

@Ignore
@RunWith(SpringRunner.class)
@SpringBootTest(classes = { TodayConfiguration.class, TodayIntegrationApplication.class,
        CloudFoundryClientConfiguration.class })
public class BaseFunctionalitySteps {
    @Autowired
    private CloudFoundryOperations cf;
    private static File manifest = new File(".\\manifest.yml");
    @Autowired
    private TodayClient client;
    // Any stubs required
    . . .
    public void setup() {
        cf.applications().pushManifest(PushApplicationManifestRequest.builder()
                .manifest(ApplicationManifestUtils.read(manifest.toPath()).get(0)).build()).block();
    }
    . . .
    // Any calls required by tests
    public void requestForAllTasks() {
        this.client.getTodoTasks();
    }
}

Snippet 7: Deployment of the manifest on CloudFoundry and any calls required by tests

Cloud Foundry is one of the options used for container-based testing architectures. “It is an open-source cloud application platform that makes it faster and easier to build, test, deploy, and scale applications.” The following is the manifest.yml, a file that defines the configuration of all applications in the system. This file is used to deploy the actual service in the production-ready format on the Pivotal organization’s space where the MongoDB service is already set up, matching the production version.

---
applications:
- name: today
  instances: 1
  path: ../today/target/today-0.0.1-SNAPSHOT.jar
  memory: 1024M
  routes:
  - route: today.cfapps.io
  services:
  - mongo-it

Snippet 8: Deployment of one instance of the service depending on mongo service

When opting for the out-of-process approach, keep in mind that actual boundaries are under test, and thus, tests end up being slower since there are network and database interactions. Ideally, those test suites live in a separate module so that they can run in a different Maven phase instead of the usual ‘test’ phase.

Since the emphasis of the tests is on the component itself, tests cover the primary responsibilities of the component while purposefully neglecting any other part of the system.

Cucumber, a software tool that supports Behavior-Driven Development, is an option to define such behavioral tests. Its plain-language syntax, Gherkin, ensures that customers can easily understand all tests described. The following Cucumber feature file ensures that our component implementation matches the business requirements for that particular feature.

Feature: Tasks
  Scenario: Retrieving one task from list
    Given the component is running
    And the data consists of one or more tasks
    When user requests for task x
    Then the correct task x is returned
  Scenario: Retrieving all tasks
    Given the data consists of one or more tasks
    When user requests for all tasks
    Then all tasks in database are returned
  Scenario: Negative Test
    Given the component is not running
    When user requests for task x
    Then it fails with response 404

Snippet 9: A feature file defining BDD tests

End-to-end tests

Similar to component tests, the aim of these end-to-end tests is not to perform code coverage but to ensure that the system meets the business scenarios requested. The difference is that in end-to-end testing, all components are up and running during the test.

As per the testing pyramid diagram, the number of end-to-end tests decreases further, given how slow they can be. The first step is to have the setup running; for this example, we will be leveraging Docker.

version: '3.7'
services:
  today-app:
    image: today-app:1
    container_name: "today-app"
    build:
      context: ./
      dockerfile: DockerFile
    environment:
      - SPRING_DATA_MONGODB_HOST=mongodb
    volumes:
      - /data/today-app
    ports:
      - "8082:8080"
    links:
      - mongodb
    depends_on:
      - mongodb
  mongodb:
    image: mongo:3.2
    container_name: "mongodb"
    restart: always
    environment:
      - AUTH=no
      - MONGO_DATA_DIR=/data/db
      - MONGO_LOG_DIR=/dev/log
    volumes:
      - ./data:/data
    ports:
      - 27017:27017
    command: mongod --smallfiles --logpath=/dev/null # --quiet

Snippet 10: The docker.yml definition used to deploy the defined service and the specified version of mongo as containers

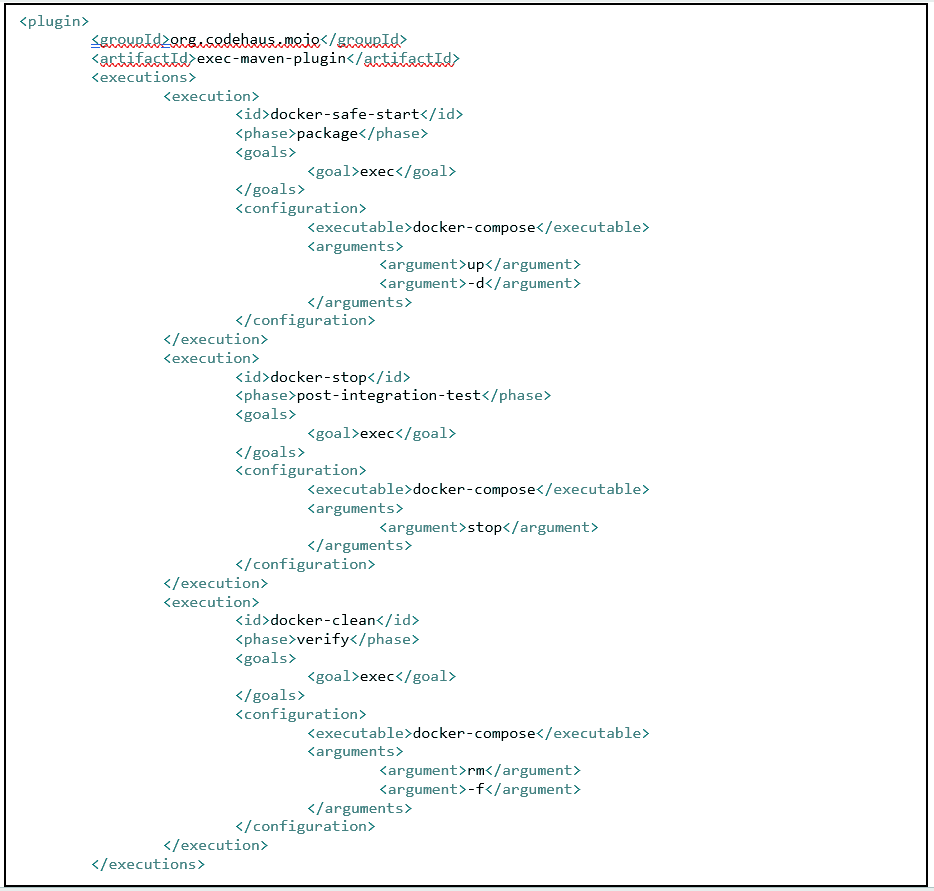

As with component tests, it makes sense to keep end-to-end tests in a separate module and a different phase. The exec-maven-plugin was used to deploy all required components, execute our tests, and finally clean up and tear down our test environment.
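Between deploying the containers and executing the tests, it helps to wait until the system actually answers. The following is a minimal sketch of such a readiness check using the JDK's built-in HTTP client (Java 11 or later). The class name, the retry settings, and the assumption that a GET on /tasks returns 200 once the service is up are all illustrative; only the host port 8082 is taken from the compose file above.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical readiness check to run after deployment and before the end-to-end suite.
final class EndToEndReadiness {

    // Polls the URL until it answers with HTTP 200 or the attempts run out.
    static boolean waitUntilUp(URI url, int attempts, Duration pause) {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(2))
                .build();
        HttpRequest request = HttpRequest.newBuilder(url)
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        for (int attempt = 1; attempt <= attempts; attempt++) {
            try {
                if (attempt > 1) {
                    Thread.sleep(pause.toMillis()); // give the containers time to start
                }
                HttpResponse<Void> response =
                        client.send(request, HttpResponse.BodyHandlers.discarding());
                if (response.statusCode() == 200) {
                    return true;
                }
            } catch (IOException e) {
                // Not reachable yet (connection refused or timed out); try again.
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Assumed endpoint: the today-app container published on host port 8082.
        URI url = URI.create(args.length > 0 ? args[0] : "http://localhost:8082/tasks");
        boolean up = waitUntilUp(url, 30, Duration.ofSeconds(2));
        System.out.println(up ? "system is up" : "system did not start in time");
        System.exit(up ? 0 : 1); // a non-zero exit code lets the build step fail fast
    }
}
```

Failing fast here keeps a broken deployment from showing up later as a wall of unrelated end-to-end test failures.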

Since this is a broad-stack test, only a small selection of tests is executed per feature, chosen by perceived business risk. The previous types of tests covered low-level details, that is, whether a user story matches the acceptance criteria. A failure in these tests should also immediately stop a release, as it might cause severe business repercussions.

Conclusion

Handoff-centric testing often ends up being a very long process, taking up to weeks until all bugs are identified and fixed and a new deployment is readied. Feedback is only received after a release is made, which makes the lifespan of a version our quickest possible turnaround time.

The continuous testing approach ensures immediate feedback: depending on the outcome of the tests run, the DevOps engineer knows right away whether the implemented feature is production-ready. From unit tests up to end-to-end tests, they all help speed up the assessment process.

Microservices architecture helps create faster rollouts to production since it is domain-driven. It ensures failure isolation and increases ownership. Another reason to adopt such an architecture arises when multiple teams work on the same project: it keeps teams independent so that they do not interfere with each other’s work.

Improve testability by moving toward continuous testing. Each microservice has a well-defined domain, and its scope should be limited to one actor. The test cases applied are specific and more concise, and tests are isolated, facilitating releases and faster deployments.

Following the TDD approach, no code is written until there is a failing test. Confidence grows each time an iterative implementation makes that test pass. Testing therefore happens in parallel with the actual implementation, and all the tests mentioned above are executed before changes reach a staging environment. Continuous testing keeps evolving until the change enters the next release stage, the staging environment, where the focus switches to more exhaustive testing such as load testing.

Agile, DevOps, and continuous delivery require continuous testing. The key benefit is the immediate feedback produced by automated tests. Without it, defects can slip through, and the repercussions can affect user experience and carry high-risk business consequences. For more information about continuous testing, contact phoenixNAP today.

Cloud-Native Application Architecture: The Future of Development?

Cloud native application architecture allows software and IT to work together in a faster modern environment.

Cloud-native applications are defined by how they are built, packaged, and distributed rather than by where they are created and stored. When creating these applications, you retain complete control and have the final say in the process.

Even if you are not currently hosting your application in the cloud, this article will influence how you develop modern applications moving forward. Read on to find out what cloud native is, how it works, and its future implications.

What is Cloud-Native? Defined

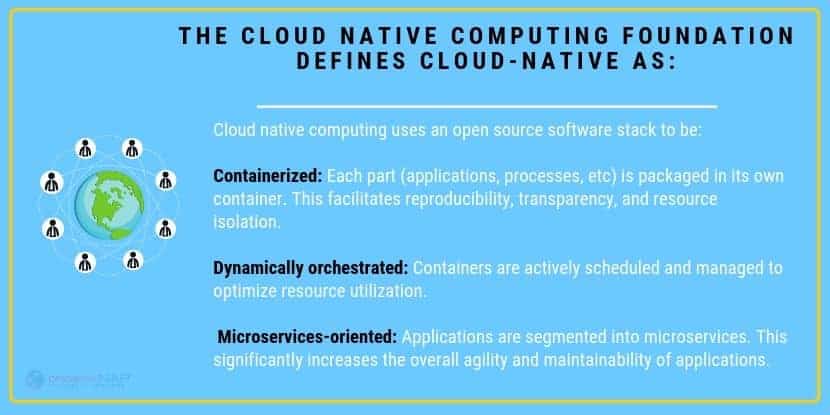

In terms of applications, cloud native refers to container-based environments, or apps packaged as microservices. Cloud-native technologies build applications out of several services that are packaged and deployed together and managed on cloud infrastructure using DevOps processes that provide uninterrupted delivery workflows. Microservices are the architectural approach behind this: an application is built as a set of smaller, bundled services.

What is a Cloud-Native Architecture?

Cloud-native architecture is built specifically to run in the cloud.

Cloud-native apps start as packaged software called containers. Containers run in a virtual environment, isolated from their original environments, which makes them independent and portable. You can run your personalized design through test systems to see where it’s located. Once you’ve tested it, you can edit it to add or remove options.

Cloud-native development allows you to build and update applications quickly while improving quality and reducing risk. It is efficient, responsive, and scalable. The resulting apps are fault-tolerant and can run anywhere: in public or private environments, or in hybrid clouds. You can test and build your application until it is precisely how you want it to be. You can easily outsource any development aspects in which you are not an expert.

The architecture of your system can be built up with the help of microservices. With them, you can set up the smaller parts of your app individually instead of reworking the entire app all at once. More specifically, with DevOps and containers, applications become easier to update and release. A collection of loosely connected services, such as microservices, is easier to upgrade piece by piece than an application that must wait for one significant release, which takes more time and effort.

Lastly, you’ll want to make sure your application has access to the elasticity of the cloud. This elasticity allows your developers to push code to production much faster than in traditional server-based models. You can move and scale your app’s resources at any time.

What Are The Characteristics of Cloud Native Applications?

Now that you know the basics of cloud-native apps, here are a few design principles to discuss with your developer during the development stages:

Develop With The Best Languages And Frameworks

All services of cloud-native applications are made using the best languages and frameworks. Make sure you can choose the language and framework that suit your app best.

Build With APIs For Collaboration & Interaction