12 Docker Container Monitoring Tools You Should Be Using

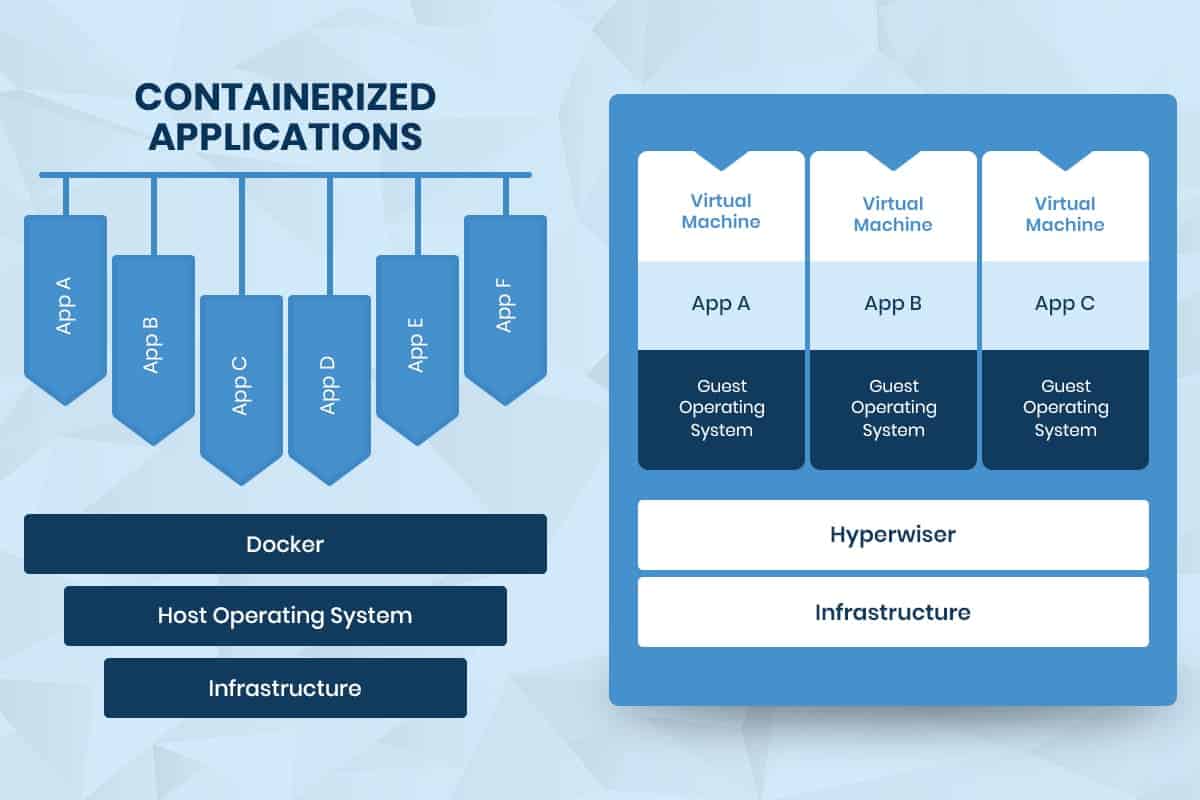

Containers are the industry standard for hosting applications. The benefits they offer cloud-based microservices are substantial and have allowed organizations, both large and small, to deploy hundreds or even thousands of containers to power their applications.

What is Container Monitoring?

A typical company deploying its applications in containers could have anywhere from a few to thousands of containers running at any given time. Containers with complex configurations can be deployed and removed dynamically depending on the expected scale and load. This dynamic scaling makes it challenging to track their performance and overall health on an ongoing basis.

That is why monitoring the performance of containerized applications is essential to ensure application continuity. Effective monitoring and alerting rely on analyzing metrics obtained from many sources, such as host and daemon logs and monitoring agents installed on each node.

Why You Need to Monitor Docker Containers

The health of an organization’s containerized applications directly impacts the efficacy of its business. Monitoring application performance ensures that both the containerized applications and the infrastructure are always at optimum levels.

Monitoring historical data, such as CPU usage, also helps you recognize trends that lead to recurring issues or bottlenecks. Use these metrics to forecast resource needs more accurately; this leads to better resource allocation and deployments.
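As a rough illustration of trend-based forecasting, the sketch below fits an ordinary least-squares line to evenly spaced CPU usage samples and projects it forward. It assumes you have already exported, for example, hourly average CPU usage for one container. The function names and sample values are hypothetical, and real capacity planning would usually also account for daily or weekly seasonality.

```python
def fit_linear_trend(samples: list[float]) -> tuple[float, float]:
    """Fit y = slope * t + intercept by ordinary least squares, with t = 0, 1, 2, ..."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    t_mean = (n - 1) / 2
    y_mean = sum(samples) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(samples))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    intercept = y_mean - slope * t_mean
    return slope, intercept


def forecast(samples: list[float], steps_ahead: int) -> float:
    """Project the fitted trend `steps_ahead` intervals past the last sample."""
    slope, intercept = fit_linear_trend(samples)
    return slope * (len(samples) - 1 + steps_ahead) + intercept


if __name__ == "__main__":
    # Hypothetical hourly CPU usage (%) for one container.
    cpu_percent = [40.0, 42.5, 45.0, 47.5, 50.0]
    print(forecast(cpu_percent, steps_ahead=4))  # → 60.0
```

In this example, a steady rise of 2.5 percentage points per hour projects to 60% four hours after the last sample. A signal like that could prompt you to raise a limit or add capacity before a bottleneck appears.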

Ongoing monitoring keeps app performance at its peak. It helps you detect and solve problems early, so you can be proactive and avoid risks in production. Monitoring the whole environment also lets you implement changes safely.

Below you will find the top twelve monitoring tools we recommend for Docker. Take a closer look at the analytics to see what’s supported and suits your needs best. Take advantage of a free trial before you commit.

12 Best Monitoring Tools for Docker

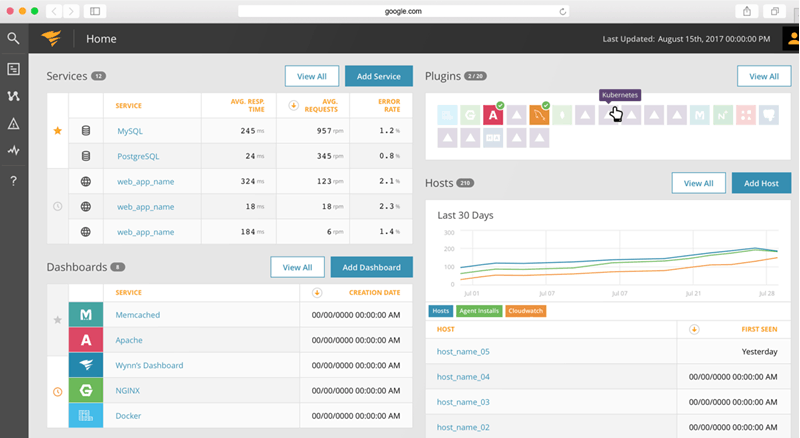

1. AppOptics Docker Monitoring with APM

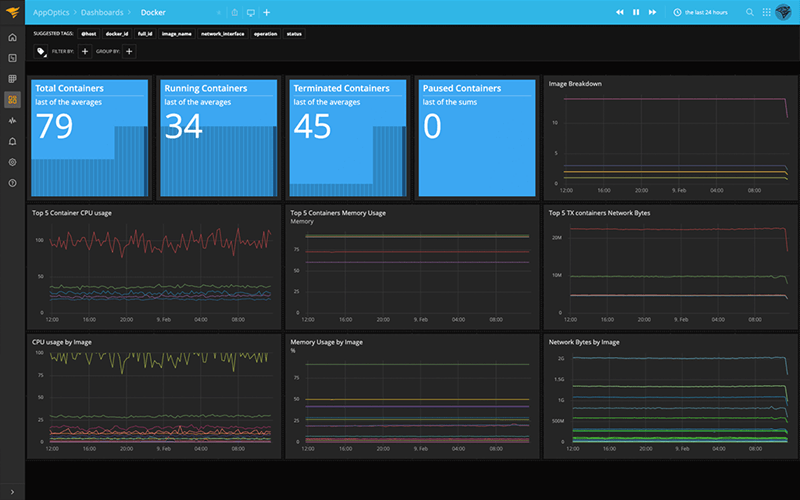

AppOptics provides a robust monitoring solution for Docker containers. It is a SaaS-based integration with Docker that does not require any modifications to your images. AppOptics achieves this by picking up metrics directly from the Docker daemon.

AppOptics' pre-configured Docker monitoring dashboard visualizes per-container CPU, memory, and network metrics, among others. The Docker integration can be set up quickly through the AppOptics integrations tab and connects with all hosts automatically. Once the agent is active, data starts flowing to the dashboard.

The dashboard visualizes each containerized application in a process-isolated manner, so you can identify any unusual behavior. AppOptics allows monitoring across on-premises and distributed cloud environments from the same dashboard.

2. SolarWinds Server & Application Monitor

SolarWinds provides tracking for key performance metrics such as CPU, memory, and uptime of individual Docker containers through a simple dashboard. Instant alerts regarding depleted resources are a salient feature provided by SolarWinds.

One of the distinguishing features of SolarWinds is its ability to detect issues directly from the container layer, which gives it an edge over other traditional server monitors.

Shared resources are one of the major performance concerns for containers, especially when multiple containers are in use. One of SolarWinds' core strengths is its ability to isolate individual containers and monitor them in relation to their neighboring nodes.

SolarWinds tracks average and peak loads to provide the forecasts needed for capacity planning, which it displays on a separate dashboard.
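SolarWinds does not document its capacity-planning calculations in this article, so the following is only a generic sketch of the idea. It summarizes a series of load samples into an average, a peak, and a nearest-rank 95th percentile, then checks how much headroom remains against a limit. The function name, the limit, and the sample values are hypothetical.

```python
import math
from statistics import mean


def summarize_load(samples: list[float], limit: float) -> dict[str, float]:
    """Summarize load samples (e.g. CPU cores in use) for capacity planning."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Nearest-rank 95th percentile: the value at position ceil(0.95 * n) in sorted order.
    rank = math.ceil(0.95 * len(ordered))
    p95 = ordered[rank - 1]
    peak = ordered[-1]
    return {
        "average": mean(ordered),
        "p95": p95,
        "peak": peak,
        # Fraction of the limit still free at peak load (negative means over the limit).
        "peak_headroom": (limit - peak) / limit,
    }


if __name__ == "__main__":
    # Hypothetical CPU cores in use, sampled every minute, against a 4-core limit.
    cores = [1.0, 1.5, 2.0, 2.0, 2.5, 3.0, 1.0, 1.5, 2.0, 3.5]
    print(summarize_load(cores, limit=4.0))
```

Sizing for the average alone hides short spikes. The peak, by contrast, may be a one-off outlier. Comparing all three values against the limit is a common way to decide how much capacity to reserve.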

To find out more about container resource usage, read our Knowledge Base article on how to set a container's memory and CPU usage limit.
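For reference, when you set limits with the docker run flags --memory and --cpus, the Docker Engine API receives them in the container's HostConfig. Memory is expressed in bytes, and NanoCpus is the CPU quota in units of 10^-9 CPUs. The sketch below converts human-friendly values into that shape. The helper names are hypothetical, and the unit parser is a simplified assumption that accepts only the b, k, m, and g suffixes in binary units.

```python
_UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def parse_memory(value: str) -> int:
    """Convert a size such as '512m' or '2g' to bytes (binary units, as the Docker CLI uses)."""
    value = value.strip().lower()
    if value and value[-1] in _UNITS:
        number, unit = value[:-1], value[-1]
    else:
        number, unit = value, "b"
    return int(float(number) * _UNITS[unit])


def host_config_limits(memory: str, cpus: float) -> dict[str, int]:
    """Build the resource-limit fields of a Docker Engine API HostConfig."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return {
        "Memory": parse_memory(memory),
        # NanoCpus expresses the CPU quota in billionths of a CPU: 1.5 CPUs -> 1_500_000_000.
        "NanoCpus": round(cpus * 1_000_000_000),
    }


if __name__ == "__main__":
    print(host_config_limits("512m", 1.5))
    # → {'Memory': 536870912, 'NanoCpus': 1500000000}
```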

3. Prometheus

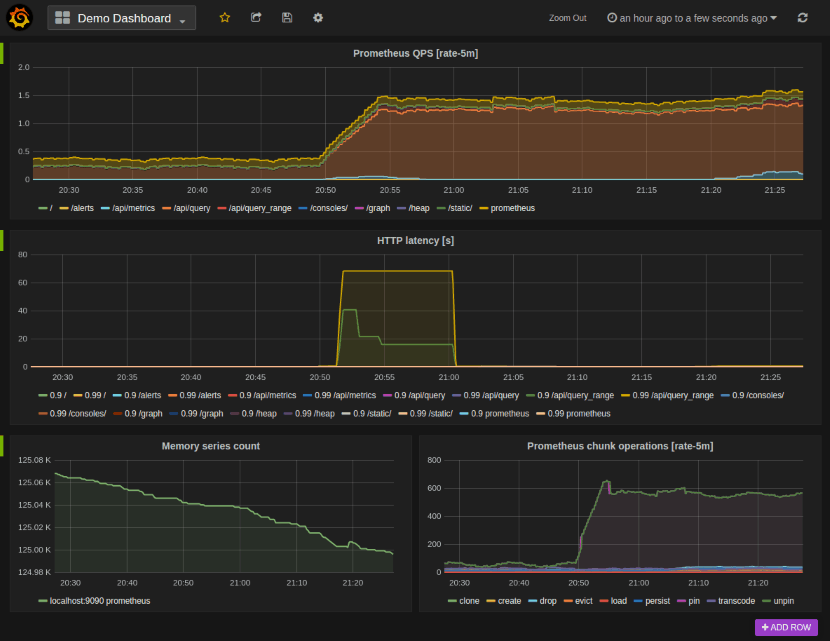

Prometheus is one of the best-known open-source Docker monitoring tools and one of the three solutions recommended by Docker. It is available as an image that is easy to run as a Docker container. However, some advanced configurations are recommended for production environments, so it is not the easiest tool to configure for larger setups.

Once the Docker target image is installed, Prometheus detects the container and makes it available for monitoring. One downside, however, is that the Docker target cannot be used to monitor containerized applications themselves. Docker recommends other tools for this purpose.

Prometheus provides a simple Docker dashboard that visualizes the workloads of targeted Docker instances. It creates a separate volume to store recorded metrics.

The Prometheus Query Language (PromQL) can then be used to query metrics and statistics through the dashboard in tabular or graphical form. The integrated HTTP API makes this data available to external systems for seamless monitoring.
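Here is a minimal sketch of querying that HTTP API from Python with only the standard library. It calls the instant-query endpoint /api/v1/query and flattens the returned vector into a dictionary. The server address (localhost:9090, the Prometheus default) and the choice of the "name" label are assumptions about your setup.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PROMETHEUS_URL = "http://localhost:9090"  # assumption: default Prometheus address


def build_query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def parse_vector(payload: dict, label: str) -> dict[str, float]:
    """Map one label (e.g. the container name) to the sample value in a vector result."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    data = payload["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector, got {data['resultType']}")
    # Each sample's "value" is [unix_timestamp, "value as a string"].
    return {s["metric"].get(label, ""): float(s["value"][1]) for s in data["result"]}


def query(promql: str, label: str = "name") -> dict[str, float]:
    """Run an instant query against PROMETHEUS_URL and parse the vector result."""
    with urlopen(build_query_url(PROMETHEUS_URL, promql), timeout=10) as resp:
        return parse_vector(json.load(resp), label)
```

As a usage example, consider a setup where Prometheus scrapes cAdvisor. There, query('sum by (name) (rate(container_cpu_usage_seconds_total[5m]))') would return per-container CPU usage in cores, averaged over the last five minutes. Keeping URL building and parsing separate from the network call makes both easy to test without a live server.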

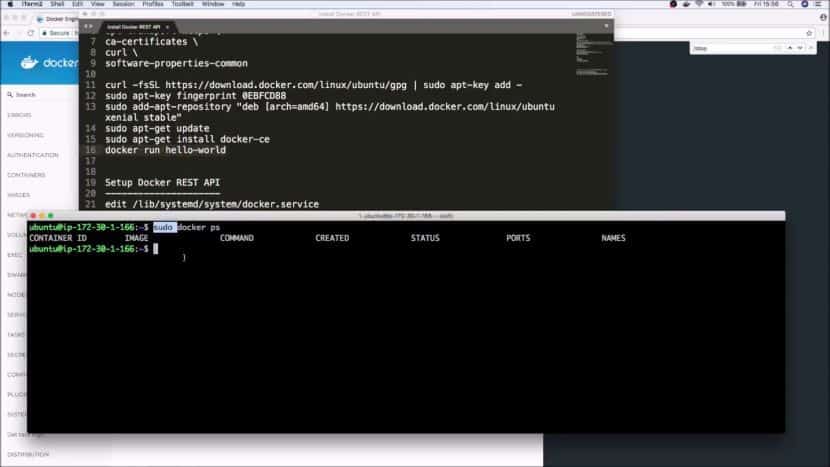

4. Docker API

Docker API is the official HTTP web service API for integrating with Docker. External Docker monitoring tools connect through its secure API endpoints to gather metrics and then store or visualize them.

The Docker API is one of the more technically demanding monitoring solutions for Docker. It is best suited for organizations that have their own applications for monitoring containers from multiple service providers.

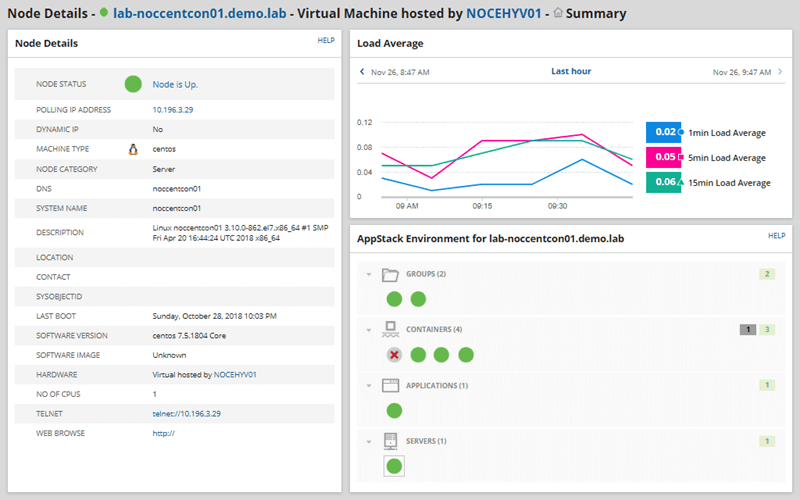

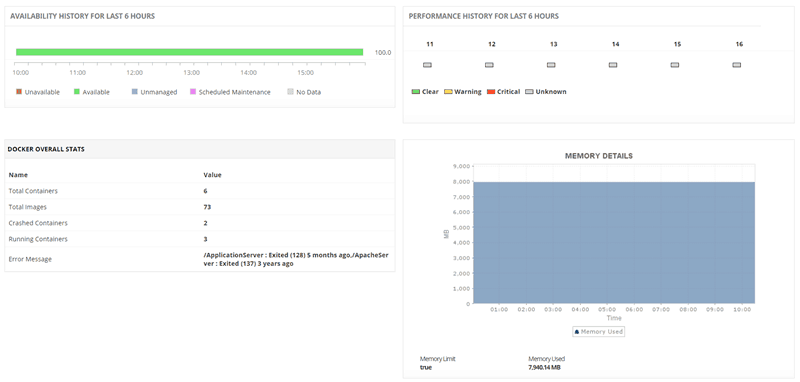

5. ManageEngine Applications Manager

The ManageEngine Applications Manager is a popular server monitoring solution with native support for monitoring Docker hosts. It specializes in tracking both container environments as well as the applications encapsulated within them. Monitoring becomes vital for containers as isolation happens at the kernel level with dynamic resource allocation.

In addition to the usual statistics like CPU, network, and memory, ManageEngine allows monitoring all containers within each host to reduce bottlenecks in performance and availability.

Applications Manager lets you set up pre-configured rules for container status and performance metrics. It uses these triggers to alert users via email or SMS whenever anomalies are detected, so they can resolve issues before they escalate and affect performance.
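Applications Manager's own rule format is not covered here, so this is a generic, hypothetical sketch. It shows how threshold rules on container metrics can be evaluated before notifications such as email or SMS are sent. The Rule class, the metric names, and the thresholds are illustrative assumptions.

```python
import operator
from dataclasses import dataclass

_OPS = {">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le, "==": operator.eq}


@dataclass(frozen=True)
class Rule:
    """A hypothetical alert rule: fire when `metric <op> threshold` is true."""
    metric: str
    op: str
    threshold: float
    severity: str = "warning"


def evaluate(rules: list[Rule], container: str, metrics: dict[str, float]) -> list[str]:
    """Return one alert message per rule that fires for a container's latest metrics."""
    alerts = []
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is None:
            continue  # metric not reported; a real system might alert on missing data
        if _OPS[rule.op](value, rule.threshold):
            alerts.append(f"[{rule.severity}] {container}: {rule.metric}={value} {rule.op} {rule.threshold}")
    return alerts


rules = [
    Rule("cpu_percent", ">", 90.0, "critical"),
    Rule("memory_percent", ">=", 80.0),
    Rule("restart_count", ">", 3),
]
```

For example, evaluate(rules, "web-1", {"cpu_percent": 95.5, "memory_percent": 40.0, "restart_count": 5}) returns two messages, one critical CPU alert and one restart-count warning. These messages could then be handed to whatever notification channel is configured.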

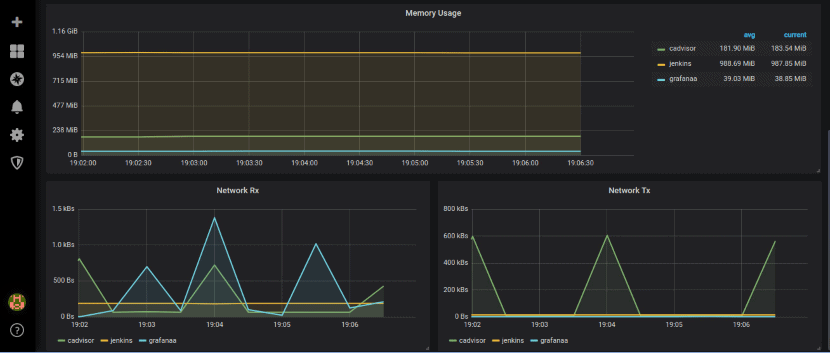

6. cAdvisor

Container Advisor (cAdvisor) from Google is another open-source tool for Docker monitoring. It runs as a daemon that collects, aggregates, and exports resource usage and performance data for targeted containers.

cAdvisor is known for its focus on resource isolation parameters, historical resource usage, and histograms of historical data. This data is kept per container as well as machine-wide, making it easier to analyze past performance and forecast future needs.

Builds of cAdvisor are available as images that you can run on Docker hosts. cAdvisor provides both a web UI and a REST API. This serves users who want to monitor their Docker containers directly as well as those who want to feed metrics into an external application via web service endpoints.
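To illustrate how an external application can consume cAdvisor's metrics, the sketch below parses the Prometheus text format that cAdvisor serves at its /metrics endpoint. It is deliberately simplified: it assumes label values contain no "}" character and it ignores timestamps. The metric container_memory_usage_bytes is used only as an example. For production use, a maintained parser such as prometheus_client's text_string_to_metric_families is a safer choice.

```python
import re

# Simplified matcher for one sample line: name{labels} value [timestamp]
_SAMPLE = re.compile(r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>[^}]*)\})?\s+(?P<value>\S+)')
_LABEL = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def parse_metrics(text: str, metric: str) -> list[tuple[dict[str, str], float]]:
    """Return (labels, value) pairs for every sample of `metric` in a /metrics payload."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = _SAMPLE.match(line)
        if not match or match["name"] != metric:
            continue
        labels = dict(_LABEL.findall(match["labels"] or ""))
        samples.append((labels, float(match["value"])))
    return samples
```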

7. SolarWinds Librato

Librato brings along all the benefits of SolarWinds with more customized features. It provides the ability to monitor a wide range of languages and frameworks through RPC calls, queues, and other sources.

Librato provides native integrations with over 150 cloud solutions, including Docker, making it very suitable for organizations that use multiple services. To this end, Librato takes an API-first approach, which means that all metrics and statistics are available via a secure web API.

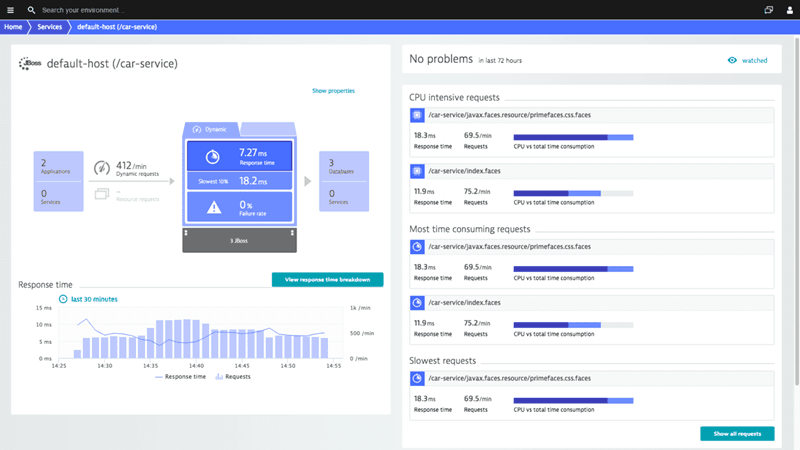

8. Dynatrace

Dynatrace provides an out-of-the-box solution for monitoring containerized applications without having to install any images or modify run commands. It automatically detects new containers and containerized applications as they are created.

Microservice deployments in highly dynamic Docker environments are one of Dynatrace's strong suits. It can track large numbers of Docker containers that are deployed and removed dynamically.
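Dynatrace's detection mechanism is its own. The general technique of noticing containers as they come and go, however, can be sketched with Docker's event stream. The sketch assumes newline-delimited JSON events, as printed by docker events with the {{json .}} format. The Type, Action, and Actor fields come from Docker's event format; the function itself is hypothetical.

```python
import json
from typing import Iterable, Optional


def track_containers(event_lines: Iterable[str], running: Optional[dict[str, str]] = None) -> dict[str, str]:
    """Maintain a {container_id: name} map of running containers from Docker events."""
    running = {} if running is None else running
    for line in event_lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        if event.get("Type") != "container":
            continue  # ignore image, network, volume, and other event types
        actor = event.get("Actor", {})
        container_id = actor.get("ID", "")
        name = actor.get("Attributes", {}).get("name", container_id[:12])
        action = event.get("Action", "")
        if action == "start":
            running[container_id] = name  # a monitor would begin collecting here
        elif action in ("die", "destroy"):
            running.pop(container_id, None)  # container exited or was removed
    return running
```

Because the map is updated from events rather than rebuilt by polling, short-lived containers are picked up as soon as they start. This matters in the highly dynamic environments described above.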

Dynatrace enables extensive tracking and monitoring through log monitoring. This works even when details such as the Docker container name, ID, or host are not available in the raw logs, because each detailed log entry Dynatrace records includes this information.

This setup lets users view virtual log files that are specific to a particular container or application. One benefit of this method is that Dynatrace requires less storage space than many other monitoring tools.
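To illustrate why per-entry metadata matters, here is a hypothetical sketch that enriches lines written by Docker's default json-file logging driver with the container ID and name. It assumes the driver's usual layout: /var/lib/docker/containers/<id>/<id>-json.log, with one JSON object per line containing log, stream, and time fields. It also assumes a separately maintained ID-to-name map. It does not reflect how Dynatrace implements this.

```python
import json
from pathlib import PurePosixPath


def enrich_log_line(log_path: str, raw_line: str, names: dict[str, str]) -> dict:
    """Attach container metadata to one line from a json-file driver log."""
    entry = json.loads(raw_line)
    # json-file logs live at .../containers/<id>/<id>-json.log, so the ID is the parent dir.
    container_id = PurePosixPath(log_path).parent.name
    return {
        "container_id": container_id,
        "container_name": names.get(container_id, "unknown"),  # hypothetical lookup table
        "stream": entry.get("stream"),
        "time": entry.get("time"),
        "message": entry.get("log", "").rstrip("\n"),
    }
```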

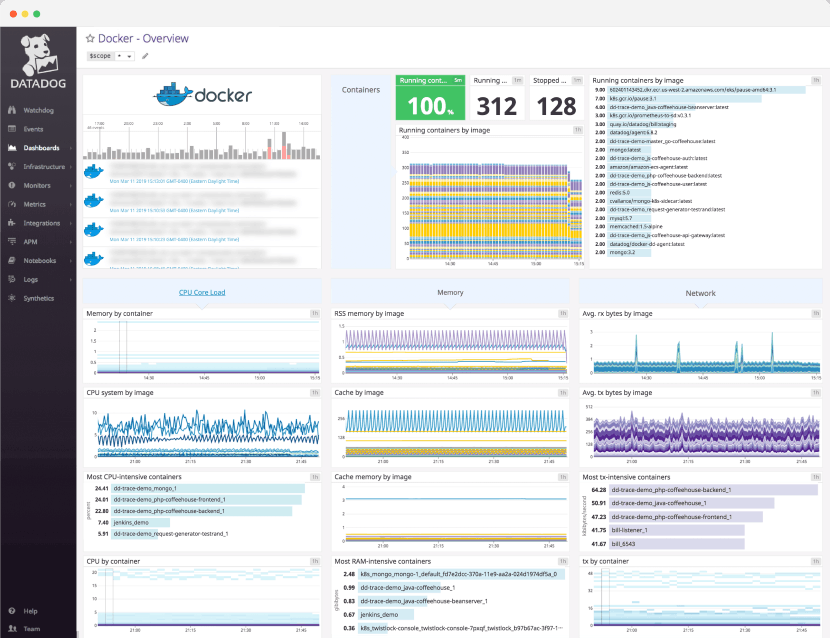

9. Datadog

Datadog is another monitoring tool recommended by Docker. It provides integrations across hundreds of cloud service platforms, which makes it a good fit for organizations that have a mix of containerized applications and cloud solutions.

Monitoring is available across applications through traced requests, which feed graphical visualizations and alerts. Datadog collects data on services, applications, and platforms via detailed log data, then automatically correlates and visualizes it to highlight unusual behavior.

Another distinguishing feature of Datadog is its native, platform-level monitoring. This allows both a holistic view and drill-down to the individual container level. All this data is available through interactive, real-time dashboards.

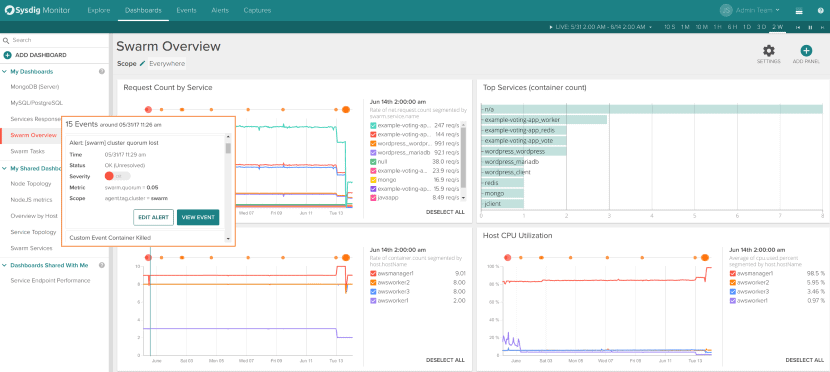

10. Sysdig

Sysdig claims the most in-depth integrations within the Docker ecosystem and tracks data directly from container metadata to enable security and Docker monitoring. Docker recommends Sysdig as a monitoring solution for containerized applications.

One of the most significant advantages of Sysdig is that it monitors containers, cloud services, and Kubernetes. Sysdig's platform builds on the open-source Prometheus monitoring tool and offers it as an enterprise solution with a myriad of additional features.

Topology maps are a top Sysdig feature for monitoring traffic flows, identifying bottlenecks, and understanding dependencies between microservices. Sysdig supports multi-condition alerts on changes in nodes, clusters, and metrics. It can send this data to many incident management and collaboration tools, such as ServiceNow and Slack.

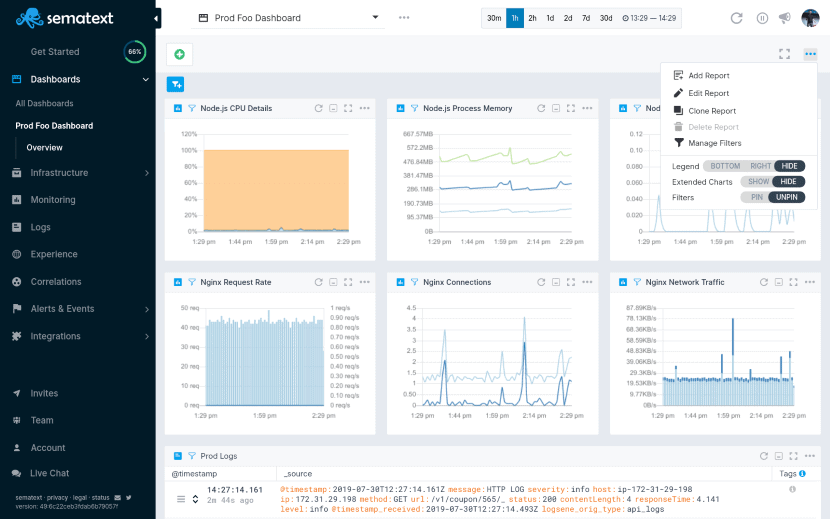

11. Sematext

Sematext Agent for Docker is a monitoring tool that runs as a tiny container and collects data from all hosts and containers. Although the agent is very light, it has to run on each Docker host that needs monitoring.

Logagent tracks and stores logs of all container activity. These logs enable Docker container monitoring with dashboards and alerts. Sematext also tracks many metrics, such as CPU, memory, network, I/O, and memory fail counters.

Logs are structured and well suited for visualizing via the provided dashboard. You get further options like searching and filtering to facilitate troubleshooting.

Sematext can be deployed to all nodes within a swarm with a single command. It also supports the auto-discovery of applications running within containers for effortless monitoring.

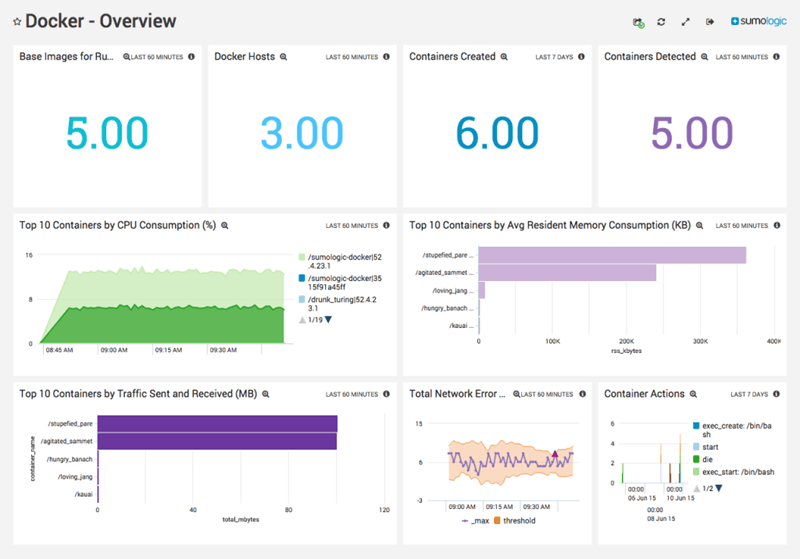

12. Sumo Logic

Sumo Logic provides comprehensive monitoring through a container-aware approach, in contrast to other tools that rely only on log-based monitoring.

It draws on host and daemon logs to provide a comprehensive overview of the targeted Docker environments. It monitors the entire Docker infrastructure via a native collection source.

Sumo Logic is thus able to provide real-time monitoring based on logs and metrics data. The usual bells and whistles like alerts are available out-of-the-box. Sumo Logic uses a container to centrally collect data from each host via the Docker remote API, Inspect API, and daemon logs.

Choosing the Best Docker Container Monitoring Tool

Docker is one of the many ways of deploying containerized applications. You can learn more about other container orchestration tools like Docker in our article about The Best Docker Orchestration Tools for 2020.

The difficulties of monitoring Docker containers stem from how containerized applications are isolated within containers and have resources allocated dynamically.

As container images become increasingly complex with patches and updates, it is crucial to choose Docker monitoring tools that are robust and can be deployed quickly across many thousands of nodes. For more information about which tool is best for you, call us today.


15 Kubernetes Tools For Deployment, Monitoring, Security, & More

Kubernetes, now a mature technology, has leveled the competition, and enterprises across the globe are rapidly adopting a microservices-based, container-driven approach to software delivery. Kubernetes is the industry standard. Industry leaders are helping it grow by developing comprehensive applications and an ecosystem around a Kubernetes core. It is the most popular open-source container orchestration platform because it can support the diverse requirements and constraints an application can create.

We are going to look at 15 of the best Kubernetes tools. These applications will complement K8s and enhance your development work so you can get more from your Kubernetes.

Kubernetes Monitoring Tools

cAdvisor

cAdvisor can auto-detect all containers on a server. It then collects, processes, and exports container information. Its one weakness is limited storage of metrics for long-term monitoring. cAdvisor's container abstraction is based on lmctfy, from which it inherits a nested hierarchical behavior.

Kubernetes Dashboard

Many options are available for troubleshooting. The Dashboard allows monitoring of aggregate CPU and memory usage as well as the health of workloads. Installation is straightforward because ready-made YAML templates are available. Cabin is a mobile version of the Kubernetes Dashboard that provides similar functions for Android and iOS.

Kubelet

Kubelet accepts PodSpecs from the API server, and it can also accept them from other sources. However, it does not manage containers that Kubernetes did not create. Kubelet integrates cAdvisor to collect container resource metrics. Its main benefit is that it allows monitoring of the entire cluster.

Kubernetes Security Tools

The security requirements of containers are unique. They differ from other types of hosting, such as VPS, because there are more layers to secure. These include the container runtime, the orchestrator, and application images. Below are some specialized tools.

Twistlock

Falco

Aqua Security

Aqua performs this task while ensuring isolation between tenants. Isolation refers to both data and access; it scans for multiple security issues. These include known threats, embedded secrets, and malware. It runs other tests for problems in settings and permissions. Aqua Security is compatible with over ten container vendors, and that’s in addition to Kubernetes.

Kubernetes Deployment Tools

Helm

Apollo

Kubespray

Kubernetes CLI Tools

Kubectl

kubectx / kubens

Kube-shell

Kubernetes Serverless Tools

Kubeless

Fission

IronFunction

Final Word On Choosing a Kubernetes Tool

We have looked at five important types of Kubernetes tools. Though this is only a partial list of the open-source tools available for Kubernetes, all of them can make your container management experience more efficient and less stressful.

Kubernetes is continually evolving and community-driven. Thanks to this super-active community, the gaps get quickly filled with extensions, built-ins, add-ons, and bonus plugins, making this container orchestration framework the best choice for running your workloads.

If you are still new to Kubernetes and want to learn more about container management technology or migrating legacy apps, reach out to one of our experts today.


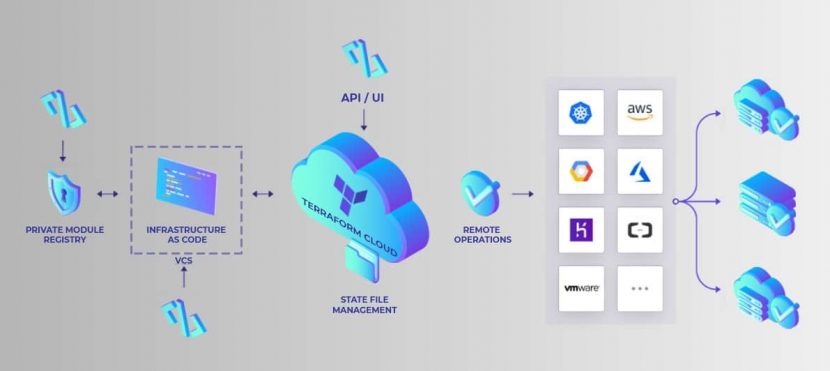

Ansible vs Terraform vs Puppet: Which to Choose?

In the ‘DevOps’ world, organizations are implementing or building processes using Infrastructure as Code (IAC). Ansible, Terraform, and Puppet allow enterprises to scale and create repeatable configurations that test and enforce procedures to continually ensure the right results.

We will examine the differences between these three in more depth to guide you in choosing the platform that works best for your needs. All three are advanced platforms for deploying replicable, repeatable applications with highly complex requirements.

Compare the similarities and differences these applications have in terms of configuration management, architecture, and orchestration and make an informed decision.

Infrastructure as Code

Introduced over a decade ago, the concept of Infrastructure as Code (IAC) refers to the process of managing and provisioning computer data centers. It’s a strategy for managing data center servers, networking infrastructure, and storage. Its purpose is to simplify large-scale management and configuration dramatically.

IAC allows provisioning and managing computer data centers via machine-readable definition files instead of manually configuring tools or physical hardware. In simpler terms, IAC treats manual configurations, build guides, run books, and related procedures as code. Software reads this code and uses it to maintain the state of the infrastructure.

IAC is designed to solve configuration drift, system inconsistencies, human error, and loss of context, all potentially crippling problems. These processes used to take a considerable amount of time, and modern IAC tools make them much faster. IAC eliminates manual configuration steps and makes them repeatable and scalable, so it can provision several hundred servers significantly more quickly. It also gives users a predictable architecture and lets them confidently maintain the configuration or state of the data center.

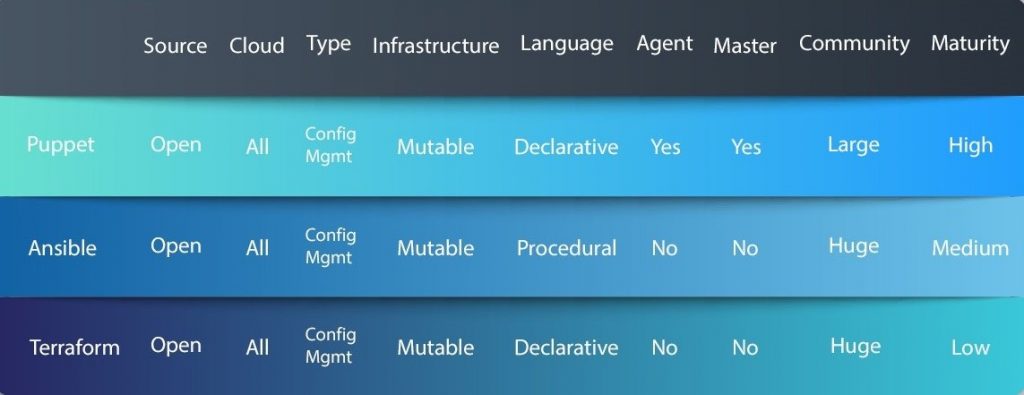

There are several IAC tools to choose from, with three major examples being Ansible, Terraform, and Puppet. All of them have their unique set of strengths and weaknesses, which we’ll explore further.

Short Background on Terraform, Ansible, and Puppet

Before we begin comparing the tools, see a brief description below:

- Terraform (released 2014 – current version 0.12.8): HashiCorp developed Terraform as an infrastructure orchestrator and service provisioner. It is cloud-agnostic and supports many providers, so users can manage multi-cloud or multi-offering environments with the same language and configuration constructs. It uses the HashiCorp Configuration Language (HCL) and is quite user-friendly compared to other tools.

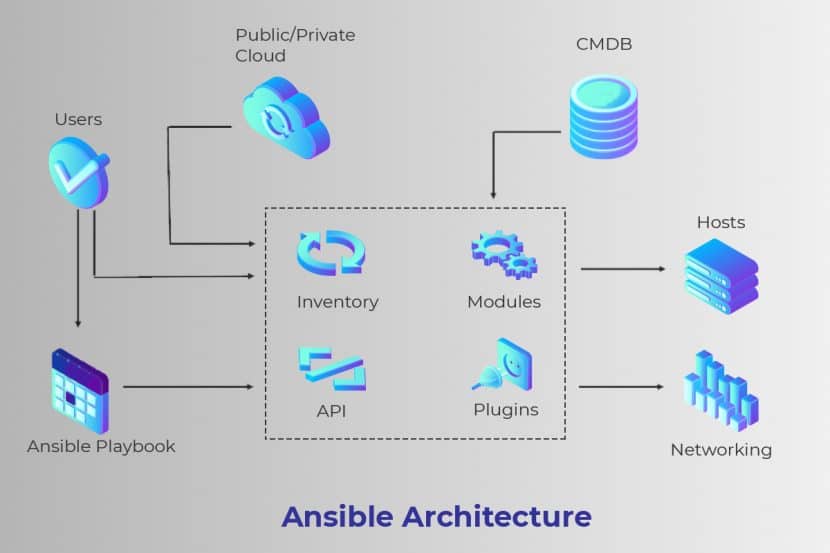

- Ansible (released 2012 – current version 2.8.4): Ansible is a powerful tool for bringing services and servers into the desired state using an assortment of classes and configuration methods. It can also connect to different providers via wrapper modules to configure resources. Users prefer it because it is lightweight to code and deploys quickly.

- Puppet (released 2005 – current version 6.8.0): Puppet is one of the oldest declarative desired-state tools available. It uses a server/client model in which clients refresh their state from a catalog. It uses "hieradata", a robust metadata configuration method. It enforces system configuration by defining infrastructure as code. It is widely used on Windows and Linux to manage multiple application servers simultaneously.

Orchestration vs Configuration Management

Ansible and Terraform have some critical differences, but they share some similarities as well. They differ when we look at two DevOps concepts, orchestration and configuration management, which describe types of tools. Terraform is an orchestration tool, while Ansible is mainly a configuration management (CM) tool. They perform differently but overlap somewhat, since these functions are not mutually exclusive. Each is optimized for different uses and strengths, so choose the one that fits the situation.

Orchestration tools have one goal: to ensure an environment is continuously in its 'desired state.' Terraform is built for this. It stores the state of the environment, and when something drifts from that state, it computes the changes needed and restores the system on the next run. It is ideal for environments that need a constant, invariable state. 'Terraform Apply' is designed to resolve such anomalies efficiently.

Configuration management tools are different: they don't reset a system. Instead, they repair an issue in place. Puppet is designed to install and manage software on servers. Like Puppet, Ansible can configure each action and instrument and ensure it functions correctly without error or damage. A CM tool repairs a problem instead of replacing the system entirely. Ansible is a bit of a hybrid here, since it can also perform orchestration and replace infrastructure. Terraform, however, is more widely used for this purpose and is often considered the stronger product because it has advanced state management capabilities, which Ansible lacks.

The important thing to know is that these features overlap. Most CM tools can do some provisioning, and many provisioning tools can do a bit of configuration management. In reality, different tools are a better fit for certain types of tasks, so the choice comes down to the requirements of your servers.

Procedural vs. Declarative

DevOps tools come in two categories that define their actions: 'declarative' and 'procedural.' Not every tool fits this mold neatly, as there is overlap. A procedural tool needs precise directions: you must lay out the steps in code. A declarative tool lets you 'declare' exactly what end result is needed without outlining the process to achieve it.

In this case, Terraform is wholly declarative. There is a defined environment. If there is any alteration to that environment, it’s rectified on the next ‘Terraform Apply.’ In short, the tool attempts to reach the desired end state, which a sysadmin has described. Puppet also aims to be declarative in this way.

With Terraform, you simply need to describe the desired state, and Terraform will figure out how to get from one state to the next automatically.
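The declarative idea can be illustrated with a toy reconciliation function. You describe only the desired resources, and the function computes the create, update, and delete steps by comparing them with the recorded current state. This is a conceptual sketch with hypothetical resource names, not how Terraform is implemented; Terraform also resolves dependencies and can replace resources rather than update them in place.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> list[tuple[str, str]]:
    """Compute the actions needed to move `current` to `desired` (a toy 'plan')."""
    actions = []
    # Declared but missing: create it.
    for name in sorted(desired.keys() - current.keys()):
        actions.append(("create", name))
    # Present in both but configured differently: update it.
    for name in sorted(desired.keys() & current.keys()):
        if desired[name] != current[name]:
            actions.append(("update", name))
    # Present but no longer declared: delete it.
    for name in sorted(current.keys() - desired.keys()):
        actions.append(("delete", name))
    return actions


desired = {"web": {"size": "t3.small"}, "db": {"size": "t3.large"}}
current = {"web": {"size": "t3.micro"}, "cache": {"size": "t3.micro"}}
print(plan(desired, current))
# → [('create', 'db'), ('update', 'web'), ('delete', 'cache')]
```

Running the plan again once the actions have been applied yields an empty list. This idempotence is what lets a declarative tool be re-run safely to correct drift.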

Ansible, alternatively, is somewhat of a hybrid that can do a bit of both. Its ad-hoc commands implement procedural-style configurations, while most of its modules behave declaratively.

If you decide to use Ansible, read the documentation carefully, so you know its role and understand the behavior to expect. It’s imperative to know if you need to add or subtract resources to obtain the right result or if you need to indicate the resources required explicitly.

Comparing Provisioning

Automating infrastructure provisioning is the first step in automating the entire operational lifecycle of an application and its deployment. In the cloud, software runs on a VM, in a Docker container, or on a bare metal server. Either Terraform or Ansible is a good choice for provisioning such systems. Puppet is the older tool, so we'll take a closer look at the newer DevOps programs for managing multiple servers.

Terraform and Ansible approach the process of provisioning differently, as described below, but there is some overlap.

Provisioning with Terraform:

Some behaviors, such as provisioners, are not represented in Terraform's existing declarative model. Provisioners add a significant amount of uncertainty and complexity to Terraform usage in the following ways:

- Terraform cannot model the actions of provisioners as part of a plan. Using provisioners successfully requires coordinating more details than normal Terraform usage does.

- They require additional measures, such as granting direct network access to the user's servers, installing essential external software, and issuing Terraform credentials for logging in.

Provisioning with Ansible:

Ansible can provision the latest cloud platforms, network devices, bare metal servers, virtualized hosts, and hypervisors reliably.

After bootstrapping is complete, Ansible lets separate teams connect nodes to storage, add them to a load balancer, apply security patches, or perform other operational tasks. This makes Ansible an ideal connecting tool for any process pipeline.

It helps automatically take bare infrastructure all the way through to daily management. Provisioning with Ansible lets users apply one universal, human-readable automation language seamlessly across configuration management, application deployment, and orchestration.

Differences between Ansible and Terraform for AWS

AWS stands for Amazon Web Services, a subsidiary of Amazon, which provides individuals, companies, and business entities on-demand cloud computing platforms. Both Terraform and Ansible treat AWS management quite differently.

Terraform with AWS:

Terraform is an excellent way for users who do not have a lot of virtualization experience to manage AWS. Even though it can feel quite complicated at first, Terraform has drastically reduced the hurdles standing in the way of increasing adoption.

There are several notable advantages when using Terraform with AWS.

- Terraform is open-source, bringing with it all the usual advantages of using open-source software, along with a growing and eager community of users behind it.

- It has an in-built understanding of resource relationships.

- In the event of a failure, only dependent resources are affected. Non-dependent resources, on the other hand, continue to be created, updated, and destroyed.

- Terraform gives users the ability to preview changes before they are applied.

- Terraform comes with JSON support and a user-friendly custom syntax.
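The dependency-aware behavior in the list above can be modeled with a topological sort. Resources are processed only after the ones they depend on, and a failure blocks its dependents while unrelated resources carry on. This sketch uses Python's standard graphlib module (Python 3.9+) with hypothetical resource names and a simulated failure set. Terraform's real graph walker also parallelizes work and handles updates and destroys.

```python
from graphlib import TopologicalSorter


def apply_in_order(deps: dict[str, set[str]], failing: set[str]) -> tuple[list[str], set[str]]:
    """Walk resources in dependency order, skipping only the dependents of failures.

    `deps` maps each resource to the resources it depends on. `failing` simulates
    resources whose creation fails (a hypothetical stand-in for real API errors).
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    created, blocked = [], set()
    while sorter.is_active():
        for node in sorter.get_ready():
            if node in failing or deps.get(node, set()) & blocked:
                blocked.add(node)  # failed itself, or depends on something that was blocked
            else:
                created.append(node)
            sorter.done(node)  # mark finished so its dependents become ready
    return created, blocked


deps = {
    "vpc": set(),
    "subnet": {"vpc"},
    "security_group": {"vpc"},
    "instance": {"subnet", "security_group"},
    "bucket": set(),
}
created, blocked = apply_in_order(deps, failing={"subnet"})
```

With a simulated subnet failure, the VPC, security group, and bucket are still created. Only the subnet and the instance that depends on it are blocked.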

Ansible with AWS:

Ansible has offered significant support for AWS for a long time. This support lets you describe even the most complicated AWS environments using Ansible playbooks. Once described, these environments can be deployed as many times as required, scaling out to hundreds or even thousands of instances across various regions.

Ansible has close to 100 modules that support AWS capabilities, such as Virtual Private Cloud (VPC), Simple Storage Service (S3), Security Token Service, Security Groups, Route 53, Relational Database Service, Lambda, Identity and Access Management (IAM), AMI management, and CloudTrail, to name a few. It also includes over 1,300 additional modules for managing different aspects of Linux, Windows, UNIX, and other systems.

Here are the advantages when using Ansible with AWS.

- With Ansible Tower's cloud inventory synchronization, you can see precisely which AWS instances are registered, no matter how they were launched.

- You can control inventory by accurately tracking deployed infrastructure through its lifecycle, so you can be sure systems are managed properly and security policies are enforced correctly.

- Role-based access controls keep automation safe by ensuring users only have access to the AWS resources they need to do their jobs.

- The same simple playbook language manages infrastructure and deploys applications on a large scale and to different infrastructures easily.

Comparison of Ansible, Puppet, and Terraform

Puppet, Terraform, and Ansible have been around for a considerable period. However, they differ in setup, GUI, CLI, language, usage, and other features.

You can find a detailed comparison between the three below:

| Point of Difference | Ansible | Puppet | Terraform |
| --- | --- | --- | --- |
| Management and Scheduling | In Ansible, instantaneous deployments are possible because the server pushes configurations to the nodes. Scheduling is available in Ansible Tower, the enterprise version, but absent from the free version. | Puppet focuses mainly on push and pull configuration, where the clients pull configurations from the server. Configurations must be written in Puppet's language. For scheduling, Puppet's default settings let it check all nodes to see if they are in the desired state. | In Terraform, resource schedulers work like providers, so Terraform can request resources from them. It is therefore not limited to physical providers such as AWS and can be used in layers: to provision onto a scheduled grid as well as to set up the physical infrastructure that runs the schedulers. |
| Ease of Setup and Use | Ansible is simpler to install and use. It has a master and needs no agents on the client machines; being agentless contributes significantly to its simplicity. Ansible uses YAML syntax and is written in Python, which comes built into most Linux and Unix distributions. | Puppet is more model-driven and meant for system administrators. The Puppet server can be installed on one or more servers, while the Puppet agent must be installed on every node that requires management, making it a client-server (agent-master) model. Installation can take around ten to thirty minutes. | Terraform is also simple to set up and use. It even allows users to run the installer through a proxy server if required. |
| Availability | Ansible has a secondary node in case the active node fails. | Puppet has one or more masters in case the original master fails. | Not applicable. |
| Scalability | Easier to achieve | Less easy to achieve | Comparatively easy to achieve |
| Modules | Ansible's repository or library is called Ansible Galaxy. It does not have separate sorting capabilities and requires manual intervention. | Puppet's repository or library is called Puppet Forge and contains close to 6,000 modules. Modules can be marked as approved or supported by Puppet, saving users considerable time. | In Terraform, modules let users abstract away reusable parts that can be configured once and used everywhere. They group resources and define input and output variables. |
| GUI | Ansible's GUI is less developed, since Ansible was first introduced as a command-line-only tool. Although the enterprise version offers a UI, it still falls short of expectations and suffers from syncing issues with the command line. | Puppet's GUI is superior to Ansible's and can perform many complex tasks. It is used to efficiently manage, view, and monitor activities. | Only third-party GUIs are available for Terraform, for example Codeherent's Terraform GUI. |
| Support | Ansible offers two levels of professional support for its enterprise version. AnsibleFest, a large gathering of users and contributors, is held annually. Its community is smaller than Puppet's. | Puppet has a dedicated support portal and a knowledge base, plus two levels of professional support: Standard and Premium. The Puppet community produces an annual "State of DevOps" report. | Terraform provides direct access to HashiCorp's support channel through a web portal. |

Three Comprehensive Solutions To Consider

Based on the comparisons above, Ansible is well suited to configuring storage and systems in a script-like fashion, compared with the others. Users can work efficiently in short-lived environments. It also works seamlessly with Kubernetes for configuring container hosts.

Puppet is more mature when it comes to community support. Its superior modules make it more of an enterprise-ready solution, and its robust module testing is easy to use. Ansible is suitable for small, temporary, and fast deployments, whereas Puppet is recommended for longer-term or more complex deployments and can manage Docker containers and container orchestrators.

Terraform performs better at managing cloud services below the server level. Ansible is excellent at provisioning software and machines, while Terraform is excellent at managing cloud resources.

All three have their benefits and limitations when designing IaC environments for automation. Success depends on knowing which tool to use for which job.

Find out which platform can best help redefine the delivery of your services. Reach out to one of our experts for a consultation today.


Best Container Orchestration Tools for 2020

Orchestration tools help users manage containerized applications during development, testing, and deployment. They orchestrate the complete application life cycle based on given specifications. There is currently a large variety of container orchestration tools. Many of them are Kubernetes-related, which is unsurprising given how many organizations run Kubernetes in production. Let’s compare some of the top tools available in 2020.

Introduction to Container Orchestration

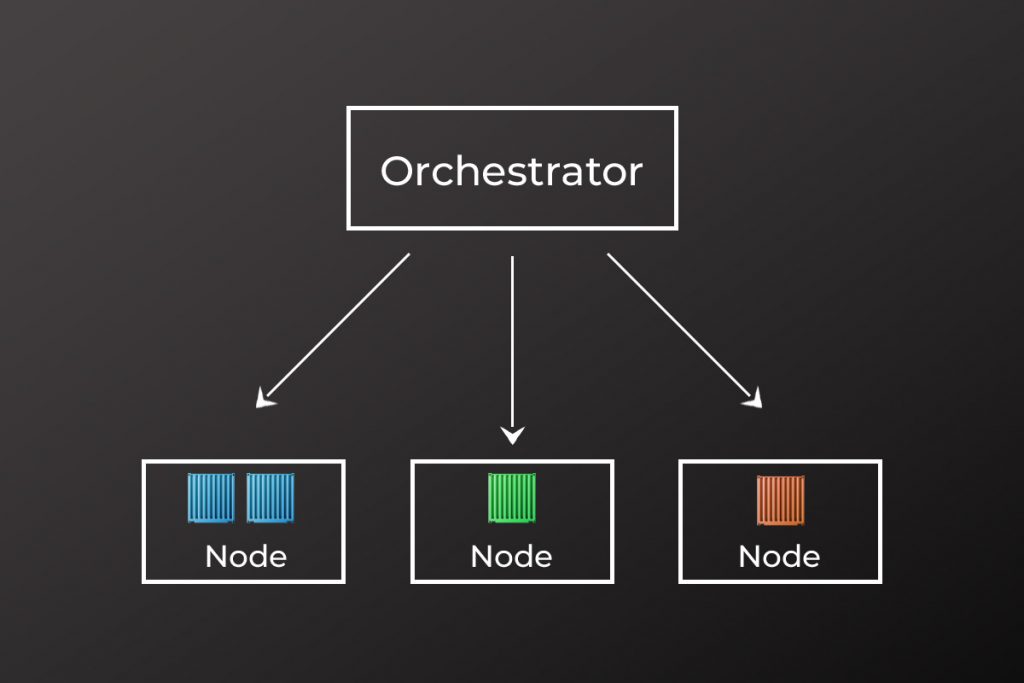

Container orchestration is the process of automating the management of container-based microservice applications across multiple clusters. This concept is becoming increasingly popular within organizations, and a wide array of container orchestration tools has become essential for deploying microservice-based applications.

Modern software development is no longer monolithic. Instead, it produces component-based applications that reside inside multiple containers. These scalable and adjustable containers come together and coordinate to perform a specific function or microservice. They can span many clusters, depending on the complexity of the application and other needs such as load balancing.

Containers package together application code and their dependencies. They obtain the necessary resources from physical or virtual hosts to work efficiently. When complex systems are developed as containers, proper organization and prioritization are required when clustering them for deployment.

That is where container orchestration tools come into play. Their advantages include:

- Better environmental adaptability and portability.

- Effortless deployment and management.

- Higher scalability.

- More stable virtualization of OS resources.

- Constant availability and redundancy.

- Even distribution of application load across the system.

- Improved networking within the application.

Comparing the Top Orchestration Tools

Kubernetes (K8s)

Google initially developed Kubernetes, and it has since become a flagship project of the Cloud Native Computing Foundation. It is an open-source, portable orchestration framework for managing clusters, and it continues to be backed by Google. The design of Kubernetes allows containerized applications to run across multiple clusters for more reliable accessibility and organization.

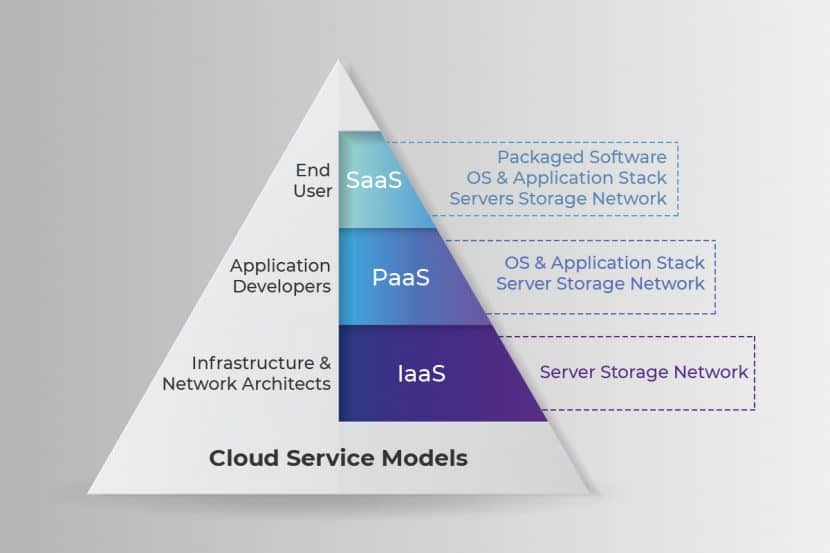

Kubernetes is extremely popular within DevOps circles, partly because vendors such as Docker offer it as part of Platform-as-a-Service (PaaS) or Infrastructure-as-a-Service (IaaS) offerings.

Key Features

- Automated deployment, rollouts, and rollbacks.

- Automatic scalability and controllability.

- Isolation of containers.

- Ability to keep track of service health.

- Service discovery and load balancing.

- It can work as a platform providing services.
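To make the deployment and rollout features above more concrete, here is a minimal sketch of a Kubernetes Deployment manifest built as a Python dictionary and serialized to JSON, which `kubectl apply -f` accepts in addition to YAML. The helper name `make_deployment`, the `nginx:1.25` image, and the `maxSurge`/`maxUnavailable` values are illustrative assumptions, not recommendations.

```python
import json
from typing import Any, Dict


def make_deployment(name: str, image: str, replicas: int = 3, port: int = 80) -> Dict[str, Any]:
    """Build a minimal apps/v1 Deployment manifest as a plain dictionary (illustrative helper)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the pod template below.
            "selector": {"matchLabels": dict(labels)},
            # Replace pods gradually: at most one extra pod, never fewer than `replicas` available.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Save the output as web.json and apply it with: kubectl apply -f web.json
    print(json.dumps(make_deployment("web", "nginx:1.25"), indent=2))
```

Keeping the selector and the pod template labels identical matters: a Deployment only manages pods whose labels match its selector.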

Advantages

- Provides complete enterprise-level container and cluster management services.

- It’s well documented and extensible.

- Workloads can be adjusted without redesigning the application.

- Lower resource costs.

- Flexibility in deploying and managing.

- Enhanced portability due to container isolation.

Many cloud providers use Kubernetes to offer managed solutions, as it’s the current standard among container orchestration tools.

Kubernetes Engine

Google Kubernetes Engine (GKE) is part of the Google Cloud Platform and provides container and cluster management services. It offers all the functionality of Kubernetes, such as deploying, scaling, and managing containerized applications. It is also faster and more efficient to operate, because you do not have to manage individual Kubernetes clusters yourself.

Kubernetes Engine builds on the container technology Google uses to run its own applications, such as Gmail and YouTube. It is associated with productivity, innovation, and resource efficiency.

Key Features

- Support for Kubernetes based container tools like Docker.

- It offers a hybrid networking system where it allocates a range of IP addresses for a cluster.

- It provides powerful scheduling features.

- Uses its own container-optimized OS to manage and control the containers.

- Uses Google Cloud Platform’s control panel to provide integrated logging and monitoring.
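The per-cluster IP allocation mentioned above can be illustrated with Python’s standard `ipaddress` module. This sketch assumes a hypothetical cluster-wide pod range of `10.4.0.0/14` carved into one `/24` per node (a common per-node default in Kubernetes). It does not call any Google Cloud API, and real GKE ranges depend on how the cluster is configured; the function names are hypothetical.

```python
import ipaddress
from itertools import islice
from typing import Iterator


def node_pod_ranges(cluster_cidr: str, node_prefix: int = 24) -> Iterator:
    """Split a cluster-wide pod range into equally sized per-node ranges."""
    cluster = ipaddress.ip_network(cluster_cidr)
    if node_prefix < cluster.prefixlen:
        raise ValueError("per-node range must be smaller than the cluster range")
    return cluster.subnets(new_prefix=node_prefix)


def max_nodes(cluster_cidr: str, node_prefix: int = 24) -> int:
    """Number of nodes that can receive a range before the cluster range runs out."""
    cluster = ipaddress.ip_network(cluster_cidr)
    return 2 ** (node_prefix - cluster.prefixlen)


if __name__ == "__main__":
    cidr = "10.4.0.0/14"  # hypothetical cluster pod range
    print(max_nodes(cidr))  # 1024
    print(list(islice(node_pod_ranges(cidr), 3)))  # first three per-node ranges
```

With these assumed sizes, the cluster range holds 1,024 node ranges of 256 addresses each, so the size of the cluster range effectively caps the number of nodes.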

Advantages

- Automatic scaling, upgrading, and repairing.

- Facilitates container isolation by removing interdependencies.

- Seamlessly load-balanced and scaled.

- Secure with Google’s Network policies.

- Portability between clouds and on-premises environments.

Amazon Elastic Kubernetes Service (EKS)

Amazon EKS is another managed Kubernetes service. It takes over the responsibility of managing, securing, and scaling containerized applications, removing the need to operate your own Kubernetes control plane. EKS clusters can run pods on AWS Fargate, a serverless compute engine for containers, across multiple availability zones. Kubernetes-based applications can be conveniently migrated to Amazon EKS without any code refactoring.

EKS integrates with many open-source Kubernetes tools from the community, as well as with AWS tools such as Route 53, AWS Application Load Balancer, and Auto Scaling.

Key Features

- Facilitates a scalable and highly available control plane.

- Support for distributed infrastructure management in multiple AWS availability zones.

- Service mesh features through AWS App Mesh.

- EKS integrates with many services like Amazon Virtual Private Cloud (VPC), Amazon CloudWatch, Auto Scaling Groups, and AWS Identity and Access Management (IAM).

Advantages

- Eliminates the need to provision and manage servers.

- You can specify the resources per application and pay accordingly.

- More secure with an application isolation design.

- Continuous health monitoring, with upgrades and patching that require no downtime.

- Avoids a single point of failure, as it runs in multiple availability zones.

- Monitoring, traffic control, and load balancing are improved.
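Running in multiple availability zones only removes the single point of failure if replicas are actually spread across them. The sketch below shows the idea with a simple round-robin assignment. The function names and the zone names (`us-east-1a` and so on) are assumptions for illustration; in a real cluster, the Kubernetes scheduler makes this decision, for example through topology spread constraints.

```python
from typing import Dict, List


def spread_replicas(replicas: int, zones: List[str]) -> Dict[str, int]:
    """Assign replicas to zones round-robin so zone counts differ by at most one."""
    if not zones:
        raise ValueError("at least one zone is required")
    counts = {zone: 0 for zone in zones}
    for i in range(replicas):
        counts[zones[i % len(zones)]] += 1
    return counts


def survivors_after_zone_outage(counts: Dict[str, int]) -> int:
    """Replicas still running if the most heavily loaded zone fails."""
    return sum(counts.values()) - max(counts.values())


if __name__ == "__main__":
    placement = spread_replicas(5, ["us-east-1a", "us-east-1b", "us-east-1c"])
    print(placement)  # {'us-east-1a': 2, 'us-east-1b': 2, 'us-east-1c': 1}
    print(survivors_after_zone_outage(placement))  # 3
```

With five replicas across three zones, losing any single zone still leaves at least three replicas running.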

Azure Kubernetes Service (AKS)

AKS provides a managed service for hosted Kubernetes with a continuous integration and continuous delivery approach. It makes deploying and managing serverless Kubernetes convenient, with more dependable security and governance.

AKS provides an agile microservices architecture. It simplifies the deployment and management of systems complex enough for machine learning, and these systems can be easily migrated to the cloud thanks to the portability of their containers and configurations.

Key Features

- Integrated with Visual Studio Code Kubernetes tools, Azure DevOps, and Azure Monitor.

- KEDA for auto-scaling and triggers.

- Access management via Azure Active Directory.

- Enforce rules across multiple clusters with Azure Policy.
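KEDA works by feeding event-source metrics, such as queue length, to the Kubernetes Horizontal Pod Autoscaler. A simplified sketch of the resulting replica calculation, assuming an average-value target of a fixed number of messages per replica, looks like this. The function name and parameters are hypothetical, and the real autoscaler also applies tolerances and stabilization windows that are omitted here.

```python
import math


def replicas_for_queue(queue_length: int, per_replica_target: int,
                       min_replicas: int = 0, max_replicas: int = 10) -> int:
    """Simplified average-value scaling: one replica per `per_replica_target` messages."""
    if per_replica_target <= 0:
        raise ValueError("per_replica_target must be positive")
    desired = math.ceil(queue_length / per_replica_target)
    # Clamp to the configured bounds; min_replicas=0 mimics KEDA's scale-to-zero behavior.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    for backlog in (0, 12, 500):
        print(backlog, replicas_for_queue(backlog, per_replica_target=5))
```

An empty queue scales the workload to zero, a backlog of 12 messages needs three replicas, and a backlog of 500 is capped at the maximum of ten.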

Advantages

- The ability to build, manage, and scale microservice-based applications.

- Simple portability and application migration options.

- Better security and speed when DevOps works together with AKS.

- AKS scales easily by adding pods in Azure Container Instances (ACI).

- Real-time processing of data streams.

- Ability to train machine learning models efficiently in AKS clusters using tools like Kubeflow.

- It provides scalable resources to run IoT solutions.

IBM Cloud Kubernetes Service

This option is a fully managed service designed for the cloud. It supports modern containerized applications and microservices, and it can also build and operate existing applications by incorporating DevOps. Furthermore, it integrates with advanced services like IBM Watson and Blockchain for swift and efficient application delivery.

Key Features

- Ability to containerize existing apps in the cloud and extend them for new features.

- Automatic rollouts and rollbacks.

- Facilitates horizontal scaling by adding more nodes to the pool.

- Containers with customized configuration management.

- Effective logging and monitoring.

- It has improved security and isolation policies.
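Assuming IBM Cloud Kubernetes Service rollouts use the standard Kubernetes Deployment `RollingUpdate` strategy, the sketch below simulates how old and new pods are swapped while respecting `maxSurge` and `maxUnavailable`. It assumes new pods become ready as soon as they are created, which real clusters do not guarantee; the function name is hypothetical.

```python
from typing import List, Tuple


def rolling_update_steps(replicas: int, max_surge: int = 1,
                         max_unavailable: int = 0) -> List[Tuple[int, int]]:
    """Simulate a rolling update as a list of (old_pods, new_pods) snapshots.

    Simplifying assumption: new pods are ready as soon as they are created.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: never exceed replicas + max_surge pods in total.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Scale down: keep at least replicas - max_unavailable pods running.
        old -= min(old, (old + new) - (replicas - max_unavailable))
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    print(rolling_update_steps(3))  # [(3, 0), (2, 1), (1, 2), (0, 3)]
```

A rollback (for example with `kubectl rollout undo`) runs the same procedure in the other direction, scaling the previous ReplicaSet back up.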

Advantages

- Secure and simplified cluster management.

- Service discovery and load balancing capabilities are more stable.

- Elastic scaling and immutable deployment.

- Dynamic provisioning.

- Resilient and self-healing containers.

Amazon Elastic Container Service (ECS)

Amazon ECS is a container orchestration tool that runs applications in a managed cluster of Amazon EC2 instances. ECS powers many Amazon services, such as Amazon.com’s recommendation engine, AWS Batch, and Amazon SageMaker. This track record attests to its security, reliability, and availability, so ECS can be considered suitable for running mission-critical applications.

Key Features

- Similar to EKS, ECS can run tasks on serverless AWS Fargate.

- Run and manage Docker containers.

- Integrates with AWS App Mesh and other AWS services to bring out greater capabilities. For example:

- Amazon Route 53

- Amazon CloudWatch

- AWS Identity and Access Management (IAM)

- AWS Secrets Manager

- Support for third-party Docker image repositories.

- Supports Docker networking through Amazon VPC.
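As an illustration of how ECS runs Docker containers on Fargate, here is a minimal task definition built in Python. The family name, image, and port are placeholders. Fargate requires the `awsvpc` network mode, which gives each task its own network interface in the VPC. Depending on the setup (private registries, `awslogs` logging), an execution role may also be required; this sketch leaves it out.

```python
import json
from typing import Any, Dict


def fargate_task_definition(family: str, image: str, port: int = 80,
                            cpu: str = "256", memory: str = "512") -> Dict[str, Any]:
    """Minimal ECS task definition for a single container on AWS Fargate (placeholder values)."""
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        # Fargate tasks must use awsvpc: each task gets its own VPC network interface.
        "networkMode": "awsvpc",
        # Task-level CPU units and memory (MiB); 256 CPU units with 512 MiB is a valid Fargate size.
        "cpu": cpu,
        "memory": memory,
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                "portMappings": [{"containerPort": port, "protocol": "tcp"}],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(fargate_task_definition("web", "nginx:1.25"), indent=2))
```

Saved to a file such as `task.json`, the output could be registered with `aws ecs register-task-definition --cli-input-json file://task.json`.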

Advantages

- Payment is based on resources per application.

- No need to provision and manage servers.

- Updated resource locations ensure higher availability.

- End-to-end visibility through a service mesh.

- Networking via Amazon VPC ensures container isolation and security.

- Scalability without complexity.

- More effective load balancing.

Azure Service Fabric

Azure Service Fabric (ASF) is a distributed service framework for managing container-based applications or microservices, either in the cloud or on-premises. Its scalable, flexible, data-aware platform delivers low-latency, high-throughput workloads, addressing many challenges of cloud-native applications.

A “run anything anywhere” platform, ASF helps build and manage mission-critical applications. It supports multi-tenant SaaS applications as well as IoT data gathering and processing workloads.

Key Features

- Publishes microservices on different machines and platforms.

- Enables automatic upgrades.

- Self-repairs when scaling nodes in or out.

- Scale automatically by removing or populating nodes.

- Facilitates the ability to have multiple instances of the same service.

- Support for multi-language and frameworks.

Advantages

- Low latency and improved efficiency.

- Automatic upgrades with zero downtime

- Supports stateful and stateless services

- It can be installed to run on multiple platforms.

- Allows more dependable resource balancing and monitoring

- Full application lifecycle management with CI/CD abilities.

- Performs leader election and service discovery automatically.
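Leader election means the replicas of a service agree on which one acts as the leader (in Service Fabric terms, the primary replica). The toy sketch below picks the healthy replica with the lowest ID and re-elects when the leader fails. It is not Service Fabric’s actual algorithm, which the platform handles internally; the replica IDs and function name are hypothetical.

```python
from typing import Dict, Optional


def elect_leader(health: Dict[str, bool]) -> Optional[str]:
    """Return the healthy replica with the lowest ID, or None if no replica is healthy."""
    healthy = sorted(replica for replica, ok in health.items() if ok)
    return healthy[0] if healthy else None


if __name__ == "__main__":
    replicas = {"replica-1": True, "replica-2": True, "replica-3": True}
    print(elect_leader(replicas))  # replica-1
    replicas["replica-1"] = False  # the leader fails...
    print(elect_leader(replicas))  # ...and replica-2 takes over
```

Because every replica applies the same deterministic rule, they agree on the leader as long as they share the same view of health; providing that consistent view is the hard part a real platform solves and this sketch assumes away.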

Docker Platform

Docker’s orchestration tools support the SDLC from development to production, while Docker Swarm takes care of cluster management. The platform provides fast, scalable, and seamless production possibilities for distributed applications and is a proven way to handle Kubernetes and containers.

It enables building and sharing Docker images within teams as well as large communities. The Docker platform is extremely popular among developers: according to a Stack Overflow survey, it ranked as the most “wanted,” “loved,” and “used” platform.

Key Features

- It supports both Windows and Linux OS

- It provides the ability to create Windows applications using the Docker Engine (CS Docker Engine) and Docker Datacenter.

- Containers share the kernel of the host computer’s operating system.

- Supports any infrastructure that supports containers.

- Docker Datacenter facilitates heterogeneous applications for Windows and Linux.

- Docker tools can containerize legacy applications through Windows server containers.

Advantages

- It provides a perfect platform to build, ship, and run distributed systems faster.

- Docker provides a well-equipped DevOps environment for developers, testers, and the deployment team.

- Improved performance with cloud-like flexibility.

- Smaller footprint, as containers share the host’s kernel.

- It provides the ability to migrate applications to the cloud without a hassle.

Helios

Helios is an open-source Docker orchestration platform from Spotify. It enables running containers across many servers. It is also designed to avoid a single point of failure and can handle many HTTP requests at the same time. Helios logs all deploys, restarts, and version changes, and it can be managed through its command-line interface or via an HTTP API.

Key Features

- Fits easily into the way you do DevOps.

- Works with any network topology or operating system.

- It can run on many machines at a time or on a single machine.

- No prescribed service discovery.

- Apache Mesos is not required to run Helios; however, a JVM and ZooKeeper are prerequisites.

Advantages

- Pragmatic

- Works at scale

- No system dependencies beyond a JVM and ZooKeeper

- Avoid single points of failure

How to Choose a Container Orchestration Tool?

We have looked at several orchestration tools you can choose from when deciding what is best for your organization. To decide, be clear about your organization’s requirements and processes; then you can more easily assess the pros and cons of each.

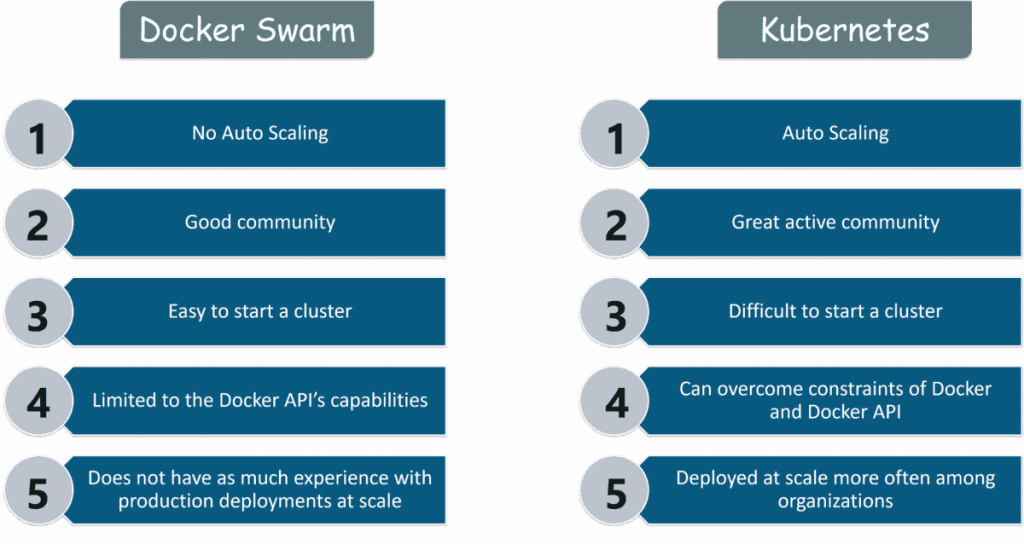

Kubernetes

Kubernetes provides a tremendous amount of functionality and is best suited for enterprise-level container and cluster management. Google, AWS, Azure, Pivotal, and Docker all offer managed Kubernetes platforms, and you have considerable flexibility as the containerized workload scales.

The main drawback is the lack of compatibility with Docker Swarm and Compose CLI manifests. It can also be quite complex to learn and set up. Despite these drawbacks, it’s one of the most sought-after platforms for deploying and managing clusters.

Docker Swarm

Docker Swarm is more suitable for those already familiar with Docker Compose. Simple and straightforward, it requires no additional software. However, unlike Kubernetes and Amazon ECS, Docker Swarm does not have advanced functionality such as built-in logging and monitoring. It is therefore better suited to small organizations that are getting started with containers.

Amazon ECS

If you’re already familiar with AWS, Amazon ECS is an excellent solution for cluster deployment and configuration. It is a fast and convenient way to get started, scales to meet demand, and integrates with several other AWS services. It is also ideal for small teams that do not have many resources to maintain containers.

One of its drawbacks is that it is not suitable for nonstandard deployments. It also uses ECS-specific configuration files, which can make troubleshooting difficult.

Find Out More About Server Orchestration Tools

The software industry is rapidly moving toward containerized applications, and the importance of choosing the right tools to manage them keeps increasing.

Container orchestration platforms offer various features and solutions for the challenges that containers introduce. We have compared and analyzed many differences between container orchestration tools; our “Kubernetes vs. Docker Swarm” and “Kubernetes vs. Mesos” articles are noteworthy among them.

If you want more information about which tools suit your architecture best, book a call with one of our experts today.


What is Container Orchestration? Benefits & How It Works

What is Container Orchestration?

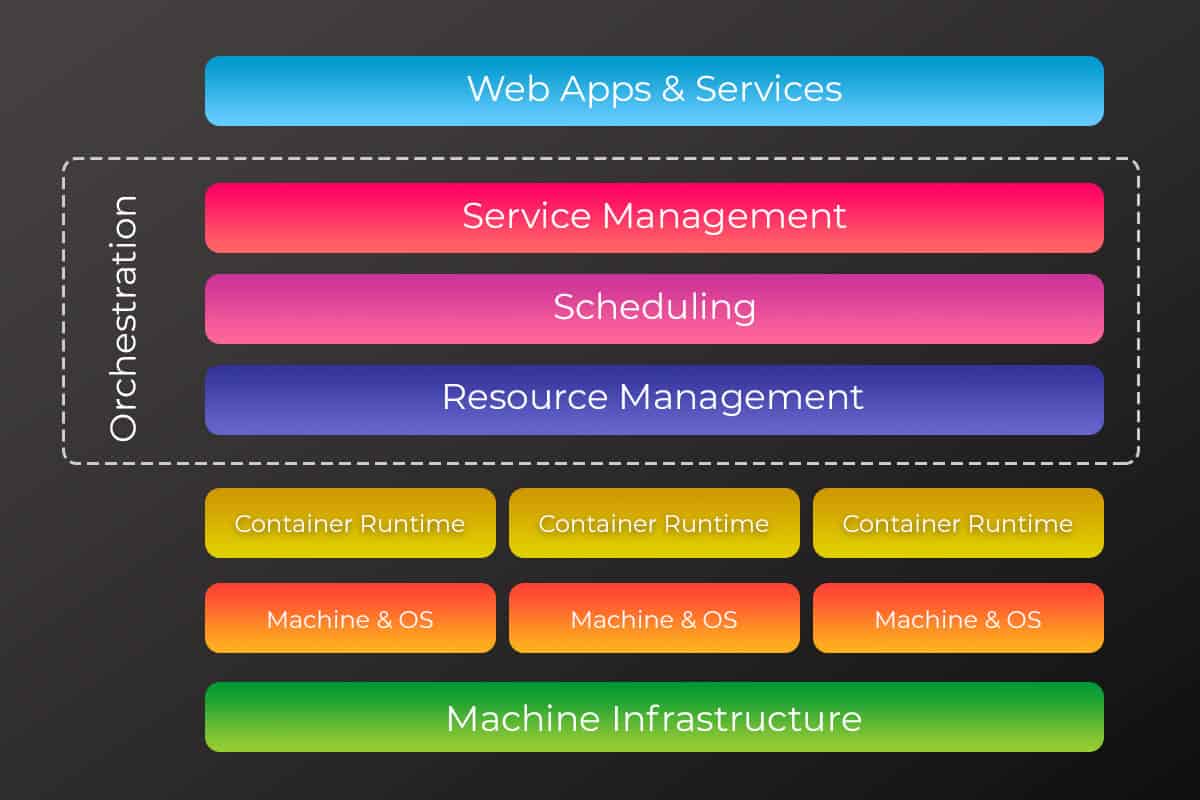

Container orchestration refers to the process of managing the lifecycles of containers in large, dynamic environments. Orchestration tools schedule the workload of individual containers across many clusters for microservice-based applications. Containerization itself is a form of virtualization: it separates and organizes services and applications at the operating-system level. Unlike virtual machines running on a hypervisor, containers are not separated from the rest of the architecture; they share the resources and kernel of the host operating system.

Containerization has emerged as a new way for software organizations to build and maintain complex applications. Organizations that have adopted microservices in their businesses are using container platforms for application management and packaging.

The problem it solves

Scalability is the main operational challenge that container orchestration addresses. The problem begins when there are many containers and services to manage simultaneously, and organizing them becomes complicated and cumbersome. Container orchestration solves that problem by offering practical methods for automating the management, deployment, scaling, networking, and availability of containers.

Microservices use containerization to deliver more scalable and agile applications. Containerization gives companies complete access to a specific set of resources, either in the host’s physical or virtual operating system, which is why containerization platforms have become one of the most sought-after tools for digital transformation.

Software teams in large organizations find container orchestration a highly effective way to control and automate a series of tasks, including:

- Container Provisioning

- Container Deployment

- Container redundancy and availability

- Removing or scaling up containers to spread the load evenly across the host’s system.

- Allocating resources between containers

- Monitoring the health of containers and hosts

- Configuring an application in relation to specific containers which are using them

- Balancing service discovery load between containers

- Assisting in the movement of containers from one host to another if resources are limited or if a host fails

To explain how containerization works, we need to look at the deployment of microservices. Microservices employ containerization to deliver small, single-function modules that work together to produce more scalable and agile applications. This inter-functionality of smaller components (containers) is so advantageous that you do not have to build or deploy a completely new version of your software each time you update or scale a function. It saves time and resources and allows for flexibility that a monolithic architecture cannot provide.

How Does Container Orchestration Work?

There are many container orchestration tools on the market, with Docker Swarm and Kubernetes commanding the largest user bases in the community.

Software teams use container orchestration tools to describe the configuration of their applications. Depending on the orchestration tool, this configuration file is written in JSON or YAML. The file directs the orchestration tool to the location of the container images. It also specifies how to establish networking between containers, which storage volumes to mount, and where to store logs for each container.

Replicated groups of containers are deployed onto hosts. When it’s time to deploy a container into a cluster, the container orchestration tool schedules the deployment and searches for an appropriate host based on constraints such as CPU or memory availability. Containers are placed according to labels, metadata, and their proximity to other hosts.
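The placement step described above is often modeled as filter-then-score. The sketch below keeps only hosts with enough free CPU and memory and the required labels, then prefers the host with the most capacity left over. The `Host` and `ContainerSpec` types and the scoring rule are assumptions for illustration; real schedulers, such as the one in Kubernetes, weigh many more factors, including proximity rules, which are not modeled here.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Host:
    name: str
    free_cpu: float  # CPU cores available
    free_mem: int    # memory available, in MiB
    labels: Dict[str, str] = field(default_factory=dict)


@dataclass
class ContainerSpec:
    cpu: float
    mem: int
    required_labels: Dict[str, str] = field(default_factory=dict)


def pick_host(spec: ContainerSpec, hosts: List[Host]) -> Optional[Host]:
    # Filter: drop hosts that lack resources or the required labels.
    feasible = [
        h for h in hosts
        if h.free_cpu >= spec.cpu
        and h.free_mem >= spec.mem
        and all(h.labels.get(k) == v for k, v in spec.required_labels.items())
    ]
    if not feasible:
        return None
    # Score: prefer the host with the most CPU, then memory, left after placement.
    return max(feasible, key=lambda h: (h.free_cpu - spec.cpu, h.free_mem - spec.mem))


if __name__ == "__main__":
    hosts = [
        Host("host-a", 1.0, 512, {"disk": "ssd"}),
        Host("host-b", 4.0, 8192, {"disk": "hdd"}),
        Host("host-c", 2.0, 4096, {"disk": "ssd"}),
    ]
    print(pick_host(ContainerSpec(0.5, 256, {"disk": "ssd"}), hosts).name)  # host-c
    print(pick_host(ContainerSpec(0.5, 256), hosts).name)  # host-b
```

Preferring the emptiest host spreads load; a scheduler that instead packs containers onto the fullest feasible host would reduce the number of active hosts at the cost of less headroom.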

Once a container is running on the host, the orchestration tool manages its lifecycle, following the specifications laid out by the software team in the container’s definition file. Orchestration tools are increasingly popular due to their versatility: they work in any environment that supports containers, including both traditional on-premises servers and public cloud instances running on services such as Microsoft Azure or Amazon Web Services.
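Managing the lifecycle according to a definition file usually works as a reconciliation loop: the tool repeatedly compares the desired state with what is actually running and acts on the difference. The sketch below shows a single pass of such a loop; the service names, replica counts, and function names are hypothetical, and "starting" or "stopping" containers is only simulated.

```python
from typing import Dict


def reconcile(desired: Dict[str, int], running: Dict[str, int]) -> Dict[str, int]:
    """Return per-service replica changes: positive = start, negative = stop."""
    actions = {}
    for service in sorted(desired.keys() | running.keys()):
        delta = desired.get(service, 0) - running.get(service, 0)
        if delta:
            actions[service] = delta
    return actions


def apply_actions(running: Dict[str, int], actions: Dict[str, int]) -> Dict[str, int]:
    """Simulate starting/stopping containers and return the new running state."""
    updated = dict(running)
    for service, delta in actions.items():
        updated[service] = updated.get(service, 0) + delta
        if updated[service] == 0:
            del updated[service]
    return updated


if __name__ == "__main__":
    desired = {"web": 3, "worker": 2}
    running = {"web": 1, "legacy": 1}  # e.g., after a host failure
    actions = reconcile(desired, running)
    print(actions)  # {'legacy': -1, 'web': 2, 'worker': 2}
    print(apply_actions(running, actions) == desired)  # True
```

Because each pass only looks at the current difference, the same loop handles a fresh deployment, a failed host, and a changed definition file.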

What are containers used for?

Making repetitive tasks and jobs easier to deploy: Containers support one or several similar processes that run in the background, e.g., batch jobs or ETL functions.

Giving enhanced support to the microservices architecture: Microservices and distributed applications are effortlessly deployed and easily isolated or scaled by implementing single container building blocks.

Lifting and shifting: Containers make it possible to ‘lift and shift’ existing applications, that is, to migrate them into modern, upgraded environments.

Creating and developing new container-native apps: This use case brings out most of the benefits of containers, such as refactoring, which is more intensive and beneficial than ‘lift-and-shift’ migration. You can also isolate test environments for new updates to existing applications.

Giving DevOps more support for CI/CD: Container technology allows streamlined building, testing, and deployment from the same container images, helping DevOps teams achieve continuous integration and deployment.

Benefits of Containerized Orchestration Tools

Container orchestration tools, once implemented, can provide many benefits in terms of productivity, security, and portability. Below are the main advantages of containerization.

- Enhances productivity: Container orchestration simplifies installation, decreasing the number of dependency errors.

- Faster and simpler deployments: Container orchestration tools are user-friendly, allowing quick creation of new containerized applications to address increasing traffic.

- Lower overhead: Containers use fewer system resources than hardware virtual machines or traditional environments, because they do not include a full guest operating system.

- Improvement in security: Container orchestration tools allow users to share specific resources safely, without risking security. Web application security is further enhanced by application isolation, which separates each application’s process into separate containers.

- Increase in portability: Container orchestration allows users to scale applications with a single command, scaling only specific functions without affecting the entire application.

- Immutability: Container orchestration can encourage the development of distributed systems, adhering to the principles of immutable infrastructure, which cannot be affected by user modifications.

Container Orchestration Tools: Kubernetes vs. Docker Swarm

Kubernetes and Docker are the two current market leaders in building and managing containers.

When Docker first became available, it became synonymous with containerization. It’s a runtime environment that creates and builds software inside containers. According to Statista, over 50% of IT leaders reported using Docker container technology in their companies last year. Kubernetes is a container orchestrator that supports multiple container runtime environments, such as Docker.

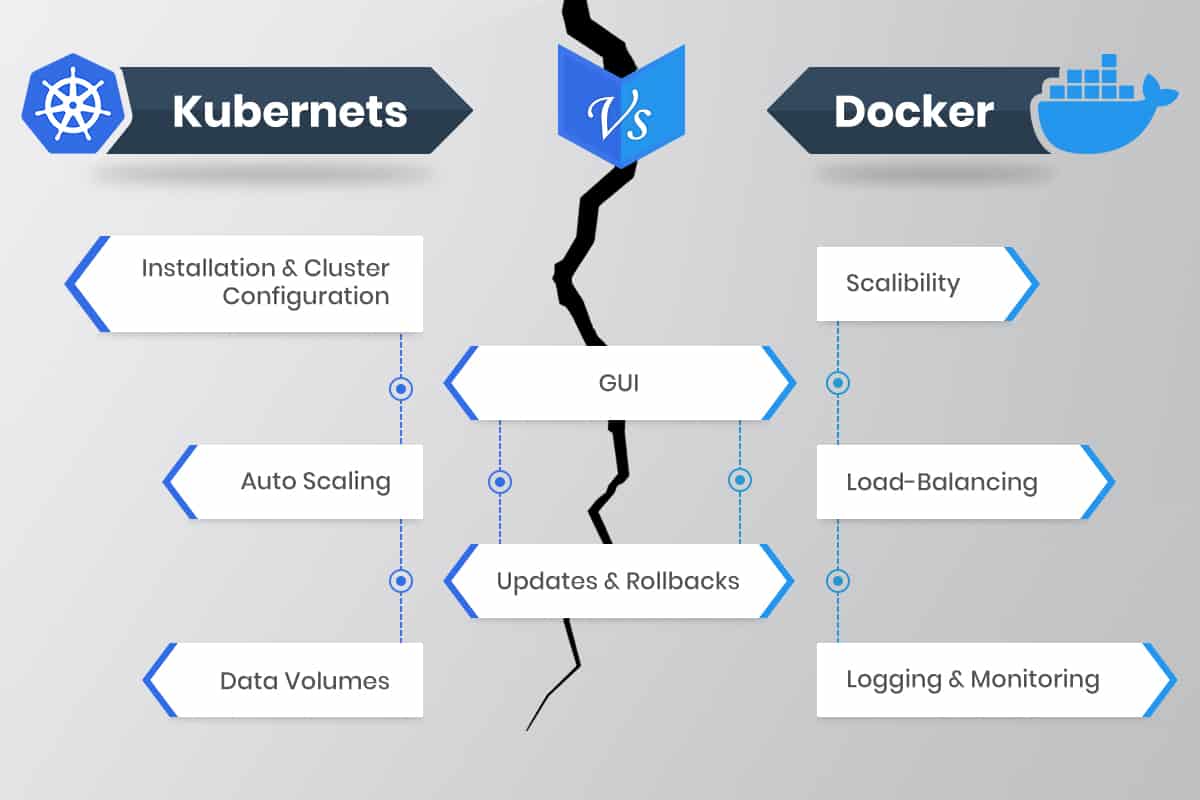

To understand the differences between Kubernetes and Docker Swarm, we should examine them more closely. Each has its own merits and disadvantages, which makes choosing between them difficult. Indeed, the two technologies differ in some fundamental ways, as shown below:

| Points of Difference | Kubernetes | Docker Swarm |
| --- | --- | --- |
| Container Setup | Containers cannot be defined with Docker Compose or the Docker CLI. Kubernetes instead uses its own YAML, client definitions, and API, which differ from their standard Docker equivalents. | The Docker Swarm API offers much of the same functionality as Docker, although it does not recognize all of Docker’s commands. |
| High Availability | Pods are distributed among nodes, offering high availability because the cluster tolerates the failure of an application instance. Health checks detect unhealthy pods, which are then replaced. | Docker Swarm also offers high availability, as services can be replicated across Swarm nodes. The entire cluster is managed by Docker Swarm’s manager nodes, which also handle the resources of worker nodes. |
| Load Balancing | In most instances, an ingress is necessary for load balancing. | A DNS element inside Swarm nodes can distribute incoming requests for a service name. These services can run on ports defined by the user or assigned automatically. |
| Scalability | Because Kubernetes has a comprehensive and complex framework, it provides strong guarantees about a unified set of APIs and the cluster state. This design slows down scaling and deployment. | Docker Swarm deploys containers much faster, allowing faster reaction times when scaling. |
| Application Definition | Applications are deployed in Kubernetes through a combination of services, pods, and deployments. | Applications are deployed as services in a Swarm cluster. Docker Compose helps in installing the application. |
| Networking | Kubernetes has a flat networking model that allows all pods to communicate with each other according to network specifications. It is typically implemented as an overlay network. | When a node joins a Swarm cluster, an overlay network is created. This overlay network spans every host in the Swarm, along with a host-only Docker bridge network. |
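The Swarm load-balancing behavior in the table can be pictured as round-robin distribution over the tasks behind a service name. This is a simplified sketch with made-up task addresses and a hypothetical class name; Swarm’s actual implementation (a virtual IP or DNS round robin, depending on the service’s endpoint mode) lives inside the Docker engine.

```python
from itertools import cycle
from typing import List


class RoundRobinBalancer:
    """Hand out the addresses of a service's tasks in turn."""

    def __init__(self, task_addresses: List[str]):
        if not task_addresses:
            raise ValueError("the service has no running tasks")
        self._next = cycle(list(task_addresses))

    def route(self) -> str:
        return next(self._next)


if __name__ == "__main__":
    lb = RoundRobinBalancer(["10.0.0.2", "10.0.0.3", "10.0.0.4"])
    print([lb.route() for _ in range(4)])  # ['10.0.0.2', '10.0.0.3', '10.0.0.4', '10.0.0.2']
```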

Which Containerization Tool to Use?

Container orchestration tools are still fledgling and constantly evolving technologies. Users should make their decision after looking at a variety of factors such as architecture, flexibility, high availability needs, and learning curve. Besides the two popular tools, Kubernetes and Docker Swarm, there are also a host of other third-party tools and software associated with them both, which allow for continuous deployment.

Kubernetes currently stands as the clear standard for container orchestration. Many cloud service providers, such as Google and Microsoft, have started offering “Kubernetes-as-a-service” options. Yet, if you’re just starting out and running a smaller deployment without much to scale, Docker Swarm is the way to go. Read our in-depth article on the key differences between Kubernetes and Docker Swarm.

To get support with your container development and CI/CD pipeline, or find out how advanced container orchestration can enhance your microservices, connect with one of our experts to explore your options today.


Kubernetes vs OpenShift: Key Differences Compared

With serverless computing and containers at the forefront, demand for container technology has risen considerably. Container management platforms such as Kubernetes and OpenShift may be well known, though possibly not as well understood.

Both Kubernetes and OpenShift have a modern, future-proof architecture that is also robust and scalable. Because of these similarities, choosing between the two platforms can be difficult. In this article, we compare Kubernetes and OpenShift in detail and examine the fundamental differences and unique benefits of each.

What is Kubernetes?

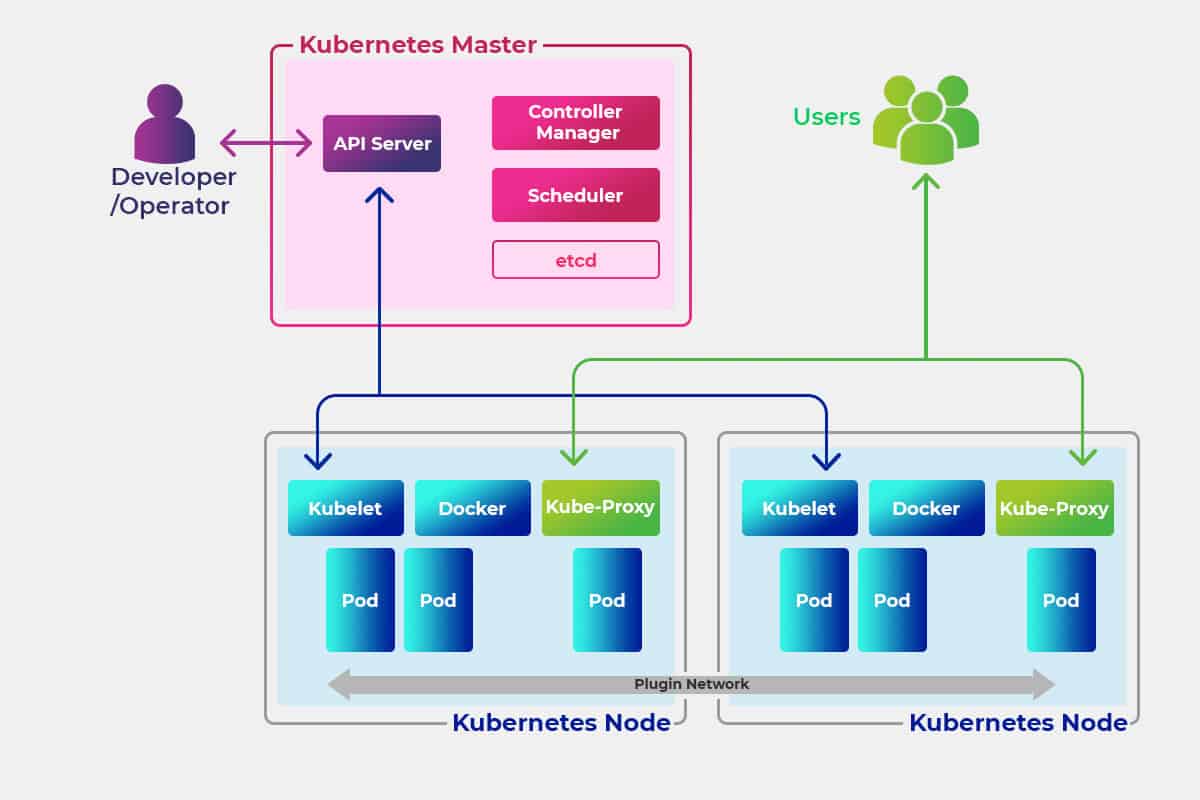

Kubernetes is an open-source container-as-a-service (CaaS) platform that automates deploying, scaling, and managing containerized apps to speed up development. Originally developed at Google, it was later handed over to the Cloud Native Computing Foundation under the Linux Foundation.

Many cloud services offer a variant of a Kubernetes-based platform or infrastructure as a service. Here, Kubernetes can be deployed as a platform-providing service, with many vendors providing their own branded distributions of Kubernetes.

Key Features of Kubernetes

- Storage Orchestration: Allows Kubernetes to integrate with most storage systems, such as Amazon Elastic Block Store (EBS).

- Container Balancing: Enables Kubernetes to calculate the best location for a container automatically.

- Scalability: Kubernetes allows horizontal scaling, so organizations can scale out their workloads depending on their requirements.

- Flexibility: Kubernetes can be run in multiple environments, including on-premises, public, or hybrid cloud infrastructures.

- Self-Monitoring: Kubernetes provides monitoring capabilities to help check the health of servers and containers.

Why Choose Kubernetes?

A significant part of the industry prefers Kubernetes due to the following reasons:

- Strong Application Support: Kubernetes supports a broad spectrum of programming frameworks and languages, which enables it to satisfy a variety of use cases.

- Mature Architecture: The architecture of Kubernetes is preferred because of its association with Google’s engineers, who have worked on the product for almost ten years.

- Developmental Support: Because Kubernetes has a large and active online user community, new features get added frequently. Additionally, the user community also provides technical support that encourages collaborations.

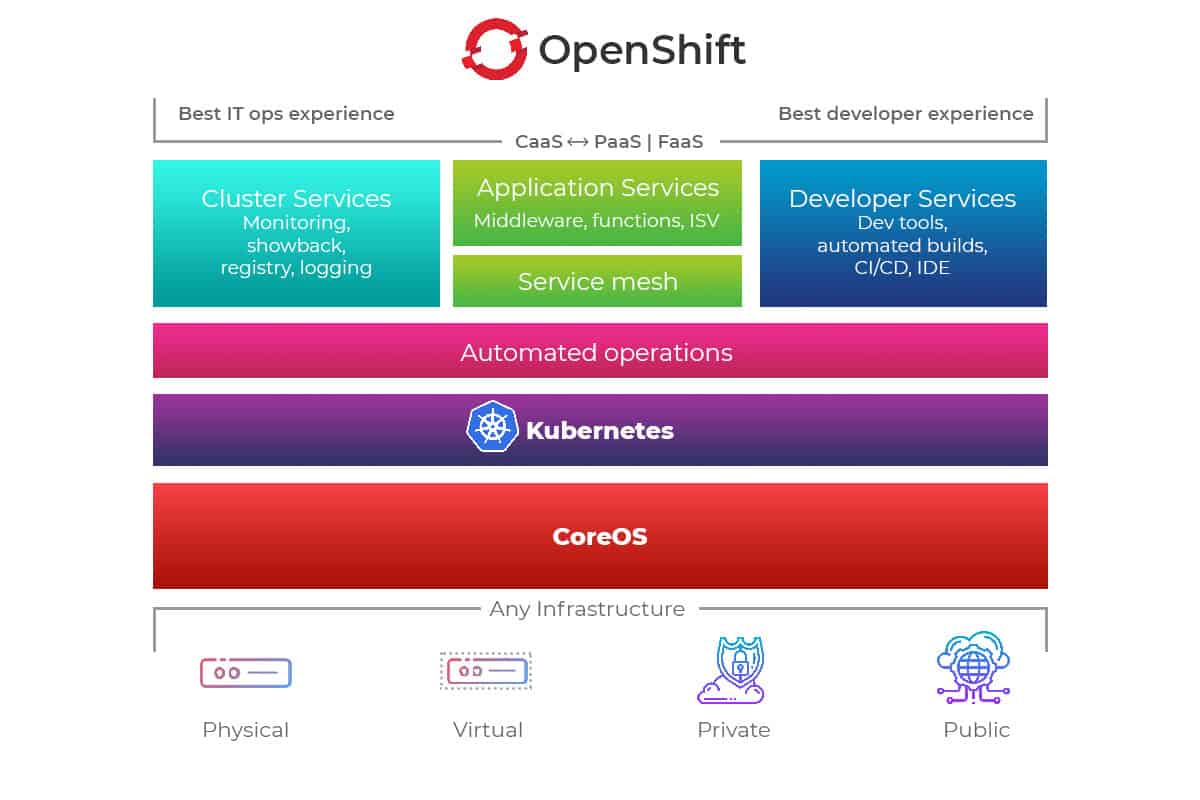

What is OpenShift?

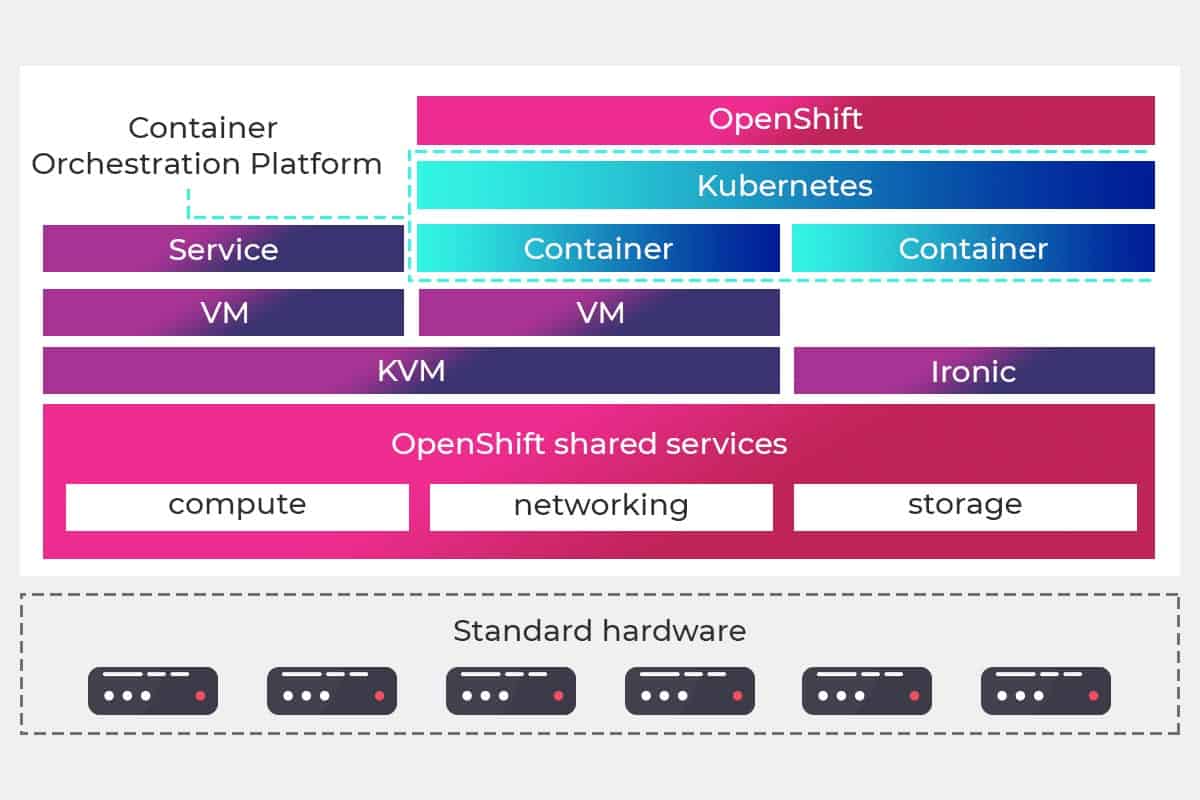

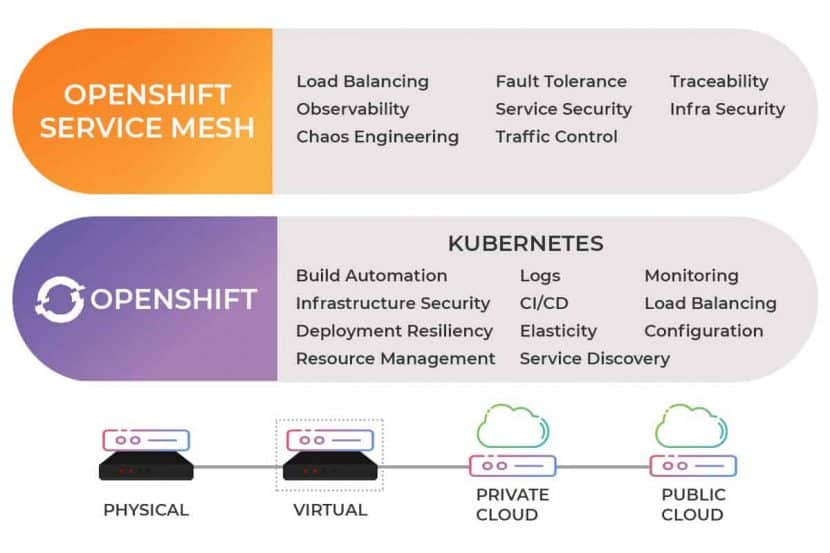

OpenShift is also a containerization software solution, developed by Red Hat and released under the Apache License. Its original product is the OpenShift Container Platform, a Platform-as-a-Service (PaaS) built on Kubernetes. Termed ‘Enterprise Kubernetes,’ it is written in a combination of Go and AngularJS. Its primary function is to let developers develop and deploy apps directly on the cloud, and it adds tools on top of a Kubernetes core to speed up the process.

A substantial change came with the introduction of OpenShift V3 (released in 2015). Before this version, custom-developed technologies were used for container orchestration. With V3, OpenShift adopted Docker as its primary container technology and Kubernetes as its primary container orchestration technology.

OpenShift comes with a set of products, such as OpenShift Container Platform, OpenShift Dedicated, Red Hat OpenShift Online, and OpenShift Origin.

Key Features of OpenShift

- Compatibility: As part of the certified Kubernetes program, OpenShift has compatibility with Kubernetes container workloads.

- Constant Security: OpenShift has security checks that are built into the container stack.

- Centralized policy management: OpenShift has a single console across clusters. This control panel provides users with a centralized place to implement policies.

- Built-in Monitoring: OpenShift comes with Prometheus, an open-source monitoring system and time-series database. Users can visualize their applications in real time using Grafana dashboards.
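Prometheus exposes an HTTP API, and its standard `up` metric is 1 for targets it can scrape and 0 for those it cannot. The sketch below builds an instant-query URL and extracts the down targets from a response in the API’s JSON shape. The host `prometheus.example:9090`, the function names, and the sample response are hypothetical, and on OpenShift access to the built-in Prometheus typically requires authentication, which is not shown here.

```python
from typing import Any, Dict, List
from urllib.parse import urlencode


def instant_query_url(base_url: str, promql: str) -> str:
    """Build a Prometheus instant-query URL (GET /api/v1/query)."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def down_targets(response: Dict[str, Any]) -> List[str]:
    """Return the instances whose `up` sample is 0 in an instant-query response."""
    if response.get("status") != "success":
        raise RuntimeError(f"query failed: {response.get('error', 'unknown error')}")
    return sorted(
        sample["metric"].get("instance", "<unknown>")
        for sample in response["data"]["result"]
        # Each sample's value is [unix_timestamp, "value-as-string"].
        if float(sample["value"][1]) == 0
    )


if __name__ == "__main__":
    print(instant_query_url("http://prometheus.example:9090/", "up"))
    sample_response = {
        "status": "success",
        "data": {
            "resultType": "vector",
            "result": [
                {"metric": {"__name__": "up", "instance": "node-a:9100"}, "value": [1700000000, "1"]},
                {"metric": {"__name__": "up", "instance": "node-b:9100"}, "value": [1700000000, "0"]},
            ],
        },
    }
    print(down_targets(sample_response))  # ['node-b:9100']
```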

Why Choose OpenShift?

Popular reasons users prefer OpenShift are highlighted below:

- Self-service Provisioning: OpenShift lets users integrate the tools they use most. For instance, a video game developer can use OpenShift while developing games.

- Faster Application Development: OpenShift can streamline and automate the entire container management process, which in turn enhances the DevOps process.

- No vendor lock-in: Provides a vendor-agnostic open-source platform, allowing users to migrate their own container processes to other operating systems as required without taking any extra steps.

What is the difference Between OpenShift and Kubernetes?

OpenShift and Kubernetes share many foundational and functional similarities, since OpenShift is intentionally based on Kubernetes. Yet there are fundamental technical differences, explained in the table below.

| Points of Difference | Kubernetes | OpenShift |

| Programming Language Used | Go | AngularJS and Go |

| Release Year | 2014 | 2011 |

| Developed by | Cloud-Native Computing Foundation | Red Hat |

| Origin | It was released as an open-source framework or project, and not as a product | It is a product, but with many variations. For example, open-source OpenShift is not a project and rather an OKD. |

| Base | Kubernetes is flexible when it comes to running on different operating systems. However, RPM is the preferred package manager, which is a Linux distribution. It is preferred that Kubernetes be run on Ubuntu, Fedora, and Debian. This setup allows it to run on major LaaS platforms like AWS, GCP, and Azure. | OpenShift, on the other hand, can be installed on the Red Hat Enterprise Linux or RHEL, as well as the Red Hat Enterprise Linux Atomic Host. Thus, it can also run on CentOS and Fedora. |

| Web UI | The dashboard inside Kubernetes requires separate installation and can be accessed only through the Kube proxy for forwarding a port of the user’s local machine to the cluster admin’s server. Since it lacks a login page, users need to create a bearer token for authorization and authentication manually. All this makes the Web UI complicated and not suited for daily administrative work. | OpenShift comes with a login page, which can be easily accessed. It provides users with the ability to create and change resources using a form. Users can thus visualize servers, cluster roles, and even projects, using the web. |

| Networking | It does not include a native networking solution and only offers an interface that can be used by network plugins made by third parties. | IT includes a native networking solution called Open Switch, which provides three different plugins. |

| Rollout | Kubernetes provides a myriad of solutions to create Kubernetes clusters. Users can use installers such as Rancher Kubernetes Everywhere or Kops. | OpenShift does not require any additional components after the rollout. It thus comes with a proprietary Ansible based installer, with the capabilities of installing OpenShift with the minimum configuration parameters. |

| Integrated Image Registry | Kubernetes does not have any concept of integrated image registries. Users can set up their own Docker registry. | OpenShift includes their image registry, which can be used with Red Hat or DockerHub. It also allows users to search for information regarding images and image streams related to projects, via a registry console. |

| Key Cloud Platform Availability | It is available on EKS for Amazon AWS, AKS for Microsoft Azure, and GKE for Google GCP. | Has a product known as OpenShift Online, OpenShift Dedicated, as well as OpenShift on Azure. |

| CI/CD | Possible with Jenkins but is not integrated within it. | Seamless integration with Jenkins is available. |

| Updates | Supports many concurrent updates simultaneously | Does not support concurrent updates |

| Learning Curve | It has a complicated web console, which makes it difficult for novices. | It has a very user-friendly web console ideal for novices. |

| Security and authentication | Does not have a well-defined security protocol | Has secure policies and stricter security models |

| Who Uses it | HCA Healthcare, BMW, Intermountain Healthcare, ThoughtWorks, Deutsche Bank, Optus, Worldpay Inc, etc. | NAV, Nokia, IBM, Phillips, AppDirect, Spotify, Anti Financial, China Unicom, Amadeus, Bose, eBay, Comcast, etc. |

Despite these differences, the two platforms have much in common. Kubernetes and OpenShift are both open-source software platforms that facilitate application development via container orchestration, and both make managing and deploying containerized apps easy. OpenShift adds a web console that lets users perform most tasks directly in it.

Both facilitate faster application development. OpenShift has a slight advantage when it comes to installation, since it packages Kubernetes together with its own installer. Kubernetes, despite being the more advanced option, lacks a single standard installation strategy; installing it typically requires a managed Kubernetes service or a turnkey solution.

OpenShift has also introduced many built-in components and out-of-the-box features to make containerization faster.

Making the Decision: Kubernetes or OpenShift?

Which one you decide to use will come down to the requirements of your system and the application you’re building.

The key question in the Kubernetes vs. OpenShift debate is which features take precedence: flexibility, or an excellent web interface for the development process? Also weigh your team’s IT experience, infrastructure, and expertise in handling the entire development lifecycle of the application.

Want more information? Connect with us, and allow us to assist you in containerizing your development processes.


Kubernetes vs OpenStack: How Do They Stack Up?

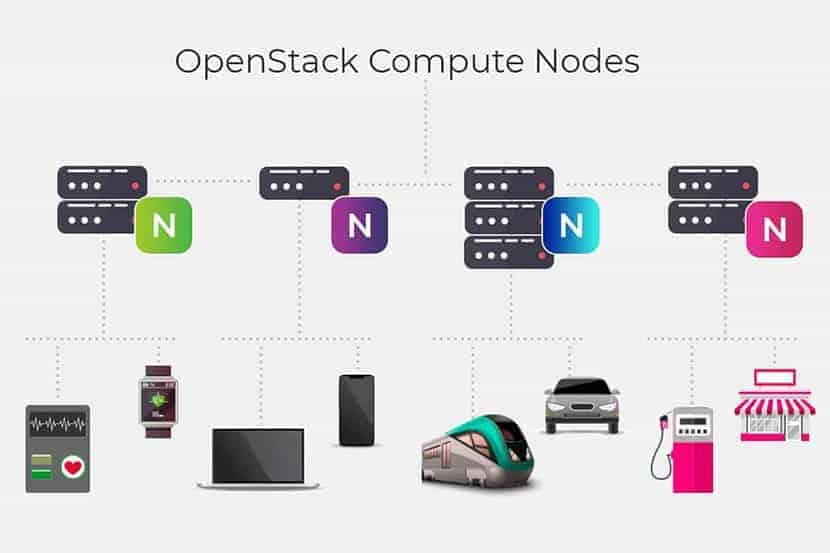

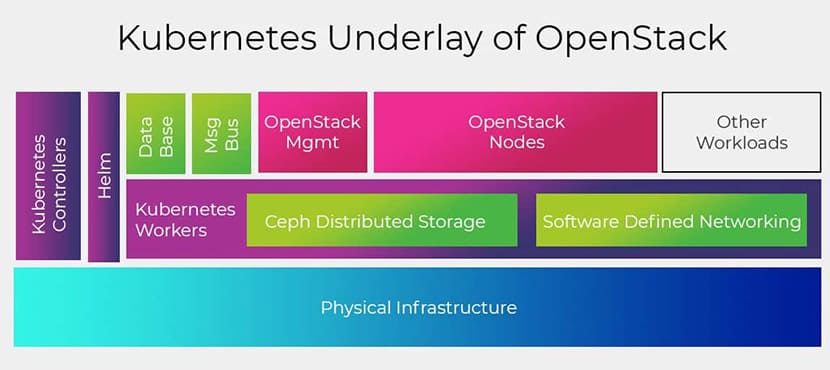

Cloud interoperability keeps evolving alongside its platforms. Kubernetes and OpenStack are not merely direct competitors; they can now also be combined to create cloud-native applications. Kubernetes is the most widely used container orchestration tool for managing Linux containers. It deploys, maintains, and schedules applications efficiently. OpenStack is powerful software that lets businesses run their own Infrastructure-as-a-Service (IaaS) clouds.

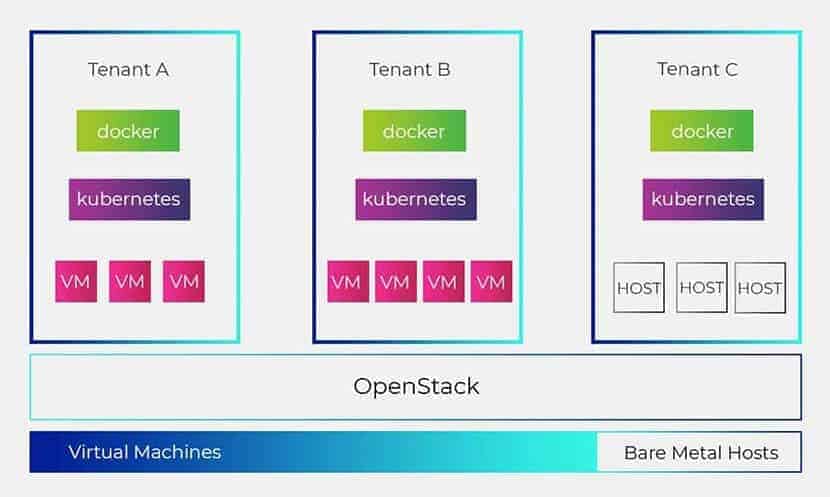

Kubernetes and OpenStack have been regarded as competitors, but in actuality, these two open-source technologies can be combined and are complementary to one another. They offer solutions to relatively similar problems, but on different layers of the stack. Combining Kubernetes and OpenStack can give you noticeably enhanced scalability and automation.

It’s now possible for Kubernetes to deploy and manage applications on an OpenStack cloud infrastructure. As a cloud orchestration tool, OpenStack lets you run Kubernetes clusters on top of white-label hardware more efficiently. Containers can be aligned with this open infrastructure, which enables them to share resources such as networking and storage in rich environments.

Difference between OpenStack and Kubernetes

Despite being complementary, Kubernetes and OpenStack still compete for users, since some of their features overlap. Each has its own merits and use cases, which is why it’s worth taking a closer look at both options to determine their differences and find out which technology, or combination, is best for your business.

To present a more precise comparison between the two technologies, let’s start with the basics.

What is Kubernetes?

Kubernetes is an open-source platform for managing containerized workloads and services. It is used to manage clusters of containerized applications, a process often referred to in computing as orchestration.

The analogy with a music orchestra is, in many ways, fitting. Much as a conductor would, Kubernetes coordinates multiple microservices that together form a useful application. It automatically and continuously monitors the cluster and adjusts its components. Kubernetes architecture offers a mix of portability, extensibility, and functionality, facilitating both declarative configuration and automation. It handles scheduling by placing workloads on the nodes of a compute cluster. Kubernetes also actively manages workloads, ensuring that their actual state matches the desired state set by the user.

Kubernetes has a modular design in which all its components are swappable. It is built to run on multiple clouds, whether public, private, or a combination of the two. Developers tend to prefer Kubernetes for its lightweight, simple, and accessible nature. It operates using a straightforward model: we declare how we would like our system to function, and Kubernetes compares that desired state to the current state of the cluster. Its control processes then work to align the two, achieving and maintaining the desired state.
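The desired-state model can be illustrated with a toy control loop. This Python sketch is hypothetical and greatly simplified: a dictionary stands in for the cluster, and a single reconcile function compares desired replica counts with running ones and reports the actions needed to close the gap. Real Kubernetes controllers watch the API server and act on resources such as Deployments, but the compare-and-converge idea is the same.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for a cluster: maps an app name to its running replica count."""
    running: dict[str, int] = field(default_factory=dict)


def reconcile(desired: dict[str, int], cluster: Cluster) -> list[str]:
    """One pass of a hypothetical desired-state control loop.

    Compares desired replica counts with what is running, converges the
    cluster to the desired state, and returns the actions it took.
    """
    actions = []
    for app, want in desired.items():
        have = cluster.running.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} x {app}")
        elif have > want:
            actions.append(f"stop {have - want} x {app}")
        cluster.running[app] = want
    # Anything still running that is no longer desired gets removed.
    for app in list(cluster.running):
        if app not in desired:
            actions.append(f"stop {cluster.running.pop(app)} x {app}")
    return actions


cluster = Cluster(running={"web": 1, "legacy": 2})
desired = {"web": 3, "api": 2}
print(reconcile(desired, cluster))  # ['start 2 x web', 'start 2 x api', 'stop 2 x legacy']
print(reconcile(desired, cluster))  # []
```

The second call returns no actions: once the current state matches the desired state, the loop has nothing left to do, which is exactly the behavior the declarative model relies on.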

How is Kubernetes Employed?

Kubernetes is arguably one of the most popular tools for getting the most value out of containers. Its features make it well suited to automating the deployment, scaling, and operation of containerized applications.

Kubernetes is not only an orchestration system; it is a set of independent, interconnected control processes that continuously drive the current state toward the desired state. Kubernetes is ideal for service consumers, such as developers working in enterprise environments, as it supports programmable, agile, and rapidly deployable environments.

Kubernetes is used for several different reasons:

- High Availability: Kubernetes includes several high-availability features such as multi-master and cluster federation. The cluster federation feature allows clusters to be linked together. This setup exists so that containers can automatically move to another cluster if one fails or goes down.

- Heterogeneous Clusters: Kubernetes can run on heterogeneous clusters, allowing users to build clusters from a mix of machines, such as virtual machines (VMs) in the cloud and on-premises servers, according to their requirements.

- Persistent Storage: Kubernetes has extended support for persistent storage, which can be attached to otherwise stateless application containers.

- Built-in Service Discovery and Auto-Scaling: Kubernetes supports service discovery out of the box, using environment variables and DNS. For better resource utilization, users can also configure CPU-based auto-scaling for containers.

- Resource Bin Packing: Users can declare the minimum and maximum compute resources (CPU and memory) for their containers. Kubernetes slots the containers in wherever they fit, which increases compute efficiency and lowers costs.

- Container Deployments and Rollout Controls: The Deployment feature allows users to describe their containers and specify the required quantity. It keeps those containers running and also handles deploying changes. This enables users to pause, resume, and roll back changes as needed.
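CPU-based auto-scaling is handled by the Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below applies that rule with the default tolerance of 0.1 and clamps the result to min/max bounds. It is a simplification that ignores pod readiness, missing metrics, and stabilization windows, and the function name and parameters are our own, not a Kubernetes API.

```python
import math


def desired_replicas(current_replicas: int, current_cpu: float, target_cpu: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current_replicas * current_cpu / target_cpu).

    current_cpu and target_cpu must use the same unit (e.g. millicores per pod).
    """
    ratio = current_cpu / target_cpu
    # If usage is within the tolerance band around the target, do not scale.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Keep the result within the configured replica bounds.
    return max(min_replicas, min(max_replicas, proposed))


print(desired_replicas(4, current_cpu=200, target_cpu=100))  # 8 (usage doubled)
print(desired_replicas(4, current_cpu=50, target_cpu=100))   # 2 (usage halved)
print(desired_replicas(4, current_cpu=105, target_cpu=100))  # 4 (within tolerance)
print(desired_replicas(4, current_cpu=400, target_cpu=100))  # 10 (capped at max)
```

Rounding up with ceil errs on the side of extra capacity, and the tolerance band keeps small metric fluctuations from causing constant scaling.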

What is OpenStack?

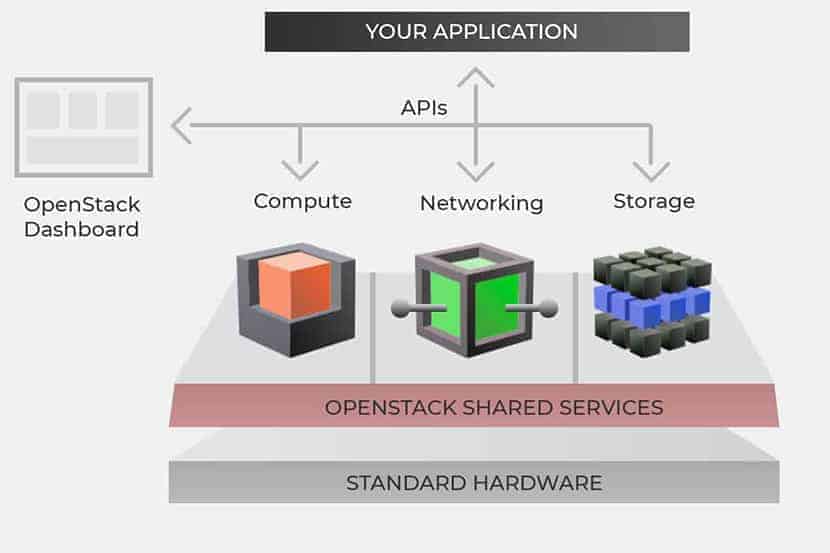

OpenStack is an open-source cloud operating system used to build public and private cloud environments. Made up of multiple interdependent microservices, it offers a production-ready IaaS layer for virtual machines and applications. OpenStack began in July 2010 as a joint project of Rackspace and NASA.

Its goal since then has been to provide an open alternative to the top cloud providers. It’s also considered a cloud operating system that can control large pools of compute, storage, and networking resources throughout a datacenter. All of this is managed through a user-friendly dashboard, which gives users more control by letting them provision resources through a simple graphical web interface. OpenStack is growing in popularity because it offers open-source software to businesses that want to deploy their own private cloud infrastructure rather than use a public cloud platform.

How is OpenStack Used?

OpenStack is known for its complexity. It consists of around sixty components, also called ‘services’, six of which are core components that control the most critical aspects of the cloud: compute, identity and access management, storage management, and networking.

OpenStack comprises a series of commands known as scripts, which are bundled together into packages called projects. The projects are responsible for relaying the tasks that create cloud environments. OpenStack does not virtualize resources itself; instead, it uses them to build clouds.

When it comes to cloud infrastructure management, OpenStack can be employed for the following.

Containers

OpenStack provides a stable foundation for public and private clouds. Containers speed up application delivery while also simplifying application management and deployment. Containers running on OpenStack can thus scale the benefits of containers from single teams up to enterprise-wide, interdepartmental operations.

Network Functions Virtualization

OpenStack can be used for network functions virtualization, and many global communications service providers have it on their agenda. OpenStack separates a network’s key functions so they can be distributed across different environments.

Private Clouds

Private cloud distributions built on OpenStack tend to work better than other DIY approaches, thanks to the easy installation and management facilities OpenStack provides. Its most advantageous feature is its vendor-neutral API, which removes the worry of single-vendor lock-in and offers businesses maximum flexibility in the cloud.
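OpenStack services expose open REST APIs, and a typical first call against any OpenStack cloud is authenticating with the Identity service (Keystone). The Python sketch below only builds the request body for the Identity API v3 password method (POST /v3/auth/tokens). The endpoint, user, password, and project names are hypothetical placeholders, and sending the request is left to whatever HTTP client you use.

```python
import json


def keystone_password_auth_body(username: str, password: str, project: str,
                                domain: str = "Default") -> dict:
    """Build a request body for POST /v3/auth/tokens (Identity API v3, password method)."""
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": username,
                        "domain": {"name": domain},
                        "password": password,
                    }
                },
            },
            # Scope the token to a project so other services accept it.
            "scope": {"project": {"name": project, "domain": {"name": domain}}},
        }
    }


# Hypothetical endpoint and credentials, for illustration only.
KEYSTONE_URL = "https://keystone.example.com:5000"
body = keystone_password_auth_body("demo-user", "s3cret", "demo-project")
print(f"POST {KEYSTONE_URL}/v3/auth/tokens")
print(json.dumps(body, indent=2))
```

On success, Keystone returns the token in the X-Subject-Token response header, and the same token-based flow works against any OpenStack deployment regardless of the vendor behind it.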

Public Clouds

OpenStack is considered one of the leading open-source options for creating public cloud environments. It can be used to set up public clouds with services on the same level as most other major public cloud providers. This makes it useful for small startups and multibillion-dollar enterprises alike.

What are the Differences between Kubernetes and OpenStack?

Both OpenStack and Kubernetes provide solutions for cloud computing and networking in very different ways. Some of the notable differences between the two are explained in the table below.

| Points of Difference | Kubernetes | OpenStack |

| Classification | Classified as a Container tool | Classified as an Open Source Cloud tool |

| User Base | It has a large GitHub community of over 55k users, as well as 19.1k GitHub forks. | Not much of an organized community behind it |