Definitive Cloud Migration Checklist For Planning Your Move

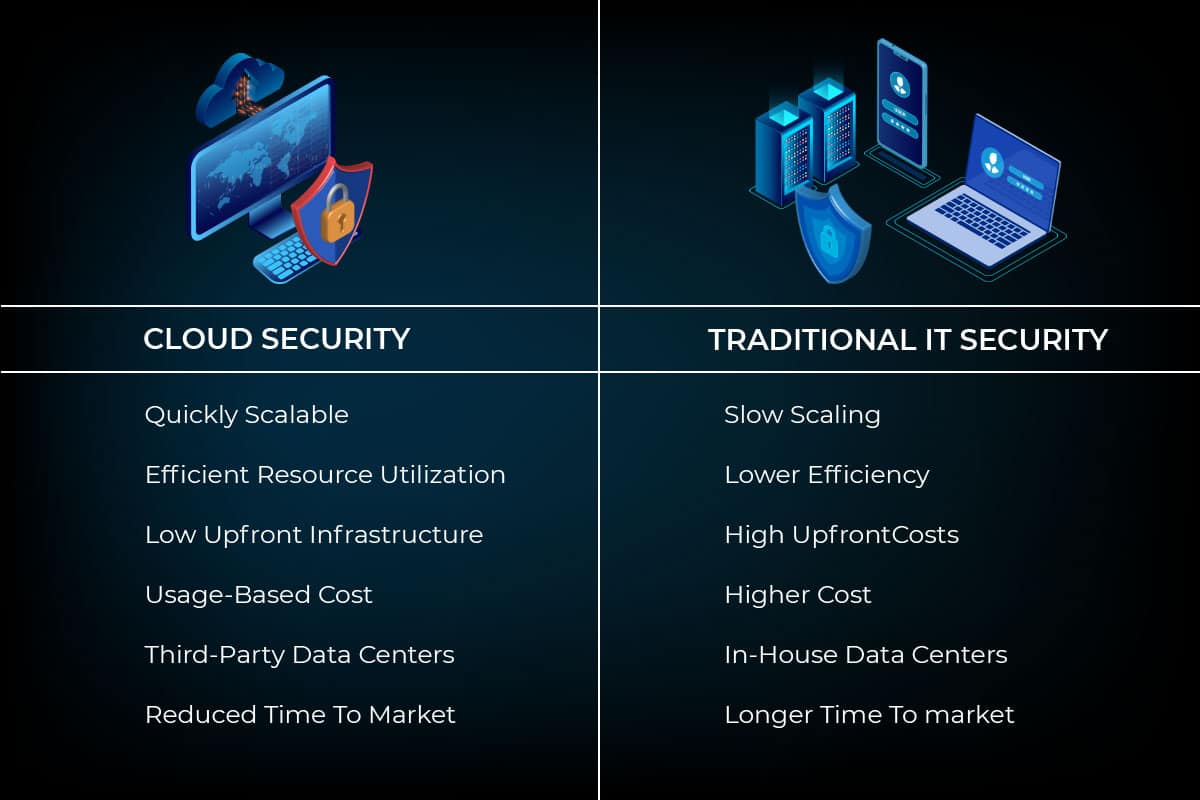

Embracing the cloud may be a cost-effective business solution, but moving data from one platform to another can be an intimidating step for technology leaders.

Ensuring smooth integration between the cloud and traditional infrastructure is one of the top challenges for CIOs. Data migrations do involve a certain degree of risk. Downtime and data loss are two critical scenarios to be aware of before starting the process.

Given the possible consequences, it is worth having a practical plan in place. We have created a useful strategy checklist for cloud migration.

1. Create a Cloud Migration Checklist

Before you start reaping the benefits of cloud computing, you first need to understand the potential migration challenges that may arise.

Only then can you develop a checklist or plan that will ensure minimal downtime and a smooth transition.

There are many challenges involved with the decision to move from on-premise architecture to the cloud. Finding a cloud technology provider that can meet your needs is the first one. After that, everything comes down to planning each step.

The migration itself is the tricky part, since some of your company’s data might be unavailable during the move. You may also have to take your in-house servers temporarily offline. To minimize any negative consequences, every step should be determined ahead of time.

With that said, you need to remain willing to change or rewrite the plan as necessary if something puts your applications and data at risk.

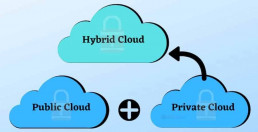

2. Which Cloud Solution To Choose: Public, Private, or Hybrid?

Public Cloud

A public cloud provides services and infrastructure off-site through the internet. While public clouds offer the best opportunity for efficiency by sharing resources, they come with a higher risk of vulnerability and security breaches.

Public clouds make the most sense when you need to develop and test application code, collaborate on projects, or add incremental capacity. Be sure to address security concerns in advance so that they don’t turn into expensive issues in the future.

Private Cloud

A private cloud provides services and infrastructure on a private network. The allure of a private cloud is the complete control over security and your system.

Private clouds are ideal when security is of the utmost importance, especially if the information stored contains sensitive data. They are also the best cloud choice if your company is in an industry that must adhere to stringent compliance or security measures.

Hybrid Cloud

A hybrid cloud is a combination of both public and private options.

Separating your data throughout a hybrid cloud allows you to operate in the environment which best suits each need. The drawback, of course, is the challenge of managing different platforms and tracking multiple security infrastructures.

A hybrid cloud is the best option for you if your business is using a SaaS application but wants to have the comfort of upgraded security.

3. Communication and Planning Are Key

Of course, you should not forget your employees when coming up with a cloud migration project plan. There are psychological barriers that employees must work through.

Some employees, especially older ones who do not entirely trust this mysterious “cloud”, might be tough to convince. Be prepared to spend some time teaching them how the new infrastructure will work, and assure them they will not notice much of a difference.

Not everyone trusts the cloud, particularly those who are used to physical storage drives and everything that they entail. They – not the actual cloud service that you use – might be one of your most substantial migration challenges.

Other factors that go into a successful cloud migration roadmap are testing, runtime environments, and integration points. Some issues can occur if the cloud-based information does not adequately populate your company’s operating software. Such scenarios can have a severe impact on your business and are a crucial reason to test everything.
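One way to test that migrated data arrived intact is to compare checksums of each record before and after the move. The sketch below is a minimal illustration only: the record names and contents are made up, and a real migration would read data from your own storage and from the provider rather than from in-memory dictionaries.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Return the hex SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


def find_mismatches(source: dict[str, bytes], migrated: dict[str, bytes]) -> list[str]:
    """Return the sorted names of records that are missing or differ after migration."""
    bad = []
    for name, payload in source.items():
        copy = migrated.get(name)
        # A record fails the check if it never arrived or its digest changed.
        if copy is None or sha256_of(copy) != sha256_of(payload):
            bad.append(name)
    return sorted(bad)


# Hypothetical sample data: one record arrives intact, one is altered, one is missing.
source = {
    "orders.csv": b"id,total\n1,9.99\n",
    "users.csv": b"id,name\n1,Ann\n",
    "logs.txt": b"ok\n",
}
migrated = {
    "orders.csv": b"id,total\n1,9.99\n",
    "users.csv": b"id,name\n1,Anne\n",
}

print(find_mismatches(source, migrated))
```

Any name returned by `find_mismatches` points at a record to re-transfer or investigate before the old system is switched off.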

A good cloud migration plan considers all of these things, from cost management and employee productivity to operating system stability and database security. Yes, your stored data has some security needs, especially when its administration is partly entrusted to an outside company.

When coming up with and implementing your cloud migration system, remember to take all of these things into account. Otherwise, you may come across some additional hurdles that will make things tougher or even slow down the entire process.

4. Establish Security Policies When Migrating To The Cloud

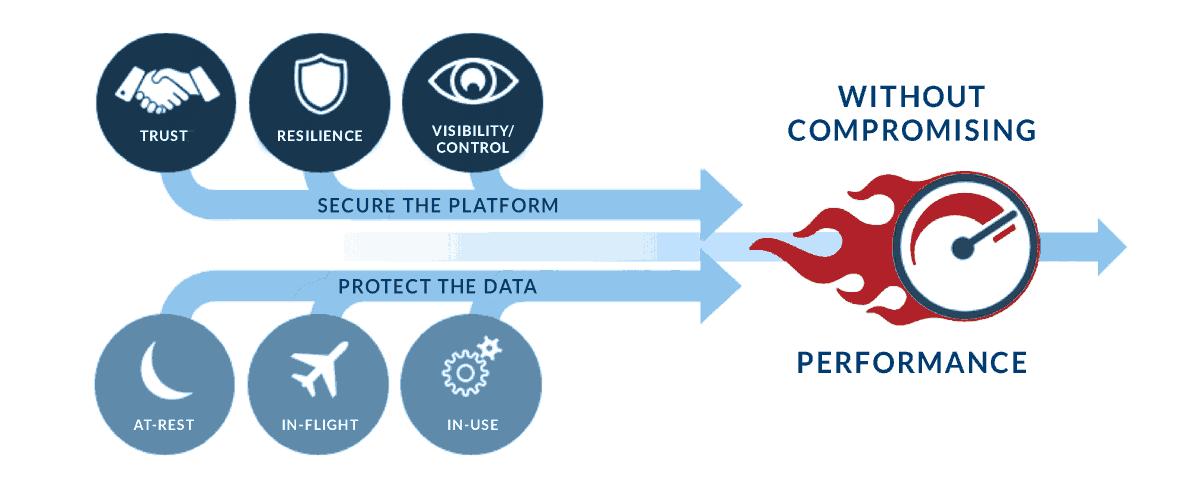

Before you begin your migration to the cloud, you need to be aware of the related security and regulatory requirements.

There are numerous regulations that you must follow when moving to the cloud. These are particularly important if your business is in healthcare or payment processing. In this case, one of the challenges is working with your provider on ensuring your architecture complies with government regulations.

Another security issue is identity and access management for cloud data. To minimize the risk of a breach, only a designated group in your company should have access to that information.
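The idea of limiting access to a designated group can be sketched as a simple deny-by-default check. The group names, users, and datasets below are hypothetical; in practice these rules would live in your cloud provider's identity and access management service, not in application code.

```python
# Hypothetical group membership and per-dataset permissions.
GROUP_MEMBERS = {
    "cloud-admins": {"alice", "bob"},
    "finance": {"carol"},
}
DATASET_ACL = {
    "customer-records": {"cloud-admins"},
    "invoices": {"cloud-admins", "finance"},
}


def can_access(user: str, dataset: str) -> bool:
    """Allow access only if the user belongs to a group listed for the dataset."""
    # Datasets without an entry have no allowed groups, so access is denied.
    allowed_groups = DATASET_ACL.get(dataset, set())
    return any(user in GROUP_MEMBERS.get(group, set()) for group in allowed_groups)


print(can_access("alice", "customer-records"))
print(can_access("carol", "customer-records"))
```

Denying by default means a dataset that nobody remembered to configure stays closed rather than open.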

Whether your company needs to follow HIPAA Compliance laws, protect financial information or even keep your proprietary systems private, security is one of the main points your cloud migration checklist needs to address.

Not only does the data in the cloud need to be stored securely, but the application migration strategy should keep it safe as well. No one who is not supposed to have that information, hackers included, should be able to access it during the migration process. Plus, once the business data is in the cloud, it needs to be kept safe when it is not in use.

It needs to be encrypted according to the highest standards to be able to resist breaches. Whether it resides in a private or public cloud environment, encrypting your data and applications is essential to keeping your business data safe.

Many third-party cloud server companies have their security measures in place and can make additional changes to meet your needs. The continued investments in security by both providers and business users have a positive impact on how the cloud is perceived.

According to recent reports, security concerns fell from 29% to 25% last year. While this is a positive trend in both business and cloud industries, security is still a sensitive issue that needs to be in focus.

5. Plan for Efficient Resource Management

Most businesses find it hard to realize that the cloud often requires them to introduce new IT management roles.

With a set configuration and cloud monitoring tools, tasks switch to a cloud provider. A number of roles stay in-house. That often involves hiring an entirely new set of talents.

Employees who previously managed physical servers may not be the best ones to deal with the cloud.

There might be migration challenges that are over their heads. In fact, you will probably find that the third-party company that you contracted to handle your migration needs is the one who should be handling that segment of your IT needs.

This situation is something else that your employees may have to get used to: calling the provider when something happens and they cannot get the information that they need.

While you should not get rid of your IT department altogether, you will have to change some of their functions over to adjust to the new architecture.

However, there is another type of cloud migration resource management that you might have overlooked – physical resource management.

When you have a company server, you have to have enough electricity to power it securely. You need a cold room to keep the computers in, and even some precautionary measures in place to ensure that sudden power surges will not harm the system. These measures cost quite a bit of money in upkeep.

When you use a third-party data center, you no longer have to worry about these things. The provider manages the servers and is in place to help with your cloud migration. Moreover, it can assist you with any further business needs you may have. It can provide you with additional hardware, remote technical assistance, or even set up a disaster recovery site for you.

These possibilities often make the cloud pay for itself.

According to a survey of 1,037 IT professionals by TechTarget, companies spend around 31% of their dedicated cloud spending budgets on cloud services. This figure continues to increase as businesses continue discovering the potential of the cloud.

6. Calculate your ROI

Cloud migration is not inexpensive. You need to pay for the cloud server space and the engineering involved in moving and storing your data.

However, although this appears to be one of the many migration challenges, it is not. As cloud storage has become popular, its costs have been falling. The return on investment (ROI) for cloud storage also makes the price worthwhile.

According to a survey conducted in September 2017, 82% of organizations found that their cloud migration met or exceeded their ROI expectations. Another study showed, however, that costs still tend to run slightly higher than planned.

In this study, 58% of the people responding spent more on cloud migration than planned. The ROI is not affected as they still may have saved money in the long run, even if the original migration challenges sent them over budget.
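To see how a migration can go over budget and still deliver a positive return, it helps to work through the arithmetic. The sketch below uses the common definition ROI = (savings - cost) / cost. All figures are invented for illustration and are not taken from the surveys mentioned above.

```python
def roi(total_savings: float, total_cost: float) -> float:
    """Return ROI as a fraction: (savings - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_savings - total_cost) / total_cost


def migration_roi(planned_cost: float, overrun_fraction: float,
                  annual_savings: float, years: int) -> float:
    """ROI over a number of years when the migration ran over its planned budget."""
    actual_cost = planned_cost * (1 + overrun_fraction)
    return roi(annual_savings * years, actual_cost)


# Hypothetical figures: a 100,000 budget overrun by 25%, saving 50,000 a year for 4 years.
result = migration_roi(planned_cost=100_000, overrun_fraction=0.25,
                       annual_savings=50_000, years=4)
print(f"ROI: {result:.0%}")
```

With these made-up numbers the actual cost is 125,000 and the savings total 200,000, so the return stays positive despite the overrun.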

One of the reasons people see a positive ROI is that they no longer have to maintain their own server farm. Keeping a physical server system running uses up quite a few physical utilities, due to the need to keep it powered and cool.

You will also need employees to keep the system architecture up to date and troubleshoot any problems. With a cloud server, these expenses go away. There are other advantages to using a third party server company, including the fact that these businesses help you with cloud migration and all of the other details.

The survey included some additional data, including the fact that most people who responded – 68% of them – accepted the help of their contracted cloud storage company to handle the migration. An overwhelming majority also used the service to help them come up with and implement a cloud migration plan.

Companies are not afraid to turn to the experts when it comes to this type of IT service. Not everyone knows everything, so it is essential to know when to reach out with questions or when implementing a new service.

Final Thoughts on Cloud Migration Planning

If you’re still considering the next steps for your cloud migration, the tactics outlined above should help you move forward. A migration checklist is the foundation for your success and should be your first step.

Cloud migration is not a simple task. However, by understanding and preparing for the challenges, you can migrate successfully.

Remember to evaluate what is best for your company and move forward with a trusted provider.

NSX-V vs NSX-T: Discover the Key Differences

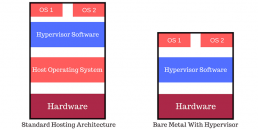

Virtualization has changed the way data centers are built. Modern data centers utilize physical servers and hardware as hypervisors to run virtual machines. Virtualizing these functions enhances the flexibility, cost-effectiveness, and scalability of the data center. VMware is a leader in the virtualization platform market space. It allows for multiple virtual machines to run on a single physical machine.

One of the most important elements of each data center, including virtualized ones, is the network. Companies that require large or complex network configurations prefer using software-defined networking (SDN).

SDN is an architecture designed to make networks agile and flexible. It improves network control by equipping companies and service providers with the ability to rapidly respond and adapt to changing technical requirements. It’s a dynamic technology in the world of virtualization.

VMware

In the virtualization market space, VMware is one of the biggest names, offering a wide range of products connected to their virtual workstation, network virtualization, and security platform. VMware NSX has two variants of the product called NSX-V and NSX-T.

In this article, we explore VMware NSX and examine some differences between VMware NSX-V and VMware NSX-T.

What is NSX?

NSX refers to a specialized software-defined networking solution offered by VMware. Its main function is to provide virtualized networking to its users. NSX Manager is the centralized component of NSX, which is used for the management of networks. NSX also provides essential security measures to ensure that the virtualization process is safe and secure.

Businesses seeing the scale and complexity of their networks growing rapidly will need greater power invested in visibility and management. Modernization can be achieved in the implementation of a top-grade data center SDN solution with agile controls. SDN empowers this vision by centralizing and automating management and control.

What is NSX-T?

NSX-T by VMware offers an agile software-defined infrastructure for building cloud-native application environments. It aims to provide automation, simplicity in operations, networking and security.

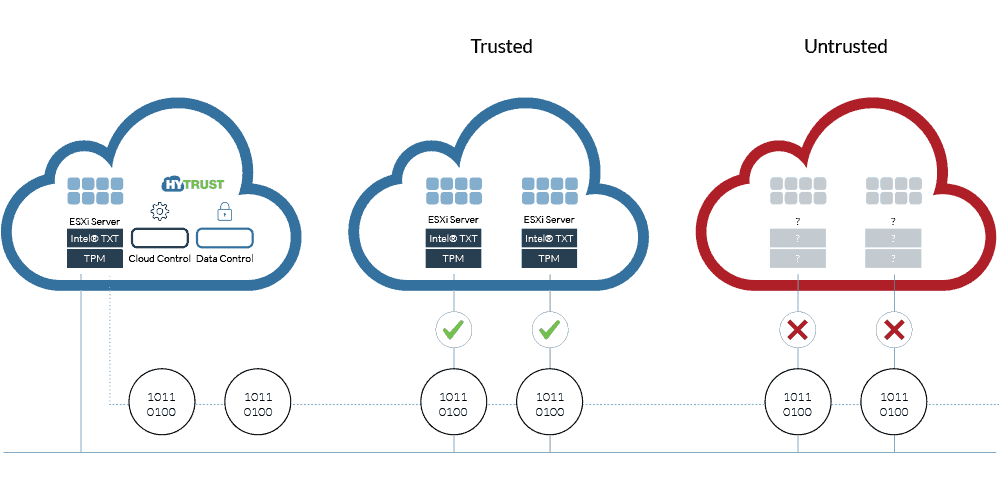

NSX-T supports multiple clouds, multi-hypervisor environments, and bare-metal workloads. It also supports cloud-native applications. NSX-T provides a network virtualization stack for OpenStack, Kubernetes, KVM, and Docker as well as AWS native workloads. It can be deployed without a vCenter Server, and it is adapted for heterogeneous compute systems. NSX-T is considered the future of VMware.

What is NSX-V?

NSX-V architecture features deployment reconfiguration, rapid provisioning, and destruction of the on-demand virtual networks. It integrates with VMware vSphere and is specific to hypervisor environments. Such design utilizes the vSphere distributed switch, allowing a single virtual switch to connect multiple hosts in a cluster.

NSX Components

The primary components of VMware NSX are NSX Edge gateways, NSX Manager, and NSX Controllers.

NSX Manager is a primary component that works to manage networks, from a private data center to native public clouds. With NSX-V, the NSX Manager works with one vCenter Server. In the case of NSX-T, the NSX Manager can be deployed as an ESXi VM, a KVM VM, or NSX Cloud. NSX-T Manager runs on the Ubuntu operating system, while NSX-V Manager runs on Photon OS. The NSX Controller is the central hub: it controls all logical switches within a network and maintains information about all virtual machines, VXLANs, and hosts.

NSX Edge

NSX Edge is a gateway service that allows VMs access to physical and virtual networks. It can be installed as a services gateway or as a distributed virtual router and provides the following services: Firewalls, Load Balancing, Dynamic routing, Dynamic Host Configuration Protocol (DHCP), Network Address Translation (NAT), and Virtual Private Network (VPN).

NSX Controllers

NSX Controllers form a distributed state management system that controls virtual networks and overlay transport tunnels. The controllers are deployed as VMs on KVM or ESXi hypervisors. They monitor and control all logical switches within the network, and manage information about VMs, VXLANs, switches, and hosts. Structured with three controller nodes, the cluster ensures data redundancy if one NSX Controller node malfunctions or fails.
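The reason a three-node cluster can survive the loss of one node can be illustrated with a simple majority rule. The sketch below assumes a generic majority-based cluster; it does not use any NSX API and is not a description of VMware's internal implementation.

```python
def has_quorum(total_nodes: int, healthy_nodes: int) -> bool:
    """A majority-based cluster keeps working while more than half its nodes are healthy."""
    return healthy_nodes > total_nodes // 2


def tolerated_failures(total_nodes: int) -> int:
    """Largest number of nodes that can fail while a majority remains."""
    return (total_nodes - 1) // 2


# With three nodes, one failure still leaves a two-node majority.
print(tolerated_failures(3))
print(has_quorum(total_nodes=3, healthy_nodes=2))
print(has_quorum(total_nodes=3, healthy_nodes=1))
```

Under this rule, adding a fourth node would not raise the number of tolerated failures, which is one reason odd cluster sizes are common.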

Features of NSX

There are many similar features and capabilities for both NSX types. These include:

- Distributed routing

- API-driven automation

- Detailed monitoring and statistics

- Software-based overlay

- Enhanced user interface

There are many differences as well. For example, NSX-T is cloud-focused and is not tied to any specific platform or hypervisor, whereas NSX-V offers tight integration with vSphere. NSX-V also uses a manual process to configure the IP addressing scheme for network segments. The APIs differ between NSX-V and NSX-T as well.

To better understand these concepts, view the VMware NSX-V vs NSX-T table below.

VMware NSX-V vs NSX-T – Feature Comparison

| Comparison of Features | NSX-V | NSX-T |
| --- | --- | --- |
| Basic Functions | NSX-V offers rich features such as deployment reconfiguration and rapid provisioning and destruction of any on-demand virtual network. This allows a single virtual switch to connect to multiple hosts in a cluster, by utilizing the vSphere distributed switch. | NSX-T provides users with an agile software-defined infrastructure. It can be used for building cloud-native application environments. It also provides simplicity when it comes to operations in networking and security. Multiple clouds, multi-hypervisor environments, and bare-metal workloads are all supported by its data structure. |
| Origins | Originally released in 2012, NSX-V is built around the VMware vSphere environment. | NSX-T also originates from the vSphere ecosystem, designed to address some of the use cases not covered by NSX-V. |
| Coverage | NSX-V is designed for the sole purpose of allowing on-premises (physical network) vSphere deployments. A single NSX-V manager can work only with a single VMware vCenter server instance. It is only applicable for VMware Virtual Machines. This leaves a significant coverage gap, leaving out organizations and businesses using hybrid infrastructure models. | NSX-T extends its coverage to include multi-hypervisors, containers, public clouds, and bare metal servers. Since it is decoupled from VMware’s hypervisor platform, it can easily incorporate agents. This is done to perform micro-segmentation even on non-VMware platforms. NSX-T’s limitations include some feature gaps. It also leaves out certain micro-segmentation solutions like Guardicore Centra. |
| Working with NSX Manager | NSX-V works with only one vCenter Server. It runs on Photon OS. | NSX-T can be deployed as an ESXi VM, a KVM VM, or NSX Cloud. It runs on the Ubuntu operating system. |
| Deployment | NSX-V requires registration with VMware vCenter, as the NSX Manager needs to be registered. The NSX Manager calls for extra NSX Controllers for deployment. | NSX-T requires the ESXi hosts or transport nodes to be registered first. The NSX Manager acts as a standalone solution. NSX-T requires users to configure the N-VDS, which includes the uplink. |
| Routing | NSX-V uses network edge security and gateway services which are used to isolate virtualized networks. NSX Edge is installed both as a logical distributed router and as an edge services gateway. | NSX-T routing is designed for cloud environments and multi-cloud use. It is designed for multi-tenancy use cases. |
| Overlay encapsulation protocols | VXLAN – NSX-V uses the VXLAN encapsulation protocol | GENEVE – NSX-T uses GENEVE, which is a more advanced protocol |
| Logical switch replication modes | Unicast, Multicast, Hybrid | Unicast (Two-tier or Head) |
| Virtual switches (N-VDS) used | vSphere Distributed Switch (VDS) | Open vSwitch (OVS) or VDS |
| Two-tier distributed routing | Not Available | Available |
| ARP Suppression | Available | Available |
| Integration for traffic inspection | Available | Not Available |
| Configuring IP addresses scheme for network segments | Manual | Automatic |
| Kernel Level Distributed Firewall | Available | Available |
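To make the overlay encapsulation row more concrete, the sketch below builds the fixed 8-byte headers that VXLAN (RFC 7348) and GENEVE (RFC 8926) place in front of an encapsulated Ethernet frame. It is an illustration of the published header layouts only, not NSX code: the function names are made up, and the GENEVE variable-length options (the main thing that makes it more extensible) are left empty.

```python
import struct

VXLAN_UDP_PORT = 4789   # IANA-assigned UDP port for VXLAN
GENEVE_UDP_PORT = 6081  # IANA-assigned UDP port for GENEVE
MAX_VNI = 2**24 - 1     # both protocols carry a 24-bit network identifier


def _check_vni(vni: int) -> None:
    if not 0 <= vni <= MAX_VNI:
        raise ValueError("VNI must fit in 24 bits")


def vxlan_header(vni: int) -> bytes:
    """8 bytes: flags (I bit set), 24 reserved bits, 24-bit VNI, 8 reserved bits."""
    _check_vni(vni)
    return struct.pack("!II", 0x08 << 24, vni << 8)


def geneve_header(vni: int, opt_len_words: int = 0) -> bytes:
    """8-byte base header: version/option length, flags, protocol type, VNI, reserved."""
    _check_vni(vni)
    if not 0 <= opt_len_words <= 63:
        raise ValueError("option length must fit in 6 bits")
    ver_optlen = (0 << 6) | opt_len_words  # version 0, option length in 4-byte words
    ethernet = 0x6558                      # Transparent Ethernet Bridging payload
    return struct.pack("!BBHI", ver_optlen, 0, ethernet, vni << 8)


def vni_of(header: bytes) -> int:
    """Both formats store the VNI in bytes 4 to 6 of the header."""
    return int.from_bytes(header[4:7], "big")


print(vxlan_header(5000).hex())
print(geneve_header(5000).hex())
```

Both headers carry the same 24-bit network identifier; the difference is that GENEVE adds a protocol type field and room for options after the base header.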

Deployment Options

The process of deployment looks quite similar for both, yet there are many differences between the NSX-V and NSX-T features. Here are some critical differences in deployment:

- With NSX-V, there is a requirement to register with VMware vCenter. An NSX Manager needs to be registered.

- NSX-T allows pointing the NSX-T solution to the VMware vCenter for registering the ESXi hosts or Transport Nodes.

- NSX-V Manager calls for extra NSX Controllers for deployment, whereas NSX-T Manager provides a standalone solution.

- NSX-T Manager integrates controller functionality and NSX Manager in a single virtual appliance, so NSX-T Manager becomes a combined appliance.

- NSX-T has an extra configuration of N-VDS which should be completed. This includes the uplink.

Routing

The differences in routing are evident between NSX-T and NSX-V. NSX-T is designed for the cloud and multi-cloud. It is intended for multi-tenancy use cases, which require the support of multi-tier routing.

NSX-V features network edge security and gateway services, which can isolate virtualized networks. NSX Edge is installed as a logical distributed router. It is also installed as an edge services gateway.

Choosing between NSX-V and NSX-T

The major differences are evident in the table above and help us understand the variables in NSX-V vs. NSX-T systems. One is closely associated with the VMware ecosystem. The other is unrestricted, not focused on any specific platform or hypervisor. To identify which option fits best, take into consideration how each will be used and where it will run:

Choosing NSX-V:

- NSX-V is recommended when a customer already has a virtualized application in the data center and wants to create network virtualization for that current application.

- NSX-V suits customers who value the presence of several tightly integrated features.

| Use Cases For NSX-V: |
| --- |
| Security – Secure end-user, DMZ anywhere |
| Application continuity – Disaster recovery, Multi data center pooling, Cross cloud |

Choosing NSX-T:

- You want to build modern applications on platforms such as Pivotal Cloud Foundry or OpenShift. NSX-T is recommended here due to the vSphere enrollment support (migration coordinator) it provides.

- You run multiple types of hypervisors.

- Your network interfaces with modern applications.

- You are using multi-cloud-based and cloud networking applications.

- You are using a variety of environments.

| Use Cases For NSX-T: |
| --- |
| Security – Micro-segmentation |
| Automation – Automating IT, Developer cloud, Multi-tenant infrastructure |

Note: VMware NSX-V and NSX-T have many distinct features, a totally different code base, and cater to different use cases.

Conclusion: VMware’s NSX Options Provide a Strong Network Virtualization Platform

NSX-T and NSX-V both solve many virtualization issues, offer full feature sets, and provide an agile and secure environment. NSX-V is the proven and original software-defined solution. It is best if you need a network virtualization platform for existing applications.

NSX-T is the way of the future. It provides you with all of the necessary tools for moving your data, no matter the underlying physical network, and helps you adjust to the constant change in applications.

The choice you make depends on which NSX features meet your business needs. What do you use or prefer? Contact us for more information on NSX-T pricing and NSX-V to NSX-T migration. Keep reading our blog to learn more about different tools and how to find best-suited solutions for your networking requirements.

Cloud-Native Application Architecture: The Future of Development?

Cloud native application architecture allows software and IT to work together in a faster modern environment.

Cloud-native design is defined by how applications are built, packaged, and distributed, rather than by where they are created and stored. When creating these applications, you retain complete control and have the final say in the process.

Even if you are not currently hosting your application in the cloud, this article will influence how you develop modern applications moving forward. Read on to find out what cloud native is, how it works, and its future implications.

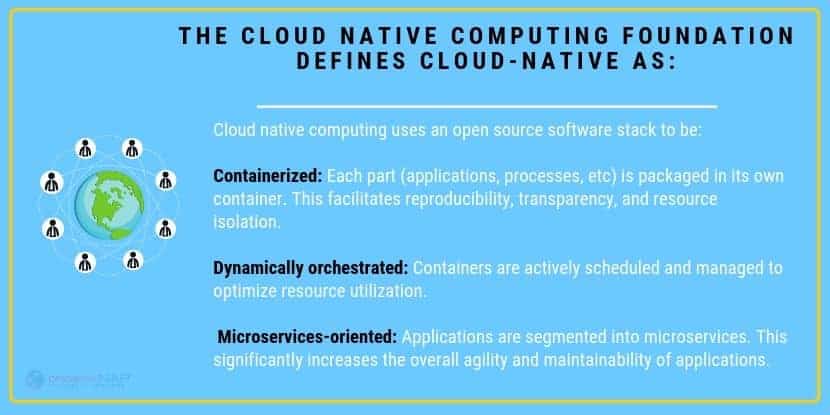

What is Cloud-Native? Defined

In application terms, cloud native refers to container-based environments and applications packaged as microservices. Cloud-native technologies are used to build applications from several services that are packaged together, deployed together, and managed on cloud infrastructure using DevOps processes that provide uninterrupted delivery workflows. This microservices architectural approach breaks an application into smaller bundled services.

What is a Cloud-Native Architecture?

Cloud-native architecture is built specifically to run in the cloud.

Cloud-native apps start as packaged software called containers. Containers are run through a virtual environment and become isolated from their original environments to make them independent and portable. You can run your personalized design through test systems to see where it’s located. Once you’ve tested it, you can edit to add or remove options.

Cloud-native development allows you to build and update applications quickly while improving quality and reducing risk. It’s efficient, responsive, and scalable. The result is fault-tolerant apps that can be run anywhere: in public or private environments, or in hybrid clouds. You can test and build your application until it is precisely how you want it to be. For development aspects that you are not an expert in, you can easily outsource them.

The architecture of your system can be built up with the help of microservices. With the help of these services, you can set up the smaller parts of your apps individually, instead of reworking the entire app all at once. More specifically, with DevOps and containers, applications become easier to update and release. Because the app is a collection of loosely connected services, each microservice can be upgraded on its own instead of waiting for one significant release, which takes more time and effort.

Lastly, you’ll want to make sure your application has access to the elasticity of the cloud. This elasticity allows your developers to push code to production much faster than in traditional server-based models. You can move and scale your app’s resources at any time.

What Are The Characteristics of Cloud Native Applications?

Now that you know the basics about cloud-native apps, here are a few design principles that you should discuss with your developer in the development stages:

Develop With The Best Languages And Frameworks

All services of cloud-native applications are made using the best languages and frameworks. Make sure you can choose the language and framework that suit your apps best.

Build With APIs For Collaboration & Interaction

Find out whether you’ll be using fine-grained, API-driven services for interaction and collaboration between the parts of your app, which may be based on different protocols. For example, gRPC, Google’s open-source remote procedure call framework, is used for communication between services.

Agile DevOps & Automation

Confirm that your app can be fully automated, which is necessary for managing large applications.

How is your application being defined via policies? Such policies include CPU and storage quotas and network policies. The difference between you and an IT department when it comes to these policies is that you are the owner and have access to everything, whereas the department doesn’t.

Managing your app through DevOps will give your app its own independent life. See how different pipelines may work together to send out and manage your application.

Building Cloud-Native Applications

Application development will differ from developer to developer, depending on their skills and capabilities. Common to most cloud-native apps are the following characteristics, which are added in during the development process.

- Updates – Your app will always be available and up to date.

- Multitenancy – The app will work in a virtual space, sharing resources with other applications.

- Downtime – If a cloud provider has an outage, another data center can pick up where it left off.

- Automation – Speed, and agility rely on audited, reliable, and proven processes that are repeated, as needed.

- Languages – Cloud-native apps can be written in many languages, including Java, .NET languages such as C#, PHP, Ruby, JavaScript (Node.js), Python, and Go. The architecture does not rule out any particular language.

- Statelessness – With cloud-native apps being loosely coupled, apps aren’t tied to anything. The app stores its state in a database or another external entity, where it can still be found easily.

- Designed modularly – Microservices run the functions of your app. They can be shut off when not needed, or be updated in one section, rather than the entire app being shut down.
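The statelessness characteristic above can be sketched as a request handler that keeps nothing in its own memory between calls and reads and writes all state through an external store. The `ExternalStore` class here is a hypothetical in-memory stand-in for a real database or cache, used only so the example runs on its own.

```python
class ExternalStore:
    """Stand-in for a database or cache shared by every instance of the app."""

    def __init__(self) -> None:
        self._data: dict[str, int] = {}

    def get(self, key: str, default: int = 0) -> int:
        return self._data.get(key, default)

    def set(self, key: str, value: int) -> None:
        self._data[key] = value


def handle_visit(store: ExternalStore, user_id: str) -> int:
    """Count a visit. All state lives in the store, none in the handler."""
    count = store.get(user_id) + 1
    store.set(user_id, count)
    return count


shared = ExternalStore()
# Any instance of the service could run either call, because neither
# depends on what a previous call left behind in local memory.
first = handle_visit(shared, "user-1")
second = handle_visit(shared, "user-1")
print(first, second)
```

Because the handler holds no state of its own, instances can be started, stopped, or replaced freely, which is what lets a cloud platform scale them up and down.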

The Future of Cloud-Native Technologies

Cloud-native has already proven with its efficiencies that it is the future of developing software. By 2025, 80% of enterprise apps will become cloud-based or be in the process of transferring themselves over to cloud-native apps. IT departments are already switching to developing cloud-native apps to save money and keep their designs secure off-site, safe from competitors.

Adopting now will save you the hassle of doing it later, when it’s more expensive.

By switching over to cloud-native apps, you’ll be able to see first-hand what they have to offer and benefit from running them yourself for years to come. Now that you know how to take advantage of new types of infrastructure, you can continue to improve it by giving your app developers the tools they need. Go cloud-native and get the benefits of flexible, scalable, reusable apps that use the best container and cloud technology available.

What to Look for When Outsourcing Cloud Native Apps

During the planning process, many companies decide to hire a freelancer to help out in developing and executing a cloud-native strategy. It pays off to have a developer experienced in increasing the speed of applications development and organizing compute resources across different environments. It can save you time, money, and a lot of frustration.

When looking for an application developer, remember to take these things into consideration:

- Trust – Ensure that they will keep your information safe and secure.

- Quality – Have they produced and provided high-quality services to other businesses?

- Price – In creating your own apps, you don’t want to overspend. Compare prices and services to keep costs down.

Cloud-native development helps your company derive more value from hybrid cloud architecture. It’s important to partner with a company or contractor that has experience and a great track record.

Go cloud-native and partner with PhoenixNAP Global IT Services. Contact us today for more information.

What is a Security Operations Center (SOC)? Best Practices, Benefits, & Framework

In this article you will learn:

- Understand what a Security Operations Center is and how active detection and response prevent data breaches.

- Six pillars of modern security operations you can’t afford to overlook.

- The eight forward-thinking SOC best practices to keep an eye on for the future of cybersecurity, including an overview and comparison of current framework models.

- Discover why your organization needs to implement a security program based on advanced threat intelligence.

- In-house or outsource to a managed security provider? We help you decide.

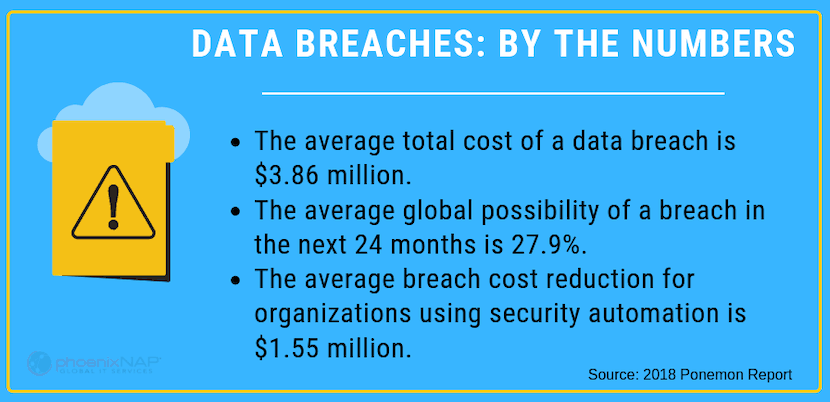

The average total cost of a data breach in 2018 was $3.86 million. As businesses grow increasingly reliant on technology, cybersecurity is becoming a more critical concern.

Cloud security can be a challenge, particularly for small to medium-sized businesses that don’t have a dedicated security team on-staff. The good news is that there is a viable option available for companies looking for a better way to manage security risks – security operations centers (SOCs).

In this article, we’ll take a closer look at what SOCs are and the benefits that they offer. We will also take a look at how businesses of all sizes can take advantage of SOCs for data protection.

What is a Security Operations Center?

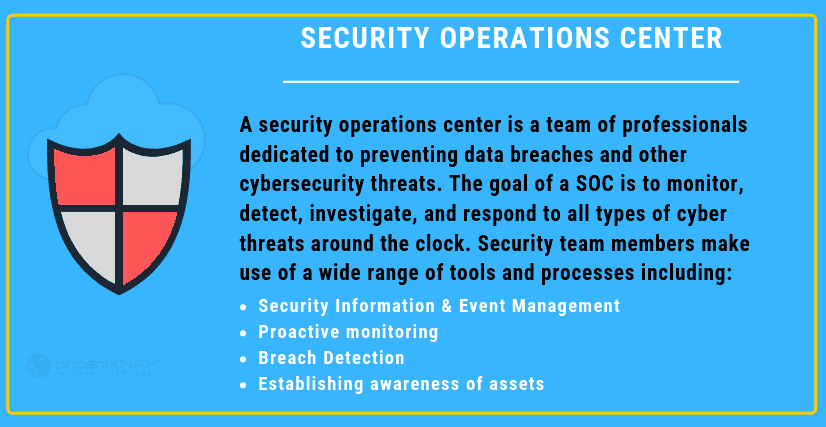

A security operations center is a team of cybersecurity professionals dedicated to preventing data breaches and other cybersecurity threats. The goal of a SOC is to monitor, detect, investigate, and respond to all types of cyber threats around the clock.

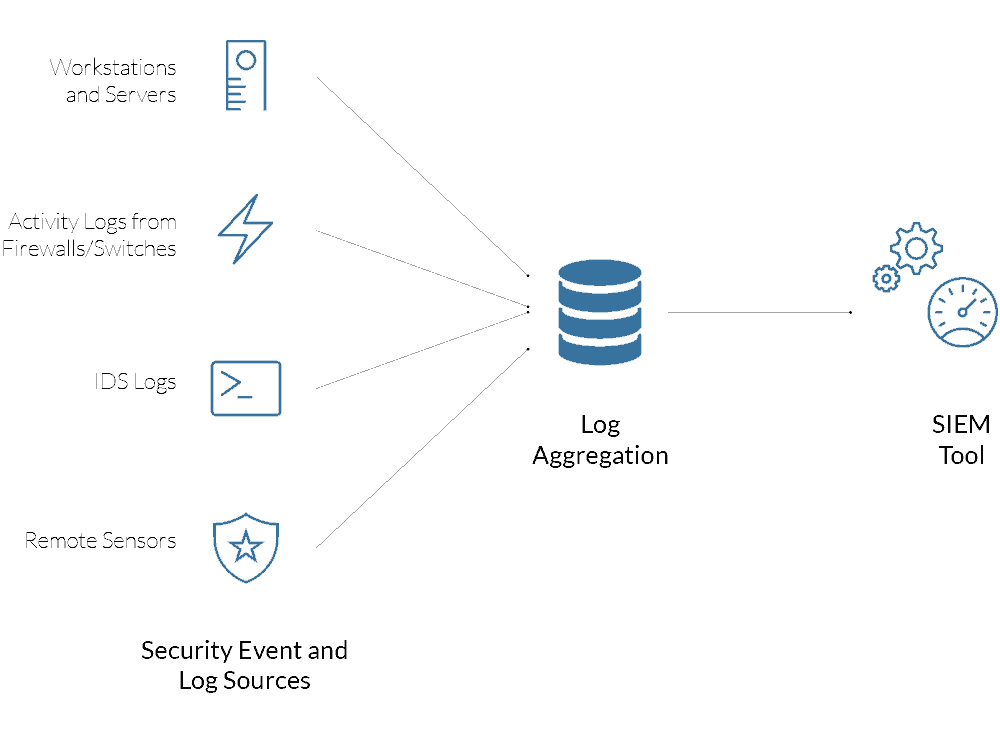

Team members make use of a wide range of technological solutions and processes. These include security information and event management systems (SIEM), firewalls, breach detection, intrusion detection, and probes. SOCs have many tools to continuously perform vulnerability scans of a network for threats and weaknesses and address those threats and deficiencies before they turn into a severe issue.

It may help to think of a SOC as an IT department that is focused solely on security as opposed to network maintenance and other IT tasks.

6 Pillars of Modern SOC Operations

Companies can choose to build a security operations center in-house or outsource to a managed security service provider (MSSP) that offers SOC services. For small to medium-sized businesses that lack resources to develop their own detection and response team, outsourcing to a SOC service provider is often the most cost-effective option.

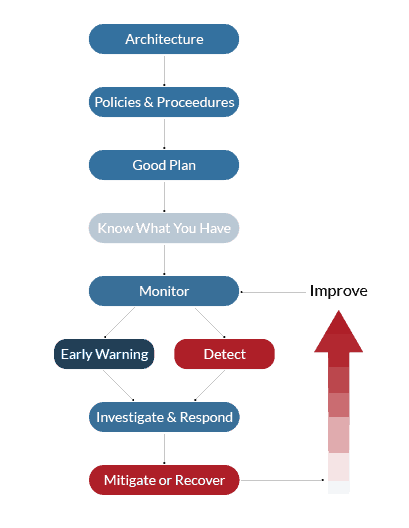

Through the six pillars of security operations, you can develop a comprehensive approach to cybersecurity.

- Establishing Asset Awareness

The first objective is asset discovery. The tools, technologies, hardware, and software that make up these assets may differ from company to company, and it is vital for the team to develop a thorough awareness of the assets that they have available for identifying and preventing security issues.

- Preventive Security Monitoring

When it comes to cybersecurity, prevention is always going to be more effective than reaction. Rather than responding to threats as they happen, a SOC will work to monitor a network around-the-clock. By doing so, they can detect malicious activities and prevent them before they can cause any severe damage.

- Keeping Records of Activity and Communications

In the event of a security incident, SOC analysts need to be able to retrace activity and communications on a network to find out what went wrong. To do this, the team is tasked with detailed log management of all the activity and communications that take place on a network.

- Ranking Security Alerts

When security incidents do occur, the incident response team works to triage the severity. This enables a SOC to prioritize their focus on preventing and responding to security alerts that are especially serious or dangerous to the business.

- Modifying Defenses

Effective cybersecurity is a process of continuous improvement. To keep up with the ever-changing landscape of cyber threats, a security operations center works to continually adapt and modify a network’s defenses on an ongoing, as-needed basis.

- Maintaining Compliance

In 2019, there are more compliance regulations and mandatory protective measures regarding cybersecurity than ever before. In addition to threat management, a security operations center also must protect the business from legal trouble. This is done by ensuring that they are always compliant with the latest security regulations.
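The alert-ranking pillar above can be sketched in a few lines. This is only an illustration: the severity scale, the `Alert` fields, and the sample alerts are all assumptions made for the example, not part of any particular SOC product.

```python
from dataclasses import dataclass

# Hypothetical severity scale; a real SOC would define its own levels.
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass
class Alert:
    name: str
    severity: str             # one of the keys in SEVERITY_RANK
    asset_is_critical: bool   # does the alert touch a business-critical asset?


def triage(alerts):
    """Return alerts ordered most urgent first.

    Alerts are sorted by severity; within the same severity, alerts that
    touch a business-critical asset come first.
    """
    return sorted(
        alerts,
        key=lambda a: (SEVERITY_RANK[a.severity], not a.asset_is_critical),
    )


alerts = [
    Alert("Port scan from unknown host", "low", False),
    Alert("Ransomware signature on file server", "critical", True),
    Alert("Outdated TLS certificate", "medium", False),
    Alert("Repeated failed logins", "medium", True),
]

queue = triage(alerts)
for alert in queue:
    print(alert.severity, "-", alert.name)
```

Sorting on a tuple keeps the rule easy to audit: severity decides first, and asset criticality only breaks ties.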

Security Operations Center Best Practices

As you go about building a SOC for your organization, it is essential to keep an eye on what the future of cybersecurity holds in store. Doing so allows you to develop practices that will secure the future.

SOC Best Practices Include:

Widening the Focus of Information Security

Cloud computing has given rise to a wide range of new cloud-based processes. It has also dramatically expanded the virtual infrastructure of most organizations. At the same time, other technological advancements such as the internet of things have become more prevalent. This means that organizations are more connected to the cloud than ever before. However, it also means that they are more exposed to threats than ever before. As you go about building a SOC, it is crucial to widen the scope of cybersecurity to continually secure new processes and technologies as they come into use.

Expanding Data Intake

When it comes to cybersecurity, collecting data can often prove incredibly valuable. Gathering data on security incidents enables a security operations center to put those incidents into the proper context. It also allows them to identify the source of the problem better. Moving forward, an increased focus on collecting more data and organizing it in a meaningful way will be critical for SOCs.

Improved Data Analysis

Collecting more data is only valuable if you can thoroughly analyze it and draw conclusions from it. Therefore, an essential SOC best practice to implement is a more in-depth and more comprehensive analysis of the data that you have available. Focusing on better data security analysis will empower your SOC team to make more informed decisions regarding the security of your network.

Take Advantage of Security Automation

Cybersecurity is becoming increasingly automated. Applying DevSecOps best practices to automate the more tedious and time-consuming security tasks frees up your team to focus their time and energy on other, more critical tasks. As cybersecurity automation continues to advance, organizations need to focus on building SOCs that are designed to take advantage of the benefits that automation offers.

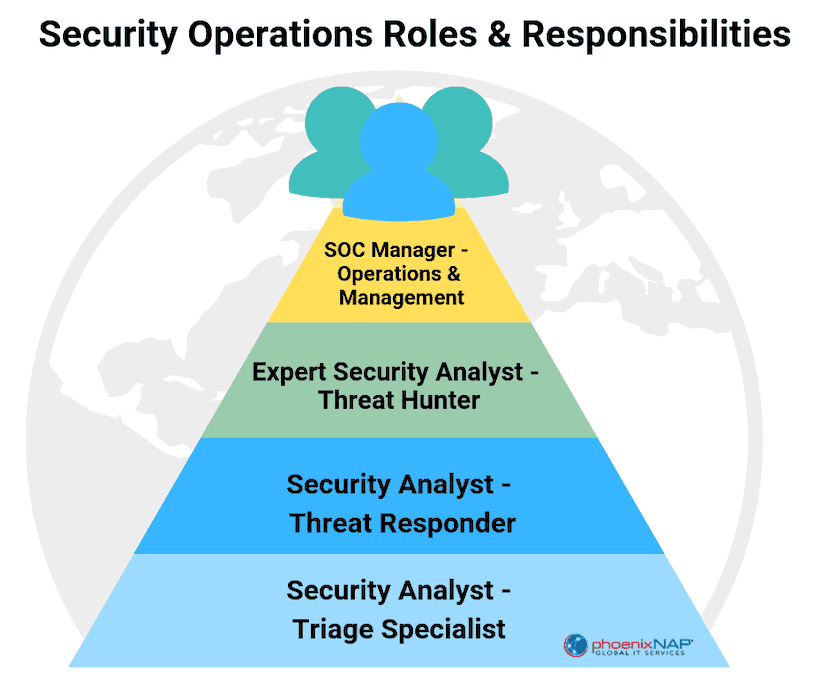

Security Operations Center Roles and Responsibilities

A security operations center is made up of a number of individual team members. Each team member has unique duties. The specific team members that comprise the incident response team may vary. Common positions – along with their roles and responsibilities – that you will find in a security team include:

- SOC Manager

The manager is the head of the team. They are responsible for managing the team, setting budgets and agendas, and reporting to executive managers within the organization.

- Security Analyst

A security analyst is responsible for organizing and interpreting security data from SOC reports or audits. They also provide real-time risk management, vulnerability assessment, and security intelligence, which offer insights into the state of the organization’s preparedness.

- Forensic Investigator

In the event of an incident, the forensic investigator is responsible for analyzing the incident to collect data, evidence, and behavior analytics.

- Incident Responder

Incident responders are the first to be notified when security alerts happen. They are then responsible for performing an initial evaluation and threat assessment of the alert.

- Compliance Auditor

The compliance auditor is responsible for ensuring that all processes carried out by the team are done so in a way that complies with regulatory standards.

SOC Organizational Models

Not all SOCs are structured under the same organizational model. Security operations center processes and procedures vary based on many factors, including your unique security needs.

Organizational models of security operations centers include:

- Internal SOC

An internal SOC is an in-house team comprised of security and IT professionals who work within the organization. Internal team members can be spread throughout other departments. They can also comprise their own department dedicated to security.

- Internal Virtual SOC

An internal virtual SOC is comprised of part-time security professionals who work remotely. Team members are primarily responsible for reacting to security threats when they receive an alert.

- Co-Managed SOC

A co-managed SOC is a team of security professionals who work alongside a third-party cybersecurity service provider. This organizational model essentially combines a semi-dedicated in-house team with a third-party SOC service provider for a co-managed approach to cybersecurity.

- Command SOC

Command SOCs are responsible for overseeing and coordinating other SOCs within the organization. They are typically only found in organizations large enough to have multiple in-house SOCs.

- Fusion SOC

A fusion SOC is designed to oversee the efforts of the organization’s larger IT team. Their objective is to guide and assist the IT team on matters of security.

- Outsourced Virtual SOC

An outsourced virtual SOC is made up of team members that work remotely. Rather than working directly for the organization, though, an outsourced virtual SOC is a third-party service. Outsourced virtual SOCs provide security services to organizations that do not have an in-house security operations center team on-staff.

Take Advantage of the Benefits Offered by a SOC

Faced with ever-changing security threats, the security offered by a security operations center is one of the most beneficial avenues that organizations have available. Having a team of dedicated information security professionals monitoring your network, detecting security threats, and working to bolster your defenses can go a long way toward keeping your sensitive data secure.

If you would like to learn more about the benefits offered by a security operations center team and the options that are available for your organization, we invite you to contact us today.

HIPAA Compliant Cloud Storage Solutions: Maintain Healthcare Compliance

Hospitals, clinics, and other health organizations have had a bumpy road towards cloud adoption over the past few years. The implied security risks of using the public cloud or working with a third-party service provider considerably delayed cloud adoption in the healthcare industry.

Even today, when 84% of healthcare organizations use cloud services, the question of choosing the right HIPAA compliant cloud provider can be a headache.

All healthcare providers whose clients’ data is stored in the U.S. are subject to a set of regulations known as HIPAA.

Today, any organization that handles confidential patient data needs to abide by HIPAA storage requirements.

What is HIPAA Compliance?

HIPAA standards provide protection of health data. Any vendor working with a healthcare organization or business handling health files must abide by the HIPAA privacy rules. There are also many ancillary industries that must adhere to the guidelines if they have access to medical and patient data. This is where HIPAA compliant cloud storage plays a significant role.

HIPAA was enacted in 1996. Afterward, “the U.S. Department of Health and Human Services (“HHS”) issued the Privacy Rule to implement the requirement of the Health Insurance Portability and Accountability Act (HIPAA) of 1996.” The Privacy Rule addresses the use and disclosure of patients’ “protected health information,” while the related Security Rule covers “electronic protected health information.” Organizations subject to these rules, known as “HIPAA covered entities,” must comply with them.

Most healthcare institutions use some form of electronic devices to provide medical care. This means that information no longer resides on a paper chart, but on a computer or in the cloud. Unlike general businesses or most commercial entities, healthcare institutions are legally obliged to employ the most reliable data backup practices.

So, how does this affect their choice of a cloud provider?

When planning their move to cloud computing, health care institutions need to ensure their vendor meets specific security criteria.

These criteria translate into requirements and thresholds that a company must meet and maintain to become HIPAA-ready. These come down to a set of certifications, SOC auditing and reporting, encryption levels, and physical security features.

HIPAA cloud storage solutions should work to make becoming compliant simple and straightforward. This way, healthcare organizations have one less thing to worry about and can focus on improving their critical processes.

HIPAA Cloud Storage and Data Backup Requirements

A cloud service provider doing business with a company operating under the HIPAA-HITECH act rules is considered a business associate. As such, it must show that it meets cloud compliance standards and follows any relevant regulations. Although the vendor may not work with patients directly, it does receive, manage, and store Protected Health Information (PHI). This fact alone makes it responsible for protecting PHI according to HIPAA-HITECH act guidelines.

Being HIPAA compliant means implementing all of the rules and regulations that the Act requires. Any vendor offering services that are subject to the Act must provide documentation as proof of their conformity. This documentation needs to be sent not only to their clients but also to the Office for Civil Rights (OCR). The OCR is a sub-agency of the U.S. Department of Health and Human Services that enforces the HIPAA rules and promotes equal access to healthcare and human services programs.

Healthcare industry organizations looking to work with a HIPAA Compliant cloud storage provider should request proof of compliance to protect themselves. If the provider follows all standards, it should have no qualms about sharing the appropriate documentation with you.

HIPAA requirements for cloud hosting organizations are the same as the requirements for business associates. They fall into three distinct categories: administrative, physical, and technical safeguards.

- Administrative Safeguards: These types of safeguards are transparent policies that outline how the business will comply from an operational standpoint. The operations can include managing security risk assessments, appropriate procedures, disaster and emergency response, and managing passwords.

- Physical Safeguards: Physical safeguards are usually systems that are in place to protect customer data. They might include proper storage, data backup, and appropriate disposal of media at a data center. Important security precautions for facilities where hardware or software storage devices reside are also a part of this category.

- Technical Safeguards: This group of safeguards refers to technical features implemented to minimize data risk and maximize protection. Requiring unique login information, auto-logoff policies, and authentication for PHI access are just some of the technical safeguards that should be in place.
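As an illustration of one technical safeguard named above, an auto-logoff policy boils down to comparing a session’s last activity against an idle limit. The sketch below assumes a 15-minute limit purely for the example; HIPAA does not prescribe a specific timeout, and the function name is hypothetical.

```python
from datetime import datetime, timedelta

# Assumed policy value for illustration only; choose a limit that fits your
# own risk assessment.
IDLE_TIMEOUT = timedelta(minutes=15)


def session_expired(last_activity, now, timeout=IDLE_TIMEOUT):
    """Return True when the session has been idle for at least `timeout`."""
    return now - last_activity >= timeout


last_seen = datetime(2019, 1, 1, 9, 0)

# Ten minutes idle: the session stays open.
print(session_expired(last_seen, datetime(2019, 1, 1, 9, 10)))

# Twenty minutes idle: the user should be logged off.
print(session_expired(last_seen, datetime(2019, 1, 1, 9, 20)))
```

Passing `now` in as a parameter, rather than reading the clock inside the function, keeps the check easy to test.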

What Makes a HIPAA Certified Cloud Provider Compliant?

Providing HIPAA compliant file storage hardware or software is not as simple as flipping a switch. It takes a tremendous amount of time and effort for a company to become compliant.

The critical element to look for in a HIPAA certified cloud storage provider is its willingness to make a Business Associate Agreement. Known as a BAA, this agreement is completed between two parties planning to transmit, process, or receive PHI. Its primary purpose is to protect both parties from any legal repercussions resulting from the misuse of protected health information.

A BAA must not add, subtract, or contradict the overall standards of HIPAA. However, if both parties agree, supplementing specific terminology is acceptable. There are also some core terms that make up the groundwork for a compliant business associate agreement and must remain for the contract to be considered legally binding.

The level of encryption enabled by the cloud provider needs proper attention. The company should be encrypting files not only in transit but also at rest. Advanced Encryption Standard (AES) is the minimum level of encryption that it should use for file storage and sharing. AES is the successor to the Data Encryption Standard (DES). The National Institute of Standards and Technology (NIST) began the selection process for it in 1997 and published AES as a standard in 2001. It is an advanced encryption algorithm that offers improved defense against different security incidents.

Selecting a Compliant Cloud Storage Vendor

When choosing a HIPAA compliant provider, look for HIPAA web hosting that meets the measures outlined in the previous section. Make sure you ask them about their data storage security practices to determine how secure your PHI will be.

Does the potential vendor offer a service level agreement?

An SLA contract indicates guaranteed response times to threats, typically within a twenty-four-hour window. As a company that transmits PHI, you need to know how quickly the provider can notify you in the event of an incident. The faster you receive a breach notification, the more efficiently you can respond.

Don’t forget that the storage of electronic cloud-based medical records should be in a secure data center.

What are the security measures in place in case of an incident? How is access to the facility determined? Ask for a detailed outline of how they implement and enforce physical security. Check how they respond in the event of a data breach. Make sure you get all the relevant details before you put your data at risk.

Your selected vendor should also have a Disaster Recovery and Continuity Plan in place.

A continuity plan will anticipate loss due to natural disasters, data breaches, and other unforeseen incidents. It will also provide the necessary processes and procedures if or when such events occur. Concerning data loss prevention best practices, it is also essential to determine how often the proposed method undergoes rigorous testing.

Healthcare Medical Records Security – How can I be Sure?

Cloud providers that take compliance seriously will ensure their certifications are current. There are several ways to check if they follow standards and relevant regulations.

One way is to audit your potential provider using an independent party. Auditing will bring any possible risks to your attention and reveal the vendor’s security tactics. Providers of cloud storage for medical records must regularly audit their systems and environments for security threats to remain compliant. The term ‘regularly’ is not defined by the act, so it is essential to request documentation and information on at least a quarterly basis. You should also ensure you have constant access to reports and documentation detailing the most recent audit.

Another way to determine whether the company is compliant is to assess the qualifications of its employees. All staff need to be educated on the most current standards and familiarized with specific safeguards. Only with these in place can organizations achieve compliance.

Ask your potential vendor tough questions. Anyone with access to PHI needs appropriate training on secure data transmission methods. Training needs to include the ability to securely encrypt patient information no matter where it is stored.

A HIPAA compliant company will not ask you for a backdoor to access your data or permission to bypass your access management protocols. Such vendors recognize the risk of requiring additional authentication or access points. Compromising access to authentication protocols and password requirements is a serious violation and should never happen.

Cloud Backup & Storage Frequently Asked Questions

Ask potential cloud vendors which method they use to evaluate your HIPAA compliance.

Is a HIPAA policy template available for use? Does the provider offer guidance and feedback on compliance? How are they ensuring that you are up to date and aware of security rules and regulations? Do they offer HIPAA compliant email?

Does the company have full-time employees on-premise?

Having a presence on site and available around the clock is a mechanism to ensure advanced security. An available representative makes PHI security more reliable and guarantees a quick response if needed. It also gives you peace of mind knowing that the company in charge of your data protection is thoroughly versed in the required standards.

The right provider should also be quick to adapt to the changes and inform you of anything that directly affects your PHI or your access to it.

Data deletion is a crucial component in choosing the appropriate HIPAA business associate. How long is information kept before being purged? How is data leakage prevented when servers are taken out of commission or erased? Is the data provided to you before deletion? The act offers no guidelines concerning the required length of time, so it is an agreement you and your provider must reach together.

In addition to your knowledge, determine how well your potential provider is versed in HIPAA regulations. Cloud companies often fail to follow the latest regulation changes, and you have to look for the one with consistent dedication.

Shop around. Do not be content with the first quote.

Many companies tout their HIPAA security, only for customers to discover that they fall short of the measuring stick. Do your research, ask questions, and determine which vendor best suits your needs.

HIPAA-Compliant Cloud Storage is Critical

When it comes to protecting medical records in the cloud, phoenixNAP will support your efforts with the highest service quality, security, and dependability.

We provide a selection of data centers which offer state-of-the-art protection for your medical files. With scalable cloud solutions, a 100% uptime guarantee, and unmatched disaster recovery, you can rest assured that your infrastructure is compliant.

HIPAA certifications can be confusing, complicated, and stressful.

You need to be able to trust your cloud provider to keep your files safe. PhoenixNAP Global IT Services will allow you the freedom to focus your attention on other areas of your business and ensure the protection of your entities and business associates.

Cloud Security Tips to Reduce Security Risks, Threats, & Vulnerabilities

Do you assume that your data in the cloud is backed up and safe from threats? Not so fast.

With a record number of cybersecurity attacks taking place in 2018, it is clear that all data is under threat.

Everyone always thinks “It cannot happen to me.” The reality is, no network is 100% safe from hackers.

According to Kaspersky Lab, ransomware rose by over 250% in 2018 and continues to trend in a very frightening direction. Following the advice presented here is the ultimate insurance policy against the crippling effects of a significant data loss in the cloud.

How do you start securing your data in the cloud? What are the best practices to keep your data protected in the cloud? How safe is cloud computing?

To help you jump-start your security strategy, we invited experts to share their advice on Cloud Security Risks and Threats.

Key Takeaways From Our Experts on Cloud Protection & Security Threats

- Accept that it may only be a matter of time before someone breaches your defenses, and plan for it.

- Do not assume your data in the cloud is backed up.

- Enable two-factor authentication and IP-location to access cloud applications.

- Leverage encryption. Encrypt data at rest.

- The human element is among the biggest threats to your security.

- Implement a robust change control process, with a weekly patch management cycle.

- Maintain offline copies of your data to use in the event your cloud data is destroyed or held ransom.

- Contract with a 24×7 security monitoring service.

- Have a security incident response plan.

- Utilize advanced firewall technology, including WAF (Web Application Firewalls).

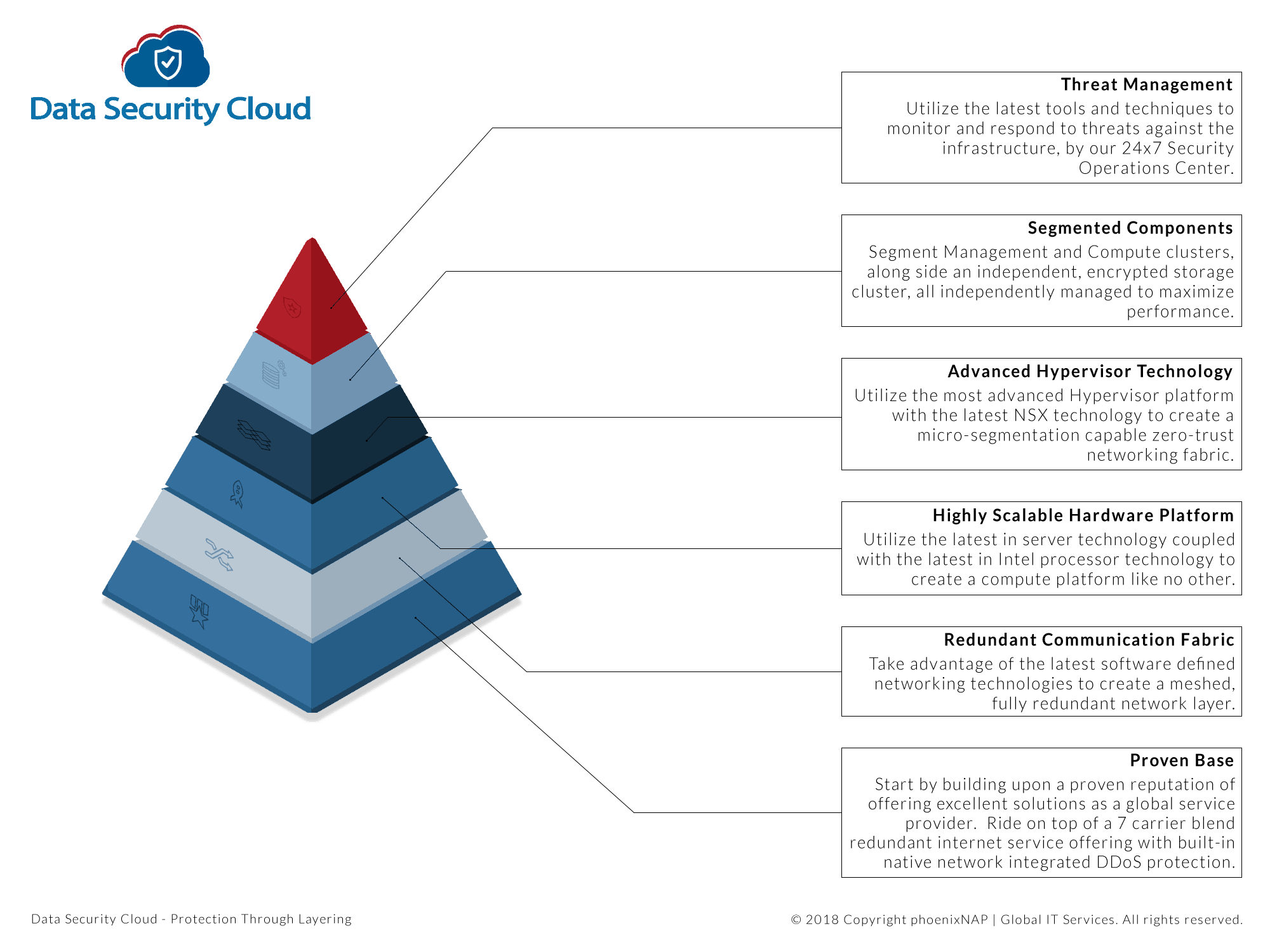

- Take advantage of application services, layering, and micro-segmentation.

1. Maintain Availability In The Cloud

When most people think about the topic of cloud-based security, they tend to think about Networking, Firewalls, Endpoint security, etc. Amazon defines cloud security as:

Security in the cloud is much like security in your on-premises data centers – only without the costs of maintaining facilities and hardware. In the cloud, you do not have to manage physical servers or storage devices. Instead, you use software-based security tools to monitor and protect the flow of information into and out of your cloud resources.

But one often overlooked risk is maintaining availability. By that I mean more than just geo-redundancy or hardware redundancy; I am referring to making sure that your data and applications are covered. Cloud is not some magical place where all your worries disappear; a cloud is a place where all your fears are often easier and cheaper to multiply. Having a robust data protection strategy is key. Veeam has often been preaching about the “3-2-1 Rule” that was coined by Peter Krogh.

The rule states that you should have three copies of your data, storing them on two different media, and keeping one offsite. The one offsite is usually in the “cloud,” but what about when you are already in the cloud?
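The 3-2-1 rule described above is simple enough to check mechanically. The sketch below is one possible way to express it; the `BackupCopy` fields and the sample plans are hypothetical and only serve to show why keeping every copy in the same cloud fails the rule.

```python
from dataclasses import dataclass


@dataclass
class BackupCopy:
    location: str   # where the copy lives, e.g. "cloud-a" (illustrative name)
    media: str      # storage type, e.g. "block-storage", "object-storage"
    offsite: bool   # True if stored outside the primary site or cloud


def satisfies_3_2_1(copies):
    """Check for three copies, two media types, and one offsite copy."""
    enough_copies = len(copies) >= 3
    enough_media = len({c.media for c in copies}) >= 2
    has_offsite = any(c.offsite for c in copies)
    return enough_copies and enough_media and has_offsite


# Everything in the same cloud: three copies, two media, but nothing offsite.
same_cloud_plan = [
    BackupCopy("cloud-a", "block-storage", False),
    BackupCopy("cloud-a", "object-storage", False),
    BackupCopy("cloud-a", "object-storage", False),
]

# One copy is sent to a different provider, so the offsite condition holds.
offsite_plan = [
    BackupCopy("cloud-a", "block-storage", False),
    BackupCopy("cloud-a", "object-storage", False),
    BackupCopy("cloud-b", "object-storage", True),
]

print(satisfies_3_2_1(same_cloud_plan))
print(satisfies_3_2_1(offsite_plan))
```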

This is where I see most cloud issues arise: when people are already in the cloud, they tend to store the data in the same cloud. This is why it is important to have a detailed strategy when moving to the cloud. You can leverage things like Veeam agents to protect cloud workloads and Cloud Connect to send the backups offsite, maintaining availability outside of the same datacenter or cloud. Don’t assume that it is the provider’s job to protect your data, because it is not.

2. Cloud Migration is Outpacing The Evolution of Security Controls

According to a new survey conducted by ESG, 75% of organizations said that at least 20% of their sensitive data stored in public clouds is insufficiently secured. Also, 81% of those surveyed believe that on-premise data security is more mature than public cloud data security.

Yet, businesses are migrating to the cloud faster than ever to maximize organizational benefits: an estimated 83% of business workloads will be in the cloud by 2020, according to LogicMonitor’s Cloud Vision 2020 report. What we have is an increasingly urgent situation in which organizations are migrating their sensitive data to the cloud for productivity purposes at a faster rate than security controls are evolving to protect that data.

Companies must look at solutions that control access to data within cloud shares based on the level of permission that user has, but they must also have the means to be alerted when that data is being accessed in unusual or suspicious ways, even by what appears to be a trusted user.

Remember that many hacks and insider leaks come from bad actors with stolen, legitimate credentials that allow them to move freely around in a cloud share, in search of valuable data to steal. Deception documents, called decoys, can also be an excellent tool to detect this. Decoys can alert security teams in the early stage of a cloud security breach to unusual behaviors, and can even fool a would-be cyber thief into thinking they have stolen something of value when in reality, it’s a highly convincing fake document. Then, there is the question of having control over documents even when they have been lifted out of the cloud share.

This is where many security solutions start to break down. Once a file has been downloaded from a cloud repository, how can you track where it travels and who looks at it? There must be more investment in technologies such as geofencing and telemetry to solve this.
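To make the decoy idea mentioned earlier in this section concrete, here is a minimal sketch. It assumes access events are available as simple records with a user and a file path; the decoy file names and the log format are invented for illustration and do not reflect any specific product.

```python
# Hypothetical decoy documents planted in a cloud share. No legitimate
# workflow should ever open them, so any access is suspicious.
DECOYS = {"finance/payroll_2019_final.xlsx", "hr/admin_passwords.docx"}


def decoy_hits(access_log):
    """Return every access event that touched a decoy document."""
    return [event for event in access_log if event["path"] in DECOYS]


# Each event is a plain dict here; a real system would read audit logs.
access_log = [
    {"user": "alice", "path": "projects/roadmap.pptx"},
    {"user": "bob", "path": "hr/admin_passwords.docx"},
    {"user": "alice", "path": "finance/q3_report.xlsx"},
]

alerts = decoy_hits(access_log)
for event in alerts:
    print(f"ALERT: {event['user']} opened decoy {event['path']}")
```

Because the check does not depend on who the user is, it also fires when the credentials in use are legitimate but stolen.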

3. Minimize Cloud Computing Threats and Vulnerabilities With a Security Plan

The hybrid cloud continues to grow in popularity with the enterprise – mainly as the speed of deployment, scalability, and cost savings become more attractive to business. We continue to see infrastructure rapidly evolving into the cloud, which means security must develop at a similar pace. It is essential for the enterprise to work with a Cloud Service Provider who has a reliable approach to security in the cloud.

This means the partnership with your Cloud Provider is becoming increasingly important as you work together to understand and implement a security plan to keep your data secure.

Security controls like multi-factor authentication and data encryption, along with the level of compliance you require, are all areas to focus on while building your security plan.

4. Never Stop Learning About Your Greatest Vulnerabilities

Isaac Kohen, CEO of Teramind

More and more companies are falling victim to cloud data exposure, and it has to do with cloud misconfiguration and employee negligence.

1. The greatest threats to data security are your employees. Whether negligent or malicious, employees are one of the top reasons for malware infections and data loss. Malware attacks and phishing emails are common in the news because they are ‘easy’ ways for hackers to access data. Through social engineering, criminals can ‘trick’ employees into giving up passwords and credentials to critical business and enterprise data systems. Ways to prevent this: an effective employee training program and employee monitoring that actively probes the system.

2. Never stop learning. In an industry that is continuously changing and adapting, it is important to be updated on the latest trends and vulnerabilities. For example with the Internet of Things (IoT), we are only starting to see the ‘tip of the iceberg’ when it comes to protecting data over increased wi-fi connections and online data storage services. There’s more to develop with this story, and it will have a direct impact on small businesses in the future.

3. Research and understand how the storage works, then educate. We’ve heard the stories – when data is exposed through the cloud, many times it’s due to misconfiguration of the cloud settings. Employees need to understand the security nature of the application and that the settings can be easily tampered with and switched ‘on’ exposing data externally. Educate security awareness through training programs.

4. Limit your access points. A common cause of cloud exposure is an employee with access mistakenly enabling global permissions, which exposes the data to an open connection. To mitigate this, understand who and what has access to the data cloud – all access points – and monitor those connections thoroughly.

5. Monitor the systems, progressively and thoroughly. For long-term protection of data on the cloud, use a user-analytics and monitoring platform to detect breaches quicker. Monitoring and user analytics streamline data and create a standard ‘profile’ of the user – employee and computer. These analytics are integrated with, and follow, your most crucial data deposits, which you as the administrator indicate in the detection software. When specific cloud data is tampered with, moved or breached, the system will “ping” an administrator immediately, indicating a change in character.
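The user ‘profile’ idea in the last point can be illustrated with a very small baseline check. This is a deliberately simplified sketch, not how any particular monitoring platform works: it assumes daily download counts per user are available and flags a day that sits far above that user’s own history. The threshold of three standard deviations is an arbitrary choice for the example.

```python
from statistics import mean, stdev


def is_unusual(history, today, threshold=3.0):
    """Flag `today` if it is far above the user's historical baseline.

    `history` needs at least two values so a standard deviation exists.
    """
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # No variation in the history: anything above the baseline stands out.
        return today > baseline
    return (today - baseline) / spread > threshold


# Hypothetical number of files one employee downloaded on each past day.
downloads_per_day = [12, 15, 11, 14, 13, 12, 16]

print(is_unusual(downloads_per_day, 14))    # an ordinary day
print(is_unusual(downloads_per_day, 240))   # a sudden bulk download
```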

5. Consider Hybrid Solutions

There are several vital things to understand about security in the cloud:

1. Passwords are power – 80% of all password breaches could have been prevented by multifactor authentication. By verifying your identity via a text to your phone or an email to your account, you can be alerted when someone is trying to access your details.

One of the biggest culprits at the moment is weakened credentials. That means passwords, passkeys, and passphrases are stolen through phishing scams, keylogging, and brute-force attacks.

Passphrases are the new passwords. Random, easy-to-remember passphrases are much better than passwords, as they tend to be longer and more complicated.

MyDonkeysEatCheese47 is a complicated passphrase and, unless you’re a donkey owner or a cheese-maker, unrelated to you. Remember to make use of upper and lowercase letters as well as the full range of punctuation.

2. Keep in touch with your hosting provider. Choose the right hosting provider – a reputable company with high-security standards in place. Communicate with them regularly as frequent interaction allows you to keep abreast of any changes or developing issues.

3. Consider a hybrid solution. Hybrid solutions allow for secure, static systems to store critical data in-house while at the same time opening up lower priority data to the greater versatility of the cloud.
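The passphrase advice in the first point above can be sketched with Python’s standard `secrets` module, which is designed for security-sensitive randomness. The word list here is a tiny placeholder chosen for the example; a real generator would draw from a list of several thousand words, and the helper names are hypothetical.

```python
import math
import secrets

# Tiny illustrative word list; far too small for real use.
WORDS = ["donkey", "cheese", "river", "candle", "orbit", "maple", "tunnel", "violin"]


def make_passphrase(word_count=4, words=WORDS):
    """Join randomly chosen capitalized words and append a two-digit number."""
    chosen = [secrets.choice(words).capitalize() for _ in range(word_count)]
    number = secrets.randbelow(100)
    return "".join(chosen) + f"{number:02d}"


def entropy_bits(word_count, list_size):
    """Estimate entropy from the random word picks alone (number ignored)."""
    return word_count * math.log2(list_size)


print(make_passphrase())

# Entropy grows with both the number of words and the size of the list.
print(entropy_bits(4, len(WORDS)))
print(round(entropy_bits(4, 7776), 1))
```

The entropy estimate shows why the size of the word list matters: the same four-word phrase is far harder to guess when each word comes from thousands of candidates rather than eight.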

6. Learn How Cloud Security Systems Work

Businesses need to evaluate the security risks and benefits of cloud computing. That means educating themselves on what it means to move into the cloud before taking the big leap from running systems in their own datacenter.

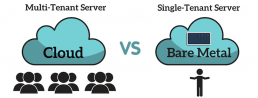

All too often I have seen a business migrate to the cloud without a plan or any knowledge about what it means to them and the security of their systems. They need to recognize that their software will be “living” on shared systems with other customers, so if there is a breach of another customer’s platform, it may be possible for the attacker to compromise their system as well.

Likewise, cloud customers need to understand where their data will be stored: whether it will stay only in the US, or whether the provider replicates it to systems on other continents. This may cause a real issue if the information is sensitive, such as PII or information protected under HIPAA or another regulatory statute. Lastly, the cloud customer needs to pay close attention to the Service Level Agreements (SLAs) that the cloud provider adheres to and ensure that they mirror their own SLAs.

Moving to the cloud is a great way to free up computing resources and ensure uptime, but I always advise my clients to make a move in small steps so that they have time to gain an appreciation for what it means to be “in the cloud.”

7. Do Your Due Diligence In Securing the Cloud

Understand the type of data that you are putting into the cloud and the mandated security requirements around that data.

Once a business has an idea of the type of data they are looking to store in the cloud, they should have a firm understanding of the level of due diligence that is required when assessing different cloud providers. For example, if you are choosing a cloud service provider to host your Protected Health Information (PHI), you should require an assessment of security standards and HIPAA compliance before moving any data into the cloud.

Some good questions to ask when evaluating whether a cloud service provider is a fit for an organization concerned with securing that data include: Do you perform regular SOC audits and assessments? How do you protect against malicious activity? Do you conduct background checks on all employees? What types of systems do you have in place for employee monitoring, access determination, and audit trails?

8. Set up Access Controls and Security Permissions

While the cloud is a growing force in computing for its flexibility for scaling to meet the needs of a business and to increase collaboration across locations, it also raises security concerns with its potential for exposing vulnerabilities relatively out of your control.

For example, BYOD can be a challenge to secure if users are not regularly applying security patches and updates. The number one tip I would give is to make the best use of available access controls.

Businesses need to use access controls to limit security permissions to only the actions related to each employee’s job function. By limiting access, businesses ensure critical files are available only to the staff who need them, reducing the chances of exposure to the wrong parties. This control also makes it easier to revoke access rights immediately upon termination of employment, safeguarding sensitive content no matter where the former employee attempts remote access from.
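To make the idea concrete, the following is a minimal sketch of role-based access control with immediate revocation. The role names, permission strings, and the `AccessControl` class are hypothetical and exist only for this example; in practice you would configure the equivalent in your cloud provider's identity and access management service.

```python
# Hypothetical roles mapped to the only actions each job function needs.
ROLE_PERMISSIONS = {
    "accountant": {"invoices:read", "invoices:write"},
    "support": {"tickets:read", "tickets:write", "invoices:read"},
}


class AccessControl:
    def __init__(self, role_permissions):
        self.role_permissions = role_permissions
        self.user_roles = {}  # user -> role

    def assign(self, user, role):
        if role not in self.role_permissions:
            raise ValueError(f"unknown role: {role}")
        self.user_roles[user] = role

    def revoke(self, user):
        """Remove all access at once, e.g. on termination of employment."""
        self.user_roles.pop(user, None)

    def is_allowed(self, user, permission):
        role = self.user_roles.get(user)
        return role is not None and permission in self.role_permissions[role]


acl = AccessControl(ROLE_PERMISSIONS)
acl.assign("dana", "support")

print(acl.is_allowed("dana", "invoices:read"))   # within the support role
print(acl.is_allowed("dana", "invoices:write"))  # outside the support role
acl.revoke("dana")
print(acl.is_allowed("dana", "invoices:read"))   # denied after revocation
```

Because permissions hang off the role rather than the individual, revoking a single assignment is enough to cut off everything the employee could reach.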

9. Understand the Pedigree and Processes of the Supplier or Vendor

The use of cloud technologies has allowed businesses of all sizes to drive performance improvements and gain efficiency with more remote working, higher availability and more flexibility.

However, with an increasing number of disparate systems deployed and so many cloud suppliers and software to choose from, retaining control over data security can become challenging. When looking to implement a cloud service, it is essential to thoroughly understand the pedigree and processes of the supplier/vendor who will provide the service. Industry standard security certifications are a great place to start. Suppliers who have an ISO 27001 certification have proven that they have met international information security management standards and should be held in higher regard than those without.

Gaining a full understanding of where your data will reside geographically, who will have access to it, and whether it will be encrypted is key to being able to protect it. It is also important to know what the supplier’s processes are in the event of a data breach, data loss, or downtime. Acceptable downtime should be set out in contracted Service Level Agreements (SLAs), which should be financially backed to provide reassurance.

Organizations looking to utilize cloud platforms should be aware of several cloud security questions: Who will have access to the data? Where is the data stored? Is the data encrypted? For the most part, cloud platforms can answer these questions and have high levels of security. Organizations using the cloud need to be aware of the data protection laws and regulations that affect their data, and to gain an accurate understanding of their contractual agreements with cloud providers. How is data protected? Many regulations and industry standards give guidance on the best way to store sensitive data.

Keeping unsecured or unencrypted copies of data can put it at higher risk. Gaining knowledge of security levels of cloud services is vital.

What are the retention policies, and do I have a backup? Cloud platforms can have widely varied uses, and this can cause (or prevent) issues. Data stored in a cloud platform could be vulnerable to cloud security risks such as ransomware or corruption, so ensuring that multiple copies of the data are retained or backed up can guard against this. Confirming that these processes are in place improves the security of an organization’s cloud platforms and gives an understanding of where any risk could come from.
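Keeping multiple copies only helps if the copies are intact. One simple way to check, sketched below with Python's standard `hashlib`, is to compare a checksum of each backup against the original. The copy names and in-memory byte strings are assumptions for illustration; real backups would be read from files or object storage.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Return the SHA-256 checksum of a blob as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_copies(original: bytes, copies: dict) -> dict:
    """Map each copy name to True if its checksum matches the original."""
    expected = sha256_of(original)
    return {name: sha256_of(blob) == expected for name, blob in copies.items()}


original = b"customer ledger, 2019-Q1"
copies = {
    "offsite-backup": b"customer ledger, 2019-Q1",
    "second-region": b"customer ledger, 2019-Q1 (corrupted)",
}

for name, ok in verify_copies(original, copies).items():
    print(f"{name}: {'intact' if ok else 'MISMATCH'}")
```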

10. Use Strong Passwords and Multi-factor Authentication

Ensure that you require strong passwords for all cloud users, and preferably use multi-factor authentication.

According to the 2017 Verizon Data Breach Investigations Report, 81% of hacking-related breaches leveraged stolen and/or weak passwords. One of the most significant benefits of the cloud is the ability to access company data from anywhere in the world on any device. On the flip side, from a security standpoint, anyone (aka “bad guys”) with a username and password can potentially access the business’s data. Forcing users to create strong passwords makes it vastly more difficult for hackers to succeed with a brute-force attack (repeatedly guessing the password from random character combinations).

In addition to secure passwords, many cloud services today can utilize an employee’s cell phone as the secondary, physical security authentication piece in a multi-factor strategy, making this accessible and affordable for an organization to implement. Users would not only need to know the password but would need physical access to their cell phone to access their account.

Lastly, consider implementing a feature that locks a user’s account after a predetermined number of unsuccessful login attempts.
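An account lockout of this kind could look roughly like the sketch below. The `LoginGuard` class and the threshold of three attempts are assumptions chosen for the example; most cloud services expose this as a configuration setting rather than code you write yourself.

```python
class LoginGuard:
    """Locks an account after too many consecutive failed logins."""

    def __init__(self, max_failures=5):
        self.max_failures = max_failures
        self.failures = {}  # user -> consecutive failed attempts

    def is_locked(self, user):
        return self.failures.get(user, 0) >= self.max_failures

    def record_attempt(self, user, success):
        """Return True if the login is accepted, False if rejected or locked."""
        if self.is_locked(user):
            return False  # even a correct password is refused while locked
        if success:
            self.failures.pop(user, None)  # a good login resets the counter
            return True
        self.failures[user] = self.failures.get(user, 0) + 1
        return False

    def unlock(self, user):
        """Administrator action to restore access."""
        self.failures.pop(user, None)


guard = LoginGuard(max_failures=3)
for _ in range(3):
    guard.record_attempt("sam", success=False)

print(guard.is_locked("sam"))                     # locked after three failures
print(guard.record_attempt("sam", success=True))  # refused while locked
```

Refusing correct passwords while the account is locked is the point of the design: it stops a brute-force attack from succeeding on a later guess.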

11. Enable IP-location Lockdown

Companies should enable two-factor authentication and IP-location lockdown for access to the cloud applications they use.

With 2FA, you add another challenge, such as a code sent by text message, to the usual email/password combination. With IP lockdown, you can ring-fence access to your office IP or the IPs of remote workers. If the platform does not support this, consider asking your provider to enable it.
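The ring-fencing logic itself is simple. The sketch below uses Python's standard `ipaddress` module to check a connecting address against an allowlist. The networks shown are reserved documentation ranges standing in for an office network and a single remote worker; substitute your own.

```python
import ipaddress

# Placeholder ranges (reserved for documentation), standing in for real networks.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),    # assumed office network
    ipaddress.ip_network("198.51.100.17/32"),  # assumed remote worker
]


def ip_is_allowed(address, networks=ALLOWED_NETWORKS):
    """Return True if the address falls inside any allowed network."""
    ip = ipaddress.ip_address(address)
    return any(ip in net for net in networks)


for candidate in ["203.0.113.42", "198.51.100.17", "192.0.2.1"]:
    status = "allow" if ip_is_allowed(candidate) else "deny"
    print(f"{candidate}: {status}")
```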

When it comes to the cloud platform itself, make sure it offers an option for encrypting data at rest. At some point, this will become as ubiquitous as HTTPS (SSL/TLS). Should the unthinkable happen and data ends up in the wrong hands, for example when a device is stolen or forgotten on a train, data-at-rest encryption is the last line of defense to prevent anyone from accessing your data without the right encryption keys. Even if they manage to steal it, they cannot use it. This, for example, would have mitigated the recent Equifax breach.

12. Cloud Storage Security Solutions With VPNs

Use VPNs (virtual private networks) whenever you connect to the cloud. VPNs are often used to semi-anonymize web traffic, usually by viewers who are geoblocked from accessing streaming services such as Netflix USA or BBC iPlayer. They also provide a crucial layer of security for any device connecting to your cloud. Without a VPN, any potential intruder with a packet sniffer could determine which members were accessing your cloud account and potentially gain access to their login credentials.

Encrypt data at rest. If for any reason a user account is compromised on your public, private, or hybrid cloud, the difference between data in plaintext vs. encrypted format can be measured in hundreds of thousands of dollars: specifically $229,000, the average cost of a cyber attack reported by the respondents of a survey conducted by the insurance company Hiscox. As recent events have shown, the process of encrypting and decrypting this data will prove far more painless than enduring its alternative.

Use two-factor authentication and single sign-on for all cloud-based accounts. Google, Facebook, and PayPal all utilize two-factor authentication, which requires the user to input a unique software-generated code into a form before signing into their account. Whether or not your business aspires to their stature, it can and should emulate this core component of their security strategy. Single sign-on simplifies access management, so one pair of user credentials signs the employee into all accounts. This way, system administrators only have one account to delete rather than several that can be forgotten and later re-accessed by the former employee.
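For the curious, a software-generated code of this kind is commonly a time-based one-time password (TOTP, standardized in RFC 6238 on top of the HOTP algorithm in RFC 4226). The sketch below shows how such a code can be derived using only Python's standard library. The shared secret is the RFC's published test value, not something to use in production, and whether any particular service uses exactly this scheme is an assumption.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low nibble picks the offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over 30-second windows."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, at=None, step: int = 30) -> bool:
    """Compare in constant time to avoid leaking how many digits matched."""
    return hmac.compare_digest(totp(secret, at, step), submitted)


SECRET = b"12345678901234567890"  # test secret from the RFCs, for illustration only

code = totp(SECRET)
print("current code:", code)
print("accepted:", verify(SECRET, code))
```

The phone and the server share the secret once at enrollment; after that, both can compute the same short-lived code without any message being sent.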

13. Beware of the Human Element Risk

To paraphrase Shakespeare, the fault is not in the cloud; the responsibility is in us.

Storing sensitive data in the cloud is a good option for data security on many levels. However, regardless of how secure a technology may be, the human element will always present a potential security danger to be exploited by cybercriminals. Many past cloud security breaches have proven to be due not to security lapses in the cloud technology, but rather to the actions of individual users of the cloud.

They have unknowingly provided their usernames and passwords to cybercriminals who, through spear phishing emails, phone calls, or text messages, persuade people to give up the critical information necessary to access the cloud account.

The best way to avoid this problem, along with better education of employees to recognize and prevent spear phishing, is to use dual-factor authentication, such as having a one-time code sent to the employee’s cell phone whenever someone attempts to access the cloud account.

14. Ensure Data Retrieval From A Cloud Vendor

1. Two-factor authentication protects against account fraud. Many users fall victim to email phishing attempts where bad actors dupe the victim into entering their login information on a fake website. The bad actor can then log in to the real site as the victim and do all sorts of damage, depending on the site application and the user’s access. 2FA ensures a second code must be entered when logging into the application, usually a code sent to the user’s phone.

2. Ensuring you own your data and that you can retrieve it in the event you no longer want to do business with the cloud vendor is imperative. Most legitimate cloud vendors should specify in their terms that the customer owns their data. Next, you need to confirm you can extract or export the data in some usable format, or that the cloud vendor will provide it to you on request.

15. Real Time and Continuous Monitoring

1. Create Real-Time Security Observability & Continuous Systems Monitoring

While monitoring is essential in any data environment, it’s critical to emphasize that changes in modern cloud environments, especially SaaS environments, tend to occur more frequently, and their impacts are felt immediately.

The results can be dramatic because of the nature of elastic infrastructure. At any time, someone’s accidental or malicious actions could severely impact the security of your development, production, or test systems.

Running a modern infrastructure without real-time security observability and continuous monitoring is like flying blind. You have no insight into what’s happening in your environment, and no way to start immediate mitigation when an issue arises. You need to monitor application and host-based access to understand the state of your application over time.

- Monitoring systems for manual user actions. This is especially important in the current DevOps world where engineers are likely to have access to production. It’s possible they are managing systems using manual tasks, so use this as an opportunity to identify processes that are suited for automation.

- Tracking application performance over time to help detect anomalies. Understanding “who did what and when” is fundamental to investigating changes that are occurring in your environment.
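A bare-bones version of a “who did what and when” trail might look like the sketch below. The `AuditLog` class, its field names, and the convention that production targets start with `prod` are assumptions for this example; a real deployment would rely on the audit logging built into the cloud platform.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    who: str
    action: str
    target: str
    when: datetime
    manual: bool  # True when a person, not automation, performed the action


class AuditLog:
    def __init__(self):
        self.events = []

    def record(self, who, action, target, manual=False, when=None):
        """Append an event, timestamping it in UTC unless a time is supplied."""
        event = AuditEvent(who, action, target, when or datetime.now(timezone.utc), manual)
        self.events.append(event)
        return event

    def manual_actions(self, target_prefix="prod"):
        """List manual changes to matching systems: candidates for automation."""
        return [e for e in self.events if e.manual and e.target.startswith(target_prefix)]


log = AuditLog()
log.record("deploy-bot", "deploy", "prod-web-1")
log.record("jordan", "restart-service", "prod-web-1", manual=True)
log.record("jordan", "edit-config", "test-db-2", manual=True)

for e in log.manual_actions():
    print(f"{e.when.isoformat()} {e.who} {e.action} on {e.target}")
```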

2. Set & Continuously Monitor Configuration Settings

Security configurations in cloud environments such as Amazon Direct Connect can be complicated, and it is easy to inadvertently leave access to your systems and data open to the world, as has been proven by all the recent stories about S3 leaks.
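One way to catch this kind of mistake is to compare the live configuration against a known-good baseline on a schedule. The sketch below is a minimal illustration of that idea; the setting names in `BASELINE` are hypothetical and do not correspond to any specific provider's configuration keys.

```python
# Hypothetical baseline of security settings every storage resource should have.
BASELINE = {
    "public_access": False,
    "encryption_at_rest": True,
    "access_logging": True,
}


def find_drift(current, baseline=BASELINE):
    """Return {setting: (expected, actual)} for every deviation from the baseline."""
    return {
        key: (expected, current.get(key))
        for key, expected in baseline.items()
        if current.get(key) != expected
    }


current_settings = {
    "public_access": True,  # someone opened the resource to the world
    "encryption_at_rest": True,
    "access_logging": True,
}

for setting, (expected, actual) in find_drift(current_settings).items():
    print(f"DRIFT: {setting} should be {expected}, found {actual}")
```

A missing setting is reported as drift too, since `current.get` returns `None` for it, which errs on the side of flagging rather than assuming a safe default.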